Annotating for Domain-Specific Fine-Tuning: Tailoring Models to Your Use Case

Fine-tuning a model for a specific subject area starts with one crucial step: annotation. During domain-specific fine-tuning, the quality and accuracy of your annotations determine how well the model adapts to your unique needs. Whether you work in the legal, medical, retail, or any other specialized industry, raw data alone is insufficient.

For fine-tuning to be truly effective, your annotations must reflect your domain's nuances as accurately as possible. This often means going beyond general categories and creating labels, ranges, or notes that capture fine details. When done carefully, domain-focused annotation turns a general-purpose model into a highly specialized assistant that understands your tasks and delivers results tailored to your workflows.

Key Takeaways

- Domain-specific fine-tuning adapts pre-trained models to specific tasks.

- Quality annotation is crucial for effective fine-tuning.

- Fine-tuning a pre-trained model requires far less domain-specific data than training a model from scratch.

- Expert annotation helps ensure accurate and relevant data labeling.

- Tailored AI solutions can significantly improve business outcomes.

Definition and Importance

Annotation for domain-specific fine-tuning means adding structured labels, tags, or notes to data in a way that teaches the model to perform specialized tasks or use domain-specific language. It transforms expert knowledge into a form that a machine-learning model can understand and learn from. Unlike general annotations, which often focus on broad categories, domain-specific annotations are tied to a particular industry's or application's needs, standards, and expectations.

This process is essential because it directly affects how well the model works in your actual use case. Without domain-specific annotations, even the most powerful models can miss key details, misunderstand context, or produce generic, untargeted results. Good annotations help models capture the distinctions that matter most, making them more accurate and reliable in practical settings.

Differences from General Fine-Tuning

The main difference between domain-specific fine-tuning and general fine-tuning is focus and level of detail. General fine-tuning typically trains a model on a broad set of examples to modestly improve its overall performance or to adapt it to a broad category of tasks. The goal is to improve the model across many situations without over-specializing it. In contrast, domain-specific fine-tuning is much more targeted: it uses carefully selected, annotated examples from a particular industry or application to improve the model's understanding of specific concepts, language, and workflows.

Because of this, annotation strategies for domain-specific fine-tuning are typically more detailed and customized. Instead of relying on generic labels such as "positive" or "negative", you can label complex medical conditions, legal arguments, technical procedures, or other fine-grained differences.
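
To make the contrast concrete, the sketch below defines a small fine-grained label set for a hypothetical contract-review task and rejects any annotation whose label falls outside it. The label names, the `Annotation` fields, and the task itself are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass

# Hypothetical fine-grained label set for a contract-review task,
# used instead of generic "positive"/"negative" labels.
CLAUSE_LABELS = {
    "indemnification",
    "limitation_of_liability",
    "termination_for_convenience",
    "termination_for_cause",
    "governing_law",
}


@dataclass
class Annotation:
    text: str
    label: str
    annotator: str
    notes: str = ""  # free-text rationale, useful when reviewing guidelines

    def __post_init__(self) -> None:
        # Reject labels outside the agreed schema so typos never reach training data.
        if self.label not in CLAUSE_LABELS:
            raise ValueError(f"unknown label: {self.label!r}")


record = Annotation(
    text="Either party may terminate this Agreement upon 30 days' written notice.",
    label="termination_for_convenience",
    annotator="a1",
)
```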

Key Steps in Domain-Specific Fine-Tuning

The first key step in domain-specific fine-tuning is to prepare and annotate the data. Examples reflecting the kinds of input the model will encounter must be collected and carefully labeled according to the domain's specific requirements. Once the dataset is ready, it is usually divided into training and validation sets, and sometimes a held-out test set.
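
A minimal sketch of the split step, assuming each example is a dict with a `label` key. It uses a stratified split (each label is divided separately) so that rare domain labels still appear in every set; the 80/10/10 ratios and fixed seed are illustrative defaults, and `stratified_split` is a hypothetical helper rather than a library function.

```python
import random
from collections import defaultdict


def stratified_split(examples, label_key="label", ratios=(0.8, 0.1, 0.1), seed=42):
    """Split labeled examples into train/validation/test sets while keeping
    each label's share roughly the same across all three."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    by_label = defaultdict(list)
    for ex in examples:
        by_label[ex[label_key]].append(ex)

    train, val, test = [], [], []
    for items in by_label.values():
        rng.shuffle(items)
        n_train = int(len(items) * ratios[0])
        n_val = int(len(items) * ratios[1])
        train.extend(items[:n_train])
        val.extend(items[n_train:n_train + n_val])
        test.extend(items[n_train + n_val:])  # remainder goes to the test set
    return train, val, test
```

Splitting per label, rather than shuffling the whole dataset once, avoids the case where a rare but important label ends up entirely in the training set and is never evaluated.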

Once the data is prepared, fine-tuning begins: a pre-trained model continues training on the newly annotated, domain-specific dataset. Hyperparameters such as learning rate and batch size are tuned so that the model adapts to the domain without losing its general capabilities. During training, validation performance is closely monitored to detect signs of overfitting or gaps in what the model has learned. After training, the model is evaluated on real-world tasks or unseen examples to verify its performance in the target domain.
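
Monitoring validation loss for overfitting can be sketched independently of any particular training framework. The hypothetical `EarlyStopping` class below records one validation loss per evaluation and signals a stop once the loss has failed to improve for `patience` consecutive evaluations. The loss values are simulated, and most training frameworks offer a built-in equivalent.

```python
class EarlyStopping:
    """Signal when validation loss stops improving during fine-tuning.

    patience: number of evaluations without improvement to tolerate.
    min_delta: smallest decrease in loss that counts as an improvement.
    """

    def __init__(self, patience: int = 2, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_evals = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one validation result; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch  # the checkpoint from this epoch is the one to keep
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


# Simulated validation losses: steady improvement, then a rise that suggests overfitting.
val_losses = [0.92, 0.71, 0.63, 0.61, 0.64, 0.69, 0.75]
stopper = EarlyStopping(patience=2, min_delta=0.005)
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        break
```

With these simulated values the loop stops after epoch 5, and `stopper.best_epoch` points to epoch 3 as the checkpoint to keep.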

Gathering Data

Gathering data for domain-specific fine-tuning involves identifying and collecting examples that closely match the real-world tasks the model is expected to perform. This data should reflect the language, structure, and problems typical of the target domain, whether it comes from existing internal resources, public datasets, or newly created material. Quality often matters more than quantity at this stage: a smaller, well-chosen dataset can train a model more effectively than a large collection of loosely related examples.

In many cases, the available data must be carefully curated to remove noise, irrelevant content, or inconsistencies that could mislead the model during fine-tuning. Depending on the domain, it may also be necessary to anonymize sensitive information or balance the dataset so that all critical subcategories are adequately represented. Collecting the right data lays the foundation for successful fine-tuning, because it shapes how well the model can learn the patterns, terminology, and reasoning unique to the intended use case.
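
The sketch below shows two simple curation passes under stated assumptions: each example is a dict with `text` and `subcategory` keys, duplicates are detected only by exact matching after normalizing whitespace and case, and a subcategory counts as underrepresented if it falls below a chosen share of the dataset (10% here). Both helpers are hypothetical; real pipelines often add fuzzier deduplication.

```python
from collections import Counter


def curate(examples, min_chars=20):
    """Drop very short texts and duplicates (after normalizing whitespace and case)."""
    seen = set()
    kept = []
    for ex in examples:
        norm = " ".join(ex["text"].split()).lower()
        if len(norm) < min_chars or norm in seen:
            continue  # too short to be useful, or already kept
        seen.add(norm)
        kept.append(ex)
    return kept


def underrepresented(examples, min_share=0.1, key="subcategory"):
    """Return subcategories whose share of the dataset is below min_share."""
    counts = Counter(ex[key] for ex in examples)
    total = sum(counts.values())
    return sorted(c for c, n in counts.items() if n / total < min_share)
```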

Techniques for Effective Annotation

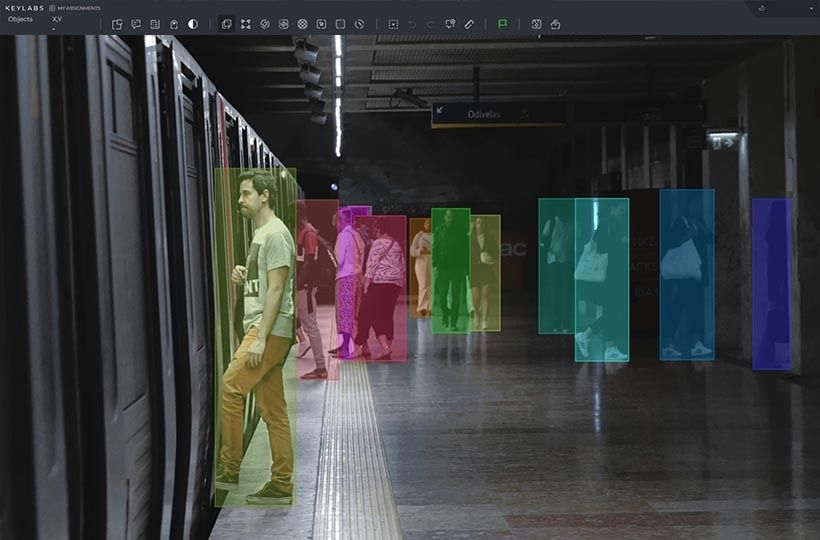

Effective annotation for domain-specific fine-tuning focuses on consistency, clarity, and relevance. One critical approach is to write detailed annotation guidelines before any labeling begins, clearly defining how different cases should be labeled and giving examples of correct and incorrect annotations. These guidelines help maintain uniformity, especially when multiple annotators are involved. Pilot annotation phases, in which a small subset of data is labeled and reviewed, are often used to refine the guidelines and catch misunderstandings early.

Another technique is iterative annotation, in which the dataset is built over several rounds, each informed by model feedback or error analysis from the previous round. This lets the annotation strategy evolve in response to real performance issues rather than assumptions. Annotation tools that support structured labeling, version control, and easy correction also increase efficiency and reduce errors. Throughout the process, prioritizing diverse examples that represent the full range of domain-specific tasks ensures that the model learns not only simple patterns but also the complex or rare cases that matter most in practice.
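
One common way to drive each round is uncertainty sampling from active learning: send annotators the examples the current model is least confident about. The sketch below uses least-confidence scoring and assumes a hypothetical `predict_proba` callable that returns class probabilities for a text; error analysis on a labeled validation set is an equally valid way to choose the next batch.

```python
from typing import Callable, List, Sequence


def select_for_annotation(
    pool: Sequence[str],
    predict_proba: Callable[[str], Sequence[float]],
    budget: int,
) -> List[str]:
    """Return the `budget` unlabeled texts with the lowest top-class probability
    (least-confidence sampling), i.e. the ones the model is least sure about."""
    return sorted(pool, key=lambda text: max(predict_proba(text)))[:budget]


# Stand-in for a model fine-tuned in the previous round (hypothetical scores).
fake_scores = {
    "clause A": [0.90, 0.10],
    "clause B": [0.55, 0.45],
    "clause C": [0.60, 0.40],
    "clause D": [0.99, 0.01],
}
next_batch = select_for_annotation(list(fake_scores), fake_scores.__getitem__, budget=2)
```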

Quality Assurance in Annotation

One of the primary methods for maintaining high-quality annotations is regular review and validation. This may involve periodic spot checks or random sampling of annotations to confirm that they follow the guidelines and are consistent across annotators. In some cases, dual annotation is used: two annotators label the same data, and a third expert or a consensus discussion resolves any disagreements.
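
The text does not prescribe an agreement measure; one widely used choice for two annotators is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below computes it and lists the items where the annotators disagree, which would go to a third expert for adjudication. Function names and labels are illustrative.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: share of items both annotators labeled identically.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random with their own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label throughout; kappa is undefined, treat as agreement
    return (observed - expected) / (1 - expected)


def items_to_adjudicate(item_ids, labels_a, labels_b):
    """IDs of items where the two annotators disagree, to be resolved by a third expert."""
    return [i for i, a, b in zip(item_ids, labels_a, labels_b) if a != b]


ids = ["c1", "c2", "c3", "c4", "c5", "c6"]
ann_1 = ["breach", "breach", "no_breach", "no_breach", "breach", "no_breach"]
ann_2 = ["breach", "no_breach", "no_breach", "no_breach", "breach", "breach"]
kappa = cohens_kappa(ann_1, ann_2)
to_review = items_to_adjudicate(ids, ann_1, ann_2)
```

Here the annotators agree on four of six items (67%), but because each uses both labels equally often, half of that agreement is expected by chance and kappa comes out at about 0.33.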

Feedback loops are another important aspect of quality assurance. Annotators should receive prompt feedback on their work, especially when errors are found, so they can correct mistakes and improve future annotations. Periodic refresher sessions for annotators are also helpful, particularly as the complexity of the domain or task increases. Tools that track annotator performance and flag potential inconsistencies further support the process by enabling real-time monitoring and adjustment.
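
Annotator tracking can start as simply as scoring each annotator against reviewer labels from spot checks. This sketch assumes spot-check results arrive as `(annotator, given_label, reviewer_label)` tuples; the helper name, the 0.85 accuracy threshold, and the five-item minimum are arbitrary illustrative choices.

```python
from collections import defaultdict


def flag_annotators(spot_checks, threshold=0.85, min_items=5):
    """Return {annotator: accuracy} for annotators below threshold.

    spot_checks: iterable of (annotator_id, given_label, reviewer_label) tuples.
    Annotators with fewer than min_items reviewed items are not flagged yet.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for annotator, given, reviewed in spot_checks:
        total[annotator] += 1
        correct[annotator] += given == reviewed  # True counts as 1
    return {
        a: correct[a] / total[a]
        for a in total
        if total[a] >= min_items and correct[a] / total[a] < threshold
    }
```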

Ethical Considerations in Annotation

One of the key ethical issues is the privacy and security of the data being annotated. For example, when working with medical or legal data, it is essential to anonymize personal information and to handle sensitive data in accordance with privacy regulations such as HIPAA or GDPR. Ethical annotation practices also include obtaining proper consent from the individuals whose data is used and being transparent about how that data will be used.
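
As an illustration of the anonymization step, the sketch below replaces a few well-formatted identifiers with typed placeholders using regular expressions. The patterns are assumptions that cover only email addresses, simple US-style phone numbers, and numeric dates; pattern matching alone will miss names, addresses, and free-form identifiers, so it is a starting point rather than a guarantee of HIPAA or GDPR compliance.

```python
import re

# Hypothetical patterns for a few common identifier formats.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace matched identifiers with typed placeholders such as [EMAIL]."""
    for tag, pattern in PATTERNS.items():
        text = pattern.sub(f"[{tag}]", text)
    return text


note = "Contact jane.doe@example.com or 555-123-4567 about the visit on 03/14/2024."
clean = redact(note)
# "Contact [EMAIL] or [PHONE] about the visit on [DATE]."
```

Typed placeholders, rather than blanking text out, keep enough structure for annotators and the model to see that an identifier was present.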

Another consideration is the potential for bias in the annotation process. To mitigate this, it is essential to use a diverse pool of annotators and to review the data regularly to ensure that the labels are balanced and reflect all relevant perspectives.

Bias and Fairness in Data

Data bias and fairness are central issues in annotation, because they directly affect a fine-tuned model's performance and its ethical implications. Bias can enter the data in various ways, such as through the selection of unrepresentative examples, the language used in annotations, or the personal biases of the annotators themselves. If the dataset is skewed toward certain demographic groups or viewpoints, the model may learn to make decisions that disproportionately favor those groups, producing biased results that harm underrepresented individuals or communities.

To reduce bias, design the annotation process carefully with fairness in mind. This includes ensuring that the data represents the range of scenarios, voices, and experiences relevant to the domain. Annotators should be trained to recognize their own potential biases and to follow guidelines that encourage objective, inclusive labeling. Regular checks of annotated data can help identify patterns of bias and prompt corrective action. Fairness metrics should also be part of model evaluation, so teams can assess whether a fine-tuned model produces equitable results across different groups.
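
The text does not name a specific fairness metric. One simple option, sketched below, is to compute accuracy separately for each group and report the largest gap between groups; other common criteria, such as demographic parity or equalized odds, compare different quantities. The group labels and helper names are hypothetical.

```python
from collections import defaultdict


def per_group_accuracy(groups, y_true, y_pred):
    """Accuracy computed separately for each group (e.g. a demographic attribute)."""
    hits, counts = defaultdict(int), defaultdict(int)
    for g, t, p in zip(groups, y_true, y_pred):
        counts[g] += 1
        hits[g] += t == p  # True counts as 1
    return {g: hits[g] / counts[g] for g in counts}


def accuracy_gap(groups, y_true, y_pred):
    """Largest accuracy difference between any two groups; 0 means parity."""
    acc = per_group_accuracy(groups, y_true, y_pred)
    return max(acc.values()) - min(acc.values())
```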

Measuring Success in Fine-Tuning

Measuring the success of domain-specific fine-tuning involves more than tracking basic performance metrics; it requires assessing how well the model meets the specific goals of the intended application. Standard metrics such as accuracy, precision, recall, and F1-score are useful starting points, especially when the annotated data contains clear labels or expected outcomes. However, many specialized tasks also need domain-specific evaluation criteria to capture nuances that general benchmarks may miss.
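
For reference, the sketch below computes precision, recall, and F1 for a single class from gold and predicted labels. In practice a library routine such as scikit-learn's `precision_recall_fscore_support` would typically be used; this dependency-free version just makes the definitions explicit. The label names are illustrative.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 for one class, treated as the positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # correctly flagged
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # misses
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = ["malignant", "benign", "malignant", "benign", "malignant"]
y_pred = ["malignant", "malignant", "benign", "benign", "malignant"]
prec, rec, f1 = precision_recall_f1(y_true, y_pred, positive="malignant")
```

Scoring per class matters in specialized domains, where a rare but critical label can have poor recall even when overall accuracy looks high.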

Success is also measured through real-world testing, in which the fine-tuned model is evaluated on tasks that accurately reflect how it will be used in practice. This includes peer review, user studies, or performance on curated validation sets of complex or critical examples. In some cases, measuring improvements in workflow efficiency, decision support, or error reduction gives a clearer picture of the model's value than raw metrics alone.

User Feedback and Iteration

User feedback and iteration are essential for refining a fine-tuned model, especially in domain-specific applications where success often depends on how well the model fits into real-world workflows. Once the model is deployed or tested, feedback from real users provides insights beyond what automated evaluation metrics can capture. Users can identify where the model is useful, where it falls short, and where it misunderstands important context or details that only someone immersed in the subject matter would notice.

Using this feedback, teams can return to the fine-tuning process with clearer priorities. This might involve collecting additional annotated data that targets the model's weaknesses, adjusting the annotation scheme to better reflect real-world needs, or fine-tuning the model again with updated goals. Iterating on user feedback helps a model evolve from merely functional to robust and well integrated within its domain, creating a system that adapts to the complexities and requirements of the people it is meant to help.
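
A minimal sketch of turning user feedback into annotation priorities, assuming each feedback item has been tagged with a hypothetical `category` field and a `negative` flag: count the negative reports per category and target the most frequent ones in the next annotation round.

```python
from collections import Counter


def prioritize_feedback(feedback, top_n=3):
    """Return the top_n (category, count) pairs among negative user reports."""
    counts = Counter(item["category"] for item in feedback if item["negative"])
    return counts.most_common(top_n)
```

Frequency is only one signal; a rare complaint about a safety-critical error may deserve priority over a common cosmetic one, so a team might weight categories by impact as well.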

Summary

Beyond technical accuracy, effective annotation methods address deeper issues such as bias, fairness, and ethical responsibility. They recognize that a model's behavior reflects the data it learns from, and that careful quality control, diverse representation, and respect for privacy are not optional extras but essential elements of building reliable systems. Strategies such as iterative improvement, user feedback, and crowdsourcing platforms let teams balance scalability with accuracy, producing datasets that are large enough to train robust models yet detailed enough to capture the complexity of the domain. Through thoughtful annotation, fine-tuning becomes a path to models that are not only smart but meaningfully aligned with real-world needs.

FAQ

What is domain-specific fine-tuning?

Domain-specific fine-tuning involves adapting pre-trained AI models for specialized tasks in a particular field. This process enables models to deeply grasp industry-specific terms, methods, and nuances.

Why is annotation critical in domain-specific fine-tuning?

Annotation is vital because it gives models high-quality, labeled data that accurately reflects the domain. This data enables the model to learn the domain's unique characteristics, terminology, and patterns.

What are the key steps in domain-specific fine-tuning?

Key steps include identifying your domain's unique aspects, gathering relevant data, annotating it effectively, and evaluating its quality. This ensures the data is accurate and relevant before fine-tuning.

What are some effective annotation techniques for domain-specific fine-tuning?

Effective techniques include manual annotation for complex tasks, automated annotation for large-scale tasks, and crowdsourced annotation platforms.

Are there any ethical considerations in domain-specific annotation?

Yes, ethical considerations are crucial. Address bias and fairness, comply with regulations like GDPR and CCPA, and protect sensitive information.

How is the success of domain-specific fine-tuning measured?

Measure success with standard metrics such as accuracy, precision, recall, and F1-score, alongside domain-specific KPIs. Collect user feedback to evaluate real-world performance and guide improvements.