Video annotation tool

Keylabs: elevating video data annotation with unmatched performance.

Our platform, strategically powered by geo-oriented servers for every project, delivers high-speed, efficient annotation.

Featuring advanced capabilities like Object Linking, Object Interpolation, AI Assistance, and sophisticated post-annotation techniques, Keylabs ensures a swift workflow without compromising on quality.

With a 99.9% precision level, our solution is tailored for those who demand excellence in video data annotation.

Trust Keylabs to transform your video annotation process with speed, accuracy, and unparalleled performance.

Video annotation types

Keylabs allows AI developers to craft exceptional datasets. The Keylabs platform features a full suite of video annotation methods, on par with any video annotation tool. The Keylabs data annotation tool lets annotators meet the specific needs of AI projects through a combination of labeling techniques. The key video annotation types are:

Semantic segmentation

This annotation type assigns every pixel in a video frame to a class. Annotators use the Keylabs annotation platform to outline objects or people in video frames, and these objects and people are then labeled accurately. Everything else in the frame, including background features, is treated the same way. In this way, information is added to every pixel in a piece of video training data.
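To make the per-pixel idea concrete, here is a minimal sketch of what a semantic mask for one tiny frame might look like. The class IDs, class names and mask layout are assumptions made for this illustration and do not describe the Keylabs export format.

```python
from collections import Counter

# Hypothetical class IDs chosen for this illustration only.
CLASS_NAMES = {0: "background", 1: "road", 2: "person"}

# A tiny 4x6 "frame": each cell holds the class ID of one pixel.
semantic_mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 2, 2, 0, 0, 0],
    [1, 2, 2, 1, 1, 1],
    [1, 1, 1, 1, 1, 1],
]


def class_pixel_counts(mask):
    """Count how many pixels in the frame belong to each class name."""
    counts = Counter(pixel for row in mask for pixel in row)
    return {CLASS_NAMES[class_id]: n for class_id, n in counts.items()}


counts = class_pixel_counts(semantic_mask)
print(counts)
```

Every pixel, background included, carries exactly one class, so the counts always add up to the frame size.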

Instance segmentation

This annotation type extends semantic segmentation by adding more detail to video training data. With instance segmentation, every individual instance of an object or person is given its own label and highlight colour. This additional information can lead to higher-performing AI models.
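The difference from semantic segmentation can be sketched by storing an instance ID next to each class ID. The pair layout and the ID values below are assumptions for illustration, not a Keylabs data format.

```python
# Each pixel stores (class_id, instance_id); instance_id 0 means "no instance".
# Class IDs and the mask layout are hypothetical.
PERSON = 2

instance_mask = [
    [(0, 0), (2, 1), (2, 1), (0, 0), (2, 2), (2, 2)],
    [(0, 0), (2, 1), (2, 1), (0, 0), (2, 2), (0, 0)],
]


def instances_of(mask, class_id):
    """Return {instance_id: pixel_count} for every instance of one class."""
    sizes = {}
    for row in mask:
        for cls, inst in row:
            if cls == class_id and inst != 0:
                sizes[inst] = sizes.get(inst, 0) + 1
    return sizes


def to_semantic(mask):
    """Drop the instance IDs to recover a plain semantic mask."""
    return [[cls for cls, _ in row] for row in mask]


person_instances = instances_of(instance_mask, PERSON)
semantic = to_semantic(instance_mask)
print(person_instances)
```

Both people share the class `PERSON`, but each keeps its own instance ID, which is the extra information a semantic mask alone cannot express.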

Video annotation techniques

There is a wide variety of video annotation techniques that can be applied to training data. Keylabs is a video annotation tool that gives AI companies access to the full range. These techniques are used to create video training data for a huge number of different AI use cases. All are available with attractive data annotation tool pricing:

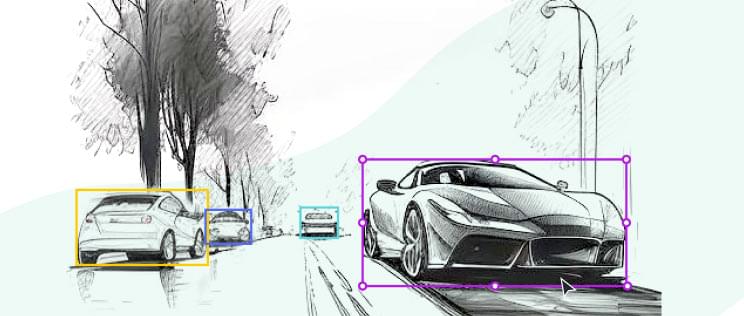

Bounding box

In this technique, annotators drag boxes around objects to locate them in a video frame. The advantage of bounding box annotation is that it is fast and easy to apply over thousands of frames. However, it can be less accurate than segmentation methods.
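One reason boxes scale well across many frames is that a box drawn on two keyframes can be filled in for the frames between them. The sketch below uses plain linear interpolation as an assumption. It is not a description of how the Keylabs Object Interpolation feature works internally, and the `Box` type and function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    x: float  # left edge in pixels
    y: float  # top edge in pixels
    w: float  # width in pixels
    h: float  # height in pixels


def interpolate_box(start, end, start_frame, end_frame, frame):
    """Linearly interpolate a box between two annotated keyframes."""
    if start_frame >= end_frame or not start_frame <= frame <= end_frame:
        raise ValueError("frame must lie between two distinct keyframes")
    t = (frame - start_frame) / (end_frame - start_frame)

    def lerp(a, b):
        return a + (b - a) * t

    return Box(
        lerp(start.x, end.x),
        lerp(start.y, end.y),
        lerp(start.w, end.w),
        lerp(start.h, end.h),
    )


# The annotator draws the box on frames 0 and 10 only.
first = Box(10, 20, 40, 40)
last = Box(110, 20, 60, 40)
middle = interpolate_box(first, last, 0, 10, 5)
print(middle)
```

Linear interpolation only suits roughly steady motion, so keyframes would normally be placed closer together wherever an object changes speed or direction.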

Polygon

This annotation technique allows annotators to capture more complex shapes. To create a polygon annotation, annotators connect short line segments around an object. This allows them to precisely define the shape of any object or person. As a result, this technique is essential for segmentation methods.
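To illustrate how much tighter a polygon can be than a box, the following sketch computes the area of a polygon with the shoelace formula and compares it with the area of its enclosing box. The vertex list is a made-up L-shaped example, and the function names are hypothetical.

```python
def polygon_area(vertices):
    """Area of a simple polygon via the shoelace formula."""
    twice_area = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2


def polygon_bounds(vertices):
    """Axis-aligned bounding box as (min_x, min_y, max_x, max_y)."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (min(xs), min(ys), max(xs), max(ys))


# Hypothetical L-shaped object outlined with six vertices.
l_shape = [(0, 0), (4, 0), (4, 2), (2, 2), (2, 4), (0, 4)]

area = polygon_area(l_shape)
min_x, min_y, max_x, max_y = polygon_bounds(l_shape)
box_area = (max_x - min_x) * (max_y - min_y)
print(area, box_area)
```

Here the polygon covers 12 square pixels while its bounding box covers 16, so a quarter of the box would be background.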

Rotating bounding box

The rotating bounding boxes data annotation tool for videos offers a sophisticated solution to annotate objects at varying orientations, ensuring accurate representation in non-orthogonal perspectives. By allowing rotation of bounding rectangles, it caters to the dynamic nature of videos where objects rarely maintain a static axis, enhancing both precision and recall rates in subsequent model training. Leveraging such advanced annotation techniques is crucial for intricate applications, particularly in fields like autonomous driving and drone surveillance where orientation fidelity is paramount.
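A rotated box is commonly stored as a centre, a size and an angle. The sketch below converts that form into four corner points. The parameterisation and the angle convention are assumptions for illustration, since the source does not state how Keylabs stores rotated boxes.

```python
import math


def rotated_box_corners(cx, cy, w, h, angle_deg):
    """Corner points of a w-by-h box centred at (cx, cy), rotated by angle_deg.

    Uses the standard 2D rotation matrix. In image coordinates, where y
    grows downwards, a positive angle appears clockwise on screen.
    """
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    half_extents = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [
        (cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a)
        for dx, dy in half_extents
    ]


corners = rotated_box_corners(0, 0, 4, 2, 90)
for x, y in corners:
    print(round(x, 6), round(y, 6))
```

With a 90 degree rotation, the 4-by-2 box becomes a 2-by-4 box, which is an easy case to check by eye.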

Skeletal

This annotation technique is used to show the position of the human body in frames of training video. To apply it, annotators draw lines along human limbs and connect them at the joints.
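A skeleton can be thought of as a set of named joints plus the limbs that connect them. The joint names, coordinates and helper function below are hypothetical and only sketch the idea.

```python
import math

# Hypothetical joints for one arm in one frame, as (x, y) pixel coordinates.
joints = {
    "shoulder": (100, 50),
    "elbow": (130, 90),
    "wrist": (130, 140),
}

# Each limb is a line drawn between two joints.
limbs = [("shoulder", "elbow"), ("elbow", "wrist")]


def limb_lengths(joints, limbs):
    """Pixel length of every limb, keyed by 'jointA-jointB'."""
    return {f"{a}-{b}": math.dist(joints[a], joints[b]) for a, b in limbs}


lengths = limb_lengths(joints, limbs)
print(lengths)
```

Because neighbouring limbs share a joint, moving one joint updates both lines at once, which keeps the skeleton connected from frame to frame.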

Cuboid

The cuboid data annotation tool is designed to capture objects in videos with a three-dimensional perspective, providing depth, height and width context. By encompassing targets within 3D boxes rather than flat 2D rectangles, it ensures a more holistic representation of objects, especially pivotal in applications like AR/VR and advanced robotics. Adopting the cuboid approach in data annotation significantly elevates the spatial understanding of machine learning models, bridging the gap between 2D imagery and 3D spatial comprehension.
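As a rough sketch of the depth, height and width idea, a cuboid can be described by a centre and three dimensions and then expanded into eight corners. The example assumes an axis-aligned cuboid with no rotation, which is a simplification, and the function name is hypothetical.

```python
from itertools import product


def cuboid_corners(center, size):
    """Eight corners of an axis-aligned cuboid.

    center is (x, y, z) and size is (width, height, depth). Rotation is
    deliberately left out to keep the sketch short.
    """
    cx, cy, cz = center
    w, h, d = size
    return [
        (cx + sx * w / 2, cy + sy * h / 2, cz + sz * d / 2)
        for sx, sy, sz in product((-1, 1), repeat=3)
    ]


cuboid = cuboid_corners((0, 0, 0), (2, 4, 6))
for corner in cuboid:
    print(corner)
```

A flat 2D rectangle needs four numbers, while even this simplified cuboid needs six, and the additional values are what carry the depth information.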

Key points annotation

This technique is primarily used to locate facial features in video frames. Annotators perform key point annotation by placing points on the eyes, nose and mouth of people in video training data.

Mesh

The mesh data annotation tool delves deeper into object representation, leveraging interconnected vertices to form intricate polygonal structures that map objects with unparalleled detail. Unlike traditional bounding boxes or cuboids, the mesh approach can outline the nuanced contours and undulations of targets, making it invaluable for tasks requiring high-fidelity 3D reconstructions, such as digital twins or complex AR/VR simulations. By capturing these intricate geometries, machine learning models can achieve a richer understanding of object complexities, elevating their performance in detailed spatial tasks.

Lane annotation

When AI developers want video training data to show linear structures like roads or pipelines, they turn to lane annotation techniques. To do lane annotation, annotators draw parallel lines along these structures in each frame of the training video.

3D point cloud

The 3D Point Cloud Data Annotation tool is instrumental in processing and interpreting data primarily sourced from LIDAR sensors. By capturing and characterizing vast arrays of spatially-referenced points, it vividly represents objects and terrains in three-dimensional space. Especially vital for industries like autonomous driving, where LIDAR-generated point clouds are foundational, this tool aids in crafting models with a deeper understanding of their environments.
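A common step when labelling point clouds is deciding which points fall inside an annotated 3D box. The sketch below assumes an axis-aligned box and a handful of invented points. Real LIDAR frames contain far more points, and the function name is hypothetical.

```python
def points_in_box(points, box_min, box_max):
    """Return the points that lie inside an axis-aligned 3D box (inclusive)."""
    return [
        p
        for p in points
        if all(lo <= v <= hi for v, lo, hi in zip(p, box_min, box_max))
    ]


# Invented (x, y, z) points in metres.
cloud = [
    (1.0, 1.0, 0.5),
    (5.0, 5.0, 1.0),
    (1.5, 0.5, 1.0),
    (0.0, 0.0, 3.0),
]

labelled = points_in_box(cloud, box_min=(0, 0, 0), box_max=(2, 2, 2))
print(labelled)
```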

Bitmask

This annotation type links individual pixels to specific objects, allowing for annotations that are separated or contain gaps.
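The sketch below shows a bitmask for a single object that is split into two separate pieces, together with a simple run-length encoding of it. The mask values and the encoding scheme are assumptions for illustration and are not a documented Keylabs format.

```python
def rle_encode(bits):
    """Run-length encode a flat list of 0/1 values as [(value, run_length), ...]."""
    runs = []
    for bit in bits:
        if runs and runs[-1][0] == bit:
            runs[-1] = (bit, runs[-1][1] + 1)  # extend the current run
        else:
            runs.append((bit, 1))  # start a new run
    return runs


# One object, marked with 1s, partly hidden so it appears in two pieces.
bitmask = [
    [0, 1, 1, 0, 0, 1],
    [0, 1, 1, 0, 0, 1],
]

flat = [bit for row in bitmask for bit in row]  # row-major order
runs = rle_encode(flat)
object_pixels = sum(flat)
print(runs, object_pixels)
```

A single polygon could not describe both pieces at once, whereas the bitmask simply marks whichever pixels belong to the object.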

Custom annotation

The Keylabs platform allows AI developers to combine the annotation methods and techniques shown above to create bespoke video training datasets.

Industries we serve

Keylabs was created as a platform that combines state-of-the-art, performance-oriented and user-friendly annotation tools with built-in machine learning and operation management capabilities.

Keylabs: a powerful tool for video annotation

Quality results from a team of annotation experts

Tap into decades of annotation experience from the team that created the Keylabs video annotation tool.

Video annotation tool pricing

Keylabs offers competitive video annotation tool pricing for AI developers. Choose a smaller flexible package or take advantage of our volume-based discounts. In our demo call, we evaluate the needs of your project and identify the pricing options that are right for you.

Start Free Trial

Transparent Plans & Pricing with No Hidden Fees!