Annotating Low-Resource Languages: Building NLP Datasets from Scratch

Did you know that of the approximately 7,000 languages worldwide, only about 20 have access to modern natural language processing (NLP) tools? This staggering statistic emphasizes the urgent need to develop NLP for under-resourced languages, as language inequality is becoming increasingly evident in the tech world.

Annotating such languages is a technical challenge and a key step towards linguistic inclusiveness and technological equity.

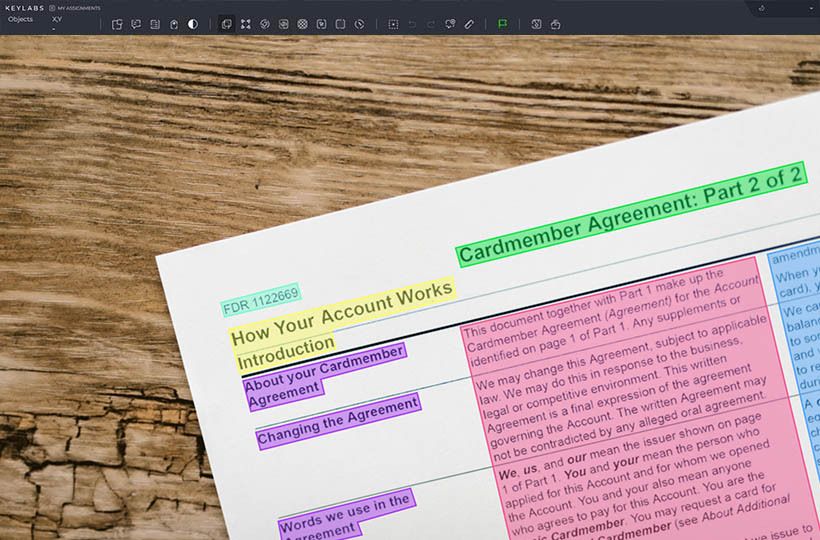

In this article, we will discuss methodologies and tools for effectively annotating low-resource languages. Thanks to advanced technologies and expert validation, we are opening up new opportunities for preserving and developing linguistic diversity in AI.

Key Takeaways

- Significant Gaps: Of roughly 7,000 languages worldwide, only about 20 have access to modern NLP tools.

- Technological and Human Collaboration: Combining advanced technologies with expert human validation.

- Essential Methodologies: Effective annotation strategies are critical for building NLP datasets from scratch.

Understanding Low-Resource Languages and Their Challenges

Low-resource languages have limited digital representation and too little data to support natural language processing (NLP). According to the World Economic Forum, of the approximately 7,000 documented languages currently in existence, about half may be endangered, and it is predicted that about 1,500 languages could disappear by the end of the century.

Bringing such languages into NLP is crucial for technological inclusion. However, deciding which languages count as low-resource is difficult, because the number of speakers is a poor guide. For example, Icelandic, spoken by about 360,000 people, may have more annotated data than Swahili, which is spoken by about 200 million people but has limited online content. Key challenges include:

- Lack of written materials: Text is scarce, and high-quality labeled data is scarcer still, which makes it hard to develop effective language processing models.

- Lack of digital tools: Without reliable digital tools, both annotation and model training become more complicated.

- Obtaining high-quality annotations: It is difficult to create accurate annotations without native speakers, and they are often few in number.

Methods such as back translation, synthetic data generation, and data sharing are used to overcome these difficulties. Governments and funding agencies are key in supporting research in these languages. Strategies such as multilingual training and data augmentation are necessary to improve NLP capabilities.
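Back translation can be sketched in a few lines. The example below is a minimal illustration, not a production pipeline: `to_pivot` and `from_pivot` are hypothetical callables standing in for whatever translation system is available (source to pivot language and back), and the function name `back_translate` is an assumption made for this sketch.

```python
from typing import Callable, List, Tuple

# A translator is any function that maps a sentence to a sentence.
# Both callables are placeholders for a real translation system.
Translator = Callable[[str], str]


def back_translate(
    sentences: List[str],
    to_pivot: Translator,
    from_pivot: Translator,
) -> List[Tuple[str, str]]:
    """Return (original, paraphrase) pairs produced by a round trip.

    Round trips that come back empty or identical to the original
    are dropped, because they add no new training signal.
    """
    augmented = []
    for sentence in sentences:
        paraphrase = from_pivot(to_pivot(sentence))
        if paraphrase.strip() and paraphrase != sentence:
            augmented.append((sentence, paraphrase))
    return augmented
```

The paraphrases inherit the label of the original sentence, so each kept pair adds one labeled example. Translation quality for low-resource languages is often weak, so the output would normally be spot-checked by a speaker before it is used for training.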

Importance of Language Annotation for NLP

Well-annotated datasets allow AI to perform complex language tasks more efficiently, increasing productivity and accuracy. For example, in the healthcare industry, annotated data has improved deep learning models for analyzing medical records, leading to more accurate predictions.

However, annotating text for NLP requires a systematic approach to ensure quality and consistency. Challenges include ambiguity, high costs, and the need for domain knowledge. These problems are more pronounced for low-resource languages, where data is scarce.

To solve these problems, AI tools and semi-automated methods are being actively implemented to simplify annotation processes and increase accuracy.
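One simple form of semi-automated annotation is pre-annotation: a small seed lexicon labels the tokens it knows, and only the remaining tokens go to a human. The sketch below assumes a hypothetical seed `lexicon` that maps lowercase word forms to labels; the function names are illustrative and do not come from any particular tool.

```python
from typing import Dict, List, Optional, Tuple

# (token, label) pairs; label is None when the lexicon has no entry.
Annotated = List[Tuple[str, Optional[str]]]


def pre_annotate(tokens: List[str], lexicon: Dict[str, str]) -> Annotated:
    """Attach a label from the seed lexicon to every known token."""
    return [(token, lexicon.get(token.lower())) for token in tokens]


def needs_review(annotated: Annotated) -> List[int]:
    """Return positions of tokens that still need a human label."""
    return [i for i, (_, label) in enumerate(annotated) if label is None]
```

For instance, with `lexicon = {"nairobi": "LOC"}`, the tokens `["Anaishi", "Nairobi"]` come back with only the first position flagged for review. Labels proposed by the lexicon are suggestions, so a reviewer should still be able to override them.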

Strategies for Collecting Data in Low-Resource Languages

Effective data collection strategies are key to overcoming the shortage of annotated and unannotated data for under-resourced languages. One promising approach is to use crowdsourcing platforms and digital spaces to engage communities. This method utilizes the collective knowledge of native speakers, ensuring cultural sensitivity and inclusiveness.

For example, a study conducted by Microsoft Research showed that it is possible to collect annotated language data directly from low-income workers, which not only enriches language data but also provides additional income opportunities for these communities.

Another example comes from the ELLORA project, which uses innovative data collection methods, including gamification and crowdsourcing, to develop speech and natural language processing technologies for under-resourced languages.

Involving native speakers in the crowdsourced data collection process contributes to the creation of more diverse and representative language resources, which is critical for the development of natural language processing technologies for low-resource languages.
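Crowdsourced labels usually need to be reconciled, since several contributors label the same item. A common baseline is majority voting with a minimum agreement threshold, sketched below. The `min_agreement` value of 0.6 is an arbitrary assumption for illustration, not a recommended setting.

```python
from collections import Counter
from typing import List, Optional


def aggregate(labels: List[str], min_agreement: float = 0.6) -> Optional[str]:
    """Return the majority label, or None if agreement is too low.

    Items that return None can be sent back for more annotations
    or escalated to an expert reviewer.
    """
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    if count / len(labels) >= min_agreement:
        return label
    return None
```

With three contributors, two matching votes are enough to pass the default threshold, while an even split between two contributors is not.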

Best Practices for Low-Resource Language Annotation

In natural language processing (NLP), developing effective models for resource-constrained languages is challenging due to the lack of training data and the limited number of native speakers. However, research shows that involving non-native annotators can significantly improve the quality of such models.

Recommendations for improving annotation quality:

- Documentation and instructions: Provide detailed guidelines and step-by-step instructions so that annotators label data unambiguously and consistently.

- Training of annotators: Conduct initial and regular training sessions so that annotators understand the task and have the necessary skills.

- Quality control: Run regular quality checks on the annotations using gold-standard data and other control methods.

- Use of technology: Use tools that manage and validate annotations, automate part of the process, and reduce errors.

- Use native speakers: Whenever possible, engage native speakers to review and extend the annotations to account for cultural and linguistic sensitivities.
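The quality-control recommendation can be made concrete with two standard measurements: accuracy against gold-standard items, and Cohen's kappa for agreement between two annotators. The sketch below is a minimal pure-Python version; the function names and the dictionary layout (item id mapped to label) are assumptions made for this example.

```python
from collections import Counter
from typing import Dict, List


def gold_accuracy(annotations: Dict[str, str], gold: Dict[str, str]) -> float:
    """Share of gold items that the annotator labeled correctly.

    Only items present in both dictionaries are scored.
    """
    shared = [item for item in gold if item in annotations]
    if not shared:
        return 0.0
    correct = sum(annotations[item] == gold[item] for item in shared)
    return correct / len(shared)


def cohen_kappa(a: List[str], b: List[str]) -> float:
    """Cohen's kappa for two annotators labeling the same items in order."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Agreement expected by chance, from each annotator's label frequencies.
    expected = sum(counts_a[l] * counts_b[l] for l in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label
    return (observed - expected) / (1 - expected)
```

Gold accuracy tracks each annotator over time, while kappa corrects raw agreement for chance and helps show whether the guidelines themselves are ambiguous.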

Ethical Considerations in Language Annotation

The ethical aspects of language annotation are extremely important, especially for languages with limited resources. These languages often lack sufficient textual data to train models, which can lead to bias and inaccuracies in machine learning.

Informed consent and respect for communities. The primary ethical principle is to obtain informed consent from participants who provide language data. They must be informed about how their data will be used and the potential consequences. For example, research on machine learning for low-resource languages emphasizes the need to take into account the specific needs and concerns of communities related to the use of their language data.

Eliminating bias in data. Machine learning models trained on large datasets often perform better for languages with large resources. To create fairer models, it is necessary to identify and reduce bias in training data, which includes regular checks and involving diverse perspectives when creating datasets.

Transparency and inclusiveness. Transparency is a key element of ethical annotation practice because it builds trust among users and stakeholders. Inclusiveness means covering the varieties of a language. For example, modern Armenian has two standard forms, Eastern and Western Armenian, which are spoken in different regions. Including this diversity in datasets is important for the development of inclusive AI.
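A basic coverage check makes this kind of diversity visible. The sketch below assumes that each record carries a hypothetical `variety` field, and it flags varieties whose share of the dataset falls below a threshold. The 20 percent default is an arbitrary assumption for illustration.

```python
from collections import Counter
from typing import Dict, List


def variety_shares(records: List[dict], key: str = "variety") -> Dict[str, float]:
    """Share of records per language variety; missing values count as 'unknown'."""
    counts = Counter(record.get(key, "unknown") for record in records)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {variety: count / total for variety, count in counts.items()}


def underrepresented(shares: Dict[str, float], min_share: float = 0.2) -> List[str]:
    """Varieties whose share is below the threshold, in sorted order."""
    return sorted(v for v, share in shares.items() if share < min_share)
```

Publishing these shares alongside a dataset is one way to support transparency, because users can see which varieties a model trained on it is likely to serve poorly.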

Adherence to ethical principles. Principles such as data minimization and anonymization help reduce the risks associated with data leaks during model training. Developers should adhere to ethical standards throughout the annotation and model development process. By balancing technical expertise with ethical considerations, more socially conscious and culturally sensitive natural language processing technologies can be developed.
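Data minimization and anonymization can be sketched as two small steps: keep only the fields a task needs, and mask obvious identifiers in the text. The field names and regular expressions below are simplified assumptions for illustration. Real projects would need patterns suited to the language and region, and regular expressions alone will not catch names or other indirect identifiers.

```python
import re
from typing import Dict, Iterable

# Deliberately simple patterns; they are illustrative, not exhaustive.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def minimize(record: Dict[str, str], keep: Iterable[str] = ("text", "language")) -> Dict[str, str]:
    """Drop every field that is not explicitly needed."""
    return {field: record[field] for field in keep if field in record}


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)
```

Applying `minimize` before `redact` means contributor names and contact fields never reach the annotation tool, and only the free text needs pattern-based masking.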

Summary

Collecting data for low-resource languages often requires collaboration with local communities, which increases the availability of language resources and ensures greater cultural sensitivity. In addition, it is important to actively work to eliminate bias in the data and create inclusive, equitable models.

New technologies, such as automatic speech recognition and crowdsourcing, can help collect data even for languages with limited resources. At the same time, ethical principles such as confidentiality and accountability for data use should be at the center of developers' attention to avoid exploitation of local linguistic and cultural heritages.

Overall, integrating these approaches can contribute to the development of more inclusive and culturally sensitive natural language processing technologies.

FAQ

What is low-resource language annotation?

Low-resource language annotation involves creating and labeling datasets for languages with limited digital presence. It is vital for including these languages in NLP and machine learning.

Why is annotation critical in NLP for low-resource languages?

Annotation is key in NLP for creating richly labeled datasets. These datasets are essential for training machine learning models. They support AI development in underrepresented languages and preserve cultural insights.

What are the common challenges in annotating low-resource languages?

Challenges include a lack of written material and insufficient digital tools. Also, getting high-quality annotations without native speakers is difficult. These hurdles make developing accurate NLP models for these languages hard.

How does language annotation help in preserving cultural insights?

Language annotation systematically documents and labels linguistic data. This process archives cultural and linguistic information. It makes this information accessible for future generations and enriches global datasets for more nuanced AI.

How can crowdsourcing and community collaboration aid in low-resource language annotation?

Crowdsourcing and community collaboration use social media and online communities to gather and annotate data. These methods pool resources and native speaker knowledge. They result in culturally sensitive and robust datasets.

What tools and technologies are available for annotating low-resource languages?

Tools like 'Dragonfly' and open-source software are available. Automated annotation techniques using machine learning algorithms also speed up the process.

What are the best practices for ensuring quality control in language annotation?

Best practices include setting robust annotation guidelines and training annotators well. Implementing strict quality control measures is also key. These steps help reduce errors and improve dataset reliability.

What ethical considerations must be addressed in language annotation?

Ethical considerations include securing informed consent and respecting intellectual property rights. Minimizing biases in datasets is also essential. These principles ensure the sustainability and fairness of language annotation projects, even for low-resource languages.