Benchmarking Annotation Quality: Setting Industry-Standard KPIs

The Cost-Performance Index (CPI) is an important metric in KPI design and a valuable way to evaluate annotation performance. CPI measures cost-effectiveness and resource efficiency, and it can be used to benchmark annotation quality against industry KPI standards.
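As a rough illustration, here is how CPI can be calculated if we assume the earned value management definition, CPI = earned value / actual cost. The text does not spell out a formula, so that definition is an assumption, and the helper name and project figures below are hypothetical.

```python
# Hypothetical helper; assumes the earned value management (EVM) definition of CPI.
def cost_performance_index(budget_at_completion: float,
                           percent_complete: float,
                           actual_cost: float) -> float:
    """CPI = EV / AC, where EV (earned value) is the budgeted cost of completed work."""
    if not 0.0 <= percent_complete <= 1.0:
        raise ValueError("percent_complete must be between 0 and 1")
    if actual_cost <= 0:
        raise ValueError("actual_cost must be positive")
    earned_value = budget_at_completion * percent_complete
    return earned_value / actual_cost


# Hypothetical project: $5,000 budgeted for 10,000 labels,
# 6,000 labels delivered so far, $3,500 actually spent.
cpi = cost_performance_index(5000.0, 6000 / 10000, 3500.0)
print(f"CPI = {cpi:.2f}")  # CPI = 0.86
```

Under this definition, a CPI below 1.0 means the work delivered so far cost more than planned, and a CPI above 1.0 means it cost less.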

Industry KPI standards help organizations identify performance gaps, gather insights, and implement strategies to improve annotation. Benchmarks let companies track annotation performance and work toward operational excellence, which supports long-term success.

Quick Take

- Key Performance Indicators (KPIs) such as CPI are critical for measuring annotation efficiency.

- Setting industry-standard KPIs helps identify performance gaps and implement improvements.

- Benchmarks serve as baseline metrics for tracking progress and guiding strategic decisions.

- Effective benchmarking can foster operational excellence and drive long-term success.

Understanding Quality Benchmarking in Annotation

Quality benchmarking evaluates and compares different approaches or strategies for data annotation using quality metrics to test accuracy, consistency, and impact on machine learning models.

Definition of Quality Benchmarking

Quality benchmarking is the process of evaluating and comparing the quality of AI models, annotations, or algorithms to find the best approach.

Quality benchmarking measures and compares annotations using metrics such as precision, recall, and F1 score.

- Precision – measures what proportion of predicted positives are correct.

- Recall – measures what proportion of all actual positives were correctly identified.

- F1 score – the harmonic mean of precision and recall, which weights them equally.
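A minimal sketch of these three metrics for a single class, scoring annotations against a gold-standard reference. The helper name and the sample labels are hypothetical.

```python
# Hypothetical helper for illustration: scores one class against gold labels.
def precision_recall_f1(gold, annotated, positive):
    """Return (precision, recall, f1) for the given positive class."""
    if len(gold) != len(annotated):
        raise ValueError("gold and annotated must have the same length")
    pairs = list(zip(gold, annotated))
    tp = sum(1 for g, a in pairs if a == positive and g == positive)
    fp = sum(1 for g, a in pairs if a == positive and g != positive)
    fn = sum(1 for g, a in pairs if a != positive and g == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Hypothetical labels; "cat" is treated as the positive class.
gold      = ["cat", "cat", "dog", "cat", "dog", "dog"]
annotated = ["cat", "dog", "dog", "cat", "cat", "dog"]
p, r, f1 = precision_recall_f1(gold, annotated, positive="cat")
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")  # precision=0.67 recall=0.67 f1=0.67
```

For multi-class projects, the same function can be run once per class and the results averaged.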

By comparing annotated data against a reference (gold-standard) dataset using these metrics, companies can identify inconsistencies and misclassifications early and improve the quality of annotations.

Benchmarking Quality Across Industries

Quality benchmarking helps ensure compliance with standards and regulations in industries such as healthcare and finance. In healthcare, it compares medical algorithms, diagnostic systems, and patient care. In finance, it assesses the quality of forecasts, risk management, and investment strategies.

Companies can improve the quality of their annotations by placing benchmark items with known correct answers in the labeling queue and by using consensus scoring.
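Consensus scoring can be sketched as a majority vote per item, with the agreement ratio used to route low-agreement items back for review. The 0.7 threshold, the helper name, and the item IDs are illustrative assumptions, not a prescribed standard.

```python
from collections import Counter


# Hypothetical helper; the 0.7 default threshold is an illustrative assumption.
def consensus_scores(labels_by_item, min_agreement=0.7):
    """Majority label, agreement ratio, and a review flag for each item.

    labels_by_item maps an item ID to the labels given by different annotators.
    Ties go to the label encountered first (Counter.most_common ordering).
    """
    results = {}
    for item_id, labels in labels_by_item.items():
        label, votes = Counter(labels).most_common(1)[0]
        agreement = votes / len(labels)
        results[item_id] = (label, agreement, agreement < min_agreement)
    return results


# Hypothetical votes from three annotators per image.
votes = {
    "img_001": ["car", "car", "car"],
    "img_002": ["car", "truck", "car"],
    "img_003": ["bus", "truck", "car"],
}
for item, (label, agreement, needs_review) in consensus_scores(votes).items():
    print(f"{item}: {label} ({agreement:.0%} agreement) review={needs_review}")
```

Here both `img_002` and `img_003` fall below the threshold and would be sent back for review; raising or lowering `min_agreement` trades review workload against label reliability.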

The Role of Key Performance Indicators (KPIs)

Key Performance Indicators (KPIs) are quantitative metrics that measure the success of a company, project, or process in achieving strategic goals. They quantify performance, support decision-making, and keep teams focused on goals.

Types of KPIs for Annotation Quality:

- Accuracy measures how closely annotations match a reference standard (ground truth).

- Efficiency measures the speed of the annotation process.

- Cost-effectiveness measures the financial value of the annotation process.

- Consistency measures how uniformly annotations are applied across annotators and datasets.

- Conformance measures compliance with industry standards and regulatory requirements.
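Several of these KPIs reduce to simple ratios. The sketch below assumes accuracy against a gold standard, efficiency as items per hour, cost-effectiveness as cost per item, and consistency as Cohen's kappa between two annotators. These are common choices rather than the only valid definitions, and all helper names and data are hypothetical. Conformance usually depends on domain-specific rules and is left out.

```python
from collections import Counter


# Hypothetical helpers; each KPI definition here is one common choice, not a standard.
def accuracy(gold, labels):
    """Share of annotations that match the gold standard."""
    return sum(g == a for g, a in zip(gold, labels)) / len(gold)


def throughput_per_hour(items_done, hours):
    """Efficiency: annotated items per working hour."""
    return items_done / hours


def cost_per_item(total_cost, items_done):
    """Cost-effectiveness: spend per annotated item."""
    return total_cost / items_done


def cohens_kappa(labels_a, labels_b):
    """Consistency: agreement between two annotators, corrected for chance."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    categories = set(labels_a) | set(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1.0:  # both annotators used one identical label throughout
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical sample data.
gold        = ["pos", "pos", "neg", "neg", "neg", "neg"]
annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "neg"]
print(f"accuracy (A vs gold): {accuracy(gold, annotator_a):.2f}")
print(f"throughput: {throughput_per_hour(120, 4):.0f} items/hour")
print(f"cost per item: ${cost_per_item(600.0, 1200):.2f}")
print(f"Cohen's kappa (A vs B): {cohens_kappa(annotator_a, annotator_b):.2f}")
```

Cohen's kappa is used here instead of raw agreement because two annotators who label mostly one class will agree often by chance alone.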

How to Select the Right KPIs

- Alignment with business goals. Ensure that KPIs are aligned with the organization's goals.

- Benchmarking against industry standards. Compare your KPIs with industry standards to see where performance stands.

- Assess resource allocation. Choose KPIs that show how effectively resources, time, and money are being used.

- Track performance over time. Choose performance metrics that track performance trends and provide insights for improvement.

- Customer engagement. Include metrics that measure customer satisfaction, which reflects the quality of the service provided.

Together, these practices help create a robust KPI system that drives continuous improvement in annotation quality.

Standard Tools for Quality Benchmarking

Quality tools and benchmarking software offer detailed assessments of performance and operational metrics.

- Performance dashboards track key performance indicators (KPIs).

- Analytics software helps visualize complex data.

- Benchmarking matrices allow organizations to compare performance side by side against multiple criteria.

- Benchmarking reports show performance metrics against industry standards, highlighting areas for improvement.
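A benchmarking matrix is often implemented as a weighted scoring table. In this sketch, each option is scored from 1 to 5 per criterion (higher is better, so a low cost earns a high score). The weights, scores, and helper name are hypothetical.

```python
# Hypothetical helper for a weighted benchmarking matrix.
def weighted_scores(matrix, weights):
    """Weighted average score per option across benchmarking criteria."""
    total_weight = sum(weights.values())
    return {
        option: sum(scores[criterion] * w for criterion, w in weights.items()) / total_weight
        for option, scores in matrix.items()
    }


# Hypothetical weights and 1-5 scores (higher is better on every criterion).
weights = {"accuracy": 0.5, "throughput": 0.3, "cost": 0.2}
matrix = {
    "Team A": {"accuracy": 4, "throughput": 3, "cost": 5},
    "Team B": {"accuracy": 5, "throughput": 2, "cost": 3},
    "Industry benchmark": {"accuracy": 4, "throughput": 4, "cost": 4},
}
ranking = sorted(weighted_scores(matrix, weights).items(),
                 key=lambda kv: kv[1], reverse=True)
for option, score in ranking:
    print(f"{option}: {score:.2f}")
```

Dividing by the total weight keeps results on the same 1 to 5 scale even if the weights do not sum to 1.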

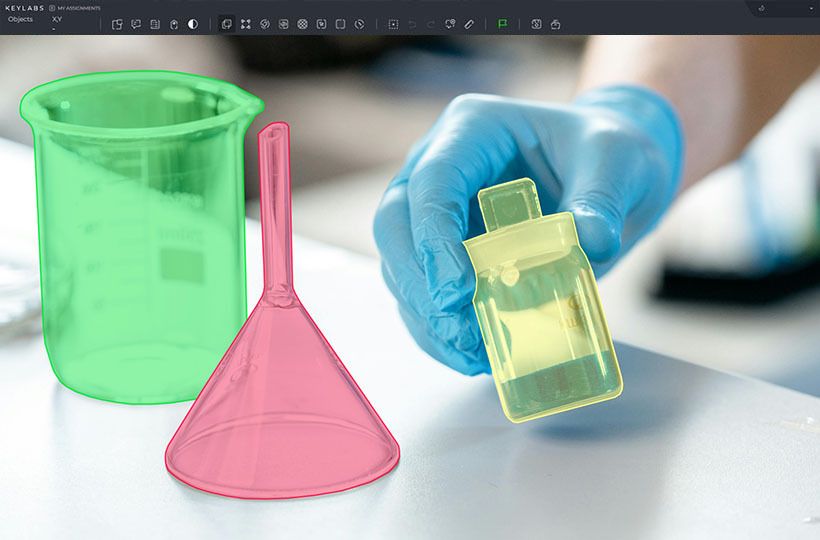

Keylabs’ Role in Quality Benchmarking

Keylabs offers data annotation and labeling solutions that ensure accurate benchmarking, especially in machine learning and artificial intelligence. With a focus on accuracy, Keylabs ensures that benchmarking processes are supported by high-quality annotated data, enabling organizations to make sound, data-driven decisions.

Comparison of Benchmarking Software

Benchmarking software compares the performance of different systems, models, or processes. Options include performance testing tools that measure speed and system load, platforms that analyze the accuracy and quality of machine learning models, data visualization and analysis tools that compare business process outcomes, and tools that verify code quality. The right choice depends on your goals, scalability needs, and compatibility with existing technologies.

Establishing Industry Standards for Quality

Industry quality standards help organizations meet expectations by adhering to generally accepted practices for quality comparison. Established standards, such as those behind ISO certifications, provide a common reference point for quality benchmarking. ISO certifications confirm that products, services, or management systems conform to international standards developed by the International Organization for Standardization (ISO); the certificates themselves are issued by independent certification bodies rather than by ISO. These standards cover many aspects of operations, from quality and safety to environmental requirements and information security management.

Quality Benchmarking Practices

Formulating a benchmarking strategy involves the following steps:

- Establish clear objectives to guide the benchmarking effort.

- Ensure data consistency.

- Run continuous comparisons and audits.

- Use a mix of benchmarking methods.

- Maintain documentation.

- Perform a cost-benefit analysis.
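For the continuous audit step, one common approach (an assumption here, not something the text prescribes) is to review a random sample of annotated items and report the error rate with a confidence interval. This sketch draws a seeded sample for reproducibility and uses the Wilson score interval; the helper names, sample size, and error count are hypothetical.

```python
import math
import random


# Hypothetical helpers for a sampling-based annotation audit.
def audit_sample(item_ids, sample_size, seed=0):
    """Draw a reproducible random sample of items for manual review."""
    rng = random.Random(seed)
    return rng.sample(item_ids, sample_size)


def wilson_interval(errors, n, z=1.96):
    """Wilson score interval for the error rate (z=1.96 gives roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = errors / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return max(0.0, center - half_width), min(1.0, center + half_width)


item_ids = [f"item_{i:05d}" for i in range(10_000)]
sample = audit_sample(item_ids, 200)
errors_found = 6  # hypothetical result of reviewing the sample
low, high = wilson_interval(errors_found, len(sample))
print(f"observed error rate {errors_found / len(sample):.1%}, "
      f"95% CI {low:.1%} to {high:.1%}")
```

The Wilson interval is chosen over the simple normal approximation because it stays within 0 to 1 and behaves better when error rates are small, which is typical for mature annotation pipelines.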

Engaging Stakeholders in the Process

Involving stakeholders improves understanding of the benchmarking goals and methods. It is important to include data owners, business users, and other stakeholders in the process, because together they provide a complete picture of data quality and how to improve it.

Key points:

- Define the objectives and goals.

- Identify relevant benchmarking partners.

- Involve stakeholders.

- Use public reporting.

Data Collection Methods for Quality Benchmarking

Quantitative data provides numerical statistics for benchmarking performance metrics such as accuracy and timeliness. Qualitative data offers the descriptive information needed to understand operational nuances; it includes survey and interview data that shed light on human behavior and customer experiences.

Ensuring Data Accuracy and Reliability

Data accuracy is maintained through validation against quality standards and early detection of issues. Involving stakeholders in the data collection process also helps maintain data integrity.

Data governance tools automate data validation and improve accuracy. Benchmarking built on accurate data collection improves decision-making and business development.
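Automated validation can start as a set of rule checks per annotation record. The bounding-box schema, label taxonomy, and `validate` helper below are hypothetical examples of such rules, not any specific tool's format.

```python
from dataclasses import dataclass

# Hypothetical label taxonomy for an object-detection project.
ALLOWED_LABELS = {"car", "truck", "pedestrian"}


@dataclass
class BoxAnnotation:
    """Hypothetical bounding-box record in pixel coordinates."""
    image_width: int
    image_height: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str


def validate(ann: BoxAnnotation) -> list[str]:
    """Return a list of rule violations; an empty list means the record passed."""
    problems = []
    if ann.label not in ALLOWED_LABELS:
        problems.append(f"unknown label {ann.label!r}")
    if not 0 <= ann.x_min < ann.x_max <= ann.image_width:
        problems.append("x coordinates out of bounds or inverted")
    if not 0 <= ann.y_min < ann.y_max <= ann.image_height:
        problems.append("y coordinates out of bounds or inverted")
    return problems


good = BoxAnnotation(640, 480, 10, 20, 100, 200, "car")
bad = BoxAnnotation(640, 480, 300, 50, 250, 700, "bicycle")
print(validate(good))  # []
print(validate(bad))
```

Returning every violation rather than stopping at the first one makes it easier to report error categories when benchmarking data quality.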

Analyzing Benchmarking Results

Understanding the various analytical techniques is essential for sound data interpretation. Business performance metrics such as revenue growth, market share, and profitability are also common inputs for benchmarking.

Making Data-Driven Decisions

Analyzing performance gaps and projecting future performance levels helps set benchmarks for improvement. Benchmarking across industries shows how a company performs against its competitors and how it allocates its resources.
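Projecting future levels can be as simple as fitting a linear trend to a KPI history and estimating when it crosses a target. A straight-line trend is a strong simplifying assumption, and the helper names and weekly accuracy values are hypothetical.

```python
# Hypothetical helpers; assumes the KPI follows a roughly linear trend.
def linear_fit(values):
    """Least-squares slope and intercept for values observed at periods 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until_target(values, target):
    """Period index at which the fitted trend reaches target (higher-is-better KPI).

    Returns None when the trend is flat or declining.
    """
    slope, intercept = linear_fit(values)
    if slope <= 0:
        return None
    return (target - intercept) / slope


weekly_accuracy = [0.90, 0.91, 0.92, 0.93]  # hypothetical weeks 0-3
print(f"{periods_until_target(weekly_accuracy, 0.95):.1f}")  # 5.0
```

The gap between the current value and the target, divided by the trend slope, gives a rough timeline that can inform where to set the next benchmark.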

Competitive benchmarking, combined with analytics tools, helps companies understand customer needs and set clear goals. This approach strengthens the quality management system.

Continuous Improvement Through Quality Benchmarking

Benchmarking allows companies to improve their processes and maintain a competitive advantage. It is an iterative process: each cycle involves a detailed review of current practices, identification of areas for improvement, and development of strategies for change. The process involves several important steps:

- Comparing performance metrics, such as unit cost and cycle time, with industry leaders.

- Identifying performance gaps and areas for improvement.

- Implementing strategies to improve operational efficiency.
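The comparison step can be sketched as a relative gap per metric. Unit cost and cycle time are both lower-is-better, so a positive gap means the team trails the leader. The helper name and figures are hypothetical.

```python
# Hypothetical helper; assumes every metric passed in is lower-is-better.
def relative_gaps(current, leader):
    """Relative gap per metric; positive means we trail the leader."""
    return {metric: (current[metric] - leader[metric]) / leader[metric]
            for metric in leader}


# Hypothetical figures for one benchmarking cycle.
current = {"unit_cost_usd": 0.12, "cycle_time_hours": 36.0}
leader = {"unit_cost_usd": 0.10, "cycle_time_hours": 24.0}

gaps = relative_gaps(current, leader)
for metric, gap in sorted(gaps.items(), key=lambda kv: kv[1], reverse=True):
    status = "behind leader" if gap > 0 else "at or ahead of leader"
    print(f"{metric}: {gap:+.0%} ({status})")
```

Sorting by gap size puts the largest shortfall first, which is a reasonable place to focus improvement strategies; rerunning the comparison at the end of each cycle shows whether those strategies are closing the gaps.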

Future Trends in Annotation Quality Benchmarking

New technologies such as artificial intelligence (AI) and machine learning (ML) promise to change how data annotation quality is assessed and maintained. Industry forecasts predict that most enterprises will use synthetic data for AI and analytics, and some expect synthetic data to outperform real data for certain tasks, driving innovation across industries.

The success of AI models depends on the quality and realism of their training data, which underscores the need to maintain high quality standards.

Organizations regularly audit datasets to keep them relevant. They use model audit processes to reduce bias and improve data generation methods. Through these practices, organizations are meeting the growing demand for high-quality data annotation.
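One simple bias check in a dataset or model audit is to compare annotation accuracy across subgroups and flag large disparities. The grouping ("daylight" vs "night" images), the 0.05 tolerance, and the helper names are illustrative assumptions.

```python
from collections import defaultdict


# Hypothetical helpers for a per-subgroup accuracy audit.
def accuracy_by_group(records):
    """records: iterable of (group, gold_label, annotated_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, gold, annotated in records:
        total[group] += 1
        correct[group] += int(gold == annotated)
    return {group: correct[group] / total[group] for group in total}


def max_disparity(group_accuracy):
    """Gap between the best- and worst-served groups."""
    return max(group_accuracy.values()) - min(group_accuracy.values())


# Hypothetical audit records.
records = [
    ("daylight", "car", "car"), ("daylight", "truck", "truck"),
    ("daylight", "car", "car"), ("daylight", "pedestrian", "pedestrian"),
    ("night", "car", "truck"), ("night", "truck", "truck"),
    ("night", "pedestrian", "car"), ("night", "car", "car"),
]
per_group = accuracy_by_group(records)
TOLERANCE = 0.05  # hypothetical acceptable disparity
if max_disparity(per_group) > TOLERANCE:
    print(f"Bias flag: accuracy by group {per_group}")
```

A flagged disparity like this one suggests collecting more examples or clearer guidelines for the weaker group before the data is used for training.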

FAQ

What is quality benchmarking in annotation?

Quality benchmarking is the process of evaluating and comparing the quality of AI models, annotations, or algorithms to find the best approach.

Why is quality benchmarking important in industries?

Quality benchmarking helps identify performance gaps. It supports strategic planning for operational excellence and helps keep companies competitive.

What types of KPIs are used for measuring annotation quality?

Key Performance Indicators (KPIs) for annotation quality include accuracy, efficiency, cost-effectiveness, consistency, and conformance. These metrics assess how well annotation processes align with industry standards and organizational objectives.

How do you select the right KPIs for quality benchmarking in data annotation?

Choosing the right KPIs involves understanding the specific demands of the annotation industry. Aligning them with broader business outcomes ensures KPIs reflect the organization's objectives.

Which tools are commonly used for quality benchmarking?

Standard tools include performance dashboards, analytics software, benchmarking matrices, and benchmarking reports.

What are some current industry standards and best practices for annotation quality?

Current industry standards for annotation quality involve setting clear, measurable, and attainable benchmarks that are aligned with best practices. Successful implementations lead to improved accuracy, consistency, and reliability, which are essential for machine learning and AI projects.

What is the best way to develop a benchmarking strategy?

A robust benchmarking strategy includes defining clear objectives and identifying benchmark partners. Establishing a systematic approach to data collection and analysis is also key.

How do you ensure data accuracy and reliability in quality benchmarking?

Ensuring data accuracy and reliability involves meticulous data collection methods and rigorous verification. Continuous monitoring is also essential. Both quantitative and qualitative data should be accurately measured and contextualized.

How do you interpret benchmarking data and make data-driven decisions?

Interpreting benchmarking data requires analysis that identifies meaningful patterns and trends. Decisions are then based on these analytical results, which inform evidence-based improvement strategies.

How does continuous improvement work in quality benchmarking?

Continuous improvement through quality benchmarking is an iterative process. It requires regular review and adjustment to keep pace with changing industry standards.

What emerging technologies will impact quality benchmarking in the future?

New technologies, such as AI and machine learning, are expected to increase the accuracy of benchmarking processes and drive innovation in the industry.