Beyond Benchmarks: Real-World Applications of YOLOv7 for Various Tasks

What makes YOLOv7 a genuine standout is that it reached top-tier performance while trained from scratch, without any pre-trained weights, a testament to its refined and robust architecture. The machine learning community has taken note, hailing it as the next ‘official’ YOLO. A glance at the YOLOv7 repository reveals a trove of features built for serious training, such as multi-GPU training loops, data augmentations, and learning rate schedulers. These features form the backbone of a model that takes object detection applications far beyond a proof of concept.

The real thrill comes from applying YOLOv7 to real-world scenarios. Its architectural strengths show in tasks ranging from autonomous vehicle perception to retail analytics, where it reads store shelves with high accuracy. Because it can be fine-tuned on new datasets, its real-world applications reach well beyond the classes it was originally trained on, and YOLOv7 continues to break new ground in adaptive and intelligent object detection.

Key Takeaways

- At its release in 2022, YOLOv7 was reported to surpass all known object detectors in both speed and accuracy in the 5 to 160 FPS range.

- It reached this level of performance while trained from scratch, without pre-trained weights, a significant milestone.

- Widely regarded as the next official YOLO model, it is poised to change how we approach real-time object detection.

- YOLOv7's rich feature set is designed to support a wide range of applications across industries.

- Because it can be fine-tuned on custom classes, YOLOv7 is effective at detecting a wide variety of objects in diverse environments.

Introduction to YOLOv7 in Object Detection

The landscape of real-time object detection has been fundamentally reshaped with the advent of the YOLOv7 architecture. Released in 2022, YOLOv7 represents the culmination of advancements in speed and accuracy in object detection, setting a new benchmark for performance and efficiency in the computer vision community.

The Genesis and Evolution of YOLO Algorithms

Since its inception in 2016, the YOLO (You Only Look Once) series has gone through several versions, each refining the balance between detection speed and accuracy. YOLOv7, the newest version at the time of its release, builds on this legacy by enhancing the single-shot detector framework, which processes an image in one pass and thus speeds up detection without compromising precision.
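A single pass produces many overlapping candidate boxes, so YOLO-family detectors, YOLOv7 included, filter their raw predictions with non-maximum suppression (NMS). The sketch below is a minimal, class-agnostic greedy NMS in plain Python. The function names and the 0.45 IoU threshold are illustrative assumptions; YOLOv7's own implementation works on tensors and separates boxes by class.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_thres: float = 0.45) -> List[int]:
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining candidates whose overlap with the kept box exceeds the threshold.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thres]
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

Here the second box overlaps the first with an IoU of about 0.82 and is suppressed, while the distant third box survives.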

YOLOv7: A Breakthrough in Speed and Accuracy

The YOLOv7 architecture pushes the boundaries of both speed and accuracy. According to its authors, compared with state-of-the-art real-time detectors such as YOLOv5 and YOLOX, YOLOv7 needs about 50% less computation while running faster and detecting more accurately. This combination of speed and accuracy makes it a state-of-the-art tool for a variety of real-world applications.

Adapting YOLOv7 for Diverse Environments

YOLOv7's flexibility is evident in its adaptability across different computing environments and real-world scenarios that involve variable object shapes and sizes. Whether it is detecting traffic signs in smart city infrastructure or monitoring retail spaces for security, the architecture handles diverse object classes and irregular sizes efficiently, demonstrating its robustness and versatility. This adaptability comes from the training procedures and model scaling techniques introduced by its authors, Chien-Yao Wang (WongKinYiu on GitHub), Alexey Bochkovskiy, and Hong-Yuan Mark Liao.

YOLOv7 continues to evolve: its repository also offers variants for pose estimation and instance segmentation, broadening its applications and keeping it relevant in ever-changing operational landscapes.

YOLOv7 Architecture Explained

YOLOv7, the latest YOLO iteration at the time of its release, incorporates extensive architectural changes that significantly enhance its functionality and performance. Components such as extended efficient layer aggregation networks (E-ELAN) and planned re-parameterized convolution improve efficiency, and the model family is designed to run in various computational environments, from edge devices to cloud-based systems.

Key Architectural Enhancements from Previous Versions

YOLOv7 distinguishes itself with a series of architectural refinements aimed at optimizing both speed and accuracy. The reported reduction of about 40% in parameters and about 50% in computation, relative to earlier state-of-the-art real-time detectors, exemplifies its efficiency. YOLOv7 also comes in variants for different hardware, namely YOLOv7-tiny for edge GPUs, the base YOLOv7 for normal GPUs, and YOLOv7-W6 for cloud GPUs, showcasing its flexibility under diverse computing conditions.

Understanding the Training Process and Data Augmentation Techniques

YOLOv7's training recipe is intricate yet effective. It combines an anchor-based detection head, feature pyramid-style multi-scale feature fusion, and label assignment strategies that include an optimal transport assignment (OTA) style assigner and YOLOv7's own coarse-to-fine lead-guided assignment for its auxiliary head. Data augmentation plays a critical role in this process: mosaic stitches four training images into one, and mixup blends two images while keeping the labels of both. These techniques increase the variety of training samples and improve the model's performance across object detection scenarios.
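To make the mixup idea concrete, here is a simplified, dependency-free sketch for single-channel images stored as nested lists. It is not the repository's code: the function name, the image representation, and drawing the blend ratio from a Beta(8, 8) distribution are assumptions for illustration.

```python
import random
from typing import List, Optional, Tuple

Image = List[List[float]]  # single-channel image stored as rows of pixel values
Label = Tuple[int, float, float, float, float]  # (class_id, x_center, y_center, width, height)


def mixup(img1: Image, labels1: List[Label], img2: Image, labels2: List[Label],
          ratio: Optional[float] = None) -> Tuple[Image, List[Label]]:
    """Blend two same-sized images pixel-wise and keep the boxes of both."""
    if ratio is None:
        # Assumption: draw the blend ratio from a symmetric Beta(8, 8), which stays near 0.5.
        ratio = random.betavariate(8.0, 8.0)
    blended = [
        [ratio * p1 + (1.0 - ratio) * p2 for p1, p2 in zip(row1, row2)]
        for row1, row2 in zip(img1, img2)
    ]
    # Detection-style mixup concatenates the label lists instead of weighting them.
    return blended, labels1 + labels2


black = [[0.0, 0.0], [0.0, 0.0]]
white = [[1.0, 1.0], [1.0, 1.0]]
mixed, labels = mixup(black, [(0, 0.5, 0.5, 0.2, 0.2)], white, [(1, 0.3, 0.3, 0.1, 0.1)], ratio=0.5)
print(mixed)   # [[0.5, 0.5], [0.5, 0.5]]
print(labels)  # [(0, 0.5, 0.5, 0.2, 0.2), (1, 0.3, 0.3, 0.1, 0.1)]
```

Keeping every box, rather than down-weighting labels as in classification mixup, means objects from both source images remain detection targets.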

YOLOv7's modular design makes it easy to customize: users can adjust learning rates and choose data augmentation settings, which the repository exposes through hyperparameter YAML files, to suit their needs. Such adaptations are vital for tailoring the model to specific operational requirements and achieving strong performance across applications.
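Learning rate schedules are one of these knobs. A common choice in YOLOv5/YOLOv7-style training is a cosine decay from an initial rate `lr0` down to `lr0 * lrf`. The sketch below reproduces that shape; the function names and the values `lr0=0.01` and `lrf=0.1` are illustrative assumptions, not values read from a specific YOLOv7 config.

```python
import math


def cosine_lr_factor(epoch: int, epochs: int, lrf: float = 0.1) -> float:
    """Multiplier that falls from 1.0 at epoch 0 to lrf at the last epoch along a half cosine."""
    return ((1 - math.cos(epoch * math.pi / epochs)) / 2) * (lrf - 1.0) + 1.0


def lr_at(epoch: int, epochs: int, lr0: float = 0.01, lrf: float = 0.1) -> float:
    """Learning rate for a given epoch under the cosine schedule."""
    return lr0 * cosine_lr_factor(epoch, epochs, lrf)


epochs = 300
for e in (0, 150, 300):
    print(e, round(lr_at(e, epochs), 5))
```

With these values, the rate starts at 0.01, reaches 0.0055 halfway through, and ends at 0.001. The smooth decay avoids the abrupt drops of a step schedule.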

| Feature | YOLOv7 | Earlier real-time detectors |
|---|---|---|
| Parameters | About 40% fewer (as reported by the authors) | Larger models for comparable accuracy |
| Computation | About 50% less | Higher computational demand |
| Hardware-specific variants | YOLOv7-tiny (edge GPU), YOLOv7 (normal GPU), YOLOv7-W6 (cloud GPU) | Varies by model family |
| Data augmentation | Mosaic, MixUp | Mosaic was already used in YOLOv4 and YOLOv5 |

This comprehensive approach not only reinforces YOLOv7's dominance in speed and accuracy but also underscores its adaptability, making it a formidable tool in the ongoing evolution of object detection technologies.

YOLOv7 Use Cases

As a leading benchmark in real-time object detection, YOLOv7 is transforming multiple sectors. It offers substantial gains in surveillance, traffic analysis, and medical imaging, making it a pivotal tool in these fields.

Optimizing Surveillance Systems with YOLOv7

Deployed in surveillance systems, YOLOv7 strengthens the detection and monitoring capabilities that security depends on. Facilities can use it to track people and vehicles in real time and enhance safety measures. Because it processes visual data quickly, unusual activity, such as a person entering a restricted area, can be flagged promptly, making it a valuable asset for surveillance.

Improving Traffic Analysis and Vehicle Recognition

In the realm of transportation, YOLOv7 aids in sophisticated traffic analysis by providing precise vehicle recognition capabilities. This functionality not only supports the management of traffic flows but also feeds into the broader scope of smart city infrastructure. By analyzing traffic patterns and vehicle behavior, YOLOv7 helps in devising strategies to alleviate congestion and enhance road safety.

Pioneering Medical Imaging and Diagnostics

In healthcare, YOLOv7 is driving advances in medical imaging. When trained on annotated medical images, it can quickly locate and categorize anatomical structures and abnormalities, helping healthcare professionals diagnose diseases faster and more accurately. This is especially valuable in emergencies, where time is critical, and in routine check-ups, where it can streamline diagnostic procedures.

Across these applications, YOLOv7 not only demonstrates its versatility but also brings forth significant improvements in how data is processed and analyzed in critical real-world scenarios. By integrating YOLOv7 into these sectors, industries can achieve higher operational efficiency, bolster security measures, and enhance service delivery, ultimately contributing to safer, smarter, and more responsive environments.

The Role of YOLOv7 in Autonomous Vehicle Technology

Using YOLOv7, autonomous vehicles can recognize critical elements such as pedestrians, traffic signals, and other vehicles with high precision. This perception capability supports safety features such as automatic braking, pedestrian alerts, and adaptive cruise control, making autonomous vehicles smarter and safer.

Among YOLOv7's greatest strengths are its adaptability and the speed at which it processes visual data. These attributes let equipped vehicles respond immediately to real-time road conditions. For instance, when fine-tuned on urban driving data, it can identify pedestrians in densely populated environments, improving pedestrian safety and helping reduce urban traffic accidents.

Sensor fusion further extends these capabilities by combining YOLOv7's camera-based detections with other sensor modalities such as LiDAR and radar, boosting the vehicle's environmental awareness and operational safety. This integrative approach not only expands the sensing range but also adds data redundancy, which is crucial in complex scenarios such as inclement weather or low-light conditions.

| Feature | Enhancement in Autonomous Vehicles |
|---|---|
| Pedestrian Detection | Enhanced safety in pedestrian-rich environments |
| Traffic Signal Recognition | Supports adherence to traffic laws, reducing violations |
| Environmental Adaptability | Reliable performance in diverse lighting and weather conditions |
| Real-Time Processing | Enables split-second decision making for collision avoidance |

The robust processing power of YOLOv7 allows for a deeper and more nuanced understanding of the vehicle's immediate environment, a critical aspect for the future expansion of autonomous vehicle technology into more complex and unpredictable environments.

Lastly, the potential of YOLOv7 extends beyond just fundamental object detection; its continuous training and refinement process empowers developers and engineers to tailor the system, further enhancing its efficiency and integration within autonomous driving systems. This ongoing enhancement cycle ensures that YOLOv7 remains at the forefront of vehicle technology, driving advancements in real-time object detection and paving the way for more autonomous innovations.

Customizing YOLOv7 for Industry-Specific Needs

The flexibility of YOLOv7 allows for industry-specific customization, making it a powerful tool for diverse operational requirements and environments. By adjusting anchor box sizes and loss functions, organizations can make the model considerably more effective for their specific scenarios.

Defining Custom Anchors and Loss Functions

Addressing specific industry challenges begins with defining custom anchors in YOLOv7. By adjusting the anchor box sizes, companies can optimize the model to better recognize object dimensions and shapes pertinent to their specific field, such as irregularly sized components in manufacturing or unique retail items. Furthermore, customizing loss functions allows the model to focus more precisely on aspects that are critical for a particular industry, thereby improving the accuracy and reliability of detections.
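One common way to derive custom anchors is to cluster the widths and heights of the training boxes with k-means, using 1 - IoU as the distance, an approach popularized by YOLOv2. The sketch below is an independent, dependency-free illustration with hypothetical function names; the YOLOv7 repository ships its own anchor-checking utility, which may use a different metric. The resulting anchors would then go into the `anchors` section of the model configuration YAML, conventionally three per detection scale.

```python
import random
from typing import List, Tuple

WH = Tuple[float, float]  # box width and height in pixels


def wh_iou(a: WH, b: WH) -> float:
    """IoU of two boxes aligned at the same corner, so only their sizes matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(sizes: List[WH], k: int, iters: int = 100, seed: int = 0) -> List[WH]:
    """Cluster box sizes with distance 1 - IoU; return k anchors sorted by area."""
    rng = random.Random(seed)
    centroids = rng.sample(sizes, k)
    for _ in range(iters):
        clusters: List[List[WH]] = [[] for _ in range(k)]
        for s in sizes:
            # Assign each box to the anchor it overlaps most (smallest 1 - IoU).
            best = max(range(k), key=lambda j: wh_iou(s, centroids[j]))
            clusters[best].append(s)
        # Move each anchor to the mean size of its cluster; keep it if the cluster is empty.
        new = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else centroids[j]
            for j, c in enumerate(clusters)
        ]
        if new == centroids:
            break
        centroids = new
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])


sizes = [(10, 12), (11, 10), (9, 11), (100, 80), (95, 90), (105, 85)]
print(kmeans_anchors(sizes, k=2))  # [(10.0, 11.0), (100.0, 85.0)]
```

Using IoU rather than Euclidean distance keeps large boxes from dominating the clustering, so small objects still get well-fitting anchors.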

Deploying YOLOv7 on Edge Devices for Real-World Applications

YOLOv7 deployment on edge devices is instrumental for industries requiring immediate data processing without the latency involved in cloud computing. Edge device implementation of YOLOv7 facilitates quicker decision-making in real-world applications, such as monitoring equipment on a factory floor or managing inventory in retail. Deploying this model locally enhances operational efficiency and can lead to significant advancements in automated systems.
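On an edge device, the pre- and post-processing around the model is often written by hand. YOLO-family models are typically fed letterboxed input: the frame is resized to fit a square while keeping its aspect ratio, then padded. The sketch below computes those parameters and maps predicted boxes back to the original frame. It assumes a fixed 640 x 640 input with centered padding, and its function names are hypothetical; the YOLOv7 repository has its own letterbox helper with more options.

```python
from typing import Tuple

BoxXYXY = Tuple[float, float, float, float]


def letterbox_params(w: int, h: int, size: int = 640) -> Tuple[float, int, int, float, float]:
    """Scale factor, resized size, and per-side padding to fit a w x h frame into size x size."""
    r = min(size / w, size / h)               # one scale for both axes keeps the aspect ratio
    new_w, new_h = round(w * r), round(h * r)
    pad_x = (size - new_w) / 2                # padding split evenly between left and right
    pad_y = (size - new_h) / 2                # and between top and bottom
    return r, new_w, new_h, pad_x, pad_y


def _clip(v: float, hi: float) -> float:
    """Clamp a coordinate to the range [0, hi]."""
    return max(0.0, min(hi, v))


def unletterbox_box(box: BoxXYXY, r: float, pad_x: float, pad_y: float,
                    w: int, h: int) -> BoxXYXY:
    """Map an (x1, y1, x2, y2) box from model-input pixels back to original-frame pixels."""
    x1, y1, x2, y2 = box
    return (_clip((x1 - pad_x) / r, w), _clip((y1 - pad_y) / r, h),
            _clip((x2 - pad_x) / r, w), _clip((y2 - pad_y) / r, h))


r, new_w, new_h, pad_x, pad_y = letterbox_params(1280, 720)
print(r, new_w, new_h, pad_x, pad_y)  # 0.5 640 360 0.0 140.0
print(unletterbox_box((100, 240, 300, 440), r, pad_x, pad_y, 1280, 720))  # (200.0, 200.0, 600.0, 600.0)
```

Getting this mapping wrong shifts every box on screen, so it is worth testing against a few known frames before deployment.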

Both setting up YOLOv7 for industry-specific customization and its deployment on edge devices involve a profound understanding of the environment and a strategic approach to implementing the technological adjustments. These transformations not only empower businesses to leverage the full potential of YOLOv7 but also push the boundaries of what can be achieved with automated object detection systems.

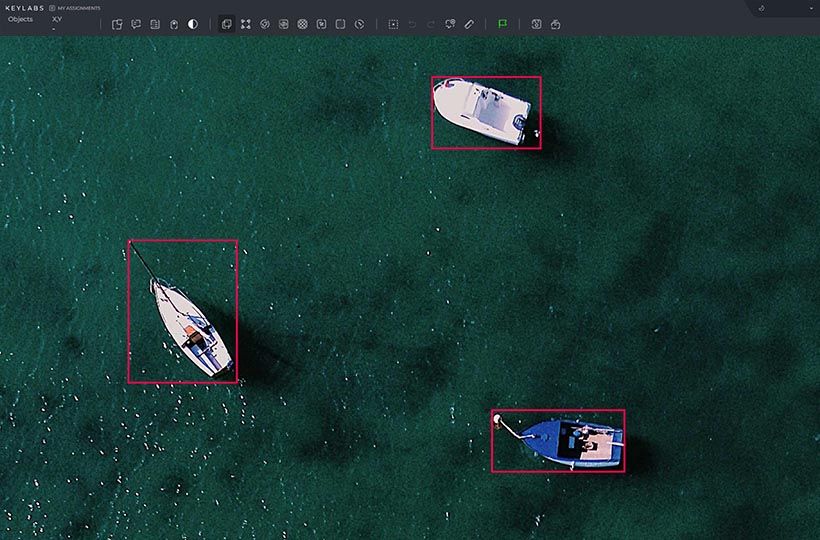

Advancements in Aerial Imagery Analysis with YOLOv7

Applying YOLOv7 to aerial imagery opens up a diverse range of applications centered on detecting objects from above. It enables more precise identification and cataloging of elements across large-scale landscapes, which is crucial for sectors such as agriculture and urban planning.

In aerial imagery, YOLOv7 has helped practitioners move past earlier limitations of drone-based monitoring and analysis. Key uses include detailed crop health assessment in agriculture, where YOLOv7 can detect issues that are hard to spot from ground level, and urban infrastructure planning, where it gives planners a clear bird's-eye view of sprawling cityscapes.

- Model adaptations for aerial imagery aim to recognize even very small objects seen from high altitude.

- Optimization techniques such as sparse convolution, explored in research adaptations, concentrate computation on the most informative regions and conserve resources.

- Fusing feature maps from multiple scales improves detection accuracy, which is critical when every detail counts in environmental surveillance and monitoring.
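A common practice for very small objects in large aerial images, not specific to YOLOv7, is tiling: the image is cut into overlapping windows that match the model's input size, each window is run through the detector, and the boxes are shifted back to full-image coordinates. The sketch below computes the windows and the coordinate shift. The 640-pixel tile, the 128-pixel overlap, and the function names are assumptions for illustration.

```python
from typing import List, Tuple

Window = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in full-image pixels


def tile_origins(length: int, tile: int, overlap: int) -> List[int]:
    """Start offsets along one axis so tiles of size `tile` cover [0, length) with overlap."""
    if length <= tile:
        return [0]
    step = tile - overlap
    starts = list(range(0, length - tile + 1, step))
    if starts[-1] + tile < length:
        starts.append(length - tile)  # last tile sits flush with the image edge
    return starts


def tiles(width: int, height: int, tile: int = 640, overlap: int = 128) -> List[Window]:
    """Overlapping windows covering a large aerial image, row by row."""
    return [(x, y, min(x + tile, width), min(y + tile, height))
            for y in tile_origins(height, tile, overlap)
            for x in tile_origins(width, tile, overlap)]


def to_global(box: Tuple[float, float, float, float], window: Window):
    """Shift an (x1, y1, x2, y2) box detected inside a tile into full-image coordinates."""
    x0, y0 = window[0], window[1]
    return (box[0] + x0, box[1] + y0, box[2] + x0, box[3] + y0)


print(tiles(1500, 640))  # [(0, 0, 640, 640), (512, 0, 1152, 640), (860, 0, 1500, 640)]
```

After merging the per-tile detections, running NMS across all of them removes duplicates from the overlapping regions.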

This capability drives data-driven strategies that improve operational efficiency across fields. In environmental surveillance, for instance, detecting objects from above lets YOLOv7-based systems monitor changes across expansive natural landscapes, which is crucial for conservation and disaster management.

Adapting YOLOv7 for drones also marks a shift toward more agile and responsive environmental monitoring systems. Because the model interprets imagery quickly, responses can be more timely, and automating routine inspection can reduce human error in critical monitoring tasks.

YOLOv7's Impact on Retail Analytics and Customer Behavior Tracking

As the retail industry continues to evolve, integrating advanced technologies such as YOLOv7 plays a pivotal role in enhancing both customer service and operational efficiency. The utility of this advanced object detection model goes beyond mere surveillance; it emerges as a core technology in shaping the future of retail through improved inventory management and in-store security enhancement.

Use of Object Detection in Inventory Management

YOLOv7 has advanced inventory management by providing accurate, near real-time data on stock levels and product placement. Retailers can monitor shelves precisely, reduce discrepancies, and align restocking more closely with consumer demand, minimizing both overstock and stockouts. This optimizes the supply chain and improves customer satisfaction by keeping products available.
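As a simple illustration of turning detections into stock data, the sketch below counts confident detections per product class and flags products whose count falls below a fraction of the expected number of facings, for example from a planogram. The detection format, the thresholds, and the function name are hypothetical; a production system would also need to account for occlusion and camera coverage.

```python
from collections import Counter
from typing import Dict, List, Tuple

Detection = Tuple[str, float]  # (class_name, confidence) from one shelf image


def low_stock(detections: List[Detection], expected: Dict[str, int],
              conf_thres: float = 0.5, min_fraction: float = 0.3) -> Dict[str, int]:
    """Return {product: visible_count} for products below min_fraction of expected facings."""
    # Ignore low-confidence detections so noise does not inflate the counts.
    counts = Counter(name for name, conf in detections if conf >= conf_thres)
    return {name: counts[name] for name, target in expected.items()
            if counts[name] < min_fraction * target}


dets = [("cola", 0.9)] * 2 + [("chips", 0.8)] * 9 + [("cola", 0.3)]
print(low_stock(dets, {"cola": 10, "chips": 10, "water": 5}))  # {'cola': 2, 'water': 0}
```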

Enhancing In-Store Security with Real-Time Detection

Enhancing in-store security is another critical application of YOLOv7. Security systems built on it can quickly detect potentially unauthorized activity or suspicious behavior and alert staff in real time. By integrating YOLOv7 into their security systems, retailers can deter theft and provide a safer shopping environment for both customers and staff.

| Feature | Impact on Retail | Example Application |
|---|---|---|
| Real-Time Object Detection | Reduces shoplifting by identifying suspicious actions as they occur | Monitoring entry and exit points |
| Accurate Product Tracking | Improves inventory accuracy, reduces the need for manual checks | Automated shelf scanning |
| Behavioral Analytics | Enhances retail analytics by providing insights into customer shopping behaviors | Analyzing customer movement and interaction with products |

By leveraging YOLOv7, retailers not only embrace digital transformation but also enhance operational efficiencies and customer experiences. As this technology continues to evolve, its impact on retail analytics, inventory management, and in-store security will undoubtedly expand, marking a new era in the retail sector.

Scaling YOLOv7 for Large-Scale Applications

The impressive strides in object detection using YOLOv7 are not limited to small-scale implementations. The real challenge and measure of its efficiency come into play when scaling YOLOv7 for extensive, large-scale applications such as city-wide surveillance and global retail chains. Integrating YOLOv7's advanced features into large systems requires an understanding of both its modular design and strategies for effectively managing datasets.

Leveraging Modularity and Readability in Implementation

The modularity in implementation of YOLOv7 makes it particularly scalable and adaptable. This design allows for easier adjustments and customization of the model to fit specific application needs without a complete overhaul of the system. For instance, adjustments can be made in the layer configurations or feature extractors based on the requirements of the real-world environment in which they operate. Such flexibility ensures that YOLOv7 remains robust across various domains and use cases.

Strategies for Managing Large and Diverse Datasets

Effective dataset management is pivotal when deploying YOLOv7 at scale. Highly diverse data calls for strategies that cover a wide range of scenarios and object types. Data augmentation and multi-scale feature fusion through feature pyramid networks help the model generalize across varied datasets. In addition, weight decay must be chosen carefully to preserve accuracy and prevent overfitting, so that detection stays reliable and consistent across applications.
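Weight decay also interacts with batch size. YOLOv5-style training scripts, including YOLOv7's `train.py` as we understand it, accumulate gradients up to a nominal batch size of 64 and rescale weight decay to match the effective batch. The sketch below reproduces that arithmetic in isolation; the function name and the default decay of 0.0005 are illustrative assumptions.

```python
from typing import Tuple


def accumulation_and_decay(batch_size: int, weight_decay: float = 0.0005,
                           nominal_batch: int = 64) -> Tuple[int, float]:
    """Steps to accumulate gradients over, and weight decay rescaled to the effective batch."""
    # Accumulate enough mini-batches to approximate the nominal batch size (at least one).
    accumulate = max(round(nominal_batch / batch_size), 1)
    # The effective batch is batch_size * accumulate; scale decay in proportion to it.
    scaled_decay = weight_decay * batch_size * accumulate / nominal_batch
    return accumulate, scaled_decay


for bs in (16, 24, 128):
    print(bs, accumulation_and_decay(bs))
```

With a batch of 16, gradients are accumulated over 4 steps and the decay stays at 0.0005; with a batch of 128, no accumulation is needed and the decay doubles to 0.001. This keeps regularization roughly consistent when the same recipe runs on GPUs with different memory.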

The base model variants trade speed for accuracy roughly as follows:

| Configuration | Relative inference speed | Relative accuracy |
|---|---|---|
| YOLOv7-tiny (edge GPU) | Fastest | Moderate |
| YOLOv7 (normal GPU) | Fast | High |
| YOLOv7-W6 (cloud GPU) | Slowest of the three | Highest of the three |
| Custom YOLOv7 | Varies | Depends on data and configuration |

Preparing Data for YOLOv7: An In-Depth Guide

Effective data preparation for YOLOv7 is crucial for the successful application of this advanced object detection model. This involves careful dataset loading, understanding various annotation formats, and organizing the data correctly to enhance the model's learning and performance accuracy.

Dataset loading is one of the first steps in data preparation for YOLOv7. It includes selecting a relevant dataset that is representative of the real-world scenarios where the model will be deployed. For instance, if YOLOv7 is to be used for road sign detection, the dataset must have a diverse set of images featuring different road signs under various environmental conditions.

Using the correct annotation format is essential for training YOLOv7. Each image has a matching .txt file, and each line in that file describes one bounding box: the zero-based class ID followed by the box's center x, center y, width, and height, all normalized to the range 0 to 1 by the image width and height.
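A quick way to sanity-check labels is to convert a line back to pixel corners and draw it on the image. A minimal sketch, with a hypothetical function name:

```python
from typing import Tuple


def yolo_line_to_pixels(line: str, img_w: int, img_h: int) -> Tuple[int, float, float, float, float]:
    """Parse 'class_id x_center y_center width height' (normalized) into pixel corners."""
    cls_str, xc_str, yc_str, w_str, h_str = line.split()
    xc, bw = float(xc_str) * img_w, float(w_str) * img_w   # horizontal values scale by width
    yc, bh = float(yc_str) * img_h, float(h_str) * img_h   # vertical values scale by height
    return int(cls_str), xc - bw / 2, yc - bh / 2, xc + bw / 2, yc + bh / 2


print(yolo_line_to_pixels("2 0.5 0.5 0.25 0.5", 640, 480))  # (2, 240.0, 120.0, 400.0, 360.0)
```

A box that lands in the wrong place usually means the coordinates were stored as corners instead of centers, or normalized by the wrong dimension.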

Including negative (background) images, which contain none of the target objects, can improve the model's robustness by teaching YOLOv7 what not to detect. This helps reduce false positives and makes the model more reliable in real-world applications.
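YOLO-style data loaders look for a label file whose name matches each image, and an empty label file marks an image as background. The sketch below creates empty label files for negative images without overwriting existing annotations. The separate `labels` directory and the file names are assumptions for illustration.

```python
from pathlib import Path
from typing import Iterable


def write_background_labels(label_dir: Path, image_names: Iterable[str]) -> int:
    """Create an empty YOLO label file for each background image; return how many were created."""
    label_dir.mkdir(parents=True, exist_ok=True)
    created = 0
    for name in image_names:
        label_path = label_dir / (Path(name).stem + ".txt")
        if not label_path.exists():  # never overwrite real annotations
            label_path.touch()
            created += 1
    return created
```

For example, `write_background_labels(Path("dataset/labels/train"), ["empty_aisle.jpg"])` would create `empty_aisle.txt` with no lines in it.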

Let's explore how annotation formats translate across different datasets:

| Annotation format | Structure | Native to YOLOv7 | Conversion needed |
|---|---|---|---|
| PASCAL VOC XML | One XML file per image with class names and pixel corner coordinates (xmin, ymin, xmax, ymax) | No | Yes, to YOLOv7 .txt |
| YOLOv7 format | One .txt file per image; one line per box with class ID and normalized center x, center y, width, height | Yes | No |
| COCO JSON | One JSON file per dataset split; boxes stored as [x, y, width, height] in pixels, plus segmentation and other metadata | No | Yes, to YOLOv7 .txt |
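For COCO, the main differences are that boxes use a top-left corner plus size in pixels and that category IDs need not be contiguous. A minimal sketch, assuming the annotation file has already been loaded with `json.load`; remapping category IDs in sorted order is one reasonable convention, and whatever order you choose must match the class names listed in your dataset YAML. The function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def coco_to_yolo(coco: dict) -> Dict[str, List[str]]:
    """Map each image file name to its YOLO label lines."""
    # COCO category IDs can have gaps, so remap them to 0..N-1 in sorted order.
    cat_ids = sorted(c["id"] for c in coco["categories"])
    cat_to_cls = {cid: i for i, cid in enumerate(cat_ids)}
    images = {img["id"]: img for img in coco["images"]}
    labels: Dict[str, List[str]] = defaultdict(list)
    for ann in coco["annotations"]:
        if ann.get("iscrowd", 0):
            continue  # skip crowd regions, which mark groups rather than single objects
        img = images[ann["image_id"]]
        x, y, w, h = ann["bbox"]  # top-left x, top-left y, width, height in pixels
        xc = (x + w / 2) / img["width"]
        yc = (y + h / 2) / img["height"]
        labels[img["file_name"]].append(
            f"{cat_to_cls[ann['category_id']]} {xc:.6f} {yc:.6f} "
            f"{w / img['width']:.6f} {h / img['height']:.6f}")
    return dict(labels)
```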

A routine that converts PASCAL VOC XML annotations to the YOLOv7 format is a typical example of the adaptation data preparation requires. Beyond mapping object class names to IDs, it must convert corner coordinates to box centers and sizes and normalize them by the image dimensions.
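A minimal sketch of such a converter using Python's standard `xml.etree.ElementTree`. The function name and the `class_to_id` mapping are hypothetical; objects whose class is not in the mapping are skipped.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def voc_to_yolo(xml_text: str, class_to_id: Dict[str, int]) -> List[str]:
    """Convert one PASCAL VOC annotation (XML string) into YOLO label lines."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)
    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text.strip()
        if name not in class_to_id:
            continue  # skip classes outside the training set
        bb = obj.find("bndbox")
        x1, y1, x2, y2 = (float(bb.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax"))
        # VOC stores pixel corners; YOLO wants the normalized center, width, and height.
        xc, yc = (x1 + x2) / 2 / img_w, (y1 + y2) / 2 / img_h
        w, h = (x2 - x1) / img_w, (y2 - y1) / img_h
        lines.append(f"{class_to_id[name]} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}")
    return lines
```

Writing the returned lines to a .txt file named after the image completes the conversion for that image.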

You can easily use Keylabs for preparing and annotating all your datasets for future YOLOv7 training.

Summary

In the dynamic field of computer vision, YOLOv7 has marked a clear leap forward, and published applications report strong results across various domains. Reported results include a mean average precision (mAP) of 91.1% in autonomous rack inspection and, for tea leaf disease identification, a precision of 96.7% and an accuracy of 97.3%. These figures underline the robustness of the architecture when it is trained on task-specific data.

Its versatility also shows in agriculture: accurate tea leaf disease detection matters in regions such as Bangladesh, with its 162 tea gardens. In animal health, YOLOv7 reached an average precision of 0.944 in identifying ringworm infections in camels, supporting livestock welfare. On the edge-computing side, a YOLOv7 implementation running on a Raspberry Pi detected pallet racking damage with an mAP of 92.7%, demonstrating the model's compatibility with low-power hardware.

FAQ

What sets YOLOv7 apart from its predecessors in terms of architecture?

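Architecturally, YOLOv7 introduces extended efficient layer aggregation networks (E-ELAN), a compound scaling method for concatenation-based models, and planned re-parameterized convolution, and its training recipe adds an auxiliary head with coarse-to-fine lead-guided label assignment. The repository complements these with multi-GPU training, a variety of data augmentation techniques, multiple learning rate schedulers, and a thoughtfully designed training loop. Together, these changes significantly improve object detection performance.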

How is YOLOv7 optimized for real-world scenarios?

YOLOv7 can be fine-tuned on custom datasets, so it handles object shapes, sizes, and classes beyond the COCO categories its released weights were trained on. This adaptability lets it be customized to specific industry needs, from autonomous vehicles and retail analytics to medical imaging and agricultural monitoring.

What are the practical applications of YOLOv7 in different sectors?

In the security sector, YOLOv7 enhances surveillance systems for safety and monitoring. In transportation, it aids vehicle recognition and traffic flow optimization. In healthcare, it can help accelerate the diagnosis of medical conditions and improve patient care through precise detection and, with its instance segmentation variant, segmentation.

How does YOLOv7 contribute to the development of autonomous vehicles?

YOLOv7 plays a crucial role in autonomous vehicle technology by providing fast and accurate real-time object detection, which is vital for recognizing pedestrians, traffic signs, and other vehicles, thus supporting safety and reliability on the road.

Can YOLOv7 be deployed on edge devices? What are the benefits?

Yes, YOLOv7 can be deployed on edge devices, enabling real-time processing in situations where quick decision-making is essential, such as automated checkouts in retail and machine monitoring in manufacturing environments.

How does YOLOv7 enhance aerial imagery analysis?

YOLOv7 accurately detects and classifies objects from above, which assists in agricultural monitoring, urban planning, and environmental surveillance. It’s capable of recognizing objects of various scales from aerial perspectives.

What impact does YOLOv7 have on the retail industry?

YOLOv7 optimizes inventory management by accurately tracking products on shelves and aids in automating stock replenishment. It also enhances in-store security by monitoring customer behavior and detecting potential security breaches in real-time.

How can YOLOv7 be scaled for large-scale applications?

Through its modularity and scalability in implementation, YOLOv7 can manage large and diverse datasets crucial for city-wide surveillance systems, retail chain management, and other large-scale applications.

What are the advantages of YOLOv7 over conventional CNN and R-CNN models?

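YOLOv7 is itself a convolutional neural network, but unlike sliding-window CNN pipelines or two-stage R-CNN detectors, which tend to be computationally intensive, it predicts all boxes in a single forward pass. This makes it fast enough for real-time object detection while keeping a strong balance of precision and efficiency.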

How is data prepared for YOLOv7 to ensure optimal model performance?

Preparing data for YOLOv7 involves selecting an appropriate dataset, understanding the required annotation formats, incorporating negative images, and employing dataset adaptors for efficient model training and pre-processing.