Building an Image Classifier for Self-Driving Cars

At Tesla's Autonomy Day in April 2019, Elon Musk declared, "Anyone relying on LiDAR is doomed." The remark sharpened a long-running debate in the autonomous vehicle industry over which sensors self-driving cars should depend on. Whatever the outcome of that debate, computer vision is central to the future of transportation, and image classifiers are one of its core building blocks.

Image classification lies at the core of autonomous vehicle technology. It lets cars perceive and interpret their environment and make decisions within fractions of a second for safe navigation. The Cityscapes dataset, with 5,000 finely annotated and 20,000 coarsely annotated street-scene images from 50 cities, is a valuable resource for training these systems. Using convolutional neural networks (CNNs), self-driving cars can detect and categorize objects such as pedestrians, vehicles, and traffic signs in real time.

Building image classifiers for self-driving cars is a complex task that combines deep learning algorithms with capable hardware. Google Colab, a hosted notebook environment with access to GPUs and TPUs, is a popular choice among researchers and developers for prototyping. The work involves training models that interpret complex visual data from multiple cameras, some of which can capture objects up to 200 meters away.

While cameras serve as the "eyes" of a self-driving car, other sensors complement them. Radar uses radio waves to measure the distance and relative speed of objects, which helps predict how they will move, and it keeps working in adverse weather. Combining these sensors with sophisticated algorithms forms the basis of a self-driving car's decision-making.

Exploring this topic further, you'll see how image recognition is transforming the automotive sector, and how advances in computer vision and artificial intelligence connect raw visual data to intelligent decision-making.

Key Takeaways

- Image classifiers are vital for detecting objects in self-driving cars

- CNNs analyze visual data to identify pedestrians, vehicles, and signs

- The Cityscapes dataset is a widely used resource for training autonomous vehicle models

- Google Colab provides accessible GPU and TPU resources for developing machine learning models

- Combining cameras, RADAR, and AI algorithms facilitates safe autonomous navigation

- Python and TensorFlow are pivotal tools in constructing image classifiers for self-driving cars

Introduction to Image Classification in Autonomous Vehicles

Image classification is essential for self-driving technology. It allows autonomous vehicles to understand their environment, which keeps them safe on the road. Deep learning has transformed object detection, making it more accurate and efficient.

Computer Vision in Self-Driving Technology

Computer vision is the core of autonomous vehicles' perception. It employs sophisticated algorithms to analyze visual data from various sources. This technology equips self-driving cars with the ability to recognize and categorize objects, like pedestrians, vehicles, and traffic signs.

Importance of Accurate Object Detection

Accurate object detection is crucial for autonomous vehicles to navigate safely and make sound decisions. It enables them to react correctly to different road situations. For example, correctly identifying a pedestrian versus a traffic sign is critical for safe maneuvers.

Challenges in Dynamic Environments

Dynamic road environments pose significant challenges for image classification systems. Factors such as varying lighting, weather, and complex urban settings complicate the task. To overcome these hurdles, robust and flexible algorithms are necessary.

- Machine learning techniques help identify shapes and objects

- Deep learning outperforms traditional algorithms in complex scenarios

- Convolutional Neural Networks (CNNs) excel at image analysis for autonomous vehicles

By tackling these challenges, we can improve road safety and make self-driving technology more reliable.

Understanding the Fundamentals of Image Classifiers

Image classifiers are essential to computer vision for self-driving cars. These systems use machine learning to interpret visual data, allowing vehicles to understand their environment. At their core, they rely on neural networks to analyze digital images and pinpoint objects such as pedestrians, traffic signs, and other vehicles.

Image classification sorts visual data into categories. It comes in several types:

- Binary: Sorts images into two categories

- Multiclass: Categorizes into three or more classes

- Multilabel: Assigns multiple labels to a single image

- Hierarchical: Organizes classes in a structure based on similarities
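The difference between binary, multiclass, and multilabel classification shows up mostly in how raw model scores (logits) become predictions. The following is a minimal pure-Python sketch, assuming a hypothetical three-class label set and made-up logits. Softmax picks exactly one class, while independent sigmoids with a threshold allow several labels (or none) per image.

```python
import math

CLASSES = ["pedestrian", "vehicle", "traffic_sign"]  # hypothetical label set


def softmax(logits):
    """Multiclass: turn scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    """Binary / multilabel: an independent probability for each score."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_binary(logit, threshold=0.5):
    # One score, one yes/no decision (e.g. "pedestrian present?")
    return sigmoid(logit) >= threshold


def predict_multiclass(logits):
    # Exactly one winning class per image
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best]


def predict_multilabel(logits, threshold=0.5):
    # Every class whose own probability clears the threshold
    return [c for c, z in zip(CLASSES, logits) if sigmoid(z) >= threshold]


logits = [2.0, 1.5, -3.0]  # made-up scores for one image
print(predict_multiclass(logits))  # pedestrian
print(predict_multilabel(logits))  # ['pedestrian', 'vehicle']
```

Hierarchical classification is not shown; one common approach chains classifiers like these, for example first "vehicle vs. non-vehicle" and then the vehicle type.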

Image processing techniques such as resizing, cropping, and noise reduction are vital for data preparation. Feature extraction, a pivotal step, identifies the visual patterns that distinguish one class from another.

Convolutional neural networks (CNNs) are the leading approach to image classification. On some benchmarks, such as face verification and ImageNet classification, they have matched or exceeded human-level accuracy. As autonomous vehicles evolve, edge AI is gaining traction, moving machine learning inference onto on-board devices for better real-time performance.

| Classification Type | Description | Application |
|---|---|---|
| Binary | Two distinct categories | Tumor classification |
| Multiclass | Three or more classes | Medical diagnosis |
| Multilabel | Multiple labels per image | Scene understanding |
| Hierarchical | Structured class organization | Efficient knowledge transfer |

Deep Learning Architectures for Image Classification

Deep learning has transformed how self-driving cars classify images. These systems employ advanced algorithms to interpret visual data. This allows vehicles to navigate complex environments safely.

Convolutional Neural Networks (CNNs)

CNNs are essential for image classification in autonomous vehicles. Their stacked convolutional layers learn features hierarchically, from basic edges in early layers to intricate patterns such as wheels or faces in deeper ones. This lets CNNs detect objects accurately, which is crucial for understanding the vehicle's surroundings.
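To make "learning features from edges" concrete, here is a minimal pure-Python sketch of the operation a convolutional layer computes. The kernel is hand-set for illustration as a Sobel-style vertical-edge detector. In a real CNN these weights are learned during training. Like most deep learning libraries, the function actually computes cross-correlation, which is what CNN layers call convolution.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel over the image, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Keep positive responses, zero out the rest (the usual CNN activation)."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hand-set vertical-edge kernel; a trained CNN learns such weights itself
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# 5x5 grayscale patch: dark on the left, bright on the right
patch = [[0, 0, 1, 1, 1] for _ in range(5)]

print(relu(conv2d(patch, VERTICAL_EDGE)))
# [[4.0, 4.0, 0.0], [4.0, 4.0, 0.0], [4.0, 4.0, 0.0]]
```

The kernel fires where intensity changes from dark to bright and returns zero over the uniform region. Deeper layers combine many such responses into more complex patterns.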

Transfer Learning and Pre-trained Models

Transfer learning starts from a model pre-trained on a large dataset, such as ImageNet, and fine-tunes it for a specific task. This lets self-driving car systems reuse features learned from vast amounts of data. It reduces development time and often improves accuracy, especially when labeled driving data is limited.

State-of-the-art Architectures

ResNet, Inception, and EfficientNet are widely used, state-of-the-art image classification architectures. Each introduced a design idea, summarized below, that continues to push the limits of autonomous vehicle vision systems.

| Architecture | Key Feature | Application in Self-Driving Cars |
|---|---|---|
| ResNet | Deep networks with residual learning | Accurate object recognition at various distances |
| Inception | Efficient use of computing resources | Real-time processing of multiple visual inputs |
| EfficientNet | Balanced network scaling | Optimized performance on edge devices |
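The key ideas behind the ResNet and EfficientNet rows can each be written in one line. In the first equation, x is a block's input and F is the residual function computed by its layers with weights W_i. In the second, d, w, and r are the depth, width, and input-resolution multipliers, and φ is a user-chosen scaling coefficient.

```latex
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}

d = \alpha^{\phi}, \quad w = \beta^{\phi}, \quad r = \gamma^{\phi}
\quad \text{subject to} \quad \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2,
\quad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1
```

The identity shortcut passes x through unchanged, so the stacked layers only need to learn a correction to it. This makes very deep networks easier to train. In the EfficientNet paper, a small grid search gave α = 1.2, β = 1.1, γ = 1.15, so α·β²·γ² ≈ 1.92. Each unit increase of φ therefore roughly doubles the computation.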

These deep learning architectures are vital for enhancing self-driving cars' capabilities. They enable them to interpret complex visual scenes with growing accuracy and efficiency.

Data Collection and Preparation for Self-Driving Car Datasets

Dataset preparation is vital for building effective image classification systems for autonomous vehicles. The Cityscapes dataset is a key resource for training them: it provides urban street scenes with pixel-level annotations for semantic and instance segmentation. Computer vision practitioners use it to develop models that hold up in real-world scenarios.

First, download the dataset (Cityscapes requires registering an account on its website) and explore the images. Assess the diversity of scenes, lighting conditions, and objects it contains, because the dataset's quality and variety are crucial for a dependable image classifier.

Image annotation is a pivotal step in dataset preparation. It involves marking objects such as vehicles, pedestrians, and traffic signs in each image. Cityscapes ships with these annotations, but any images you add must be labeled with the same precision and class definitions so that your model can identify and categorize objects reliably across scenarios.

Image preprocessing is also essential. Resizing and normalizing images gives the model consistent inputs, and augmentation creates varied training examples that improve the classifier's generalization and its resilience to real-world variations.
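The following is a minimal pure-Python sketch of the resizing and normalization steps. It assumes a single-channel image stored as a list of rows. The target size, mean, and standard deviation are placeholders, not Cityscapes statistics. Real pipelines apply the same steps to 2048×1024 RGB frames using a library such as Pillow or tf.image.

```python
def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of a 2D grayscale image (list of rows)."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]


def normalize(image, mean, std):
    """Scale 8-bit pixels to [0, 1], then standardize with dataset statistics."""
    return [[(p / 255.0 - mean) / std for p in row] for row in image]


def preprocess(image, size, mean, std):
    # Resize first so normalization runs on fewer pixels
    return normalize(resize_nearest(image, *size), mean, std)


raw = [
    [0, 0, 255, 255, 0, 0, 255, 255],
    [0, 0, 255, 255, 0, 0, 255, 255],
    [255, 255, 0, 0, 255, 255, 0, 0],
    [255, 255, 0, 0, 255, 255, 0, 0],
]
# Placeholder target size and statistics, not real Cityscapes values
print(preprocess(raw, size=(2, 4), mean=0.5, std=0.25))
# [[-2.0, 2.0, -2.0, 2.0], [2.0, -2.0, 2.0, -2.0]]
```

Nearest-neighbour resizing is also the right choice for segmentation label masks, because interpolating between class IDs would invent labels that do not exist. Masks are resized but never normalized.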

- Download and explore the Cityscapes dataset

- Perform image annotation for object detection

- Preprocess images for optimal model training

- Apply data augmentation techniques

The success of your self-driving car's computer vision system relies on the dataset's quality and diversity. By dedicating time and effort to thorough dataset preparation, you lay a solid foundation for developing precise and dependable image classifiers for autonomous vehicles.

Image Classifier for Self-Driving Cars: Building the Model

Creating an image classifier for self-driving cars is a complex task. It demands a deep understanding of several key elements. The success of your model depends on selecting the right CNN architecture, employing effective training strategies, and using data augmentation techniques.

Selecting the Appropriate CNN Architecture

Choosing the right convolutional neural network (CNN) architecture is essential. Popular choices include ResNet and EfficientNet, both known for strong performance in computer vision tasks. Loosely inspired by the brain, these networks pass inputs through many stacked hidden layers. Deeper networks can learn more complex features, but very deep plain networks become harder to train. ResNet's residual connections were introduced to address exactly this problem.

Training Strategies and Hyperparameter Tuning

Model training is time-consuming, often taking up to 50% of total development time. Hyperparameter tuning is crucial for optimizing performance. It involves adjusting parameters such as learning rate, batch size, and number of epochs, then comparing the results on a held-out validation set.
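Hyperparameter tuning can be as simple as an exhaustive grid search. In this sketch, `fake_train_and_evaluate` is a hypothetical stand-in for a full training run that returns a validation score. In practice each call would train a model, which is why grids are kept small. The grid values are illustrative, not recommendations.

```python
import itertools


def grid_search(train_and_evaluate, grid):
    """Try every combination in `grid`; return (best_score, best_params)."""
    best_score, best_params = float("-inf"), None
    keys = sorted(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = train_and_evaluate(**params)  # e.g. validation accuracy or mIoU
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params


# Hypothetical stand-in for training: it simply peaks at lr=1e-3, batch_size=32
def fake_train_and_evaluate(learning_rate, batch_size, epochs):
    return -abs(learning_rate - 1e-3) * 100 - abs(batch_size - 32) / 32 + epochs * 0.001


grid = {
    "learning_rate": [1e-2, 1e-3, 1e-4],
    "batch_size": [16, 32, 64],
    "epochs": [10, 20],
}
score, params = grid_search(fake_train_and_evaluate, grid)
print(params)  # {'batch_size': 32, 'epochs': 20, 'learning_rate': 0.001}
```

Random search and Bayesian optimization are common alternatives when each training run is expensive.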

Implementing Data Augmentation Techniques

Data augmentation is vital for improving your model's ability to generalize. This technique involves creating variations of your training images through transformations like rotation, flipping, and color adjustments. It helps your model learn to recognize objects under different conditions, essential for the dynamic environment of self-driving cars.
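The following is a minimal pure-Python sketch of two common augmentations, a random horizontal flip and a brightness change. It assumes a grayscale image and a per-pixel label mask stored as lists of rows, with hypothetical class IDs. Libraries such as tf.image provide equivalent operations, for example `tf.image.flip_left_right`.

```python
import random


def hflip(grid):
    """Mirror a 2D grid left to right."""
    return [list(reversed(row)) for row in grid]


def adjust_brightness(image, factor):
    """Scale 8-bit intensities and clip to [0, 255]."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image, mask, rng, flip_prob=0.5, brightness_range=(0.8, 1.2)):
    """Randomly flip and brighten an image.

    Geometric changes (the flip) are applied to the label mask too, so every
    pixel keeps its label; photometric changes (brightness) touch the image only.
    """
    if rng.random() < flip_prob:
        image, mask = hflip(image), hflip(mask)
    factor = rng.uniform(*brightness_range)
    return adjust_brightness(image, factor), mask


rng = random.Random(0)  # fixed seed so the augmentation is reproducible
image = [[10, 200], [30, 250]]
mask = [[0, 1], [0, 1]]  # hypothetical class IDs: 0 = road, 1 = car
aug_image, aug_mask = augment(image, mask, rng)
print(aug_image, aug_mask)
```

Vertical flips are usually avoided for road scenes, since the sky never appears below the road. Horizontal flips also mirror text and turn arrows on signs, so they may need to be disabled for sign classification.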

| Model Building Stage | Share of Development Time | Key Activities |
|---|---|---|
| Data Preparation | 30% | Loading, preprocessing, creating validation set |
| Architecture Definition | 10% | Selecting CNN type, defining layers |
| Model Training | 50% | Hyperparameter tuning, data augmentation |
| Performance Estimation | 10% | Testing, prediction, evaluation |

By focusing on these aspects, you can build a robust image classifier capable of handling the complex visual tasks that self-driving cars require. The goal is a model that not only performs well in controlled environments but also adapts to the unpredictable nature of real-world driving.

Object Detection and Segmentation in Autonomous Driving

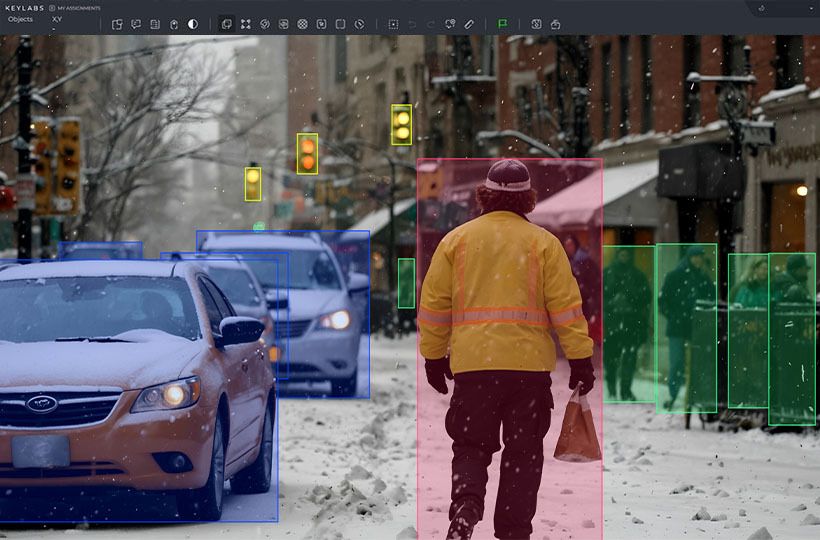

Object detection and image segmentation are key to autonomous driving systems. These techniques enable self-driving cars to safely navigate by identifying and tracking various road objects.

Image segmentation divides an image into regions by labeling its pixels, which makes analysis easier for autonomous vehicles. It aids in recognizing lanes, traffic signs, and pedestrians. There are two primary types:

- Semantic Segmentation: Assigns every pixel a class from a predefined set (road, car, pedestrian) without separating individual objects of the same class

- Instance Segmentation: Also distinguishes individual objects, so two adjacent pedestrians receive separate masks
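Segmentation quality is commonly measured with intersection over union (IoU) per class, averaged into a mean IoU. The Cityscapes benchmark reports IoU for its pixel-level task. This pure-Python sketch assumes that predicted and ground-truth masks have equal shape and hold integer class IDs. Unlike real benchmarks, it does not exclude "void" pixels.

```python
def per_class_iou(pred, target, num_classes):
    """Intersection-over-union for each class over two label masks of equal shape."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p == t:
                # Pixel is in both the predicted and true region of class p
                inter[p] += 1
                union[p] += 1
            else:
                # Pixel counts toward the union of both classes involved
                union[p] += 1
                union[t] += 1
    # A class absent from both masks has no defined IoU
    return [i / u if u else None for i, u in zip(inter, union)]


def mean_iou(ious):
    """Average IoU over classes that actually occur."""
    valid = [v for v in ious if v is not None]
    return sum(valid) / len(valid)


# Hypothetical 2x3 masks: 0 = road, 1 = car, 2 = pedestrian (absent here)
target = [[0, 0, 1], [0, 1, 1]]
pred = [[0, 1, 1], [0, 1, 1]]
ious = per_class_iou(pred, target, num_classes=3)
print(ious)                      # [0.6666666666666666, 0.75, None]
print(round(mean_iou(ious), 3))  # 0.708
```

Class 2 never appears in either mask, so its IoU is undefined and it is left out of the mean.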

Object detection locates objects in images or video, typically with bounding boxes, and identifies their class. Spotting and naming objects in real time is essential for safe navigation. CNNs have greatly improved object detection by learning features directly from image data instead of relying on hand-crafted ones.
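Detectors typically output several overlapping candidate boxes for the same object. A common post-processing step, greedy non-maximum suppression (NMS), keeps only the best of them. This minimal pure-Python sketch represents boxes as (x1, y1, x2, y2) pixel coordinates and uses made-up detections and scores. The 0.5 IoU threshold is a typical but arbitrary choice.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if box_iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Made-up detections: two overlapping guesses for one pedestrian, one for a car
boxes = [(100, 50, 140, 150), (105, 55, 145, 155), (300, 80, 420, 160)]
scores = [0.92, 0.85, 0.78]
print(non_max_suppression(boxes, scores))  # [0, 2]
```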

In autonomous driving, object detection software integrates with sensor data from cameras, radar, and ultrasonic sensors. This fusion accurately distinguishes between pedestrians, vehicles, and obstacles for precise tracking and classification.

| Sensor | Functionality |
|---|---|
| Cameras | High-resolution visual data for accurate object recognition |
| Radar | Effective in adverse weather conditions |
| Ultrasonic Sensors | Efficient for nearby object detection |

Continuous research in object detection and image segmentation is crucial for enhancing autonomous vehicle systems. Advancements in algorithms and computer vision technology will lead to safer and more efficient self-driving cars.

Real-Time Processing and Inference Optimization

Self-driving cars need to make decisions quickly to stay safe, which requires real-time processing. Engineers achieve this through edge computing and model optimization.

Hardware Acceleration and Edge Computing

Edge computing moves processing closer to where data originates. In a self-driving car, this means running models on board rather than in the cloud, which shortens response times. For instance, in NVIDIA's end-to-end driving research, a DRIVE PX computer ran the steering network at 30 frames per second, fast enough to react promptly to road conditions.
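At 30 frames per second, the whole perception pipeline must finish each frame in about 33 ms. The following sketch works through that budget. It also shows how far the car moves between frames, assuming an illustrative speed of 100 km/h.

```python
def frame_budget_ms(fps):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def distance_per_frame_m(speed_kmh, fps):
    """Distance the car travels between two consecutive frames, in metres."""
    return (speed_kmh / 3.6) / fps  # km/h -> m/s, then per frame


print(round(frame_budget_ms(30), 1))            # 33.3
print(round(distance_per_frame_m(100, 30), 2))  # 0.93
```

At highway speed the car travels almost a metre per frame. This is one reason latency matters as much as accuracy.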

Model Compression Techniques

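Compression is vital for running complex models on limited on-board hardware. Common techniques include pruning, quantization, and knowledge distillation. Architecture choice matters too. Because convolutional layers share weights across the image, CNNs need far fewer parameters than fully connected networks with the same input size. NVIDIA's end-to-end driving network, for example, has 9 layers and about 27 million connections but only around 250,000 parameters, a design aimed at both speed and accuracy.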
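Quantization is one common compression technique. Storing weights as 8-bit integers instead of 32-bit floats cuts their memory by 4× and enables fast integer arithmetic on many accelerators. This is a minimal sketch of symmetric per-tensor int8 quantization on a made-up weight list. Production toolchains such as TensorFlow Lite also handle activations, calibration, and per-channel scales.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: float weights -> int8 values plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clip to the int8 range [-127, 127]
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0]  # made-up layer weights
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 100]
```

Each dequantized weight is off by at most half a quantization step. Many networks tolerate this error with little loss in accuracy.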

Balancing Accuracy and Speed

Balancing accuracy against speed is crucial. The LSFM P model is reported to process 30 frames per second across various datasets while keeping accuracy at a reasonable level. This kind of balance supports dependable performance in real-world driving.

| Model | Speed (FPS) | Accuracy (qualitative) |
|---|---|---|
| LSFM P | 30 | Reasonable |
| Traditional Detectors | <30 | High |

By focusing on these areas, self-driving cars can process information swiftly and accurately. This leads to safer autonomous driving experiences.

Integration with Sensor Fusion Systems

Self-driving cars use sensor fusion to get a full view of their surroundings. This technology blends data from cameras, LiDAR, and radar. By combining image classifiers with these systems, autonomous vehicles understand their environment more accurately.

Sensor fusion boosts the reliability of self-driving cars by checking information from different sensors against each other. This method cuts down on false alarms and sharpens object detection. For instance, cameras offer detailed visual data, while radar works well in bad weather. Merging these sensor inputs forms a stronger perception system.
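One simple way to combine redundant measurements is inverse-variance weighting. For independent Gaussian noise, this is equivalent to a single Kalman filter update. It is used here as an illustration, not as the method any particular vehicle's fusion stack uses, and the sensor readings and variances are hypothetical.

```python
def fuse_estimates(measurements):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    More precise sensors (lower variance) get proportionally more weight, and
    the fused variance is smaller than any single input's.
    """
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical distance-to-car readings in metres: (estimate, variance)
camera = (42.0, 4.0)  # camera depth estimate: noisier at range
radar = (40.0, 1.0)   # radar ranging: tighter
print(fuse_estimates([camera, radar]))  # (40.4, 0.8)
```

The fused estimate sits closer to the more precise radar reading, and its variance (0.8) is lower than either sensor's alone.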

Integrating multiple data sources in autonomous vehicles brings many advantages:

- Longer perception range for spotting hazards early

- Better object classification and tracking

- Improved performance in tough weather

- Fewer false positives in detecting objects

The automotive sector sees sensor fusion as key for advanced driver assistance systems (ADAS) and full autonomy. As self-driving tech advances, combining various sensor data is vital for reaching higher automation levels.

| Sensor Type | Strengths | Limitations |
|---|---|---|
| Camera | High-resolution visual information | Affected by poor lighting and weather |
| LiDAR | Precise 3D mapping | Limited range in adverse weather |
| Radar | Effective in poor visibility | Lower resolution than cameras |
| Ultrasonic | Short-range object detection | Limited range and resolution |

Through sensor fusion, autonomous vehicles can handle complex situations better, moving us closer to a future of safer, more efficient transport.

Ethical Considerations and Safety Implications

Self-driving cars are becoming a reality, bringing AI ethics and autonomous vehicle safety into the spotlight. The development of image classifiers for these vehicles raises critical ethical questions and safety concerns. These issues require our attention and careful consideration.

Decision-making algorithms in autonomous vehicles face significant challenges. For example, the Trolley Problem, a hypothetical scenario involving fatal outcomes, has sparked debate on how autonomous vehicles (AVs) should react in unavoidable accidents. This thought experiment, though not directly applicable to current technology, emphasizes the importance of ethical considerations in deploying AVs.

Research on ethical dilemmas in AV design is intensifying. In December 2021, the IEEE Computer Society published 28 articles addressing these critical issues. Experts like Philip Koopman, a specialist in AV safety engineering, contribute valuable insights to these discussions.

The Moral Machine experiment, conducted by MIT, gathered preferences from millions worldwide on how AVs should make decisions. This research highlights the significance of public input in shaping ethical guidelines for autonomous vehicles.

Safety implications go beyond ethical dilemmas. Ensuring the reliability of computer vision systems is paramount. Inaccuracies in image classification could lead to misidentifications or incorrect responses, potentially compromising autonomous vehicle safety.

| Ethical Concern | Potential Impact | Mitigation Strategy |
|---|---|---|
| Bias in facial recognition | Higher false identifications for certain ethnicities | Diverse training data and algorithm audits |
| Privacy violations | Unauthorized collection of personal data | Strict consent protocols and data protection measures |
| Decision-making in accidents | Ethical dilemmas in unavoidable collisions | Transparent policies and public engagement |

To address these challenges, the tech sector is exploring solutions like homomorphic encryption and secure federated learning. These technologies aim to enhance data security and privacy, which are crucial for ethical AI implementation in autonomous vehicles.
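Federated learning trains a shared model without collecting raw data centrally. Each vehicle trains locally and sends only model weights, which a server averages. This is a minimal sketch of the federated averaging (FedAvg) aggregation step with hypothetical three-parameter models. It omits the secure-aggregation and encryption layers that "secure" federated learning adds. Those layers matter because shared weights can still leak information about the training data.

```python
def federated_average(client_updates):
    """FedAvg aggregation: average client weight vectors, weighted by sample count.

    client_updates: list of (weights, num_samples). Only weights leave each
    vehicle; the raw camera images stay on the device.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(w[i] * n for w, n in client_updates) / total
        for i in range(dim)
    ]


# Hypothetical 3-parameter models trained locally on two vehicles
update_a = ([1.0, 0.0, 2.0], 300)  # vehicle A saw 300 samples
update_b = ([3.0, 4.0, 2.0], 100)  # vehicle B saw 100 samples
print(federated_average([update_a, update_b]))  # [1.5, 1.0, 2.0]
```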

Summary

Image classifiers, powered by computer vision, are pivotal to self-driving cars, and they grow more capable as AI evolves. By 2024, we anticipate significant progress in autonomous vehicles, with enhanced safety and better decision-making.

Self-driving cars use a blend of sensors, including cameras, radar, and LiDAR, for precise navigation. Object recognition and scene interpretation draw on techniques that range from classical feature descriptors such as SIFT and boosted classifiers such as AdaBoost to deep learning detectors such as YOLO. These technologies let autonomous vehicles make swift decisions, potentially outperforming human drivers in some situations.

FAQ

What is the role of computer vision in self-driving technology?

Computer vision is crucial in self-driving tech, allowing vehicles to see and understand their environment. It's key for detecting and identifying objects, which helps in making safe decisions and navigating roads.

Why are convolutional neural networks (CNNs) used for image classification in self-driving cars?

CNNs lead the way in image classification for self-driving cars. They're designed to spot and learn from image features, from simple edges to complex patterns. This makes them ideal for recognizing images.

What datasets are commonly used for training image classifiers in self-driving cars?

The Cityscapes dataset is a go-to choice for training self-driving car image classifiers. It provides annotated urban street images with pixel-level labels for semantic and instance segmentation.

How are image classifiers integrated with other sensors in autonomous vehicles?

Self-driving cars combine image classifiers with sensor fusion systems. This mix includes data from cameras, LiDAR, and radar. It boosts the vehicle's ability to understand its surroundings, offering a fuller picture of the environment.

What are some techniques used to optimize image classifiers for real-time processing in self-driving cars?

To speed up processing, self-driving cars use hardware acceleration and edge computing. Model compression also helps fit the classifiers into embedded systems with limited resources. Finding the right balance between accuracy and speed is key for dependable road use.

How are image classifiers for self-driving cars evaluated and continuously improved?

Evaluating self-driving car image classifiers involves checking their accuracy, precision, and recall. They're often compared to human drivers to ensure they're as good or better. Improvements come from real-world tests and refining the model with new data and scenarios.
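Precision is the fraction of detections that are correct, and recall is the fraction of real objects that were detected. The following is a minimal sketch that computes both, plus their harmonic mean (F1), from hypothetical counts of true positives, false positives, and false negatives.

```python
def precision_recall_f1(tp, fp, fn):
    """Detection metrics from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical pedestrian-detector tally on a validation set
metrics = precision_recall_f1(tp=90, fp=10, fn=30)
print(tuple(round(x, 3) for x in metrics))  # (0.9, 0.75, 0.818)
```

For pedestrian detection, recall is often weighted more heavily, because a missed pedestrian is far more dangerous than a false alarm.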

What are some ethical considerations and safety implications of using image classifiers in self-driving cars?

Image classifiers in self-driving cars bring up big ethical and safety questions. They make decisions in tricky situations, raise questions about who's to blame for mistakes, and affect society's use of autonomous vehicles. Making sure these systems are safe and reliable is essential.