Building Better Eyes: Training High-Performance AI Image Recognition Models

When it comes to redefining the borders of technology, few advancements bear the stamp of the future as prominently as the evolution of AI image recognition models. These systems, which uncover the hidden stories within visual data, now come closer than ever to matching some of the capabilities of the human eye. As a result, data preparation has become more important than ever for training computer vision applications.

Key Takeaways

- AI image recognition's advancements are greatly reducing costs and development times for a range of applications.

- The rise of practical applications for image recognition, spanning from healthcare to autonomous driving, underscores its growing significance.

- Open-source tools and libraries such as TensorFlow and PyTorch are driving the democratization of AI model development and training.

- Data processing and the choice of frameworks play a pivotal role in the successful deployment of AI image recognition models.

Understanding AI Image Recognition Fundamentals

AI image recognition has advanced significantly, relying heavily on the principles of computer vision and deep learning object recognition. These technologies enable systems to identify and categorize objects within images with high precision, approaching human visual understanding in some tasks. Integrating them not only improves how machines process visual data but also speeds up decision-making in real-time applications.

Deep learning object recognition relies on neural networks built from many stacked layers, a design loosely inspired by how neurons in the human brain work. Such structures are well suited to learning from vast amounts of data and making decisions without hand-written rules. Because deep learning models keep adapting as they are trained on new image data, AI systems can now recognize and interpret images with minimal human oversight.
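To make the idea of stacked layers concrete, here is a minimal sketch of a forward pass through a tiny fully connected network in plain Python. The weights are arbitrary illustrative values rather than trained ones, and the helper names (`dense`, `relu`, `forward`) are hypothetical; real image models learn millions of weights using frameworks such as PyTorch or TensorFlow.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x: Vector) -> Vector:
    """Element-wise ReLU non-linearity: negative values become 0."""
    return [max(0.0, v) for v in x]


def forward(x: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Pass the input through each (weights, bias) layer in turn.

    ReLU is applied after every layer except the last, which returns raw scores.
    """
    for i, (weights, bias) in enumerate(layers):
        x = dense(x, weights, bias)
        if i < len(layers) - 1:
            x = relu(x)
    return x


# Illustrative (untrained) weights for a 2 -> 2 -> 1 network.
layers = [
    ([[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0]),  # hidden layer: 2 inputs -> 2 units
    ([[1.0, 2.0]], [0.5]),                      # output layer: 2 units -> 1 score
]
print(forward([1.0, 2.0], layers))  # [4.5]
```

Each layer transforms the previous layer's output; stacking many such layers, with learned rather than hand-picked weights, is what makes a network "deep".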

Expanding the capabilities of computer vision, recent algorithms like YOLOv7 and YOLOv9 have set new benchmarks in the field. These models are equipped to handle complex object recognition tasks more efficiently, thus broadening the scope of machine-based visual interpretation. This progress is critical in fields requiring precision and speed, such as autonomous driving and medical image analysis, where accurate object recognition is crucial for safety and diagnostic purposes.

| Model | Release Year | Inference Time | Notable Improvement Over Previous |
|---|---|---|---|
| Mask R-CNN | 2017 | 330 ms/frame | N/A |
| YOLOv3 | 2018 | 4 ms/image (Tiny YOLO) | Improved speed over earlier versions |
| YOLOR | 2021 | 12 ms/frame | Faster than YOLOv4 and YOLOv3 |
| YOLOv7 | 2022 | Not listed | Superior speed and accuracy; significant improvement over YOLOR |
| YOLOv9 | 2024 | Not listed | New architecture, potentially higher efficiency |
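Per-frame latency is easier to compare with video rates once it is converted to frames per second. A minimal sketch using the two per-frame figures from the table (the Tiny YOLO figure is per image for a smaller variant, so it is left out); the function name `ms_to_fps` is hypothetical:

```python
def ms_to_fps(ms_per_frame: float) -> float:
    """Convert per-frame latency in milliseconds to frames per second."""
    if ms_per_frame <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / ms_per_frame


# Per-frame latencies from the model comparison table.
latencies_ms = {"Mask R-CNN": 330, "YOLOR": 12}

for model, ms in latencies_ms.items():
    print(f"{model}: {ms} ms/frame is about {ms_to_fps(ms):.1f} FPS")

# Time budget per frame for a 30 FPS video stream.
print(f"30 FPS budget: {1000 / 30:.1f} ms per frame")
```

At 30 frames per second, each frame must be processed in roughly 33 ms, so by this arithmetic the 330 ms figure is far from real-time while the 12 ms figure leaves headroom.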

The shift towards deep learning object recognition is partly driven by the need to process and analyze the growing volume of visual content generated across media platforms. With architectures such as MobileNet and EfficientNet, AI models can now achieve high accuracy with much greater computational efficiency, making them suitable for deployment in environments with limited computational resources, such as mobile and embedded devices.
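Much of MobileNet's efficiency comes from depthwise separable convolutions, which split a standard convolution into a per-channel spatial filter followed by a 1x1 convolution that mixes channels. The parameter arithmetic below is a minimal sketch that ignores biases; the layer sizes (3x3 kernel, 32 input and 64 output channels) and function names are illustrative assumptions.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k conv (one filter per input channel)
    followed by a 1 x 1 pointwise conv that mixes channels (biases ignored)."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


std = standard_conv_params(3, 32, 64)          # 3*3*32*64 = 18432
sep = depthwise_separable_params(3, 32, 64)    # 288 + 2048 = 2336
print(std, sep, round(std / sep, 1))           # 18432 2336 7.9
```

For this layer, the separable version needs roughly 8x fewer weights, with a proportional reduction in multiply-add operations per output position.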

Ultimately, the advancement of computer vision through deep learning models marks a significant milestone towards achieving machines with human-like vision capabilities. The continuous evolution in this field promises to bring even more sophisticated solutions that will further enhance the interaction between computers and the visual world.

Embracing the Power of Deep Learning

The advent of deep learning has revolutionized machine learning, especially in image recognition. Unlike traditional methods that depend on manual feature extraction, deep neural networks, including those used in face recognition systems, learn features directly from large datasets. This shift has enabled machines to match and, on some benchmarks, surpass human-level accuracy in tasks such as object detection and classification.

Deep learning's capacity to parse and understand intricate patterns in visual data has been pivotal in advancing machine learning applications. Neural networks, in particular, have shown significant promise in a variety of sectors. From healthcare, where they analyze medical images for better diagnostics, to autonomous vehicles that rely on these systems to navigate safely, the implications of these technologies are immense.

Neural network architectures such as recurrent neural networks (RNNs) and transformers have further extended what machine learning can achieve in both image and language processing tasks. These developments have also made face recognition systems, which rely on deep neural networks to identify facial features, more reliable and accessible.

| Industry | Application | Impact of Deep Learning |
|---|---|---|
| Healthcare | Medical Image Analysis | Enhanced diagnosis accuracy |
| Automotive | Sensor Data Interpretation | Safer autonomous driving |
| Finance | Market Trend Analysis | Improved predictive models |
| Technology | Personalized Recommendations | Better user experience |

The continuous evolution of deep learning highlights the dynamic nature of machine learning. With each advance in computing power and data availability, neural networks become more sophisticated. However, challenges such as data privacy, algorithmic bias, and the need for model interpretability remain prominent. Addressing them requires a multidisciplinary approach that blends expertise from various fields to refine deep neural network technologies such as face recognition and apply them in ethical and effective ways.

Training AI Image Recognition Models: A Step-by-Step Approach

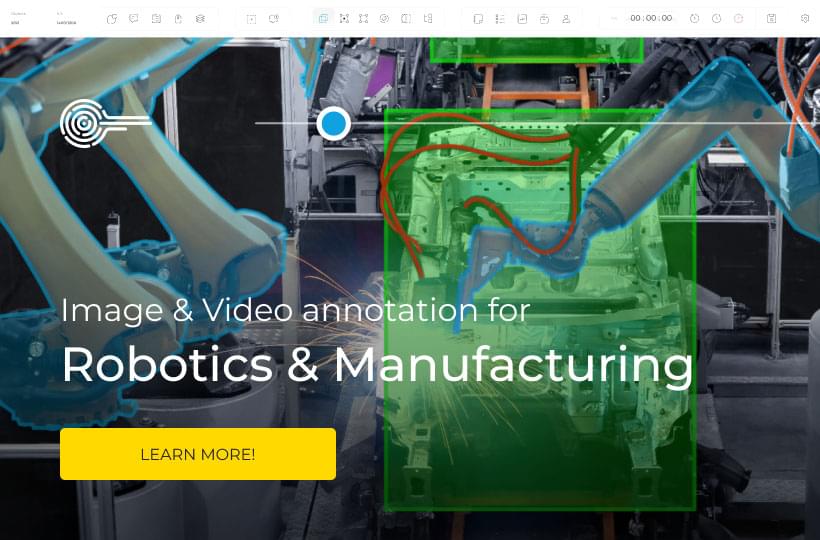

Training AI image recognition models is a meticulous, structured process that underpins applications ranging from security surveillance to consumer-facing services. It relies heavily on supervised learning and precise image annotation, which teach models to capture and interpret the nuances of visual data accurately.

The process begins with assembling a curated dataset in which each image is annotated with labels describing the features relevant to the model’s purpose. This dataset, typically containing a substantial number of images, then undergoes rigorous preprocessing to standardize and enhance image quality for better model performance.
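A minimal sketch of one common preprocessing step: scaling 8-bit pixel values to [0, 1] and then standardizing them with a mean and standard deviation computed on the training data. It works on a flat list of grayscale values rather than a real image array to stay dependency-free, and the function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def scale_to_unit(pixels: List[int]) -> List[float]:
    """Map 8-bit pixel values (0-255) to the range [0, 1]."""
    return [p / 255.0 for p in pixels]


def fit_stats(train_values: List[float]) -> Tuple[float, float]:
    """Compute mean and population standard deviation on training data only."""
    return mean(train_values), pstdev(train_values)


def standardize(values: List[float], mu: float, sigma: float) -> List[float]:
    """Subtract the training mean and divide by the training standard deviation."""
    if sigma == 0:
        raise ValueError("standard deviation is zero; cannot standardize")
    return [(v - mu) / sigma for v in values]


train_pixels = scale_to_unit([0, 64, 128, 255])
mu, sigma = fit_stats(train_pixels)
normalized = standardize(train_pixels, mu, sigma)
# The same mu and sigma would then be reused for validation and test images.
print([round(v, 3) for v in normalized])
```

Fitting the statistics on training data only, and reusing them later, keeps information from the evaluation images from leaking into preprocessing.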

- Image Annotation: Expert annotators label images in the dataset, marking various objects that the AI model will need to recognize. This step is crucial for the effectiveness of supervised learning, where the quality of image annotation directly influences the learning outcome.

- Model Training: Using the labeled images, the AI model learns to identify and categorize objects through iterative training. This stage is the most resource-intensive, requiring robust computational power, often supported by platforms like Google Colab.

- Validation and Testing: After training, the model is evaluated on a validation dataset it has not seen during training to measure how well it generalizes. Adjustments and optimizations are made based on this feedback, and a separate held-out test set is typically reserved for a final, unbiased estimate of performance.
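Validation is only meaningful if the evaluation images are truly unseen. A minimal sketch of a reproducible split into training, validation, and test sets; the 70/15/15 ratio, the seed, the file names, and the function name are illustrative assumptions, not requirements.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_test_split(
    items: Sequence[T], val_frac: float = 0.15, test_frac: float = 0.15, seed: int = 42
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut into disjoint train/val/test lists."""
    if not 0 <= val_frac + test_frac < 1:
        raise ValueError("val_frac + test_frac must be in [0, 1)")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


# Hypothetical file names standing in for an annotated dataset.
images = [f"img_{i:04d}.jpg" for i in range(100)]
train, val, test = train_val_test_split(images)
print(len(train), len(val), len(test))  # 70 15 15
```

The validation set guides adjustments during development, while the test set stays untouched until a final check.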

The following time distribution offers a snapshot of how the training process is divided, underscoring the significance of each phase in developing reliable image recognition models:

| Phase | Share of Time Spent |
|---|---|
| Loading and pre-processing data | 30% |
| Defining model architecture | 10% |
| Training the model | 50% |
| Estimating performance | 10% |

In supervised learning scenarios, especially in sectors like e-commerce where recognizing apparel in images drives substantial revenue, the importance of high-quality image annotation cannot be overstated. Each accurately labeled image improves the model’s ability not only to recognize but also to reliably categorize images in real-time applications, enhancing user engagement and satisfaction.

Data Preparation for AI: The Bedrock of Image Recognition

The efficacy of neural networks heavily depends on the initial steps taken during the dataset acquisition and preparation phase. Ensuring that these steps are meticulously followed is crucial for the success of AI-driven image recognition systems.

Importance of Data Quality and Quantity

To build high-performing neural networks for image recognition, the role of dataset acquisition cannot be overstated. A dataset must not only be large; it must be representative of the real-world conditions the AI will function in. This includes diverse scenarios and varied subjects captured in the images to enhance the neural network's ability to generalize from its training. Accurate labeling is equally vital, as inaccuracies can mislead the training process, leading to erroneous model behavior.
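Labeling accuracy and class coverage can be partly checked automatically before training. A minimal sketch, assuming a hypothetical annotation record of the form `{"label": str, "box": (x1, y1, x2, y2)}` in pixel coordinates; the 5% cutoff for "rare" classes is an arbitrary illustrative threshold. Checks like these complement, rather than replace, manual review.

```python
from collections import Counter
from typing import Dict, List, Set


def check_annotation(ann: Dict, allowed_labels: Set[str], width: int, height: int) -> List[str]:
    """Return the problems found in one bounding-box annotation (empty list if none)."""
    problems = []
    if ann["label"] not in allowed_labels:
        problems.append(f"unknown label {ann['label']!r}")
    x1, y1, x2, y2 = ann["box"]
    if not (0 <= x1 < x2 <= width and 0 <= y1 < y2 <= height):
        problems.append(f"box {ann['box']} is empty or outside the {width}x{height} image")
    return problems


def rare_classes(labels: List[str], min_share: float = 0.05) -> Dict[str, float]:
    """Return classes whose share of all labels falls below min_share."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {c: n / total for c, n in counts.items() if n / total < min_share}


anns = [
    {"label": "car", "box": (10, 10, 50, 40)},         # valid
    {"label": "pedestrain", "box": (5, 5, 20, 60)},    # misspelled label
    {"label": "car", "box": (600, 10, 700, 40)},       # extends past a 640-pixel-wide image
]
for a in anns:
    print(check_annotation(a, {"car", "pedestrian"}, width=640, height=480))

labels = ["car"] * 95 + ["bicycle"] * 3 + ["bus"] * 2
print(rare_classes(labels))  # {'bicycle': 0.03, 'bus': 0.02}
```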

Techniques for Data Augmentation

Data augmentation is an effective way to expand the training data artificially. Techniques such as rotating, flipping, and altering the lighting of images help neural networks become more robust to different environments. Synthetic data, meaning artificially generated images that mimic real-world ones, can also cover scenarios missing from the initial dataset. This is particularly valuable when capturing real data is restricted by various constraints.

| Data Quality Factor | Technique | Impact on Neural Network Training |
|---|---|---|
| High Variability | Image Rotation and Flipping | Improves model robustness against orientation changes |
| Synthetic Data Incorporation | Adding synthetic scenarios | Expands training scenarios, enhancing adaptive capacity |
| Annotation Accuracy | Manual validation and correction | Ensures training data quality, leading to more reliable recognition |
| Real-time Adaptability | Dynamic dataset updates | Keeps the dataset relevant with evolving trends and technology |
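The rotation, flipping, and lighting changes described above can be sketched on a tiny grayscale image stored as nested lists. This is an illustration only, with hypothetical function names; real pipelines typically apply random transforms on the fly with library utilities such as torchvision's transforms.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of 0-255 pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise (reverse the rows, then transpose)."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale pixel intensities, clamping results to the valid 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]


img = [[10, 20, 30],
       [40, 50, 60]]
print(flip_horizontal(img))         # [[30, 20, 10], [60, 50, 40]]
print(rotate_90_clockwise(img))     # [[40, 10], [50, 20], [60, 30]]
print(adjust_brightness(img, 5.0))  # [[50, 100, 150], [200, 250, 255]]
```

For object detection, any bounding-box annotations must be transformed together with the pixels, or the labels will no longer match the augmented image.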

Modern Algorithms in Image Recognition

The evolution of image recognition algorithms has significantly changed how machines interpret complex visual information. Advances in object detection, image classification, and convolutional neural networks (CNNs), along with tools like YOLO, have paved the way for more sophisticated, real-time processing of visual data.

Exploring Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are at the core of cutting-edge image recognition technology. Renowned for their efficiency with image data, CNNs use layers of convolution and pooling to extract important features from images, building from simple patterns such as edges in early layers to more complex shapes in deeper ones. This hierarchy is loosely analogous to processing in natural vision systems and has been central to the progress of deep learning in image recognition.
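A minimal pure-Python sketch of the two operations named above: a single convolution filter sliding over a grayscale image, followed by 2x2 max pooling. The hand-picked kernel is a hypothetical vertical-edge detector; in a trained CNN, kernel values are learned and each layer applies many filters at once.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution with stride 1 and no padding.

    Like most deep learning libraries, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1],
          [-1, 1]]  # responds where intensity rises from left to right

features = conv2d(image, kernel)
print(features)              # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(max_pool2d(features))  # [[2, 0], [2, 0]]
```

The feature map peaks exactly where the dark-to-bright edge sits, and pooling keeps that strongest response while halving the resolution.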

Python for Image Recognition: Programming Insights

Python is popular for building image recognition solutions because of its comprehensive suite of libraries and frameworks for deep learning. Among them, PyTorch stands out, praised for its flexibility and efficiency in both research prototyping and production deployment.

Deep learning applications, particularly those utilizing Python, benefit significantly from the wide array of resources accessible within the Python ecosystem. This promotes a streamlined, efficient development process, enabling developers to focus more on innovative solutions rather than the intricacies of underlying software mechanics.

For instance, Python-based frameworks have made real-time object detection systems easier to implement, train, and iterate on. The evolution from older algorithms like YOLOv4 to the faster, more accurate YOLOv9 stems mainly from architectural improvements, but accessible Python tooling has helped researchers develop, test, and share those improvements quickly.

Improving Model Accuracy through Iterative Training

Improving model performance requires understanding two critical concepts: overfitting and underfitting. Both are central challenges in developing machine learning models. A well-structured iterative training process, using techniques like regularization and hyperparameter tuning, refines these models, improves their accuracy, and helps them generalize to new, unseen data.

Understanding Overfitting and Underfitting

Overfitting occurs when a model learns the details and noise in the training data so closely that its performance on new data suffers. This typically shows up as high training accuracy but poor accuracy outside the training dataset. Conversely, underfitting happens when a model is too simple to learn the underlying pattern of the data, so it performs poorly on both training and unseen data.
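The two definitions translate into a rough rule of thumb based on training and validation accuracy. A minimal sketch; the function name and both thresholds (`min_acc`, `max_gap`) are hypothetical, and sensible values depend on the task and dataset.

```python
def diagnose_fit(train_acc: float, val_acc: float,
                 min_acc: float = 0.80, max_gap: float = 0.10) -> str:
    """Rough heuristic label for a model's fit from train/validation accuracy.

    min_acc and max_gap are illustrative thresholds, not standard values.
    """
    if train_acc < min_acc:
        return "underfitting"    # poor even on the data it was trained on
    if train_acc - val_acc > max_gap:
        return "overfitting"     # much better on training data than on unseen data
    return "reasonable fit"


print(diagnose_fit(0.99, 0.72))  # overfitting
print(diagnose_fit(0.61, 0.58))  # underfitting
print(diagnose_fit(0.91, 0.88))  # reasonable fit
```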

Regularization Techniques and Hyperparameter Tuning

Regularization and hyperparameter tuning help address these problems. Regularization techniques such as L1 and L2 add a penalty to the training loss based on the size of the model's weights (the sum of their absolute values for L1, the sum of their squares for L2), which constrains the model and improves its ability to generalize; this mainly counters overfitting. Hyperparameter tuning, by contrast, searches for the best combination of settings that are fixed before training rather than learned, such as the learning rate or the regularization strength, and can help with both overfitting and underfitting.
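A minimal sketch of both ideas: L1 and L2 penalties added to a data loss, and grid search, one simple form of hyperparameter tuning that tries every combination of candidate values. The hyperparameter names (`lr`, `lam`) and the stand-in scoring function are hypothetical; in practice, `evaluate` would train a model with the given settings and return its validation accuracy.

```python
import itertools
from typing import Callable, Dict, List, Sequence, Tuple


def l1_penalty(weights: Sequence[float], lam: float) -> float:
    """L1 penalty: lam * sum of absolute weights (tends to push weights to zero)."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights: Sequence[float], lam: float) -> float:
    """L2 penalty: lam * sum of squared weights (shrinks large weights)."""
    return lam * sum(w * w for w in weights)


def regularized_loss(data_loss: float, weights: Sequence[float], lam: float, kind: str = "l2") -> float:
    """Objective minimized during training: data loss plus a weight penalty."""
    if kind == "l1":
        return data_loss + l1_penalty(weights, lam)
    if kind == "l2":
        return data_loss + l2_penalty(weights, lam)
    raise ValueError("kind must be 'l1' or 'l2'")


def grid_search(grid: Dict[str, List[float]],
                evaluate: Callable[[Dict[str, float]], float]) -> Tuple[Dict[str, float], float]:
    """Try every combination of values; keep the one with the highest score."""
    names = list(grid)
    best_params, best_score = {}, float("-inf")
    for values in itertools.product(*(grid[n] for n in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


w = [0.5, -1.5, 2.0]
print(regularized_loss(1.0, w, lam=0.1, kind="l1"))  # data loss 1.0 + L1 penalty 0.4
print(regularized_loss(1.0, w, lam=0.1, kind="l2"))  # data loss 1.0 + L2 penalty 0.65


def fake_validation_accuracy(p: Dict[str, float]) -> float:
    """Hypothetical stand-in for 'train, then score on validation data'."""
    return 1.0 - abs(p["lr"] - 0.01) * 10 - abs(p["lam"] - 0.001) * 10


best, score = grid_search({"lr": [0.1, 0.01, 0.001], "lam": [0.01, 0.001, 0.0]},
                          fake_validation_accuracy)
print(best)  # {'lr': 0.01, 'lam': 0.001}
```

Grid search is easy to reason about but grows multiplicatively with each added hyperparameter, which is why random or adaptive search is often preferred for larger spaces.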

These iterative improvements in training strategy make predictive models more reliable and efficient, and they are critical for achieving high accuracy on unseen data across a wide range of applications.

Visual Recognition vs. Object Detection: Clearing the Confusion

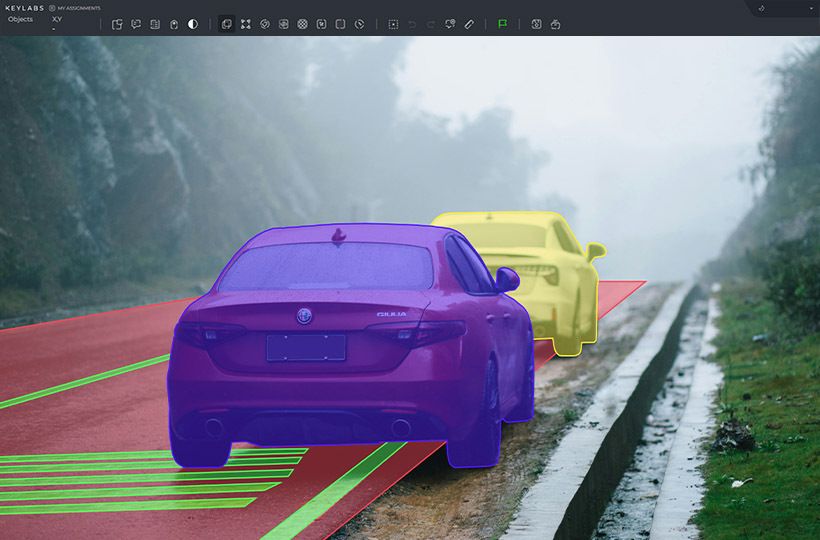

In computer vision, understanding the distinction between image detection and object localization is essential for choosing the right technologies and methods for a given visual data challenge. Image detection means discovering the objects present within a visual frame, setting the stage for image recognition, which then categorizes the detected objects.

Conversely, object localization goes a step further by pinpointing the exact spatial location of an object within the image. This precise localization is crucial in tasks where the context of an object's position relative to other elements in the scene affects the outcome, such as in autonomous driving systems where understanding the exact location of traffic signs or pedestrians dictates the vehicle's driving decisions.
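Localization quality is commonly measured with intersection over union (IoU) between a predicted bounding box and the annotated one. A minimal sketch, assuming boxes given as `(x1, y1, x2, y2)` pixel corners; the example coordinates are illustrative. Detection benchmarks often count a prediction as correct when IoU is at least 0.5, though the threshold varies.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x1 < x2 and y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0 = no overlap, 1 = identical)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


predicted = (0, 0, 10, 10)
ground_truth = (5, 5, 15, 15)
print(round(iou(predicted, ground_truth), 3))  # 0.143 (overlap 25 / union 175)
```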

The interplay between these two processes can often be misunderstood, leading to inefficiencies in project workflows and technology application. For example, effective image detection must reliably find and report the presence of objects without necessarily needing to classify them, which is often sufficient for preliminary stages of visual data analysis.

However, when precise interaction or identification of objects is needed, such as in retail analytics for identifying specific products on shelves, object localization becomes indispensable, elevating the utility of image detection by adding a layer of contextual understanding.

Understanding these differences not only refines the approach taken in handling visual data but also enhances the accuracy and effectiveness of the technology deployed in real-world scenarios.

By clearly delineating the utilities and applications of image detection and object localization, developers and practitioners can better design and deploy AI systems that are tailored to the nuanced needs of specific projects, thereby maximizing the potential of visual data processing technologies in a myriad of industries.

Hardware Considerations for Efficient Model Training

When diving into the world of AI and deep learning models, the significance of choosing the right AI hardware cannot be overstated. GPUs have emerged as powerhouses in accelerating the computations required for intricate deep learning tasks, reducing both the time and cost associated with training sophisticated models.

Given the intense computational demand of deep learning models, it is essential to consider the specs of the CPU in conjunction with the GPU capabilities. For instance, high-performance CPUs like Intel Xeon W-3300 and AMD Threadripper PRO 7000 Series are recommended for their ability to efficiently manage multiple GPUs through ample PCI-Express lanes, which are crucial for heavy compute loads.

| GPU Model | Video Memory | Use Case |
|---|---|---|
| NVIDIA RTX A6000 | 48 GB VRAM | High-resolution/3D image datasets |
| NVIDIA GeForce RTX 3080 | 10 GB GDDR6X | General ML/AI workloads |
| NVIDIA RTX A5000 | 24 GB VRAM | Professional, multi-GPU setups |
| NVIDIA GeForce RTX 4090 | 24 GB GDDR6X | Enthusiast-level performance |

Memory is another crucial consideration. AI training is often memory-intensive, especially with large datasets, so a common recommendation is to provide at least twice as much system (CPU) memory as the total GPU memory. For example, a workstation with two NVIDIA RTX A6000 GPUs (2 × 48 GB = 96 GB of GPU memory) should have at least 192 GB of system memory.
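The rule of thumb reduces to simple arithmetic. A minimal sketch; the function name is hypothetical, and the 2x factor is the guideline stated in this section rather than a hard requirement.

```python
from typing import List


def recommended_system_ram_gb(gpu_memory_gb: List[float], factor: float = 2.0) -> float:
    """Rule of thumb: system RAM of at least `factor` times the total GPU memory."""
    return factor * sum(gpu_memory_gb)


print(recommended_system_ram_gb([48, 48]))  # 192.0 (two 48 GB RTX A6000 cards)
print(recommended_system_ram_gb([24] * 4))  # 192.0 (four 24 GB cards, e.g. RTX A5000)
```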

Advances in NVLink technology have also brought substantial benefits for models that gain a considerable speed-up from fast inter-GPU communication, such as recurrent neural networks (RNNs) and other time-series models. By enabling faster data transfer between GPUs, NVLink helps multi-GPU systems reach their full potential and makes deep learning operations more efficient.

In short, GPUs, especially when combined with interconnects such as NVLink, directly determine how feasible and how fast it is to train complex deep learning models.

Real-World Applications and Success Stories

The implementation of AI image recognition models has led to transformative changes across multiple sectors, providing compelling success stories in image recognition that underscore the practical benefits and efficiency boosts these technologies deliver. From enhancing security protocols through facial recognition to revolutionizing diagnostic processes in healthcare, the real-world use cases of image recognition are extensive and growing.

In healthcare, image recognition models are helping to improve patient outcomes. For instance, AI-driven systems have been reported to reach around 97% accuracy in detecting diabetic retinopathy in retinal images. Similarly, IBM Watson Health has applied AI to large datasets to support personalized cancer treatment recommendations.

The finance sector also benefits from image recognition and related deep learning applications. Banks and financial institutions use anomaly detection and predictive modeling to prevent fraud, improving security and customer trust. By analyzing customer data, these institutions can also tailor services to individual needs, which improves the customer experience and business profitability.

| Industry | Application | Impact |
|---|---|---|
| Healthcare | Cancer Detection | Enhanced diagnostic accuracy and personalized treatment plans. |
| Finance | Fraud Detection | Reduced fraud instances with predictive analytics and behavior analysis. |
| Automotive | Autonomous Driving | Improved safety and reduced traffic incidents through AI-driven navigation systems. |
| Retail | E-commerce Personalization | Increased sales through tailored product recommendations. |

Further success stories come from the automotive and retail sectors, where these technologies drive both innovation and efficiency. Self-driving cars equipped with advanced image recognition systems aim to set new standards for safety and reduce accident rates. In retail, AI-enhanced systems analyze customer behavior to optimize product placement and recommendations, boosting sales and customer satisfaction.

Summary

The strides made in AI image recognition are impressive and point to a future with even more advanced visual data interpretation. From Mask R-CNN in 2017 to the new architecture of YOLOv9 in 2024, progress in both accuracy and speed has been extraordinary. These advances show how deep learning continues to refine the precision and speed of recognizing and classifying images, a fundamental capability of AI that echoes human visual acuity.

One of the most notable changes in this domain is the reduction in the amount of training data needed to train algorithms effectively. Approaches such as transfer learning, which starts from a model pretrained on a large dataset, can reach similar or even higher accuracy with a fraction of the labeled samples that traditional methods required. This shortens training and makes effective AI image recognition more accessible across sectors.

FAQ

What are AI image recognition models and their use in analyzing visual data?

AI image recognition models are sophisticated algorithms designed to interpret and analyze visual data by identifying and categorizing objects within images. They are crucial for a wide range of applications, from autonomous vehicles to healthcare diagnostics, by emulating human visual understanding through machine learning and deep neural networks.

How does computer vision differ from deep learning object recognition?

Computer vision is a broader field that encompasses various techniques for interpreting visual data. Deep learning object recognition is a specific approach within computer vision that uses deep neural networks to automatically and accurately recognize objects in images without the need for manual feature extraction.

Why is deep learning significant for AI image recognition?

Deep learning has transformed AI image recognition by enabling models to learn directly from large datasets and extract complex patterns. This results in improved accuracy and the capability to recognize nuanced features in images that traditional machine learning techniques might miss.

What are the essential steps in training AI image recognition models?

Training AI image recognition models involves several key steps, starting with data preparation, where a labeled dataset is curated for neural networks to learn from. This is followed by the training phase, where the model learns to classify images. Finally, testing the model on unseen data measures how accurately it performs beyond its training set.

How crucial is the quality and quantity of data for training image recognition models?

The quality and quantity of data are paramount in training effective image recognition models. High-quality, extensive, and accurately labeled datasets are necessary for neural networks to develop a precise understanding of the visual patterns they need to recognize.

What role do Convolutional Neural Networks (CNNs) play in image recognition?

CNNs are essential to image recognition as they process and analyze image data effectively through convolution and pooling layers. These layers extract and hierarchically learn significant features from images, mirroring the visual processing in biological systems.

How have recent advancements like YOLOv8 impacted image recognition technology?

Advanced models like YOLOv8 have significantly improved object detection and classification in image recognition. They provide a more refined framework for processing images more quickly and with greater accuracy, contributing to rapid developments in computer vision technology.

Why is Python a preferred choice for developing image recognition solutions?

Python is favored for image recognition development due to its extensive range of libraries and frameworks supporting deep learning applications. Tools like PyTorch offer open-source resources that make the prototyping and deployment of image recognition models more agile and accessible.

What are overfitting and underfitting, and how do they affect model accuracy?

Overfitting happens when a model learns the training data too well, including the noise, which harms its ability to generalize to new data. Underfitting occurs when a model can't capture the data's underlying pattern, leading to inadequate training. Both issues can negatively impact the model's accuracy and performance.

What's the difference between visual recognition, image detection, and object localization?

Visual recognition is the overall process of identifying and categorizing objects in images. Image detection is about finding various objects within those images, and object localization refers to determining the precise location of these objects within the image.

How does AI hardware affect the training of deep learning models?

AI hardware, such as GPUs, plays a critical role in the training of deep learning models by accelerating computations. The right choice of hardware can significantly reduce training times, lower costs, and enable the development of more sophisticated models.

What functionalities does Google Cloud Vision AI offer in image recognition?

Google Cloud Vision AI offers a suite of image analysis services including object detection, OCR, and video content analysis. It integrates machine learning and deep learning to extract valuable insights from visual content within a managed environment, simplifying the use of computer vision technologies.