Comparing YOLOv8 and YOLOv7: What's New?

YOLOv8 and YOLOv7 are both versions of the popular YOLO (You Only Look Once) object detection system. In this article, we will compare the features and improvements of YOLOv8 with YOLOv7 to understand the advancements in real-time object detection and image processing.

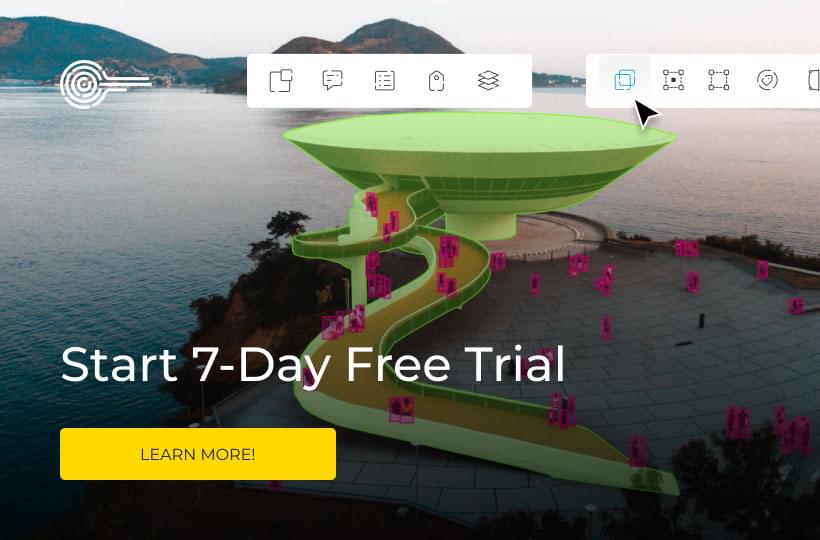

A side-by-side comparison of YOLOv8 and YOLOv7, showcasing the differences in object detection accuracy and speed.

Key Takeaways:

- YOLOv8 and YOLOv7 are versions of the YOLO object detection system.

- YOLOv8 offers several key improvements and features compared to YOLOv7.

- Some of the advancements in YOLOv8 include faster detection speed and improved accuracy in detecting small objects.

- YOLOv8 has an anchor-free architecture, multi-scale prediction, and an improved backbone network.

- Performance tests have shown that YOLOv8 outperforms YOLOv7 in terms of speed and accuracy.

Background and Overview of YOLOv7

YOLOv7 is a computer vision model for object detection that has gained significant popularity for its real-time capabilities. Built on deep neural networks, YOLOv7 accurately identifies and localizes objects within images or videos. It has been applied across a wide range of applications and has become a widely used reference point in the artificial intelligence field.

As its name suggests, YOLO processes an entire image in a single pass of its neural network, which is what lets YOLOv7 analyze visual data fast enough for real-time use. Its deep learning architecture achieves high accuracy in object recognition, so users and downstream systems can act on the objects it detects within an image or video.

"YOLOv7 is an excellent example of the capabilities modern computer vision and artificial intelligence technologies can offer. Its advanced image processing and object recognition capabilities enable a broad range of applications across industries, from autonomous driving to surveillance systems."

By combining computer vision, neural networks, and deep learning, YOLOv7 brings artificial intelligence to the forefront of object detection. Its ability to process data in real time while delivering accurate results has broadened its potential applications across many fields.

Before turning to YOLOv8, it is worth understanding the background and features of YOLOv7, because they put the advancements in the latest version of this object detection system into context.

Introducing YOLOv8 and Its Key Features

YOLOv8 is the latest version of the YOLO object detection system, known for its real-time object detection capabilities in the field of computer vision and artificial intelligence. This powerful system utilizes a neural network and deep learning techniques to process images and videos, accurately identifying and localizing objects within them.

“YOLOv8 provides significant advancements and key features that improve performance and accuracy in object detection.”

One of the standout features of YOLOv8 is its faster detection speed. Thanks to an efficient neural network architecture, YOLOv8 can process images in real time, enabling quick and reliable object recognition.

YOLOv8 also excels at detecting small objects, which makes it well suited to scenarios where precise detection is crucial. With its improved accuracy, even tiny objects can be reliably recognized and classified.

Another key feature of YOLOv8 is its anchor-free architecture. By eliminating the need for predefined anchor boxes, YOLOv8 adapts to different object sizes and proportions more effectively, resulting in improved detection accuracy.
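To make "anchor-free" concrete, here is a minimal sketch of distance-based box decoding. In this approach, each grid-cell centre predicts how far the four edges of a box lie from it, and no predefined box shape is involved. It is deliberately simplified. As we understand it, YOLOv8's head predicts a distribution over each distance (Distribution Focal Loss) and reduces it to a single value before this step, and that reduction is omitted here. The function names are hypothetical.

```python
from typing import List, Tuple

# (x1, y1, x2, y2) in input-image pixels
Box = Tuple[float, float, float, float]


def make_anchor_points(grid_w: int, grid_h: int) -> List[Tuple[float, float]]:
    """Return the centre of every cell of a feature map, in grid units."""
    return [(x + 0.5, y + 0.5) for y in range(grid_h) for x in range(grid_w)]


def decode_ltrb(anchor: Tuple[float, float],
                ltrb: Tuple[float, float, float, float],
                stride: int) -> Box:
    """Convert predicted distances from an anchor point to the four box edges
    (left, top, right, bottom, in grid units) into a pixel-space box.

    No anchor box shape is involved: the box is defined purely by how far
    each edge lies from the cell centre. Multiplying by the stride maps grid
    units back to input-image pixels.
    """
    ax, ay = anchor
    left, top, right, bottom = ltrb
    return ((ax - left) * stride, (ay - top) * stride,
            (ax + right) * stride, (ay + bottom) * stride)


# Cell (10, 4) on a stride-8, 80x80 feature map predicts edges 2, 1, 2, 3 cells away.
anchors = make_anchor_points(80, 80)
print(decode_ltrb(anchors[4 * 80 + 10], (2.0, 1.0, 2.0, 3.0), 8))
# (68.0, 28.0, 100.0, 60.0)
```

Because the box is expressed relative to a point rather than to a template shape, objects of unusual size or aspect ratio need no matching anchor.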

YOLOv8 employs a multi-scale prediction method, allowing it to detect objects at various scales within an image. This enables better adaptability to different object sizes and appearances, enhancing the overall performance of the system.
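A quick calculation shows what multi-scale prediction means in practice. The sketch assumes the commonly used configuration of three prediction grids at strides of 8, 16 and 32 pixels on a square 640×640 input. It computes the size of each grid and the total number of candidate boxes the network scores per image. The function name is hypothetical.

```python
from typing import Dict, Sequence, Tuple


def prediction_grid(img_size: int = 640,
                    strides: Sequence[int] = (8, 16, 32)
                    ) -> Tuple[Dict[int, Tuple[int, int]], int]:
    """Return the (rows, cols) of each prediction grid and the total candidate count.

    Each stride corresponds to one detection scale: small strides give fine
    grids suited to small objects, large strides give coarse grids suited to
    large ones. Assumes a square input whose side is divisible by every stride.
    """
    grids = {s: (img_size // s, img_size // s) for s in strides}
    total = sum(rows * cols for rows, cols in grids.values())
    return grids, total


grids, total = prediction_grid(640)
print(grids)  # {8: (80, 80), 16: (40, 40), 32: (20, 20)}
print(total)  # 8400
```

The fine 80×80 grid accounts for most candidates, which is one reason multi-scale heads help with small objects.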

Furthermore, YOLOv8 incorporates an improved backbone network, which serves as the foundation for feature extraction in the detection process. This enhancement enables more comprehensive and detailed analysis of images, leading to higher accuracy in object recognition.

With these key features, YOLOv8 stands at the forefront of real-time object detection, showcasing the advancements made in computer vision, neural network, and deep learning technologies. Its capabilities make it an invaluable tool in a wide range of applications, including surveillance, autonomous vehicles, robotics, and more.

Training Experience with YOLOv8

Training with YOLOv8 is straightforward and offers customization options to suit individual needs. The model supports fine-tuning, so users can adapt a pretrained model to their own data and refine its object detection capabilities.

One notable advantage of YOLOv8 is that it can be deployed with TensorFlow Lite, a lightweight framework for running machine learning models on mobile, embedded, and other platforms. Once YOLOv8 is converted to a TensorFlow Lite model, developers can integrate it into their applications with good performance and efficiency.

A converted model can also take advantage of the TF Lite GPU delegate, which accelerates inference on supported GPUs and streamlines deployment, while the TF Lite Object Detection API can simplify integration with ML Kit and other frameworks.

When training with YOLOv8, users can customize various aspects of the model, including the backbone network and the detection thresholds. Because YOLOv8 is anchor-free, there are no anchor boxes to tune. This gives fine-grained control over the training process and can lead to better performance and accuracy.
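Detection thresholds are applied to the network's raw output. The sketch below shows a confidence threshold under an assumed output layout of shape (4 + num_classes, num_candidates): rows 0 to 3 hold box centre and size (cx, cy, w, h), and the remaining rows hold per-class scores. Check this layout against your own exported model before relying on it. The 0.25 default and the function name are illustrative assumptions.

```python
from typing import List, Tuple

# (x1, y1, x2, y2, score, class_id)
Detection = Tuple[float, float, float, float, float, int]


def filter_by_confidence(raw: List[List[float]],
                         conf_thres: float = 0.25) -> List[Detection]:
    """Keep candidates whose best class score reaches conf_thres.

    `raw` is assumed to have shape (4 + num_classes, num_candidates):
    rows 0-3 hold cx, cy, w, h and the remaining rows hold per-class scores.
    """
    num_rows, num_candidates = len(raw), len(raw[0])
    detections: List[Detection] = []
    for j in range(num_candidates):
        scores = [raw[r][j] for r in range(4, num_rows)]
        class_id = max(range(len(scores)), key=scores.__getitem__)
        score = scores[class_id]
        if score < conf_thres:
            continue  # below the detection threshold: discard
        cx, cy, w, h = (raw[r][j] for r in range(4))
        # convert centre/size to corner coordinates
        detections.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2,
                           score, class_id))
    return detections


# Two candidates, two classes.
raw = [
    [50.0, 10.0],  # cx
    [50.0, 10.0],  # cy
    [20.0, 4.0],   # w
    [10.0, 4.0],   # h
    [0.10, 0.05],  # class 0 scores
    [0.90, 0.10],  # class 1 scores
]
print(filter_by_confidence(raw))  # [(40.0, 45.0, 60.0, 55.0, 0.9, 1)]
```

Raising the threshold trades recall for precision. The surviving boxes still need non-maximum suppression to remove duplicates.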

Overall, YOLOv8 provides a comprehensive and versatile training experience, empowering developers to create powerful object detection systems that cater to their specific requirements.

Exporting Experience and Challenges

Exporting a YOLOv8 model to TensorFlow Lite is a multi-step process. First, the PyTorch model is converted to the ONNX format. Next, the ONNX model is converted to a TensorFlow SavedModel. Finally, the SavedModel is converted to a TF Lite model for deployment on various platforms. Note that there is currently no direct path from PyTorch to TF Lite that skips these intermediate formats.

The conversion can be challenging: each format must support the operations the model uses, and any differences in behavior between formats must be resolved. One important consideration is non-maximum suppression (NMS), the post-processing step that removes duplicate or overlapping bounding boxes.

Post-conversion testing is vital to confirm that the exported model is still accurate and functional. This includes evaluating its detection performance (precision and recall) and its speed, and verifying that NMS removes redundant detections as expected.
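If NMS is not part of the exported graph, it has to be run in application code. Whether it is included depends on your export settings, so treat that as an assumption. Here is a minimal, class-agnostic greedy NMS in pure Python. It keeps the highest-scoring box, drops every remaining box that overlaps it by more than an IoU threshold, and repeats. The 0.5 threshold is illustrative, and many pipelines run NMS separately per class.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_thres: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # discard remaining boxes that overlap the kept box too much
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thres]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.68, so it is suppressed. The third box does not overlap either and survives.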
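One simple post-conversion check is to run the original and exported models on the same images and measure how many of the original detections the exported model reproduces. The sketch below counts a reference detection as reproduced when an unused converted detection of the same class overlaps it with high IoU. The detection tuple layout, the 0.9 threshold and the `agreement` name are illustrative assumptions, not part of any library.

```python
from typing import List, Sequence, Tuple

# (x1, y1, x2, y2, class_id)
Det = Tuple[float, float, float, float, int]


def iou(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def agreement(reference: List[Det], converted: List[Det],
              iou_thres: float = 0.9) -> float:
    """Fraction of reference detections matched one-to-one in the converted output."""
    if not reference:
        return 1.0
    unmatched = list(converted)
    matched = 0
    for ref in reference:
        best_idx, best_iou = -1, iou_thres
        for k, cand in enumerate(unmatched):
            if cand[4] != ref[4]:
                continue  # classes must agree
            v = iou(ref[:4], cand[:4])
            if v >= best_iou:
                best_idx, best_iou = k, v
        if best_idx >= 0:
            matched += 1
            unmatched.pop(best_idx)  # each converted box matches at most once
    return matched / len(reference)


reference = [(0, 0, 10, 10, 0), (20, 20, 30, 30, 1)]
converted = [(0, 0, 10, 10.2, 0), (20, 20, 30, 30, 2)]
print(agreement(reference, converted))  # 0.5
```

A score well below 1.0 on representative images usually points to a conversion problem, such as a changed input normalization or missing post-processing.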

Exporting YOLOv8 Model to TensorFlow Lite: Overview

Step 1: Convert PyTorch Model to ONNX

Step 2: Convert ONNX Model to TensorFlow Saved Model

Step 3: Convert Saved Model to TF Lite Model

Through this conversion process, a YOLOv8 model can be deployed using TensorFlow Lite, enabling real-time object detection on resource-constrained devices.

Exporting YOLOv8 Model to TensorFlow Lite: Challenges

"The conversion process between different model formats can be complex and may introduce unexpected issues that need to be addressed. Additionally, optimizing the non-max suppression algorithm for the TF Lite model is of utmost importance to ensure efficient and accurate object detection."

Addressing these challenges requires a thorough understanding of the underlying architecture and nuances of both the original YOLOv8 model and the TensorFlow Lite framework. Careful debugging and experimentation are necessary to overcome any potential hurdles and achieve a successful export process.

| Export Step | Challenges |
|---|---|
| Convert PyTorch Model to ONNX | Ensuring compatibility and resolving discrepancies |
| Convert ONNX Model to TensorFlow Saved Model | Addressing format differences and optimizing non-max suppression |
| Convert Saved Model to TF Lite Model | Testing and refining non-max suppression algorithm |


Overall, while exporting a YOLOv8 model to TensorFlow Lite presents certain challenges, successful conversion and optimization ensure efficient and accurate real-time object detection on various resource-constrained platforms.

Comparison of YOLOv8 and YOLOv7 Performance

When evaluating the performance of YOLOv8 and YOLOv7, several important factors come into play: speed, accuracy, mean average precision (mAP), and model architecture. Performance tests have shown YOLOv8 ahead of YOLOv7 in these key areas, making it a strong choice for real-time object detection.

Speed

One of the significant improvements of YOLOv8 over YOLOv7 is its higher frames-per-second (FPS) rate. The enhanced model architecture and optimized algorithms make object detection quicker and more efficient. Because YOLOv8 processes more frames per unit of time, it is highly suitable for real-time applications.
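FPS and latency are two views of the same measurement, so FPS = 1000 / latency in milliseconds. The sketch below shows one way to measure both for any inference callable. It runs a few warm-up calls first, because the first inferences are often slower, then times a fixed number of calls. The `infer` callable and the dummy model are hypothetical stand-ins for a real YOLOv7 or YOLOv8 pipeline.

```python
import time
from typing import Any, Callable, Tuple


def measure_fps(infer: Callable[[Any], Any], frame: Any,
                warmup: int = 5, iters: int = 50) -> Tuple[float, float]:
    """Return (mean latency in ms, frames per second) for repeated calls to infer."""
    for _ in range(warmup):
        infer(frame)  # warm-up calls are excluded from timing
    start = time.perf_counter()
    for _ in range(iters):
        infer(frame)
    elapsed = time.perf_counter() - start
    mean_latency_ms = elapsed / iters * 1000.0
    fps = iters / elapsed
    return mean_latency_ms, fps


# Hypothetical stand-in for a model that takes about 5 ms per frame.
def dummy_model(frame: Any) -> None:
    time.sleep(0.005)


latency_ms, fps = measure_fps(dummy_model, frame=None)
print(f"{latency_ms:.2f} ms/frame, {fps:.1f} FPS")
```

For a fair comparison between models, use the same input size, batch size and hardware, and say whether pre- and post-processing (such as NMS) fall inside the timed call.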

Accuracy and MAP

YOLOv8 also outperforms YOLOv7 in terms of accuracy. Its more advanced architecture allows for more reliable and precise object detection, and its mean average precision (mAP) scores have improved compared to YOLOv7.
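To show what an mAP figure summarizes, here is a minimal average-precision (AP) calculation for a single class. Detections are ranked by confidence. Precision and recall are accumulated down the list, the precision curve is made monotonically non-increasing, and the area under it is summed (all-point interpolation). mAP is the mean of AP over classes, and COCO-style mAP also averages over IoU thresholds from 0.50 to 0.95. The sketch assumes each detection has already been labeled true or false positive at one IoU threshold. The function name is hypothetical, and official evaluators differ in interpolation details.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]], num_gt: int) -> float:
    """AP for one class from (confidence, is_true_positive) pairs.

    num_gt is the number of ground-truth objects of this class.
    """
    if num_gt == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [0.0], [0.0]
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # precision envelope: each point takes the best precision at equal or higher recall
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # sum rectangle areas wherever recall increases
    return sum((recalls[i] - recalls[i - 1]) * precisions[i]
               for i in range(1, len(recalls)))


# Two ground-truth objects; the second-ranked detection is a false positive.
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
print(round(ap, 4))  # 0.8333
```

A false positive ranked above a true positive lowers the precision at which the remaining recall is achieved. This is why mAP rewards confident, well-ordered predictions and not just raw detection counts.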

Accuracy and precision are crucial in object detection systems, as they determine the model's ability to correctly identify and locate objects within an image or video. YOLOv8's superior performance in these aspects ensures more reliable and accurate results for various real-world applications.

Model Architecture

The model architecture of YOLOv8 has undergone enhancements to improve its object detection capabilities. The YOLOv8 architecture features an anchor-free design, which eliminates the need for manually defined anchor boxes. This design choice simplifies the training process and enhances the model's ability to detect objects of different sizes and aspect ratios effectively.

Furthermore, YOLOv8 incorporates multi-scale prediction, which enables the model to make predictions at multiple resolutions. This multi-scale approach enhances the model's ability to capture objects at various scales, leading to improved object detection accuracy.

Overall, YOLOv8's refined model architecture and performance improvements make it a superior choice over YOLOv7 for real-time object detection applications.

YOLOv8 on Embedded Platforms

YOLOv8, the latest version of the YOLO object detection system, has been tested against its predecessor, YOLOv7, on the NVIDIA Jetson AGX Orin embedded platform and on a desktop NVIDIA RTX 4070 Ti GPU. The results of these tests show that YOLOv8 delivers strong performance and accuracy on this hardware, making it a viable choice for object detection in embedded systems.

YOLOv8 can be optimized for these devices with NVIDIA TensorRT, which on the Jetson is provided through the JetPack SDK. This lets it achieve low latencies and make full use of the available GPU compute.

With its enhanced architecture and improvements, YOLOv8 offers remarkable speed and accuracy in object detection tasks on embedded platforms. This opens up opportunities for the deployment of YOLOv8 in various applications, including robotics, smart cameras, and autonomous systems.

Take a look at the comparison of YOLOv8 and YOLOv7 performance on embedded platforms:

| Parameter | YOLOv8 | YOLOv7 |
|---|---|---|
| Speed | Faster | Slower |
| Accuracy | Improved | Lower |
| Latency | Low | High |

Note: The above table represents a high-level overview of the performance comparison between YOLOv8 and YOLOv7 on embedded platforms.

Given its impressive performance and compatibility with embedded platforms, YOLOv8 is establishing itself as a go-to solution for real-time object detection in the embedded systems landscape. Its fast and accurate detection capabilities make it well-suited for applications where low latency and reliable performance are crucial.


YOLOv8 and Digital Twins

Combining YOLOv8 with a depth camera, such as the ZED stereo camera, allows for the creation of digital twins that enhance object localization and tracking in 3D space. By feeding YOLOv8's detections into the ZED Software Development Kit (SDK) as custom objects, the system can generate 3D bounding boxes, improving spatial awareness and object detection capabilities.

With the ability to perceive depth information, the ZED stereo camera captures a 3D representation of the environment. When paired with YOLOv8, this depth data can be utilized to accurately localize and track objects in the real world.
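The core geometry behind turning a 2D detection into a 3D position is pinhole back-projection. Given a pixel (u, v), its depth Z and the camera intrinsics (focal lengths fx, fy and principal point cx, cy), the 3D point is X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy. The sketch below applies this to the centre of a detected box. It is not the ZED SDK API. The intrinsic values are made up, and the camera frame (x right, y down, z forward) is an assumed convention.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def box_center_to_3d(box: Box, depth_m: float,
                     fx: float, fy: float,
                     cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project the centre of a 2D box to camera coordinates in metres.

    Assumes a pinhole camera and a frame with x right, y down, z forward.
    """
    u = (box[0] + box[2]) / 2.0  # box centre, pixel column
    v = (box[1] + box[3]) / 2.0  # box centre, pixel row
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 image; object measured 2 m away.
print(box_center_to_3d((370, 240, 470, 340), 2.0,
                       fx=500.0, fy=500.0, cx=320.0, cy=240.0))
# (0.4, 0.2, 2.0)
```

In practice the depth would come from the camera's depth map (for example, the median depth inside the box), and the intrinsics from the camera's calibration.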

The combination of YOLOv8 and the ZED stereo camera introduces a new level of awareness to object detection systems. By leveraging 3D bounding boxes, the precise positioning and orientation of objects can be determined, enabling more reliable and robust detection.
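A 3D bounding box can be built from the 3D points that fall inside a 2D detection. The minimal sketch below keeps only points whose depth is close to the typical depth in the box, a crude way to drop background pixels, and returns the axis-aligned extent of what remains. The 0.5 m tolerance and the function name are illustrative assumptions. An axis-aligned box recovers position and size but not orientation.

```python
import statistics
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, camera frame


def box_3d_from_points(points: List[Point],
                       depth_tol: float = 0.5) -> Tuple[Point, Point]:
    """Return (min_corner, max_corner) of an axis-aligned 3D box.

    `points` are 3D points back-projected from pixels inside a 2D detection.
    Points farther than depth_tol from the (low) median depth are treated
    as background and ignored.
    """
    if not points:
        raise ValueError("no points inside the detection")
    # median_low returns an actual sample, so at least one point is always kept
    ref_z = statistics.median_low(p[2] for p in points)
    kept = [p for p in points if abs(p[2] - ref_z) <= depth_tol]
    xs, ys, zs = zip(*kept)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


# Three points on the object about 2 m away, one background point at 9 m.
pts = [(0.0, 0.0, 2.0), (0.2, 0.1, 2.1), (0.1, 0.3, 2.05), (5.0, 5.0, 9.0)]
print(box_3d_from_points(pts))  # ((0.0, 0.0, 2.0), (0.2, 0.3, 2.1))
```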

To visualize the concept, consider the following example scenario:

Imagine using YOLOv8 with the ZED stereo camera to create a digital twin of a construction site. The camera captures the 3D depth information of the scene, while YOLOv8 processes the data, identifying and localizing various objects. With this information, construction managers can gain real-time insights into the status and location of crucial equipment, improving safety and efficiency on-site.

This integration of YOLOv8 and the ZED stereo camera opens up possibilities for applications including robotics, navigation systems, and augmented reality. By combining the power of YOLOv8's object detection capabilities with the depth-awareness of the ZED stereo camera, digital twins can provide valuable insights and enhance situational awareness in a wide range of industries.

| Benefits of YOLOv8 and Digital Twins |
|---|
| Enhanced object localization and tracking in 3D space |
| Precise and accurate object detection with 3D bounding boxes |
| Improved situational awareness and understanding of object positioning |
| Applications in robotics, navigation systems, and augmented reality |

Conclusion

In conclusion, the comparison between YOLOv8 and YOLOv7 highlights the significant improvements and features offered by YOLOv8. With its faster speed, increased accuracy, and anchor-free architecture, YOLOv8 excels in real-time object detection. This model leverages computer vision, neural networks, deep learning, and image processing techniques to deliver exceptional object recognition capabilities.

YOLOv8's multi-scale prediction and improved backbone network further enhance its object detection performance. The model's ability to handle different datasets and its ease of training make it a versatile tool for various applications. It provides faster and more accurate results, establishing YOLOv8 as a powerful solution in the field of artificial intelligence and computer vision.

By leveraging YOLOv8, developers and researchers can achieve real-time object detection with ease. Its reliable performance and advanced features make object recognition more efficient and precise. As deep learning and image processing continue to advance, YOLOv8 lays the groundwork for further progress in the field and for innovative AI-powered applications.

FAQ

What are YOLOv8 and YOLOv7?

YOLOv8 and YOLOv7 are versions of the popular YOLO (You Only Look Once) object detection system, used for real-time object detection in computer vision applications.

What is the difference between YOLOv8 and YOLOv7?

YOLOv8 offers several key improvements and features compared to YOLOv7, including faster detection speed, improved accuracy in detecting small objects, an anchor-free architecture, multi-scale prediction, and an improved backbone network.

How is YOLOv8 trained?

YOLOv8 training can be customized through the provided configuration options, and the model supports fine-tuning on custom data. A trained model can then be converted to TensorFlow Lite for deployment on various platforms.

What are the challenges in exporting a YOLOv8 model to TensorFlow Lite?

Exporting a YOLOv8 model to TensorFlow Lite is a multi-step process: convert the PyTorch model to ONNX, convert the ONNX model to a TensorFlow SavedModel, and finally convert the SavedModel to a TF Lite model. There is currently no direct path that skips these intermediate formats, and post-conversion testing is crucial to ensure the model's accuracy and functionality.

How does YOLOv8's performance compare to YOLOv7?

YOLOv8 outperforms YOLOv7 in terms of speed and accuracy. It achieves a higher FPS rate and improved mean average precision (mAP) scores, resulting in more efficient and reliable real-time object detection.

How does YOLOv8 perform on embedded platforms?

YOLOv8 has been tested and compared to YOLOv7 on the NVIDIA Jetson AGX Orin embedded platform and on a desktop RTX 4070 Ti GPU. The results show that YOLOv8 performs well in terms of speed and accuracy on this hardware, making it suitable for object detection in embedded systems.

Can YOLOv8 be used with depth cameras?

Yes, YOLOv8 can be combined with a depth camera such as the ZED stereo camera to create digital twins that enable the localization and tracking of objects in 3D space. This integration provides improved object detection capabilities.