Creating Instruction Datasets for LLM Fine-Tuning: Complete Workflow

Large language models (LLMs) are increasingly used to process text, generate content, and automate complex tasks. How well they perform depends largely on the quality of the data used to train them. For fine-tuning, this means building instruction datasets: specialized datasets in which each example pairs a task instruction with the expected response.

Understanding LLM Fine-Tuning and Data Preparation

LLM fine-tuning is the further training of a pre-trained model on a narrower, task-specific dataset to improve its performance on particular tasks. Unlike full training “from scratch”, fine-tuning requires significantly less time and compute while largely preserving the model's general language capabilities.

A key aspect of successful fine-tuning is data preparation. Data quality largely determines how well the model learns the specifics of its tasks and how accurate its answers are. For instruction datasets, preparation includes:

- Defining task types – classification, text generation, question answering, summarization, translation, etc.

- Cleaning and normalization – removing duplicates, correcting grammatical errors, and bringing data to a single format.

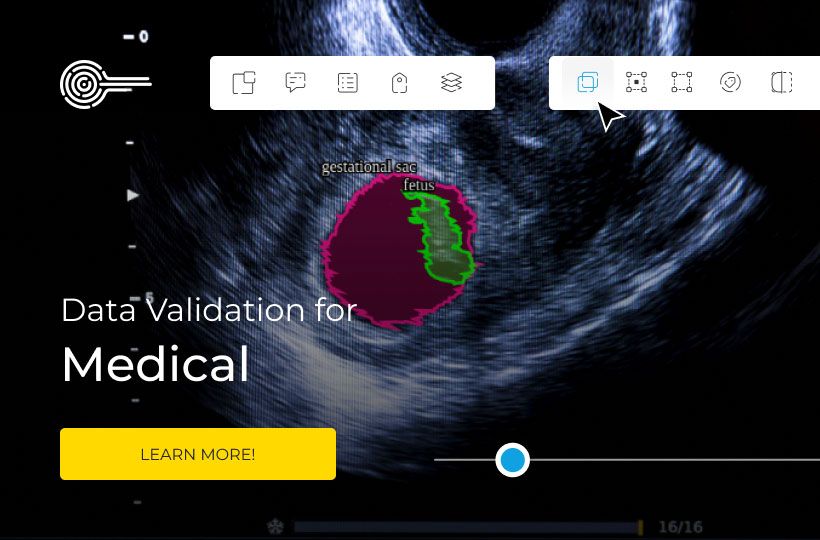

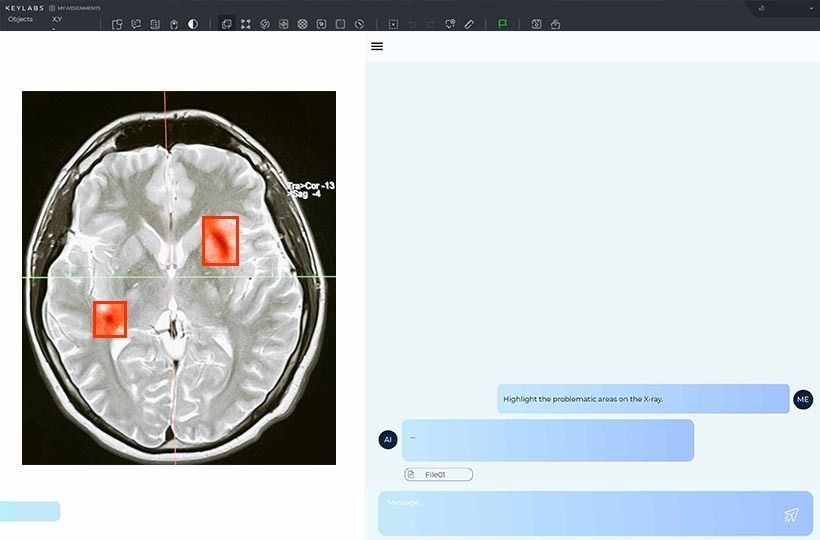

- Data annotation – formulating clear instructions and expected results for each example.

- Formatting for the LLM – structuring examples in JSON, CSV, or another format supported by the chosen fine-tuning platform.
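As a minimal sketch of the formatting step, the snippet below stores each annotated example as one JSON object per line (JSON Lines). The field names `task_type`, `instruction`, `context`, and `response`, and the helpers `make_record` and `to_jsonl`, are illustrative assumptions; the schema a given fine-tuning platform expects may differ.

```python
import json


def make_record(task_type, instruction, response, context=""):
    """Build one instruction example as a plain dictionary.

    The field names are hypothetical; adapt them to the target platform.
    """
    return {
        "task_type": task_type,
        "instruction": instruction.strip(),
        "context": context.strip(),
        "response": response.strip(),
    }


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


records = [
    make_record(
        "summarization",
        "Summarize the text in one sentence.",
        "Fine-tuning adapts a pre-trained model to a narrower task.",
        context="Fine-tuning continues training a pre-trained model on task-specific data.",
    ),
    make_record(
        "classification",
        "Label the sentiment of the review as positive or negative.",
        "positive",
        context="The setup was quick and the results were great.",
    ),
]

jsonl_text = to_jsonl(records)
print(jsonl_text)
```

Keeping one example per line makes the file easy to stream, sample, and diff between dataset versions.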

Data Preprocessing: Extracting Text from Diverse Formats

The first step in preparing an instruction dataset for fine-tuning is to extract text from its various sources and formats. Data for an LLM can come from documents, web pages, PDF files, spreadsheets, JSON or XML files, chat logs, and more. Each format needs its own approach to extract information efficiently and accurately. The main steps include:

- Determining data sources: consider the document types (PDF, DOCX, HTML, Markdown) and their structures (tables, lists, paragraphs).

- Using specialized tools: for PDFs, use pypdf (the maintained successor to PyPDF2) or pdfplumber; for DOCX, use python-docx; for web content, use an HTML parser such as BeautifulSoup.

- Processing structured data: JSON, XML, or CSV may contain nested fields that need to be converted to plain text or to individual instructional examples.

- Removing unwanted elements: strip advertising banners, HTML tags, watermarks, and duplicates that can degrade training quality.

- Text normalization: bring text to a single style (punctuation marks, spaces, character encoding), which simplifies later annotation and formatting.
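The last two steps above can be sketched with the Python standard library alone. This example swaps in `html.parser` for BeautifulSoup so that it runs without extra dependencies; the helper names `html_to_text` and `normalize_text`, and the specific normalization choices (NFKC, straight quotes, collapsed whitespace), are assumptions made for illustration rather than a fixed recipe.

```python
import re
import unicodedata
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes and skips the contents of <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def html_to_text(markup):
    """Strip tags and return the visible text, pieces joined by spaces."""
    parser = _TextExtractor()
    parser.feed(markup)
    parser.close()
    return " ".join(parser.parts)


def normalize_text(text):
    """Apply Unicode NFKC, straighten curly quotes, collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    return re.sub(r"\s+", " ", text).strip()


sample = (
    "<p>Clean&nbsp;data   matters.</p>"
    "<script>track()</script>"
    "<p>\u201cQuoted\u201d text</p>"
)
cleaned = normalize_text(html_to_text(sample))
print(cleaned)  # Clean data matters. "Quoted" text
```

Joining text pieces with a space is a simplification: it can split a word that is interrupted by an inline tag, so a production pipeline would likely treat inline and block elements differently.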

Filtering and Deduplication Techniques for High-Quality Datasets
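Filtering and deduplication typically combine keyword matching, semantic similarity, and hashing to remove irrelevant or repeated examples. The sketch below covers only the keyword and hashing parts: it drops very short examples and examples containing blocked phrases, then removes exact duplicates by hashing a case- and whitespace-insensitive form of each pair. The function names, the `min_chars` threshold, and the blocked phrase are hypothetical; semantic-similarity deduplication would need an embedding model and is not shown.

```python
import hashlib
import re


def fingerprint(text):
    """SHA-256 of the text after lowercasing and collapsing whitespace."""
    canonical = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def filter_and_deduplicate(examples, blocked_keywords=(), min_chars=20):
    """Keep the first occurrence of each example that passes the filters."""
    seen = set()
    kept = []
    for ex in examples:
        text = ex["instruction"] + "\n" + ex["response"]
        if len(text) < min_chars:
            continue  # too short to be a useful training example
        lowered = text.lower()
        if any(k.lower() in lowered for k in blocked_keywords):
            continue  # contains boilerplate or unwanted content
        key = fingerprint(text)
        if key in seen:
            continue  # duplicate up to case and spacing
        seen.add(key)
        kept.append(ex)
    return kept


examples = [
    {"instruction": "Translate 'good morning' to French.", "response": "Bonjour."},
    {"instruction": "translate 'good morning'  to French.", "response": "Bonjour."},
    {"instruction": "Summarize the article.", "response": "Click here to subscribe!"},
    {"instruction": "Hi", "response": "Hello"},
]

kept = filter_and_deduplicate(examples, blocked_keywords=("click here",))
print(len(kept))  # 1
```

Hashing a canonical form catches duplicates that differ only in case or spacing; paraphrased duplicates pass through and are the cases where similarity-based methods help.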

Creating Instruction Formats for Model Fine-Tuning

The quality and structure of instruction-response pairs determine how well a model can learn task specifics and generate relevant, accurate, and consistent responses. In academic and applied contexts, this means that each example should be presented in a standardized format that minimizes ambiguity and allows the model to identify key components of the task, context, and expected response.

Following quality guidelines when generating instruction data keeps the different parts of the dataset consistent, increases its analytical value, and reduces the risk of introducing noise or bias. Strict annotation standards, in turn, require that each instruction carries enough context to understand the task and that each response is clear and logically structured. Together, these practices make model results easier to reproduce and compare across fine-tuning iterations.

When creating formats, special attention should be paid to unifying the presentation of text, including a single syntax, consistent encoding standards, and structured fields for context, instruction, and response. Standardized instruction-response pairs facilitate automated quality checks, implementation of filtering and deduplication procedures, and integration of new data into future dataset generation cycles.
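An automated quality check over structured fields could look like the following sketch. It assumes each example is a dictionary with required `instruction` and `response` strings and an optional `context` string; these names and the `validate_record` helper are illustrative, not a format required by any particular platform.

```python
REQUIRED_FIELDS = ("instruction", "response")
OPTIONAL_FIELDS = ("context",)


def validate_record(record):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    allowed = set(REQUIRED_FIELDS) | set(OPTIONAL_FIELDS)

    # Required fields must be present and contain non-blank strings.
    for name in REQUIRED_FIELDS:
        value = record.get(name)
        if not isinstance(value, str) or not value.strip():
            problems.append(f"missing or empty field: {name}")

    # Optional fields, when present, must still be strings.
    for name in OPTIONAL_FIELDS:
        if name in record and not isinstance(record[name], str):
            problems.append(f"field must be a string: {name}")

    # Unknown fields usually signal a schema drift between data sources.
    for name in sorted(set(record) - allowed):
        problems.append(f"unexpected field: {name}")

    return problems


good = {
    "context": "The meeting was moved from Monday to Wednesday.",
    "instruction": "On which day will the meeting take place?",
    "response": "Wednesday.",
}
bad = {"instruction": "   ", "answer": "42"}

print(validate_record(good))  # []
print(validate_record(bad))
```

Returning a list of problems instead of raising on the first one makes it possible to report every issue in a record during a single pass over the dataset.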

Instruction Fine-Tuning Techniques and Architectural Enhancements

Summary

Creating high-quality instruction-response pairs is the foundation for effective fine-tuning of large language models. The process begins with careful dataset generation: collecting data from various formats, extracting the relevant text, and cleaning and normalizing it. Filtering, deduplication, and adherence to quality guidelines keep examples consistent and diverse, while strict annotation standards help keep instructions and expected responses accurate.

Combining careful dataset generation, standardized instruction-response formats, and established fine-tuning methods lays the groundwork for reliable, reproducible model training in both academic and practical settings.

FAQ

What are instruction-response pairs, and why are they important in LLM fine-tuning?

Instruction-response pairs are structured examples that link a task instruction to the expected output. They serve as the training signal during fine-tuning and are critical for aligning model outputs with quality guidelines.

What is dataset generation in the context of LLM fine-tuning?

Dataset generation is the process of collecting, cleaning, and structuring data into instruction-response pairs. It ensures the model receives high-quality examples that follow annotation standards.

Why are quality guidelines necessary for instruction datasets?

Quality guidelines define consistency, clarity, and reliability in instruction-response pairs. Following them reduces noise, improves model accuracy, and ensures reproducibility across different fine-tuning iterations.

How do annotation standards influence dataset quality?

Annotation standards specify how instructions and responses should be labeled and formatted. Adhering to them ensures the instruction-response pairs are consistent, interpretable, and aligned with quality guidelines.

What techniques are used for filtering and deduplication?

Filtering and deduplication use keyword matching, semantic similarity, and hashing methods to remove irrelevant or repeated examples. This maintains dataset diversity and strengthens the reliability of instruction-response pairs.

Why is text preprocessing important in dataset preparation?

Preprocessing extracts meaningful content from diverse formats like PDF, DOCX, and HTML, normalizes text, and removes noise. It ensures that instruction-response pairs are clean and suitable for fine-tuning.

How do standardized instruction formats support LLM fine-tuning?

Standardized formats provide a consistent structure for instructions and responses, making automated validation and integration easier. This approach enforces annotation standards and aligns with quality guidelines.

What are some architectural enhancements used in instruction fine-tuning?

Parameter-efficient methods such as LoRA and prefix-tuning, along with mixture-of-experts architectures, adapt models to specific tasks while largely preserving general capabilities. RLHF is often used alongside them, although it is a training method rather than an architectural change. All of these depend on high-quality instruction-response pairs to learn efficiently.

How does supervised fine-tuning (SFT) differ from RLHF?

SFT trains the model directly on labeled instruction-response pairs, while RLHF further optimizes the model using human feedback on its outputs, typically in the form of preference judgments. Both methods rely on datasets that follow strict annotation standards.

Why is a data-centric approach important for continuous improvement?

A data-centric approach iteratively refines instruction-response pairs based on model performance. It ensures dataset generation remains aligned with quality guidelines and improves long-term fine-tuning results.