Curation for Pretraining: Annotating Data for Large Foundation Models

Building robust foundation models requires large volumes of carefully selected and properly annotated data. Curation for pretraining is critical because the quality of the input data affects the model's accuracy, generalizability, and efficiency.

Data annotation for such models differs from traditional labeling because foundation models must learn from many sources and types of information. Data is collected from numerous text, image, and multimodal sources and then filtered and structured. The main goal at this stage is to remove low-quality, duplicate, or biased content that could degrade the model's results.

An important aspect is the balance between automated and manual annotation. Automated algorithms can quickly classify large amounts of text or images, identify key categories, and weed out irrelevant content. However, finer-grained work requires human intervention, especially in complex cases where context or cultural sensitivities must be considered.

Understanding Pretraining Dataset Curation

Pretraining a model can be compared to raising a child. If a child hears only false or one-sided stories, their understanding of the world will be distorted. Similarly, if AI is trained on low-quality or biased data, it will generate inaccurate answers. Therefore, data curators use carefully considered strategies to select information that helps develop an accurate, objective, and general-purpose model.

One of the most critical aspects of curation is the selection of relevant sources. Texts can be taken from books, scholarly articles, official documents, blogs, and even social media, but it is essential to balance different types of information. For example, training only on encyclopedia articles can make the model overly formal, while including conversational language improves its ability to understand everyday speech.
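To make the idea of balancing sources concrete, here is a minimal Python sketch of weighted source sampling. The source names, the toy documents, and the 50/30/20 mixture weights are hypothetical placeholders, not recommended values; the point is only that each training example is drawn from a source in proportion to an assigned weight.

```python
import random
from collections import Counter

# Hypothetical source pools; real pools would be large document shards.
SOURCES = {
    "books": ["book passage A", "book passage B"],
    "news": ["news article A", "news article B"],
    "forums": ["forum post A", "forum post B"],
}

# Assumed mixture weights: the target share of examples drawn from each source.
MIXTURE = {"books": 0.5, "news": 0.3, "forums": 0.2}


def sample_mixture(sources, weights, n, seed=0):
    """Pick a source by weight, then a document from that source uniformly at random."""
    rng = random.Random(seed)
    names = list(weights)
    probs = [weights[name] for name in names]
    batch = []
    for _ in range(n):
        source = rng.choices(names, weights=probs, k=1)[0]
        batch.append((source, rng.choice(sources[source])))
    return batch


batch = sample_mixture(SOURCES, MIXTURE, n=10_000)
shares = {name: count / len(batch) for name, count in Counter(s for s, _ in batch).items()}
print(shares)  # each share should land close to its weight in MIXTURE
```

Changing the weights shifts the model's exposure to each register (formal, news, conversational) without changing the underlying pools.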

Another challenge is filtering and cleaning the data. Raw datasets can contain repetitions, spam, and incorrect or toxic content. Automated algorithms help find and filter out such data, but human review is sometimes required, especially for subtle cultural or ethical contexts.
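The automated part of this cleaning step can be sketched as exact deduplication plus a few heuristic quality checks. This is a minimal illustration, assuming simple rules (a minimum word count, a cap on the share of symbol characters, and a cap on how often one word repeats) with hypothetical thresholds; production pipelines typically add language identification, toxicity classifiers, and human review on top.

```python
import hashlib
import re
from collections import Counter


def normalize(text):
    # Lowercase and collapse whitespace so trivial variants hash identically.
    return re.sub(r"\s+", " ", text.lower()).strip()


def passes_quality_checks(text, min_words=5, max_symbol_ratio=0.3, max_repeat_ratio=0.5):
    """Hypothetical heuristic filters; the thresholds are illustrative, not tuned."""
    words = text.lower().split()
    if len(words) < min_words:
        return False
    # Share of characters that are neither letters/digits nor whitespace (markup, spam).
    symbols = sum(1 for ch in text if not ch.isalnum() and not ch.isspace())
    if symbols / len(text) > max_symbol_ratio:
        return False
    # Text dominated by a single repeated word is likely spam.
    top_count = Counter(words).most_common(1)[0][1]
    return top_count / len(words) <= max_repeat_ratio


def clean_corpus(docs):
    seen = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        if passes_quality_checks(doc):
            kept.append(doc)
    return kept


docs = [
    "The mitochondria is the powerhouse of the cell.",
    "the  Mitochondria is the powerhouse of the cell.",  # duplicate after normalization
    "buy buy buy buy buy buy cheap pills",               # repetitive spam
    "$$$ !!! ### @@@ %%% ^^^",                           # mostly symbols
    "Too short.",                                        # below the word minimum
]
print(clean_corpus(docs))  # ['The mitochondria is the powerhouse of the cell.']
```

Hashing the normalized text keeps memory proportional to the number of unique documents rather than their total size.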

Types of Data Used in Pretraining

For large foundation models to become truly "smart," they need vast amounts of data. But AI training is not just about loading random information. The model must receive diverse, structured, high-quality data that helps it understand the world in all its complexity. Let's look at the types of data used for pretraining.

Text data is the basis for language models. It can include:

- Books and academic articles - help the model learn complex vocabulary, logical structures, and scholarly writing style.

- News and blogs - provide insights into current events, colloquialisms, and different points of view.

- Social networks and forums - contain live, informal language, jargon, slang, and memes, which helps the model better understand real-world dialog between people.

- Documents and reports - important for legal, financial, and technical AI applications.

Image and video data are the basis for computer vision and multimodal models. They can include:

- Photos with descriptions - help the model understand what a "red car" or a "sunny day" looks like.

- Video footage - allows the model to analyze how objects move and interact in the real world.

Tabular and structured data are the basis for models that work with numerical information. These can include:

- Databases of financial transactions - for forecasting economic trends.

- Measurements from scientific experiments - for analyzing large amounts of data in research.

- Product and purchase information - to help recommendation algorithms determine what a user might be interested in.

Enhanced data curation capabilities in Keylabs 1.91

The Keylabs 1.91 update introduces advanced data curation capabilities, enhancing the quality of training datasets for artificial intelligence.

Filter by Object Classes and Attributes: Need to find files containing specific objects? This release introduces a new capability to filter your file list based on object classes and their attribute values.

Target Multiple Classes and Attributes: Select one or multiple object classes to see files containing at least one object from your selection. You can further refine your search by specifying attribute values for those classes, ensuring only files with objects that match ALL your criteria are displayed.

Layer Multiple Object Filters: Create complex search queries by adding multiple object filters. Only files containing objects that meet the conditions of EVERY filter will be shown.

Easy Management: Add, remove, or adjust object filters at any time, providing you with the flexibility to fine-tune your search as needed.

New tools help eliminate noise, detect anomalies, and optimize dataset selection, which is crucial for high-precision AI applications. Keylabs continues to refine the annotation process, making it faster and more effective, unlocking new opportunities for researchers and developers in the field of artificial intelligence.
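The object filter semantics described for this release (within one filter: any of the selected classes, with all specified attribute values on the matching object; across filters: every filter must be satisfied by the file) can be modeled in a few lines of Python. This is an illustrative reading of the description, not the Keylabs API: the `Obj` and `ObjectFilter` classes, the `filter_files` function, and the sample file names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Obj:
    """One annotated object in a file (hypothetical data model)."""
    cls: str
    attributes: dict = field(default_factory=dict)


@dataclass
class ObjectFilter:
    classes: set                                    # any of these classes may match
    attributes: dict = field(default_factory=dict)  # every listed value must match

    def matches_object(self, obj):
        if obj.cls not in self.classes:
            return False
        return all(obj.attributes.get(k) == v for k, v in self.attributes.items())

    def matches_file(self, objects):
        # A file satisfies a filter if at least one of its objects matches it.
        return any(self.matches_object(o) for o in objects)


def filter_files(files, filters):
    # Only files that satisfy EVERY filter are shown.
    return [name for name, objects in files.items()
            if all(f.matches_file(objects) for f in filters)]


files = {
    "frame_001.jpg": [Obj("car", {"color": "red"}), Obj("pedestrian")],
    "frame_002.jpg": [Obj("car", {"color": "blue"}), Obj("pedestrian")],
    "frame_003.jpg": [Obj("truck", {"color": "red"})],
    "frame_004.jpg": [Obj("truck", {"color": "red"}), Obj("pedestrian")],
}
filters = [
    ObjectFilter({"car", "truck"}, {"color": "red"}),  # a red car or a red truck
    ObjectFilter({"pedestrian"}),                      # and at least one pedestrian
]
print(filter_files(files, filters))  # ['frame_001.jpg', 'frame_004.jpg']
```

Classes inside one filter combine with OR, while separate filters combine with AND, which is what lets layered filters narrow a large file list quickly.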

Challenges in Dataset Curation

One of the biggest challenges is collecting high-quality and balanced data. Training large models requires terabytes of information, and this data must be diverse and relevant. For example, if AI is trained primarily on academic articles, it may be able to understand scientific language perfectly but not recognize colloquialisms or slang. On the other hand, if it receives too much informal content, its answers may lose accuracy and reliability.

Another serious problem is data bias. A model trained on a one-sided dataset can start reproducing or reinforcing stereotypes. For example, AI may produce distorted results if a dataset contains news primarily about a particular country or group of people. To avoid this, curators should carefully analyze the composition of the dataset and apply balancing methods, such as adding varied sources and points of view.
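Analyzing composition can start with something as simple as counting how records are distributed over a metadata field. The sketch below assumes each record carries a hypothetical `region` field and flags any group above an illustrative 40% share. It also computes inverse-share sampling weights, a reweighting technique swapped in here as one possible complement to adding new sources.

```python
from collections import Counter


def composition_report(records, key, max_share=0.4):
    """Return each group's share of the dataset and the groups above max_share."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    shares = {group: count / total for group, count in counts.items()}
    overrepresented = sorted(g for g, s in shares.items() if s > max_share)
    return shares, overrepresented


def balancing_weights(shares):
    """Per-group sampling weights that would make every group contribute equally."""
    target = 1 / len(shares)
    return {group: target / share for group, share in shares.items()}


records = [{"region": "US"}] * 6 + [{"region": "EU"}] * 2 + [{"region": "Asia"}] * 2
shares, flagged = composition_report(records, key="region")
print(shares)    # US holds 60% of the records
print(flagged)   # ['US']
print(balancing_weights(shares))
```

Reweighting only rescales what is already in the dataset; it cannot add perspectives that are missing entirely, which is why adding new sources remains the primary fix.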

Data cleansing is another challenging task. Filtering out harmful content requires detecting spam, toxic statements, misinformation, and irrelevant data. Algorithms can filter out some of this content automatically, but complex cases, such as sarcasm or hidden bias, often require manual review.

In addition, there are technical challenges related to data size and processing. The training datasets of modern AI models are so large that they must be stored, structured, and processed efficiently. This requires substantial computing resources and well-designed algorithms.

Finally, ethics and privacy remain essential. Because a huge amount of data is collected from open sources, confidential or personal information must be kept out of training sets. AI should not learn from private data without permission, so curators use specialized anonymization methods to protect users.
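A very simple form of anonymization is pattern-based masking of obvious identifiers before text enters the training set. The sketch below assumes that emails and phone numbers are the only personal data of interest and uses hypothetical regular expressions for them; it illustrates the idea of masking, not a complete anonymization method.

```python
import re

# Illustrative patterns only: real PII detection needs far broader coverage
# (names, addresses, ID numbers) and usually a trained model as well.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask_pii(text):
    """Replace each match with a placeholder tag such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(mask_pii("Contact jane.doe@example.com or +1 (555) 123-4567 today."))
# Contact [EMAIL] or [PHONE] today.
```

Replacing identifiers with typed placeholders, rather than deleting them, keeps sentences grammatical, so the model still learns how such text is structured.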

Defining Clear Objectives

Clearly defined goals are a kind of "road sign" for the model, guiding its development in the right direction. For example, if a system is designed to recognize objects in images automatically, it needs to learn to distinguish between thousands of categories, from animals to road signs. But does it require information about background color or surface texture? If this is not determined at the beginning, the model can waste resources on unimportant details, which reduces its efficiency.

Another important aspect is the balance between generalization and specialization. Some models, such as large language systems, need to handle a wide variety of texts, from scientific articles to colloquial slang. Others, however, focus on narrow tasks, such as recognizing medical images. If the goals are not clearly defined, the model may become either too specialized or too general, reducing its accuracy.

In addition, it is important to consider ethical and practical aspects. For example, if AI is used to analyze financial risks or make medical decisions, even a minimal error can have serious consequences. In such cases, training goals include not only maximizing accuracy but also mechanisms for verifying and explaining the results.

The Annotation Process Explained

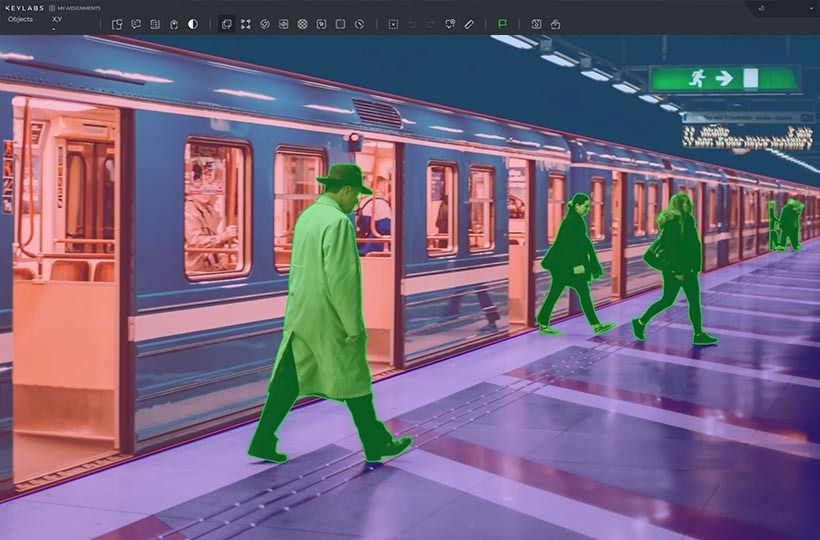

The annotation process begins with data collection: texts, images, videos, or 3D scans. Annotators then add metadata: they label objects in images, mark up texts, or track objects in videos. For LiDAR data used in autonomous vehicles, annotation includes outlining pedestrians, cars, roads, and other elements of the environment.

For textual data, annotation can mean identifying emotions in a sentence, marking parts of speech, or identifying key topics. For example, to teach a chatbot to understand user queries, texts are categorized into questions about the weather, finance, technical support, etc.

There are several levels of annotation accuracy. For example, object recognition in an image can use simple rectangular boxes (bounding boxes) or more complex contours (segmentation masks) to more accurately determine an object's shape. In video, annotation can include tracking the movement of objects over time to train models to predict the behavior of pedestrians or other road users.
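The difference in precision between the two formats can be shown with a toy example. Assuming an object is stored as a binary segmentation mask (a grid of 0s and 1s), the sketch below derives the tightest bounding box around it and compares areas: every box pixel that is not a mask pixel is background that the box label includes.

```python
def mask_to_bbox(mask):
    """Tight bounding box (x_min, y_min, x_max, y_max), inclusive, of a binary mask."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    if not rows:
        raise ValueError("mask contains no object pixels")
    return min(cols), min(rows), max(cols), max(rows)


def box_vs_mask_area(mask):
    """Return (pixels covered by the mask, pixels covered by its bounding box)."""
    x0, y0, x1, y1 = mask_to_bbox(mask)
    box_area = (x1 - x0 + 1) * (y1 - y0 + 1)
    mask_area = sum(sum(row) for row in mask)
    return mask_area, box_area


# A triangular object on a 4x5 pixel grid (1 = object pixel).
mask = [
    [0, 1, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 1],
]
print(mask_to_bbox(mask))      # (1, 0, 4, 3)
print(box_vs_mask_area(mask))  # (10, 16)
```

Here 6 of the 16 box pixels are background, which is the kind of imprecision segmentation masks avoid at a higher labeling cost.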

Automation partially simplifies this process. AI can label data independently, and humans check and correct its work. This significantly speeds up the process, especially for vast amounts of data, as in the case of training autonomous systems.
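One common way to organize this human-in-the-loop workflow, assumed here rather than described in the text, is to route model pre-labels by confidence. In the sketch below, the `PreLabel` record, the 0.9 threshold, and the 5% audit rate are hypothetical choices.

```python
import random
from dataclasses import dataclass


@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route(prelabels, accept_threshold=0.9, audit_rate=0.05, seed=0):
    """Split model pre-labels into auto-accepted ones and ones sent to human review.

    Low-confidence labels always go to a person; a random audit_rate share of
    high-confidence labels is also reviewed so systematic model errors get caught.
    """
    rng = random.Random(seed)
    accepted, review = [], []
    for p in prelabels:
        if p.confidence >= accept_threshold and rng.random() >= audit_rate:
            accepted.append(p)
        else:
            review.append(p)
    return accepted, review


prelabels = [
    PreLabel("img_1", "pedestrian", 0.97),
    PreLabel("img_2", "cyclist", 0.55),
    PreLabel("img_3", "car", 0.92),
    PreLabel("img_4", "pedestrian", 0.41),
]
accepted, review = route(prelabels, audit_rate=0.0)
print([p.item_id for p in accepted])  # ['img_1', 'img_3']
print([p.item_id for p in review])    # ['img_2', 'img_4']
```

Raising the threshold or the audit rate trades annotation speed for more human oversight.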

The quality of the annotation determines how well AI will recognize the world around it. Incorrect or inaccurate labels can lead to serious errors—for example, a car autopilot may not recognize a pedestrian, or a medical model may miss important symptoms in an image.
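One widely used way to check annotation quality, assumed here rather than taken from this article, is to have two annotators label the same items and measure their agreement with Cohen's kappa: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The labels below are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(count_a[c] * count_b[c] for c in count_a.keys() | count_b.keys()) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["pedestrian", "car", "car", "pedestrian", "car", "car"]
annotator_2 = ["pedestrian", "car", "pedestrian", "pedestrian", "car", "car"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.667
```

The annotators agree on 5 of 6 items (83%), but half of that agreement would be expected by chance, so kappa is lower; low scores point to unclear labeling guidelines or ambiguous classes.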

Transparency and Accountability

Transparency means that AI's learning and decision-making processes are not a black box. This includes explaining where the training data comes from, the algorithms used, and how the model makes predictions. If companies are open about data sources and processing methods, it helps users and developers better understand the technology's capabilities and limitations.

Accountability means that developers and organizations are responsible for the actions of their AI systems. For example, if a neural network makes decisions in the financial or medical sphere, it is essential to know who is responsible for possible errors or biases in conclusions. Some countries are already introducing laws obliging companies to explain how AI works and ensure that it does not discriminate against certain groups of people.

To increase transparency, researchers are developing "explainable AI" (XAI): systems that can explain why they made certain choices. For example, if a model decides whether to grant a person a loan, it can show which factors influenced the decision instead of just giving a yes or no answer.
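For a linear scoring model, this kind of explanation is exact: the score is a bias plus a sum of weight-times-feature terms, so each term shows how much a factor pushed the decision up or down. The weights, feature names, and threshold below are hypothetical, and more complex models need dedicated attribution methods rather than this direct decomposition.

```python
# Hypothetical weights of an already-trained linear credit-scoring model.
WEIGHTS = {"income_thousands": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0


def explain_decision(applicant, threshold=0.0):
    """Return the decision, the score, and each feature's contribution, largest first."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    decision = "approve" if score >= threshold else "decline"
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return decision, score, ranked


decision, score, ranked = explain_decision(
    {"income_thousands": 60, "debt_ratio": 0.35, "late_payments": 1}
)
print(decision, round(score, 2))  # decline -0.45
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
```

Instead of a bare "decline," the applicant sees that income helped while the debt ratio and a late payment together outweighed it.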

The Future of Dataset Curation

One of the main trends will be intelligent automation. Algorithms can already clean data of duplicates, spam, or false information. In the future, they may become even better at identifying quality content, for example, by detecting fakes, assessing bias in texts, or selecting materials based on their reliability.
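Exact hashing misses documents that differ by only a few words. A common extension, sketched here under illustrative assumptions (3-word shingles, a 0.7 similarity threshold), compares documents by the Jaccard similarity of their word shingles.

```python
def shingles(text, k=3):
    """Set of overlapping k-word sequences ("shingles") in a text."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a, b):
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def near_duplicate_pairs(docs, threshold=0.7, k=3):
    """Compare every pair of documents; O(n^2), so only suitable for small sets."""
    sets = [shingles(doc, k) for doc in docs]
    return [
        (i, j)
        for i in range(len(docs))
        for j in range(i + 1, len(docs))
        if jaccard(sets[i], sets[j]) >= threshold
    ]


docs = [
    "the quick brown fox jumps over the lazy dog",
    "the quick brown fox jumps over the lazy cat",
    "pretraining data should be deduplicated before training",
]
print(near_duplicate_pairs(docs))  # [(0, 1)]
```

The first two documents share 6 of their 8 distinct shingles (similarity 0.75). The pairwise loop is quadratic; at pretraining scale, techniques such as MinHash with locality-sensitive hashing approximate the same comparison efficiently.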

Another significant change is the growing personalization of datasets. Today's models are trained on general-purpose datasets, but in the future, adaptive models may appear that can be tailored to specific domains. For example, the same neural network could receive specialized training for medicine, law, or creative work, supplementing its general knowledge according to the context.

Ethical curation is also worth mentioning. The more models interact with the world, the more critical it is to control what data they learn from. Future curation systems will pay more attention to eliminating bias, ensuring transparency of sources, and adhering to ethical standards. This will make AI not only smart but also fair.

A breakthrough in generative datasets is also possible. Imagine AI creating new training data, such as texts, images, or even simulations of real-life situations, to improve its own learning. Such models could learn from synthetic data, especially when collecting real data is difficult or limited (for example, in biomedical research or robotics).

The future of dataset curation involves automation, an ethical approach, and adaptive learning. The more accurately we teach machines to select and analyze information, the smarter, more useful, and safer AI will become. And who knows, maybe in the future, neural networks will take part in their own training, selecting the best sources just as humans do now.

Summary

As AI continues to evolve, dataset curation becomes more critical than ever. The quality, diversity, and integrity of the data we provide to AI models directly influence their accuracy, fairness, and adaptability. Looking ahead, the future of dataset curation will be shaped by intelligent automation, ethical considerations, and the emergence of synthetic datasets that enhance model training in ways previously unimaginable.

Advancements in AI-driven filtering and validation will make dataset selection more precise, reducing biases and improving the reliability of AI-generated insights. Personalized dataset curation will enable models to specialize in various domains while maintaining a strong foundational understanding of the world. Meanwhile, developing generative datasets will open new possibilities for training AI in fields where real-world data is limited or difficult to obtain.

Ultimately, dataset curation is not just about collecting and annotating information - it is about shaping future AI systems' knowledge and reasoning abilities. By refining this process, we ensure that AI becomes more intelligent, ethical, and capable of assisting humanity across diverse industries and challenges. As we step into the next era of AI development, one thing remains clear: the more intelligently we curate, the smarter AI will become.

FAQ

What is pretraining dataset curation?

Pretraining dataset curation is the process of organizing, cleaning, and enriching data before it is used for AI model training. It ensures that the data is high-quality and diverse and meets the AI model's specific needs.

Why is dataset curation important in AI development?

Careful curation is key to building accurate and reliable AI models. Mature curation practices, such as automated cleaning and annotation, significantly improve model performance.

What does data annotation involve?

Data annotation labels data for AI systems, giving them a clear reference to learn from. This can involve tagging images, transcribing audio, or categorizing text.

What are the benefits of proper annotation?

Proper annotation boosts AI's accuracy in interpreting data. Well-annotated datasets lead to efficient training and finely tuned models. This reduces time to market and improves performance.

What are the challenges in dataset curation?

Challenges include ensuring data quality, overcoming biases, and scaling curation processes. High-quality data must be error-free and representative, which requires rigorous validation and scalable solutions.

How can we define clear objectives for effective dataset curation?

Clear objectives start with understanding the AI system's end goals. These could be language understanding, image recognition, or predictive modeling. Aligning the dataset with these objectives ensures it meets the AI's needs.

What strategies can enhance the efficiency of dataset curation?

Automation tools significantly improve curation efficiency. Automated data cleaning, annotation, and quality checks ensure datasets meet AI modeling standards efficiently.

What ethical considerations are involved in dataset curation?

Ethical considerations involve transparency and accountability. Documenting and communicating curation processes is essential. Sensitive information must be handled carefully, and data protection laws and ethical standards must be followed.

Can you provide examples of successful implementations in dataset curation?

Successful implementations showcase rigorous data quality checks and innovative annotation techniques. Scalable curation practices are also highlighted. These case studies offer practical insights and lessons for refining curation strategies.

What are the emerging trends in AI and data annotation?

Trends include the increased use of automated tools leveraging AI. These tools predict and adapt to curation needs dynamically. Future trends suggest more integration with real-time AI applications, requiring agile systems.

What are the predictions for the evolving landscape of dataset curation?

The evolving landscape will see a growing demand for sophisticated, well-curated datasets. As AI becomes more prevalent, innovative curation methodologies will be necessary to meet these demands effectively.