Data Annotation for Self-Driving

AI-powered cars now process more data than the average human generates in a year. They rely on carefully structured information to navigate city streets and operate safely in dynamic environments.

High-quality training data determines whether machines can safely interpret stop signs obscured by snow or distinguish between pedestrians and mailboxes at 65 mph. Every sensor-equipped vehicle generates a significant amount of raw input data every second, including camera images, LiDAR scans, and radar signals that require precise categorization.

Teams use labeling techniques to teach artificial systems to recognize lane markings during heavy rain or predict cyclists at intersections.

Quick Take

- Every second of operation produces a significant amount of sensor input that must be processed.

- Accurate labeling allows for object recognition in all weather conditions.

- Raw sensor data becomes usable for decision-making through systematic categorization.

- Current systems use 15-20 external sensors, requiring coordinated analysis.

- Safety-critical responses rely on machine learning models trained on validated datasets.

What is data annotation, and why is it important?

Data annotation is the process of labeling, classifying, or describing data so that it is structured and usable by artificial intelligence and machine learning algorithms. It involves adding information to the data, such as labeling objects in images, identifying emotions in text, or recognizing sounds in audio recordings. Good annotation matters because models are trained on these datasets, and the accuracy of AI depends on the correctness and completeness of the labels. Annotation errors lead to incorrect predictions or decisions, which is especially costly in medicine, security, and autonomous transportation.

The Role of Autonomous Vehicle Annotation in Developing Self-Driving Cars

Raw sensor information becomes understandable through systematic organization. Modern autonomous driving systems depend on categorized inputs to interpret unpredictable environments.

Understanding the Core Processes

Designing annotation workflows relies on three fundamentals:

- Objective alignment. Urban navigation needs differ from highway scenarios, so labeling priorities change with the use case.

- Multi-sensor synchronization. Coordinating camera, LiDAR, and radar data requires timestamp-level accuracy.

- Iterative validation. Each labeled frame undergoes a three-step validation process before training a model.
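As a rough illustration of the multi-sensor synchronization point above, the sketch below pairs each camera frame with the nearest LiDAR sweep by timestamp. The function name `match_nearest`, the sample timestamps, and the 10 ms tolerance are assumptions chosen for this example, not values from any particular sensor stack.

```python
from bisect import bisect_left


def match_nearest(camera_ts, lidar_ts, tolerance):
    """Pair each camera timestamp with the nearest LiDAR timestamp.

    Timestamps are in seconds and lidar_ts must be sorted ascending.
    Pairs further apart than `tolerance` are dropped rather than guessed.
    """
    pairs = []
    for t in camera_ts:
        i = bisect_left(lidar_ts, t)
        # Candidates: the sweep just before and just after the camera frame.
        candidates = lidar_ts[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda s: abs(s - t))
        if abs(nearest - t) <= tolerance:
            pairs.append((t, nearest))
    return pairs


# Hypothetical timestamps: camera at roughly 30 Hz, LiDAR at 10 Hz.
camera = [0.000, 0.033, 0.067, 0.100]
lidar = [0.000, 0.100]
pairs = match_nearest(camera, lidar, tolerance=0.010)
print(pairs)  # [(0.0, 0.0), (0.1, 0.1)]
```

Frames without a close enough sweep are left unpaired, so annotators never label a camera image against stale 3D data.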

The annotation process involves image segmentation, object path tracing, safety zone identification, and labeling of dynamic and static elements of the environment. High-quality labeling affects the accuracy of computer vision models that make decisions during driving. Also, annotation helps systems adapt to road and weather conditions, increasing autonomous vehicle safety. Therefore, proper annotation is the basis for training and testing autopilot systems, accelerating the development of driverless driving technologies and their application in commercial vehicles.

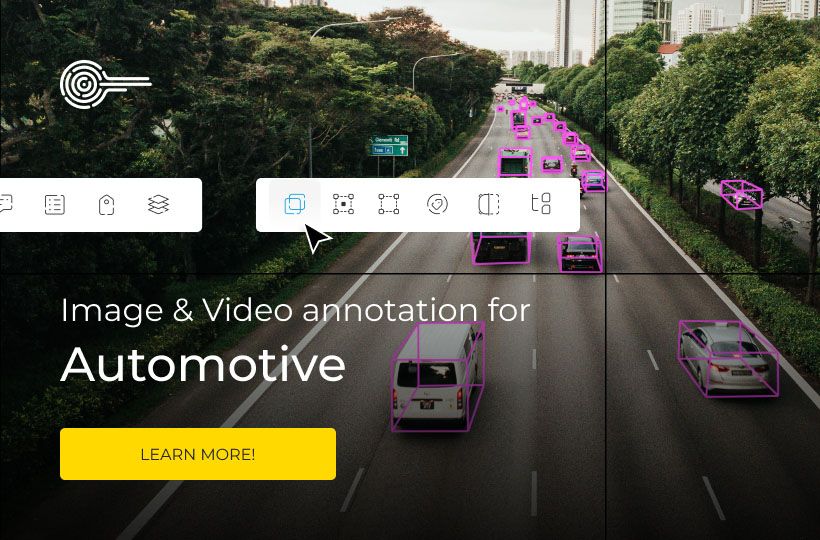

Annotation Methods and Tools for Autonomous Vehicles

Four primary methods are effective for mapping the environment. Rectangular bounding boxes identify cars and signs in flat images. 3D cuboids add depth measurements for spatial orientation, which is important for estimating distances in LiDAR annotation workflows.
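To make the difference between the first two methods concrete, here is a minimal sketch of how a 2D box and a 3D cuboid label might be stored. The class names, field names, and coordinate conventions are assumptions for illustration; real annotation formats vary.

```python
import math
from dataclasses import dataclass


@dataclass
class Box2D:
    """Axis-aligned rectangle in image pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass
class Cuboid3D:
    """Box in the sensor frame: center and size in meters, yaw in radians."""
    label: str
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw: float

    def distance_from_sensor(self) -> float:
        # Straight-line distance from the sensor origin to the cuboid center.
        return math.sqrt(self.cx ** 2 + self.cy ** 2 + self.cz ** 2)


sign = Box2D("stop_sign", 100.0, 50.0, 140.0, 90.0)
car = Cuboid3D("car", 3.0, 4.0, 0.0, 4.5, 1.8, 1.5, 0.0)
print(sign.area())                 # 1600.0
print(car.distance_from_sensor())  # 5.0
```

The 2D box only says where an object appears in the image, while the cuboid carries position, size, and heading, which is what makes distance estimation possible.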

Polygonal annotation traces precise contours to mark irregular shapes. A more detailed approach is semantic segmentation, which classifies each pixel.
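The following sketch illustrates both ideas under simple assumptions: a polygon stored as a list of `(x, y)` vertices, and a segmentation mask stored as a grid of class names. The helper names and sample data are hypothetical.

```python
from collections import Counter


def polygon_area(points):
    """Area of a simple polygon [(x, y), ...] via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def class_pixel_counts(mask):
    """Count how many pixels of a segmentation mask belong to each class."""
    return Counter(pixel for row in mask for pixel in row)


# A triangular contour covers half the area of its 4 x 3 bounding box.
triangle = [(0, 0), (4, 0), (0, 3)]
print(polygon_area(triangle))  # 6.0

# A tiny 2 x 3 mask in which every pixel carries a class label.
mask = [
    ["road", "road", "car"],
    ["road", "pedestrian", "car"],
]
counts = class_pixel_counts(mask)
print(counts["road"], counts["car"], counts["pedestrian"])  # 3 2 1
```

The triangle shows why polygons suit irregular shapes: a bounding box around the same object would include as much background as object.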

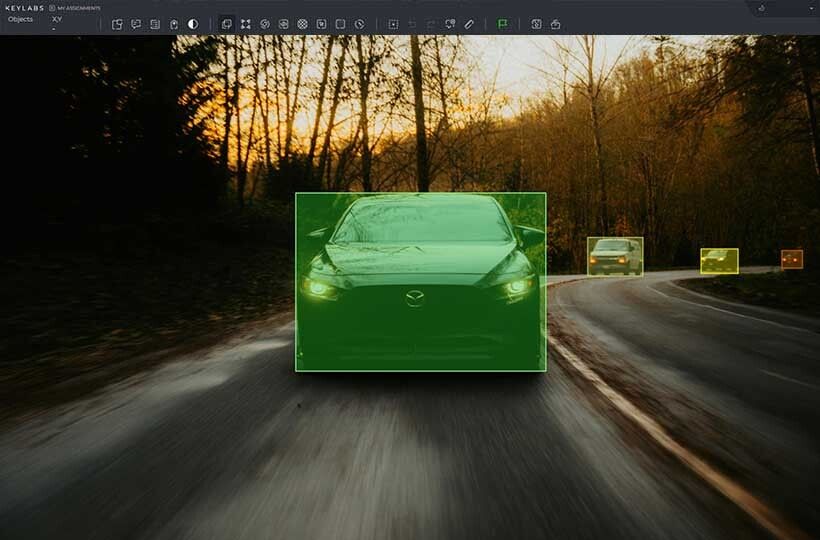

Choosing the Right Marking Tools

When choosing a platform or software, check that it supports the annotation types you need, including 2D and 3D markup, bounding boxes, polygonal segmentation, keypoints, and semantic segmentation. These are necessary for road scene labeling and for recognizing road infrastructure, vehicles, and pedestrians. The Keylabs platform has all the above features and combines AI-powered labeling with human quality control. Our tools also offer flexible settings for complying with security standards and analytics for tracking performance and annotation effectiveness.

Implementing video annotation and advanced labeling methods

Dynamic environments require intelligent labeling approaches. Video annotation teaches systems how objects move and interact over time, which reflects real-world traffic annotation needs better than static images do.

Video annotation strategies for complex environments

Video annotation involves breaking footage down into individual frames for accurate labeling. Each second of video can contain 30 or more frames, all of which require consistent tags. After initial human input, machine learning helps track elements across the sequence.
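One common way to avoid labeling every frame by hand is to annotate keyframes and fill in the frames between them. The sketch below uses plain linear interpolation as a simple stand-in for the machine learning tracking described above. The function name, box format `(x_min, y_min, x_max, y_max)`, and sample values are assumptions for illustration.

```python
def interpolate_boxes(start_frame, start_box, end_frame, end_box):
    """Linearly interpolate boxes between two human-labeled keyframes.

    Boxes are (x_min, y_min, x_max, y_max) tuples. Returns a dict mapping
    every frame index from start_frame to end_frame to a box.
    """
    if end_frame <= start_frame:
        raise ValueError("end_frame must come after start_frame")
    span = end_frame - start_frame
    result = {}
    for frame in range(start_frame, end_frame + 1):
        t = (frame - start_frame) / span  # 0.0 at the first keyframe, 1.0 at the last
        result[frame] = tuple(a + t * (b - a) for a, b in zip(start_box, end_box))
    return result


# Two keyframes one second apart at an assumed 30 frames per second.
boxes = interpolate_boxes(0, (10.0, 20.0, 50.0, 60.0), 30, (40.0, 20.0, 80.0, 60.0))
print(len(boxes))  # 31
print(boxes[15])   # (25.0, 20.0, 65.0, 60.0)
```

Linear interpolation only holds for smooth motion, so an annotator would still review the in-between frames and add keyframes wherever the object turns, stops, or becomes occluded.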

Improved object detection and tracking

Advanced algorithms are trained on temporal data to improve accuracy. Systems trained on video input detect obstacles faster than models trained only on static images. Improvements include:

- Better recognition of partially obscured objects.

- Smooth tracking in crowded areas.

- Adaptation to changes in lighting.

This approach prepares AI for the unpredictable real world and reduces labeling time.

Quality control, team training, and scaling annotation processes

Annotation quality is achieved through multi-level checks, including automated validation, manual spot audits, and accuracy metrics that help detect and correct errors. Key aspects of 3D verification include:

- Initial accuracy checks based on sensor data.

- Assessment of inter-team consistency.

- Final approval by industry experts.
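A consistency check like the one listed above can be approximated by comparing the boxes two annotators drew for the same objects. This sketch uses intersection over union (IoU) and flags objects that fall below a threshold. The 0.5 threshold, the object IDs, and the function names are assumptions for this example.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_disagreements(labels_a, labels_b, threshold=0.5):
    """Return object IDs that one annotator missed or boxed very differently."""
    flagged = []
    for obj_id in sorted(set(labels_a) | set(labels_b)):
        if obj_id not in labels_a or obj_id not in labels_b:
            flagged.append(obj_id)
        elif iou(labels_a[obj_id], labels_b[obj_id]) < threshold:
            flagged.append(obj_id)
    return flagged


# Hypothetical labels from two annotators for the same frame.
annotator_a = {"car_1": (0.0, 0.0, 10.0, 10.0), "ped_1": (20.0, 20.0, 24.0, 30.0)}
annotator_b = {"car_1": (5.0, 0.0, 15.0, 10.0), "ped_1": (20.0, 20.0, 24.0, 30.0),
               "sign_1": (40.0, 5.0, 44.0, 9.0)}
flagged = flag_disagreements(annotator_a, annotator_b)
print(flagged)  # ['car_1', 'sign_1']
```

Flagged objects would then go to the expert approval stage rather than straight into the training set.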

To maintain consistently high performance, teams need systematic training that covers basic tool skills, project specifics, annotation standards, and data security requirements. Regular training, simulation tasks, and updates to the annotation manual increase the competence of the annotation team and reduce the number of errors. A quality annotator training process includes:

- Practical exercises on tool mastery.

- Scenario-based decision trees.

- Real-time feedback during practical annotation.

Scaling is possible through automation of routine tasks, semi-automatic markup based on AI models, a clear distribution of roles in the team, and a flexible infrastructure. This makes it possible to onboard new specialists quickly and integrate additional tools without stopping the main workflows. The result is stable performance, flexibility, and the ability to process large volumes of data with high accuracy.
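Semi-automatic markup is often organized as a routing step: a model proposes labels, and only the uncertain ones go to human annotators. The sketch below shows one possible version of that split. The dictionary fields, the `route_prelabels` name, and the 0.9 confidence threshold are assumptions for illustration and would need tuning per project.

```python
def route_prelabels(prelabels, auto_accept=0.9):
    """Split model-proposed labels into auto-accepted and human-review queues."""
    accepted, review = [], []
    for item in prelabels:
        if item["confidence"] >= auto_accept:
            accepted.append(item)
        else:
            review.append(item)
    return accepted, review


# Hypothetical model output for three objects in one frame.
prelabels = [
    {"id": "car_1", "label": "car", "confidence": 0.97},
    {"id": "ped_1", "label": "pedestrian", "confidence": 0.62},
    {"id": "sign_1", "label": "stop_sign", "confidence": 0.91},
]
accepted, review = route_prelabels(prelabels)
print([p["id"] for p in accepted])  # ['car_1', 'sign_1']
print([p["id"] for p in review])    # ['ped_1']
```

Even auto-accepted labels are usually sampled for spot audits, so the threshold controls workload rather than replacing quality control.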

FAQ

How does data labeling improve machine learning models for autonomous driving systems?

Data labeling supplies models with accurate training examples, allowing them to recognize objects, traffic situations, and behavior patterns.

Why is semantic segmentation important for perceptual systems in autonomous vehicles?

Semantic segmentation allows autonomous driving systems to accurately identify and classify every pixel in an image, distinguishing between roads, pedestrians, vehicles, and other objects. This provides a deep understanding of the scene and improves the safety and accuracy of the car’s decision-making.

How do teams handle edge cases, such as rare traffic scenarios, during annotation?

Teams create detailed instructions and examples for each type of edge case to ensure consistent labeling. For complex or ambiguous situations, they rely on group discussions and additional validation by senior experts.

What quality checks ensure the consistency of labeled datasets for autonomous systems?

Multi-level checks, including automated markup validation and selective manual auditing, ensure the consistency of labeled datasets for autonomous systems. Standardized instructions and accuracy metrics also reduce variability between annotators.