Ethical Considerations in Deploying YOLOv7: Addressing Bias and Privacy Concerns

In the first quarter of 2023, product recalls in the US due to manufacturing defects rose by 34%, affecting 83.3 million units. This figure highlights the scale of potential quality-control problems and strengthens the case for solutions such as YOLOv7, an advanced object detection system that can reduce defects through more accurate inspection. However, deploying YOLOv7 raises questions that go beyond technical capability, including privacy and the broader ethics of AI deployment.

YOLOv7 has performed well in sectors such as industrial manufacturing. There it can reduce human error, which is estimated to account for 20% to 30% of inspection inaccuracies. Its implementation nonetheless raises serious ethical questions. Dataset bias is one of them: in the GC10-DET dataset, for example, nearly 30% of images contain multiple classes. Uneven or skewed training data of this kind must be handled within a framework that keeps AI applications equitable and transparent. Ethical AI deployment therefore matters for operational efficiency and also for earning trust in AI technologies.

Privacy is another dimension that calls for care, particularly as data collection and analysis grow more sophisticated. The ethics of YOLOv7 raise a broader question: how can such powerful tools be used without infringing on individual privacy and integrity? Balancing innovation with ethical responsibility requires thorough analysis and a careful approach to AI deployment.

Key Takeaways

- Rising manufacturing defects make tools like YOLOv7 attractive, and their use needs robust ethical safeguards.

- Human error in inspections warrants the responsible use of YOLOv7 for greater accuracy.

- Bias in AI necessitates ethical AI deployment to ensure fairness and transparency.

- Privacy concerns in AI call for careful data handling.

- YOLOv7 embodies the potential for operational excellence balanced with ethical obligation.

- Engagement and dialogue among stakeholders are key to navigating the ethics of AI technologies.

Understanding the Impact of Bias in Object Detection

Artificial intelligence offers transformative possibilities for many applications, particularly object detection models such as YOLO (You Only Look Once). However, algorithmic bias in object detection can skew outcomes, undermine fairness, and lead to discrimination.

Defining Bias within AI and YOLOv7 Applications

In object detection, bias can take several forms. Most commonly, it appears when models trained on non-representative data fail to identify or categorize objects accurately across diverse environments and populations. Such biases can lead to discrimination that disproportionately affects certain groups based on race, ethnicity, or socioeconomic status.

Analyzing Historical Data on Bias Prevalence in Algorithms

Past AI applications reveal a troubling pattern. Algorithmic bias is embedded in foundational datasets such as ImageNet, which are used to train image classification models. These datasets often lack diversity. Detectors such as the single-shot SSD and the two-stage Faster R-CNN commonly use backbones pretrained on them, so those biases carry over into real-world applications.
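
A practical first step is to measure how well each subgroup or condition is represented in a training set before any model is trained. The sketch below makes two assumptions. First, each image has been tagged with a hypothetical subgroup label, such as a lighting condition or region. Second, the 10% threshold is an illustrative choice; it is not a YOLOv7 setting or a recognized standard. The function reports each group's share and flags groups that fall below the threshold.

```python
from collections import Counter


def representation_report(group_labels, min_share=0.10):
    """Return each group's share of the dataset and the groups below min_share.

    group_labels: one hypothetical subgroup tag per training image,
    e.g. "daylight" or "night". min_share is an illustrative threshold.
    """
    counts = Counter(group_labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no labels supplied")
    shares = {group: n / total for group, n in counts.items()}
    # Groups below the threshold are candidates for additional data collection.
    underrepresented = sorted(g for g, s in shares.items() if s < min_share)
    return shares, underrepresented


labels = ["daylight"] * 85 + ["dusk"] * 10 + ["night"] * 5
shares, flagged = representation_report(labels)
print(flagged)  # ['night']
```

A low share does not prove the trained model will be biased. It does show where evaluation, and possibly more data collection, should focus.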

YOLOv7 Ethics: Incorporating Responsible AI Development

The integration of YOLOv7 technologies into diverse applications demands adherence to responsible AI development and strict ethical AI guidelines. These frameworks aim to offer solutions that not only advance technological capabilities but also safeguard human rights and ensure ethical integrity.

Central to implementing YOLOv7 responsibly is a commitment to AI that is fair and accountable. This requires close attention to how the technology is designed, what data it is trained on, and how it is deployed. Recent YOLOv7 variants, for example, show notable gains in precision and efficiency, illustrating what AI can achieve when development is guided by an ethical framework.

- The YOLOv7-UAV algorithm achieved a 3.9% increase in mean Average Precision (mAP) on the VisDrone-2019 dataset compared with its predecessors. More accurate detection can reduce, though not by itself eliminate, the risk of biased outcomes.

- The same model reduces resource consumption by 28% and power consumption by over 200 W. This amounts to a 12-fold increase in energy efficiency compared with traditional GPU-driven models, suggesting that efficiency gains can go hand in hand with ethical considerations.

Such statistics underscore the importance of integrating ethical AI guidelines into the core of AI development processes. The emphasis on reducing power and resource usage not only maximizes operational efficiency but also minimizes environmental impact, aligning with broader ethical objectives that consider sustainability and responsible use of technology.

Furthermore, responsible AI development involves continual monitoring and adaptation to ensure ongoing compliance with ethical standards. As AI technologies evolve, the frameworks that govern their use must evolve too, so that technical advances do not outpace the ethical guidelines meant to steer them.

By adhering to rigorous standards, developers and users of YOLOv7 can help foster AI technologies that are not only powerful and efficient but are also fair, transparent, and accountable. Employing these principles is crucial in ensuring that AI serves the public good while preventing potential misuses or harmful impacts on society.

Navigating Privacy Concerns in Surveillance and Data Capture

In an era where technological advancements rapidly transform the landscape of surveillance, it's crucial to address the privacy concerns that arise. With technologies like YOLOv7 playing a pivotal role in data collection, understanding and implementing robust legal frameworks and privacy-preserving technologies are more important than ever to ensure that surveillance ethics are maintained.

Legal Frameworks Governing Data Privacy

Protecting individual privacy in the age of AI-driven surveillance, such as that enabled by YOLOv7, requires strict adherence to legal standards. Regulations such as the GDPR in the European Union and the CCPA in California govern how personal data may be collected, processed, and stored, including data processed by AI systems. They have become benchmarks for data protection worldwide. They require transparency toward the people whose data is used and, in many cases, their consent. Training and evaluating models only on lawfully obtained datasets is a large part of compliance.
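
Principles such as storage limitation can be enforced in code at the point where captured data is kept. The sketch below illustrates this under several assumptions, all hypothetical: a `CapturedFrame` record with a `lawful_basis_recorded` flag, and a 30-day retention window. The actual retention period and lawful basis depend on the jurisdiction and the deployment, and this example does not determine them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class CapturedFrame:
    """Hypothetical record for one stored camera frame."""
    frame_id: str
    captured_at: datetime
    lawful_basis_recorded: bool  # e.g. consent or another documented basis


def apply_retention_policy(frames, now, retention=timedelta(days=30)):
    """Split frames into (kept, purged) under an illustrative retention rule.

    A frame is kept only if a lawful basis was recorded and it is younger
    than the (hypothetical) retention window; everything else is purged.
    """
    kept, purged = [], []
    for frame in frames:
        expired = now - frame.captured_at > retention
        if frame.lawful_basis_recorded and not expired:
            kept.append(frame)
        else:
            purged.append(frame)
    return kept, purged


now = datetime(2024, 1, 31, tzinfo=timezone.utc)
frames = [
    CapturedFrame("a", now - timedelta(days=2), True),
    CapturedFrame("b", now - timedelta(days=45), True),
    CapturedFrame("c", now - timedelta(days=1), False),
]
kept, purged = apply_retention_policy(frames, now)
print([f.frame_id for f in kept])    # ['a']
print([f.frame_id for f in purged])  # ['b', 'c']
```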

YOLOv7's Role in Data Collection: Risks and Protections

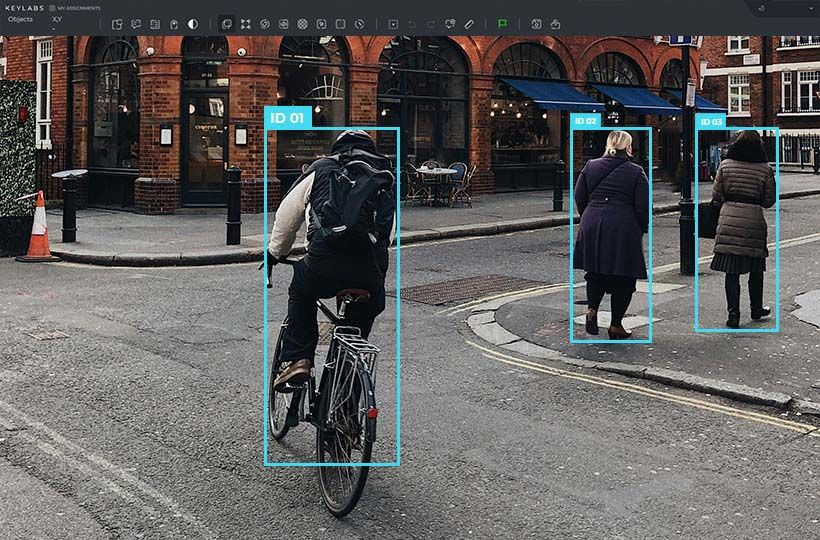

YOLOv7, known for its efficiency in real-time object detection, introduces specific risks when employed in surveillance systems. The capability to detect and analyze visual data on a large scale must be balanced with privacy-enhancing technologies that protect individuals' identities and sensitive information. Employing techniques such as automatic blurring of faces and license plates during data collection phases can be effective in maintaining surveillance ethics while utilizing YOLOv7.
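
Face and license-plate anonymization usually runs as a post-processing step. A detector returns bounding boxes, and those regions are obscured before the frame is stored; the detector could be a YOLOv7 model trained on face and plate classes. The sketch below assumes the boxes are already available as `(x1, y1, x2, y2)` pixel coordinates. It uses plain NumPy pixelation rather than any particular library's blur function, and the block size is an illustrative choice. Lightly pixelated regions can sometimes be partially recovered, so larger blocks or solid fills give stronger protection.

```python
import numpy as np


def pixelate_regions(image, boxes, block=8):
    """Return a copy of `image` with each box replaced by coarse pixel blocks.

    image: H x W (x C) uint8 array. boxes: iterable of (x1, y1, x2, y2)
    pixel coordinates, assumed to come from a face or plate detector.
    """
    out = image.copy()
    h, w = out.shape[:2]
    for x1, y1, x2, y2 in boxes:
        # Clip each box to the image so out-of-frame coordinates are safe.
        x1, x2 = max(0, int(x1)), min(w, int(x2))
        y1, y2 = max(0, int(y1)), min(h, int(y2))
        # Overwrite every block inside the box with that block's mean value.
        for by in range(y1, y2, block):
            for bx in range(x1, x2, block):
                tile = out[by:min(by + block, y2), bx:min(bx + block, x2)]
                tile[...] = tile.mean(axis=(0, 1)).astype(out.dtype)
    return out


rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
masked = pixelate_regions(frame, [(16, 16, 48, 40)])  # one hypothetical face box
```

Because the function works on a copy, the unmasked original is never written back. In a privacy-by-design pipeline, only the masked frame would be persisted.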

Advancements in Privacy-Preserving Techniques

The integration of emerging privacy-enhancing technologies into AI systems shows significant potential for mitigating privacy risks. Federated learning, for example, trains models on data that stays on local devices or sites. Only model updates are sent to a central server, which minimizes the personal data transferred and stored centrally. Homomorphic encryption allows computations to be performed directly on encrypted data, so the data never needs to be decrypted during analysis, adding a robust layer of security. These advancements represent pivotal shifts toward safeguarding privacy in surveillance applications.
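
The aggregation step at the heart of federated averaging (FedAvg) is simple. Each client trains locally and sends only its updated weights. The server then averages those weights, weighting each client by how many training samples it holds. The sketch below shows only that server-side step, on flat lists of floats, and the function and variable names are illustrative. Real deployments use framework tensors and client sampling. They often also add secure aggregation or differential privacy, because model updates can still leak some information about local data.

```python
def federated_average(client_weights, client_sizes):
    """Weighted average of client model weights (the FedAvg server step).

    client_weights: one flat list of floats per client, all the same length.
    client_sizes: number of local training samples per client.
    Raw images stay on the clients; only these weight vectors are shared.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one weight vector per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all weight vectors must have the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two hypothetical factory sites with different amounts of local data.
site_a = [1.0, 2.0]
site_b = [3.0, 6.0]
print(federated_average([site_a, site_b], [100, 300]))  # [2.5, 5.0]
```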

As we continue to harness the power of AI like YOLOv7 in surveillance, our approach to data privacy and surveillance ethics will invariably shape the landscape of personal and public security. By integrating robust legal standards and advancing privacy-preserving technologies, we can navigate these challenges responsibly, ensuring that technological advancements do not come at the cost of individual privacy.

Transparency and Accountability in YOLOv7 Deployments

In the rapidly evolving field of AI object detection, the deployment of tools like YOLOv7 has highlighted the importance of transparency and accountability. Transparency in AI systems means openly documenting both the methods and the operating procedures, so that every stakeholder understands how decisions are made. For YOLOv7, a real-time object detection system, this means explaining the processes that shape its predictions and outputs.

Accountability for YOLOv7 extends beyond identifying objects in an image: it means ensuring that every deployment phase and outcome is fair, ethical, and justifiable. This requires robust mechanisms for continuous monitoring and evaluation. For instance, YOLOv7's real-time decisions depend on detection accuracy, and they must meet stringent ethical standards if the technology is to gain wide acceptance. Practical accountability measures include:

- Maintenance of a transparent audit trail that details AI decision paths and revisions

- Regular dissemination of performance reports that highlight accuracy and fairness evaluations

- Commitment to rectifying biases as part of ongoing system enhancements
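
One way to make an audit trail tamper-evident is to chain entries by hash, so that editing any past record invalidates its stored hash. The sketch below is a minimal, in-memory illustration that uses only Python's standard library. The record fields (`model_version`, `decision`, and so on) are hypothetical. A production system would also need durable storage, access control, and a way to anchor the latest hash outside the log itself.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log where each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash, record):
        # Canonical JSON (sorted keys) so the same record always hashes the same.
        payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._digest(prev_hash, record)
        self.entries.append({"prev": prev_hash, "record": record, "hash": entry_hash})
        return entry_hash

    def verify(self):
        """Recompute every hash; return False if any entry was altered."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(prev_hash, entry["record"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"model_version": "yolov7-example", "frame": 1, "decision": "defect", "confidence": 0.91})
log.append({"model_version": "yolov7-example", "frame": 2, "decision": "ok", "confidence": 0.88})
print(log.verify())  # True
log.entries[0]["record"]["decision"] = "ok"  # simulate tampering with a past decision
print(log.verify())  # False
```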

Robust frameworks are central to achieving AI transparency. Beyond explaining model behavior, they should make the system's workings something anyone can examine and understand, even without extensive technical knowledge.

Ensuring YOLOv7’s systems are accessible and understandable promotes a more inclusive environment where all users can trust and reliably use the technology.
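
Plain-language documentation such as a model card is one common way to meet this goal. The sketch below uses a hypothetical `ModelCard` structure. Its fields (intended use, operating threshold, known limitations, per-group recall) are illustrative choices, not a fixed standard. The card is rendered as text a non-specialist can read.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Hypothetical plain-language summary of a deployed detector."""
    model_name: str
    intended_use: str
    confidence_threshold: float
    known_limitations: list = field(default_factory=list)
    recall_by_group: dict = field(default_factory=dict)

    def render(self):
        lines = [
            f"Model: {self.model_name}",
            f"Intended use: {self.intended_use}",
            f"Detections below {self.confidence_threshold:.0%} confidence are discarded.",
            "Known limitations:",
        ]
        # Say so explicitly when no limitations have been documented yet.
        lines += [f"  - {item}" for item in self.known_limitations] or ["  - none documented"]
        lines.append("Recall by group:")
        for group, recall in sorted(self.recall_by_group.items()):
            lines.append(f"  - {group}: {recall:.0%}")
        return "\n".join(lines)


card = ModelCard(
    model_name="yolov7-defect-inspector (hypothetical)",
    intended_use="Flagging surface defects on a production line; not for identifying people.",
    confidence_threshold=0.25,
    known_limitations=["Lower recall under glare"],
    recall_by_group={"daylight": 0.94, "night": 0.81},
)
print(card.render())
```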

Strategies for Mitigating Ethical Risks in AI

In the rapidly evolving domain of artificial intelligence (AI), the importance of implementing robust measures to address AI ethical risks cannot be overstated. By integrating fairness audits, organizing ethical AI training, and setting up dedicated ethical review boards, businesses can better align their AI operations with the highest ethical standards, thereby enhancing transparency and accountability.

Implementing Equity and Fairness Audits

Thorough fairness audits allow AI systems like YOLOv7 to be examined critically for biases and inconsistencies. These audits can detect and quantify discriminatory patterns, enabling corrective action so that AI technologies perform equitably across diverse user demographics. Regular fairness audits not only uphold ethical norms but also strengthen public trust in AI applications.
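
A basic audit compares a detection metric across groups and flags large gaps. Groups might be lighting conditions or, when the system detects people, demographic groups. The sketch below computes per-group recall, the share of ground-truth objects that were detected, and reports the largest gap. It assumes three things that the auditing team would need to decide:

- the group labels on each record;
- an earlier matching step that decides whether each object counts as "detected";
- the 0.05 tolerance.

```python
from collections import defaultdict


def recall_by_group(records):
    """records: iterable of (group, detected) pairs, one per ground-truth object."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        hits[group] += int(detected)
    return {g: hits[g] / totals[g] for g in totals}


def audit_recall_gap(records, tolerance=0.05):
    """Return per-group recall, the max-min gap, and whether it exceeds tolerance."""
    recalls = recall_by_group(records)
    if not recalls:
        raise ValueError("no records to audit")
    gap = max(recalls.values()) - min(recalls.values())
    return recalls, gap, gap > tolerance


# Hypothetical audit set: 100 ground-truth objects per lighting condition.
records = (
    [("daylight", True)] * 95 + [("daylight", False)] * 5
    + [("night", True)] * 80 + [("night", False)] * 20
)
recalls, gap, flagged = audit_recall_gap(records)
print(recalls, round(gap, 2), flagged)  # {'daylight': 0.95, 'night': 0.8} 0.15 True
```

Recall is only one lens. A fuller audit would also compare false positives per group, since wrongly flagging one group more often is a separate form of unfairness.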

The Role of Continuous Ethical Training for AI Teams

Maintaining an ongoing ethical AI training program for development teams is crucial. By continuously updating their knowledge of current ethical AI practices, teams can anticipate potential ethical challenges and learn effective strategies to mitigate them. This ongoing education helps developers align new technologies with established ethical benchmarks, so that innovations benefit society without compromising moral values.

Creating Ethical Review Boards for AI Deployment

Setting up ethical review boards is a fundamental step in safeguarding AI deployment strategies. These boards act as governing bodies that review and regulate AI projects to ensure they comply with ethical standards before implementation. Composed of experts in AI ethics, law, technology, and social sciences, these boards evaluate AI systems thoroughly, advising on potential ethical risks and the mitigation strategies needed to address them effectively.

Together, these strategies form a robust framework for minimizing AI ethical risks through vigilant monitoring, comprehensive training, and strict governance. As AI technologies continue to permeate various sectors, the importance of these measures grows, highlighting the need for an ethical approach to AI development and deployment.

Community Engagement and Awareness in YOLOv7 Use

As the deployment of YOLOv7 advances, the role of AI community engagement becomes crucial in shaping its acceptance and efficacy. Engaging with various stakeholders not only broadens the understanding of AI capabilities but also reinforces the ethical framework within which these technologies operate.

Stakeholder Inclusion in AI Deployment Discussions

Involving stakeholders from diverse sectors is fundamental to inclusive discussions about AI deployment. This approach helps ensure that a broad range of community perspectives informs decision-making. Such inclusive practices help identify the potential impacts of AI on different segments of society, paving the way for more equitable AI solutions.

Public Education Initiatives on AI Capabilities and Limitations

To demystify AI technologies like YOLOv7, robust public education initiatives are essential. Educating the public about both the potential and the limitations of AI fosters informed opinions and realistic expectations. This transparency is crucial for building trust and for integrating AI technologies more smoothly into everyday life.

Promoting a Dialogue Between AI Developers and End-Users

Creating a consistent dialogue between AI developers and end-users enhances mutual understanding and collaboration. This interaction allows developers to gain insights into the practical challenges end-users face and enables them to tailor AI solutions to better meet these needs. Moreover, it empowers users by giving them a voice in how AI technologies are shaped and implemented.

| Stakeholder Group | Engagement Approach | Expected Impact |
|---|---|---|
| Local governments | Regulatory and policy co-creation | Enhanced governance and compliance |
| Academic institutions | Collaborative research | Advanced AI education and innovation |
| General public | Workshops and seminars | Increased AI literacy and acceptance |
| Industry experts | Joint development projects | Practical and impactful AI applications |

Through strategies like AI public education, stakeholder inclusion, and active engagement, the implementation of AI technologies such as YOLOv7 can be demystified and made more accessible, ensuring that these advancements are utilized ethically and effectively in diverse societal contexts.

Evaluating the Societal Implications of Advanced Object Detection

The integration of advanced object detection technologies like YOLOv7 into everyday applications has significant societal implications that deserve careful assessment. Navigating the ethics of modern surveillance requires a balanced view of AI's societal impact, particularly on privacy, security, and social behavior.

The ethics of advanced object detection involve examining how these technologies affect societal norms and individual behavior. For instance, pervasive surveillance can alter public conduct and erode privacy rights, even as it strengthens security. As AI systems become more adept at interpreting complex scenes in real time, the line between maintaining public safety and infringing on privacy blurs. This tension calls for ethical clarity.

- Privacy Concerns: The capability of object detection systems to monitor public and private spaces can lead to over-surveillance, raising ethical questions regarding the right to privacy.

- Security Enhancement: While these systems can bolster security by identifying threats more efficiently, they also raise ethical questions about constant monitoring and data handling.

- Societal Behavior: The awareness of being monitored can deter crime but also modify innocent daily behaviors, influencing societal norms and personal freedoms.

Moreover, the debate extends to how these technologies are deployed across different demographics and locations. Uneven deployment could lead to unequal surveillance that reinforces societal divides. Guidelines are needed to ensure equitable application and to prevent digital discrimination.

Addressing and Overcoming Job Displacement Concerns

The rapid spread of artificial intelligence across sectors raises significant concerns about job displacement. As AI becomes more efficient, traditional roles are being reimagined, and the workforce must adapt quickly to keep pace. This section examines strategies to mitigate these disruptions through reskilling initiatives and proactive policy responses.

Technological Advancement vs. The Human Workforce

The tension between technological progress and job security remains a pressing issue. Because AI can improve efficiency and reduce costs, industries increasingly automate tasks traditionally handled by human employees. This transition benefits productivity but can displace workers across many industries, which calls for a critical look at the future of employment.

Reskilling and Upskilling Initiatives in the Age of AI

Reskilling for AI emerges as a crucial counteractive measure. It’s essential for the workforce affected by AI job displacement to embrace new skills that align with the digital era. Training programs in data analysis, AI maintenance, and system management not only empower workers but also bolster their employability in a transformed job market. Notably, initiatives in tech-focused education are expanding, providing vital opportunities for career transitions.

Policy Responses to AI-Induced Job Market Transformations

AI policy responses play a pivotal role in managing AI-induced changes within the job market. Governments and international bodies are called upon to formulate robust policies that safeguard worker rights while fostering an environment conducive to innovation and technological adoption. Policies must cover economic aids, educational programs, and job transition strategies to address the repercussions of AI job displacement comprehensively.

| Concern | Strategic Response | Expected Impact |
|---|---|---|
| Job Displacement | Implementation of reskilling programs | Reduction in unemployment rates among displaced workers |
| Policy Lacunae | Introduction of comprehensive AI governance laws | Improved regulation of AI deployment and workforce transition |
| Skills Gap | Upskilling initiatives | Enhanced workforce adaptability and future readiness |

Addressing job displacement while fostering AI's potential requires a multi-faceted approach involving education, policy adjustments, and corporate responsibility. With strategic planning and collaborative effort, the shift towards an AI-integrated society can be both innovative and inclusive, ensuring that no one is left behind as we step into the future of work.

Summary

The swift advancement of artificial intelligence, exemplified by object detection systems like YOLOv7-TS, highlights the need to balance innovation with ethical considerations. Gains in performance metrics, such as the significant mAP improvements offered by YOLOv7 and its successors, should be matched by an equally rigorous approach to ethical deployment and responsible AI development. Detection has progressed from R-CNN's region proposals through Fast R-CNN and Faster R-CNN to the single-stage YOLO family. That progression shows our capacity to create technologies and also to refine them in step with ethical imperatives.

Around the globe, researchers and practitioners are assembling large-scale datasets, such as the Singapore Maritime Dataset and the FloW dataset. They are also fine-tuning models such as ViT-MVT to handle complex visual challenges with precision. Behind each dataset, algorithm, or model upgrade lies a responsibility to uphold privacy, ensure fairness, and maintain transparency. Deploying real-time water-target detection tools, or detecting sea-surface targets with algorithms like STE-YOLO, demonstrates our technical capabilities. It also highlights the responsibility that accompanies these breakthroughs.

Thus, as we embrace increasingly sophisticated AI models, our commitment to responsible AI is what will safeguard human values in the digital realm. Technical strides such as improved feature fusion in YOLOv5 or novel loss functions such as Wise-IoU must be matched by ethical stewardship. Innovation and ethics now intersect more closely than ever. The responsible deployment of AI technologies like YOLOv7 must therefore remain our collective guide, keeping progress and principle closely intertwined.

FAQ

What are the key ethical considerations in deploying YOLOv7?

Key ethical considerations include addressing biases that can lead to discrimination, ensuring privacy of individuals in the use of AI surveillance, adopting ethical AI deployment practices that uphold transparency and accountability, and engaging proactively with the community.

How does bias in object detection manifest within AI and YOLOv7 applications?

Bias in object detection can occur when the AI algorithms produce skewed results, leading to disproportionate effects on different groups of people. This can result in discrimination and inappropriate profiling.

What strategies are available to mitigate biases in YOLOv7 and other AI systems?

To combat biases, strategies include conducting equity and fairness audits, continuous ethical training for AI development teams, and establishing ethical review boards. These measures foster responsible AI development that aligns with societal values.

What are the privacy concerns associated with YOLOv7 and how can they be addressed?

Privacy concerns revolve around the potential for invasive surveillance and data capture. Addressing these concerns involves adhering to legal frameworks, implementing robust data privacy protections, and applying privacy-enhancing technologies like federated learning and encrypted data processing.

How can developers ensure transparency and accountability when using YOLOv7?

Developers can ensure transparency by clearly communicating the capabilities and limitations of AI models and by establishing mechanisms that hold all stakeholders accountable for outcomes. This involves thorough documentation and the ability to audit the model's performance and decisions.

How does YOLOv7 impact job displacement, and what remedies are suggested?

The deployment of YOLOv7, like other AI technologies, may automate tasks traditionally performed by humans, leading to job displacement. Remedies include reskilling and upskilling programs to help the workforce adapt. They also include proactive policy interventions aimed at managing changes in the job market equitably.

What role does public education play in the deployment of YOLOv7?

Public education is essential in helping people understand the functions and implications of AI. Educating the public on AI's capabilities and limitations is crucial for building trust in the technology, fostering responsible use and facilitating an informed dialogue between developers and users.

What is the significance of cross-disciplinary collaboration in ethical AI development?

Cross-disciplinary collaboration brings together expertise from technology, law, ethics, and social sciences to tackle the complex ethical considerations in AI. This collaboration is vital for ensuring comprehensive, responsible strategies that balance innovation against social responsibility.