Finding the Best Training Data for Your AI Model

Choosing the right training data is crucial for the success of your AI application, and data engineering plays a significant role in getting it right. Data engineering involves defining, collecting, cleaning, and labeling data. During the learning phase, many AI models are trained on examples of inputs paired with their corresponding outputs. Deep learning, a type of AI that has produced strong results in areas such as image and speech recognition, typically requires a large amount of such data. Rushing through the data engineering process to get there faster can lead to inaccurate models.

Key Takeaways:

- Choosing the right training data is crucial for the success of an AI model.

- Data engineering involves defining, collecting, cleaning, and labeling data.

- Deep learning, a powerful AI technique, requires a large amount of training data.

- By following best practices in data engineering, businesses can enhance the accuracy and effectiveness of their AI applications.

The Importance of Data Engineering in AI Model Training

Data engineering is a critical aspect of AI model training. Without proper data engineering, AI models cannot perform accurately and deliver reliable results. Data engineering encompasses several essential steps, including data collection, data cleaning, data labeling, and ensuring the dataset represents the real-world environment where the AI model will be deployed.

To understand why data engineering matters, start with its first job: defining what the model receives as input and what output it is expected to produce. Clear definitions of the inputs, the target outputs, and the project's objectives lay the foundation for a successful training process.

Data collection is the initial step of data engineering. It involves gathering data from relevant sources that align with the application's specific requirements. Whether it be images, text, or numerical data, collecting the right data is vital for training models that accurately interpret and respond to real-world inputs.

Data cleaning is another crucial step in data engineering. Its goal is to remove errors and inconsistencies from the collected dataset that might harm the model's performance. Cleaning the data involves detecting and correcting inaccuracies, removing duplicates, addressing missing values, and reviewing outliers, which may be errors to fix or genuine rare cases the model needs to learn from.
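A minimal sketch of these cleaning steps for tabular records, assuming each record is a Python dict of scalar values. It drops records with missing required fields, removes exact duplicates, and flags (rather than deletes) outliers using the common interquartile-range rule. The function names and the `k=1.5` threshold are illustrative choices, not a standard API.

```python
import math
import statistics

def _is_missing(value):
    # Treat None and float NaN as missing.
    return value is None or (isinstance(value, float) and math.isnan(value))

def clean_records(records, required_fields):
    """Drop records with missing required fields and exact duplicates.

    Assumes each record is a dict of hashable, scalar values.
    Keeps the first occurrence of each record, in the original order.
    """
    seen = set()
    cleaned = []
    for record in records:
        if any(_is_missing(record.get(field)) for field in required_fields):
            continue  # missing value in a required field
        key = tuple(sorted(record.items()))
        if key in seen:
            continue  # exact duplicate of an earlier record
        seen.add(key)
        cleaned.append(record)
    return cleaned

def flag_outliers_iqr(values, k=1.5):
    """Return indices of values outside [Q1 - k*IQR, Q3 + k*IQR].

    Outliers are only flagged, not removed: a person should decide whether
    each one is an error or a genuine rare case.
    """
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]
```

For example, `flag_outliers_iqr([10, 11, 12, 11, 10, 12, 11, 100])` flags only index 7, the value 100.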

Data labeling is an integral part of data engineering, especially in supervised learning scenarios. It involves assigning the correct label to each input image or data point, providing the model with the necessary ground truth information for learning and decision-making.
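Labeling mistakes are easier to catch when you look for them systematically. A minimal sketch, assuming each example is an `(input_id, label)` pair with a hashable identifier: it lists inputs that received more than one distinct label, which usually points to a labeling error or an ambiguous labeling guideline. The function name is hypothetical.

```python
from collections import defaultdict

def find_label_conflicts(examples):
    """Return {input_id: set_of_labels} for inputs labeled inconsistently."""
    labels_by_input = defaultdict(set)
    for input_id, label in examples:
        labels_by_input[input_id].add(label)
    # Keep only inputs that were given two or more different labels.
    return {i: labels for i, labels in labels_by_input.items() if len(labels) > 1}
```

Conflicting inputs can then be sent back for review instead of silently training the model on contradictory ground truth.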

"Data engineering is the backbone of AI model training. It sets the stage for accurate and reliable AI performance by collecting, cleaning, and labeling the data that fuels the learning process."

Ensuring the dataset represents the real-world environment is crucial for training successful AI models. For image data, for example, the location, distance, size, and orientation of objects in the training images should match what the model will encounter after deployment; otherwise the model may generalize poorly and produce inaccurate predictions.

| Data Engineering Step | Description |
|---|---|
| Data Collection | Collecting relevant data aligned with the application's requirements. |
| Data Cleaning | Correcting errors and inconsistencies, and handling outliers, in the dataset. |
| Data Labeling | Assigning the correct labels to data points or images. |

Collecting Relevant Data for AI Model Training

When it comes to training AI models, collecting relevant data is crucial for achieving accurate and effective results. There are several types of data sources that can be utilized, including public datasets, open source datasets, synthetic data, and data generators.

Public Datasets

Public datasets are published for anyone to access and can be found through platforms such as Google Dataset Search, GitHub, Kaggle, and the UCI Machine Learning Repository. These datasets cover a wide range of industries and topics, providing a diverse pool of data for AI training, and they are a valuable resource for researchers, developers, and data scientists exploring different types of data. Licenses differ from dataset to dataset, so check the terms of use before training a commercial model on one.

Open Source Datasets

Open source datasets are released under open licenses by organizations such as government agencies, universities, and research groups, often to support research and development in a particular field; government open-data portals are a common example. Because many of these datasets focus on a specific industry or domain, they can be a good fit for AI training in that domain, letting researchers build models tailored to specific use cases.

Synthetic Data

Synthetic data is generated using distribution models or deep learning techniques. It can be a valuable asset when real-world data is limited, inaccessible, or sensitive. Synthetic data allows AI developers to simulate various scenarios and edge cases, providing diverse training data to enhance the model's robustness and generalization capabilities. It also enables data augmentation, improving the performance of AI models by increasing the variability of the training dataset.
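A minimal sketch of the "distribution model" approach, assuming numeric features and at least two real examples per class: fit a normal distribution to each feature within each class, then sample new rows from those distributions. Treating features as independent normals is a simplifying assumption that ignores correlations between features; dedicated synthetic-data tools model the joint distribution more carefully. The function names are illustrative.

```python
import random
import statistics

def fit_gaussian_per_class(rows, labels):
    """Estimate (mean, stdev) of every feature, separately for each class.

    Needs at least two rows per class, because stdev is undefined otherwise.
    """
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    model = {}
    for label, class_rows in by_class.items():
        columns = list(zip(*class_rows))  # transpose rows into feature columns
        model[label] = [(statistics.mean(c), statistics.stdev(c)) for c in columns]
    return model

def sample_synthetic(model, n_per_class, seed=0):
    """Draw n_per_class synthetic rows per class from the fitted normals."""
    rng = random.Random(seed)  # seeded for reproducibility
    rows, labels = [], []
    for label, params in model.items():
        for _ in range(n_per_class):
            rows.append([rng.gauss(mu, sigma) for mu, sigma in params])
            labels.append(label)
    return rows, labels
```

Sampling many rows per class in this way is one simple form of augmentation; the synthetic rows can only reproduce patterns the fitted distributions capture.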

Data Generators

Data generators are tools that create synthetic data based on predefined parameters. These tools allow developers to generate custom datasets that closely match the requirements of their AI models. By specifying the desired characteristics, such as class distributions, noise levels, or feature correlations, data generators can produce synthetic data that meets specific training objectives. Data generators provide flexibility and control over the generated dataset, facilitating the creation of high-quality training data.
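A data generator can be as simple as a function whose arguments are the characteristics you want to control. The hypothetical `generate_dataset` is a sketch, not any particular tool's API. It exposes class distribution (`class_weights`), feature noise (`noise`), and a label-noise rate (`label_flip`). In real projects, scikit-learn's `make_classification` offers similar controls through parameters such as `weights` and `flip_y`.

```python
import random

def generate_dataset(n_samples, class_weights, n_features=2,
                     separation=2.0, noise=1.0, label_flip=0.0, seed=0):
    """Generate labeled points with controllable class balance and noise.

    class_weights: mapping of class name -> relative frequency
    separation:    distance between neighboring class centers
    noise:         standard deviation of each feature around its center
    label_flip:    probability of replacing a label with a random class
    """
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    classes = list(class_weights)
    weights = [class_weights[c] for c in classes]
    X, y = [], []
    for _ in range(n_samples):
        label = rng.choices(classes, weights=weights, k=1)[0]
        center = classes.index(label) * separation
        X.append([rng.gauss(center, noise) for _ in range(n_features)])
        if rng.random() < label_flip:
            label = rng.choice(classes)  # may pick the original class again
        y.append(label)
    return X, y
```

Calling `generate_dataset(1000, {"rare": 0.1, "common": 0.9})`, for instance, produces an imbalanced dataset in which roughly 10% of the examples belong to the rare class.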

By combining public datasets, open source datasets, synthetic data, and data generators, AI practitioners can gather relevant and diverse data for training their models. This broader approach to data collection improves the chances that the models are well-trained and capable of handling real-world scenarios.

Choosing the Right Model for AI Training

When it comes to AI training, selecting the right model is a critical step in ensuring success. With the abundance of machine learning algorithms and deep learning algorithms available, it is essential to make an informed decision based on the specific learning goals and data requirements of your project.

Model selection involves evaluating various algorithms and weighing their strengths and weaknesses. Classical machine learning algorithms, such as decision trees, random forests, and support vector machines, are versatile and efficient across many types of data. Deep learning algorithms, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), excel at processing complex patterns and sequences, usually at the cost of more training data and computation.

To choose the right model, it is recommended to benchmark different algorithms and experiment with different hyperparameters. By comparing their performance across various evaluation metrics, you can identify the most suitable model for your specific use case. Performance metrics, such as classification accuracy, precision, recall, or root mean squared error, provide objective measures of a model's effectiveness.
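The benchmarking idea can be sketched without any machine learning library. The approach is to fit each candidate on the same training data, score it on the same validation data with the same metric, and rank the results. The two candidates here, a majority-class baseline and a nearest-centroid classifier, are illustrative stand-ins for the algorithms discussed in this section, and all function names are hypothetical. Including a trivial baseline is a useful sanity check: a model that cannot beat it is not learning anything useful.

```python
from collections import Counter

def majority_baseline(train_X, train_y):
    """Always predict the most common training label."""
    most_common = Counter(train_y).most_common(1)[0][0]
    return lambda x: most_common

def nearest_centroid(train_X, train_y):
    """Predict the class whose mean feature vector is closest (squared distance)."""
    counts = Counter(train_y)
    sums = {}
    for x, y in zip(train_X, train_y):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
    centroids = {y: [s / counts[y] for s in acc] for y, acc in sums.items()}

    def predict(x):
        return min(centroids,
                   key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])))
    return predict

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def benchmark(candidates, train, val):
    """Fit each candidate on `train`, score on `val`, return (name, score) best first."""
    (train_X, train_y), (val_X, val_y) = train, val
    scores = {}
    for name, fit in candidates.items():
        predict = fit(train_X, train_y)
        scores[name] = accuracy(val_y, [predict(x) for x in val_X])
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```

Swapping in real candidates, or looping over several hyperparameter values for one candidate, leaves the `benchmark` loop unchanged; what matters is that every candidate sees the same data and the same metric.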

"The choice of model depends on the specific learning goals and data requirements of the project."

For example, if your AI application involves image classification tasks, a convolutional neural network (CNN) may be the best choice due to its ability to extract features from images effectively. However, for time series forecasting, a recurrent neural network (RNN) may be more appropriate, as it can capture temporal dependencies in the data.

It is worth stressing that no single model suits every project. Each project has unique characteristics and demands, so carefully evaluate the strengths and weaknesses of candidate algorithms against the specific requirements of your application.

Comparison of Machine Learning and Deep Learning Algorithms

| Algorithm | Typical Applications | Strengths | Weaknesses |
|---|---|---|---|
| Decision Trees | Classification, regression | Easily interpretable; handles both numerical and categorical data; some implementations handle missing values natively | Prone to overfitting; limited ability to capture complex relationships |
| Random Forests | Classification, regression | Ensemble learning; reduced overfitting; handles high-dimensional data | Slow training and prediction on large datasets |
| Support Vector Machines | Classification, regression | Effective in high-dimensional spaces; good generalization | Sensitive to parameter tuning; does not scale well to large datasets |
| Convolutional Neural Networks (CNNs) | Image classification, object detection | Excellent at extracting features from images; captures spatial relationships | Requires large amounts of training data; computationally expensive |
| Recurrent Neural Networks (RNNs) | Time series analysis, natural language processing | Captures temporal dependencies; handles sequences of varying length | Struggles with long-term dependencies; vanishing/exploding gradients |

By carefully considering the strengths and weaknesses of different algorithms and evaluating their performance using appropriate performance metrics, you can confidently choose the right model for your AI training. Remember, the goal is to maximize the accuracy and effectiveness of your machine learning application.

Key Takeaways:

- Model selection is crucial for successful AI training, considering the specific learning goals and data requirements.

- Classical machine learning algorithms offer versatility, while deep learning algorithms excel at processing complex patterns.

- Benchmarking and comparing different models using performance metrics help identify the best fit.

- Consider the strengths and weaknesses of algorithms and the requirements of your application to make an informed decision.

Choosing the right model is a critical step in AI training, as it sets the foundation for accurate and efficient machine learning. With careful evaluation and consideration, you can make an informed decision that aligns your model's capabilities with your project's needs and objectives.

Setting Realistic Performance Expectations for AI Models

Setting realistic performance expectations is an essential step in training AI models to ensure their effectiveness and success. By defining clear goals and communicating them to project owners and stakeholders, businesses can align their AI projects with practical outcomes.

When setting performance expectations, it is crucial to establish a minimum acceptable performance level. This benchmark provides a measurable standard for evaluating the model's success and determining whether it meets the desired outcomes.

One key aspect of setting performance expectations is selecting a single performance metric to evaluate the model's performance throughout its lifecycle. By focusing on one specific metric, businesses can effectively track and compare different models' performance, ensuring a consistent evaluation process.

Common performance metrics vary with the task. For classification tasks, classification accuracy (the fraction of inputs assigned the correct label) is a frequent choice, although it can be misleading when one class is much more common than the others. For regression tasks, root mean squared error (RMSE) is commonly used to measure how far the model's continuous predictions fall from the true values.
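Both metrics, plus a check against the agreed minimum acceptable performance, fit in a few lines. This is a minimal sketch with illustrative function names. The `higher_is_better` flag exists because accuracy should be at or above its threshold while RMSE should be at or below it.

```python
import math

def classification_accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error for continuous targets."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def meets_minimum(score, minimum, higher_is_better=True):
    """Compare a score with the minimum acceptable performance level."""
    return score >= minimum if higher_is_better else score <= minimum
```

The imbalance caveat is easy to reproduce: on 95 negative and 5 positive examples, a model that always predicts "negative" scores an accuracy of 0.95 while never detecting a single positive case.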

The chosen performance metric should align with the project's objectives and reflect how the model will be judged in real-life use, so that a better score actually means a more useful model.

With well-defined performance expectations and appropriate performance metrics, businesses can evaluate their AI models effectively and make informed decisions about deployment and further improvements. This keeps expectations realistic and the models aligned with the desired outcomes.

The Training Process for AI Models

The training process for AI models is an essential step in creating accurate and effective machine learning applications. It involves adjusting model parameters and hyperparameters based on the training data to optimize model performance. Let's explore the different aspects of the training process in more detail.

Model Parameters and Hyperparameters

During the training process, AI models learn from the input data by adjusting their internal parameters, such as the weights of a neural network. These parameters encode the patterns learned from the training data and determine how the model maps inputs to outputs. Hyperparameters, by contrast, are external settings that control the model's configuration and the training procedure, such as the learning rate or the maximum depth of a decision tree.

Model parameters are learned from the input data, while hyperparameters are set externally and define how the model operates.

Training, Validation, and Testing Datasets

To train an AI model, the dataset is typically partitioned into three subsets: training, validation, and testing. The training dataset is used to fit the model parameters, and the validation dataset is used to evaluate the model during development and to tune hyperparameters. The testing dataset is held out until the end and provides an estimate of how the trained model will perform on data it has never seen.

It is important to properly partition the datasets and ensure that they are representative of the real-world scenarios in which the AI model will be deployed. Additionally, having ground truth labels for evaluation is essential for accurate performance assessment.
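A minimal sketch of a three-way split, assuming the examples are independent of one another so that a random shuffle is appropriate. Time series data would normally be split by time instead, and related examples (for instance, several images of the same object) should stay in the same subset to avoid leakage. The function name and default fractions are illustrative.

```python
import random

def train_val_test_split(items, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle once, then slice into disjoint train/validation/test lists."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n = len(shuffled)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test
```

With 100 examples and the default fractions, this yields 70 training, 15 validation, and 15 test examples, with no example appearing in more than one subset.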

Model Training Process

The model training process typically involves several iterations of adjusting parameters and hyperparameters and evaluating the model's performance. This iterative process allows the model to learn from the training data and improve its predictions or classifications.

Here is an overview of the training process:

- Initialize the model with initial parameter values.

- Iterate through the training dataset, feeding the inputs and expected outputs to the model.

- Update the model's parameters using optimization algorithms, such as gradient descent, to minimize the difference between predicted and expected outputs.

- Periodically evaluate the model's performance using the validation dataset and fine-tune the hyperparameters if necessary.

- Once the training process is complete, evaluate the model's real-life performance using the testing dataset.
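The steps above can be sketched end to end for the simplest possible model, a straight line `y ≈ w*x + b` trained with batch gradient descent on mean squared error. Here `w` and `b` are the learned parameters, while `learning_rate`, `epochs`, and `eval_every` are hyperparameters. Keeping the parameters that scored best on the validation set is one common choice among several, not the only option. All names are illustrative.

```python
import math

def mse(w, b, data):
    """Mean squared error of the line w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train_linear_model(train, val, learning_rate=0.1, epochs=500, eval_every=10):
    """Fit y ~ w*x + b by batch gradient descent, keeping the best-on-validation parameters."""
    w, b = 0.0, 0.0                      # 1. initialize the parameters
    best = (math.inf, w, b)
    n = len(train)
    for epoch in range(1, epochs + 1):
        # 2-3. pass over the training data and step against the MSE gradient
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        # 4. periodically evaluate on the validation set
        if epoch % eval_every == 0 or epoch == epochs:
            val_loss = mse(w, b, val)
            if val_loss < best[0]:
                best = (val_loss, w, b)
    _, w, b = best
    return w, b
```

Trained on points from `y = 2x + 1`, the sketch recovers `w ≈ 2` and `b ≈ 1`. Step 5 would then report `mse(w, b, test)` on the held-out test set, which is used only once, after training is finished.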


The training process requires careful attention to achieve optimal model performance. By adjusting model parameters and hyperparameters, partitioning datasets, and iteratively refining the model, AI engineers can train models that effectively solve real-world problems.

Ongoing Maintenance of AI Models

Ensuring that AI models consistently perform at their best requires ongoing maintenance. This maintenance is crucial to keep up with the dynamic nature of model inputs and prevent performance deterioration over time.

Data drift is a common challenge that AI models face. As the world evolves, the data used to train the models may become outdated or no longer representative of the real-life environment. This drift can lead to reduced accuracy and compromised performance.
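One widely used way to quantify drift in a single numeric feature is the population stability index (PSI). The values are binned using the training data's range, and the PSI is the sum of `(actual - expected) * ln(actual / expected)` over the bins. A minimal sketch follows. The bin count and the small `eps` used to avoid `log(0)` are assumptions. A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be set per project.

```python
import math

def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a new sample.

    Bin edges span the range of `expected`; values outside it are clipped
    into the first or last bin. `eps` replaces empty-bin shares to avoid log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Comparing the training data with itself gives a PSI of 0. Shifting every value upward by half the training range pushes the PSI far above 0.25.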

Continuous monitoring plays a vital role in maintaining AI models. It involves regularly evaluating the model's performance in real-life tasks, identifying any deviations or anomalies, and taking appropriate actions to address them. By closely monitoring the model's output, potential issues can be identified early on, minimizing their impact on the overall performance.
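A minimal sketch of continuous monitoring, assuming that the true outcome for each prediction eventually becomes known (often with a delay). It tracks accuracy over a sliding window of recent predictions and raises an alert when that accuracy drops below a threshold. The class name, window size, and threshold are hypothetical choices.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=500, alert_below=0.9):
        self.results = deque(maxlen=window)  # oldest results drop off automatically
        self.alert_below = alert_below

    def record(self, prediction, actual):
        self.results.append(prediction == actual)

    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def should_alert(self):
        # Only alert once the window is full, to avoid noisy early readings.
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy() < self.alert_below
```

In production, `should_alert()` would typically feed a dashboard or paging system, and an alert would trigger the investigation and adjustments described next.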

If a model's performance deteriorates, adjustments may be needed to restore it. These can include updating the training data to better reflect the current environment and retraining, or re-tuning hyperparameters, the settings that control the model's configuration and training. With these adjustments, AI models can adapt to changing circumstances and continue delivering accurate and reliable results.

Regular monitoring and maintenance are essential to the long-term success of AI models. By staying vigilant and proactive, businesses can ensure that their AI models remain effective and responsive to evolving needs and conditions.

Key Takeaways:

- Ongoing maintenance is crucial for maintaining optimal performance of AI models.

- Data drift can lead to reduced accuracy and compromised performance over time.

- Continuous monitoring helps validate model performance and identify potential issues.

- Adjustments, such as fine-tuning hyperparameters or updating training data, may be necessary to address performance deterioration.

- Regular monitoring and maintenance are essential for accurate and reliable AI model results.

Custom Data Creation for AI Models

Custom data creation is a vital process in training AI models, as it involves sourcing or generating data that is tailored to meet the specific requirements of the model. This section explores various methods for creating custom data, including data sourcing, annotation services, synthetic data generation, and data mining.

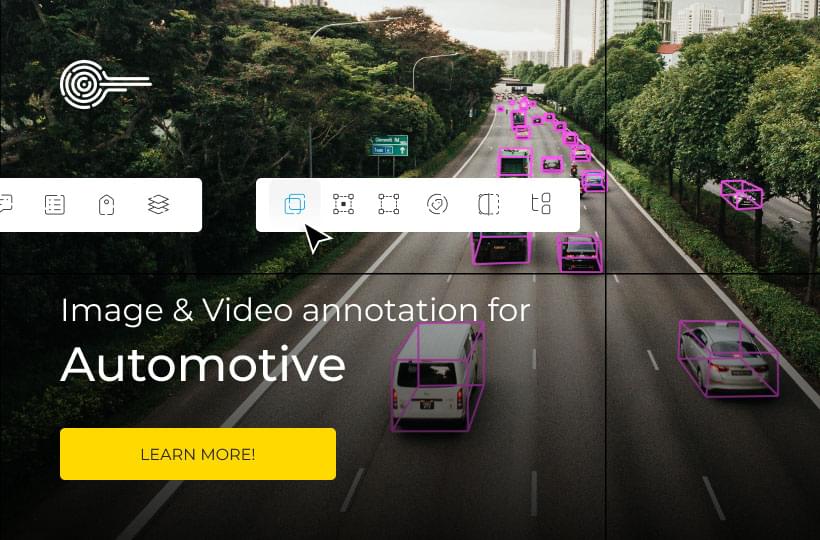

Data Sourcing and Annotation Services

Data sourcing and annotation services are valuable resources for acquiring labeled datasets or creating new ones from scratch. These services involve experts who meticulously annotate existing datasets or generate fresh datasets with accurate labels. By leveraging data sourcing and annotation services, businesses can obtain large quantities of quality training data that align with the AI model's needs.

Synthetic Data Generation

Synthetic data, generated using distribution models or deep learning techniques, can be an effective way to augment or substitute real-world data. Synthetic data creation involves generating artificial data points that mimic the characteristics and patterns of the target data. It offers the advantage of versatility and control, allowing AI engineers to generate specific scenarios or edge cases for comprehensive model training.

Data Mining

In this context, data mining means extracting data from online sources such as websites, social media platforms, or public repositories, often through web scraping. It enables the collection of diverse and extensive datasets for AI model training. However, privacy regulations, website terms of service, and ethical considerations must be respected when collecting data this way. Respecting data privacy and abiding by legal constraints are critical to maintaining ethical practices in AI model development.
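One basic courtesy check before collecting pages is a site's `robots.txt` file. It can be evaluated with Python's standard `urllib.robotparser` module. This is a minimal sketch: the helper name and user-agent string are hypothetical, and `robots.txt` is a crawling convention rather than a legal permission, so it does not replace checking terms of service and privacy law.

```python
from urllib.robotparser import RobotFileParser

def allowed_to_fetch(robots_txt, url, user_agent="example-dataset-bot"):
    """Return True if the given robots.txt text permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text already downloaded
    return parser.can_fetch(user_agent, url)
```

In a real crawler, `RobotFileParser.set_url()` followed by `read()` downloads and parses the live file instead of taking the text as an argument.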

Benefits of Custom Data Creation

Creating custom data for AI models offers several advantages:

- Highly tailored datasets: Custom data creation allows AI engineers to design datasets that closely match the specific requirements of the AI model, which can improve performance.

- Improved accuracy: By curating custom data, potential biases and limitations present in publicly available datasets can be reduced, leading to more accurate and reliable AI models.

- Enhanced domain expertise: Collaboration with data sourcing and annotation service providers provides access to their industry-specific knowledge and expertise, resulting in more informed and contextually relevant training data.

- Efficient model training: Custom data creation enables AI engineers to focus on precise use cases and scenarios, streamlining the training process and improving efficiency.

Custom data creation is a strategic approach for training AI models, allowing businesses to tailor datasets according to their unique needs. By harnessing data sourcing and annotation services, synthetic data generation techniques, and responsible data mining practices, organizations can achieve more accurate and effective AI solutions.

Benefits of Partnering with a Data Sourcing and Labeling Provider

Partnering with a data sourcing and labeling provider can offer several benefits. These providers have the expertise to source or generate custom datasets, ensuring they meet the specific requirements of the AI model. They also possess the necessary skills for data annotation, including domain knowledge and specialized labeling skills. Working with a provider can save time and resources, allowing businesses to focus on other aspects of their AI projects.

When it comes to training AI models, having high-quality data is essential. However, sourcing and labeling large volumes of data can be a challenging and time-consuming task. By partnering with a data sourcing and labeling provider, businesses can leverage their expertise and experience in acquiring and preparing training data.

A data sourcing and labeling provider specializes in collecting, curating, and labeling datasets for AI model training. They have access to a diverse range of data sources and can tailor the dataset to match the specific requirements of the AI model. This helps ensure that the training data is representative of the real-world environment where the AI will be deployed.

Moreover, data annotation is a critical aspect of AI model training. It involves attaching the correct annotations to data points or images, such as class labels for classification or bounding boxes for object detection. Data sourcing and labeling providers bring the domain knowledge and specialized labeling skills needed to annotate the data accurately.
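Whether labels come from an in-house team or a provider, one common way to check their consistency is to have two annotators label the same sample and measure their agreement with Cohen's kappa. Kappa is observed agreement corrected for the agreement expected by chance. A minimal sketch follows; the function name is illustrative.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Probability both annotators pick the same class if labeling independently.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # kappa is undefined here; this sketch treats it as full agreement
    return (observed - expected) / (1 - expected)
```

For example, two annotators who agree on 3 of 4 items, where chance agreement is 0.5, get a kappa of 0.5. A low kappa often means the labeling guidelines need clarifying before more data is labeled.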

By partnering with a data sourcing and labeling provider, businesses can benefit from:

- Expertise: Providers have extensive knowledge and experience in sourcing and labeling data for AI models.

- Custom Datasets: Providers can create custom datasets that precisely match the requirements of the AI model.

- Domain Knowledge: Providers possess deep domain knowledge, enabling accurate annotation of data points.

- Specialized Labeling Skills: Providers have the skills to annotate data with high precision, ensuring the quality of the training data.

- Time and Resource Savings: Partnering with a provider frees up valuable time and resources for businesses to focus on core AI project tasks.

By leveraging the expertise of a data sourcing and labeling provider, businesses can ensure that their AI models are trained on high-quality, accurately labeled datasets. This, in turn, enhances the accuracy and effectiveness of the machine learning applications that utilize these models.

Partnering with a data sourcing and labeling provider can be a strategic decision for businesses looking to streamline their AI model training process and achieve superior results.

Challenges and Considerations in Model Training

Model training presents unique challenges and considerations that must be addressed to ensure the accuracy and effectiveness of AI models. These challenges include:

- Bias and Misrepresentation: Bias and misrepresentation in training data can result in inaccurate or biased models. To mitigate this, it is essential to actively address bias and ensure that AI models are trained on diverse and representative datasets.

- Regulatory Compliance: Regulatory compliance should be a key consideration when collecting and using training data. Organizations must ensure that they adhere to relevant laws and regulations, particularly those related to data privacy.

- Data Privacy: Protecting data privacy is crucial in model training. Organizations must implement appropriate safeguards and protocols to safeguard the privacy and security of the data used for training AI models.
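A simple first check for representation is to compare each group's share of the training data with a reference share, such as its share of the population the model will serve. A minimal sketch follows. The reference shares, the grouping attribute, and the `tolerance` rule are all assumptions to adapt per project. Balanced counts alone do not guarantee an unbiased model, so per-group evaluation metrics are still needed.

```python
from collections import Counter

def underrepresented_groups(group_labels, reference_shares, tolerance=0.5):
    """Flag groups whose share of the dataset is well below a reference share.

    A group is flagged when its observed share is less than
    tolerance * reference_share (by default, under half its expected share).
    Returns {group: observed_share} for flagged groups.
    """
    counts = Counter(group_labels)
    total = len(group_labels)
    flagged = {}
    for group, reference in reference_shares.items():
        observed = counts.get(group, 0) / total
        if observed < tolerance * reference:
            flagged[group] = observed
    return flagged
```

A dataset that is 90% urban and 10% rural, checked against reference shares of 60% and 40%, would flag the rural group.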

Ethical Implications

Bias and misrepresentation in training data have ethical implications. Inaccurate or biased models can perpetuate discrimination and inequality when deployed in real-world applications. It is imperative for organizations to prioritize fairness and equity in model training to avoid unethical consequences.

"The presence of bias and misrepresentation in training data can result in AI models that perpetuate discrimination and inequality when deployed in real-world applications."

Key Considerations in Model Training

When training AI models, organizations should take into account the following considerations:

| Consideration | Description |
|---|---|
| Bias and Representation | Ensure training data is diverse and representative to minimize bias and improve model accuracy. |
| Regulatory Compliance | Adhere to relevant laws and regulations, particularly those related to data privacy and protection. |
| Data Privacy | Implement robust data privacy measures to safeguard sensitive information used in model training. |
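One concrete privacy measure is pseudonymization: direct identifiers such as email addresses are replaced with keyed hashes before the data reaches the training pipeline. A minimal sketch using Python's standard `hmac` and `hashlib` modules follows; the function name is hypothetical. The same identifier and key always produce the same token, so records can still be joined, but the token cannot be reversed without the key. The key must be stored securely and separately from the data. Under regulations such as the GDPR, pseudonymized data can still count as personal data, so this reduces risk rather than removing obligations.

```python
import hashlib
import hmac

def pseudonymize(identifier, secret_key):
    """Replace an identifier with a keyed HMAC-SHA256 token (64 hex characters)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
```

A keyed HMAC is used instead of a plain hash because common identifiers such as email addresses can be re-identified from unkeyed hashes by hashing a list of candidate values.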

By addressing these challenges and considerations, organizations can enhance the quality and reliability of their AI models and uphold ethical standards in the field of AI.

Conclusion

In conclusion, the successful training of AI models relies on several key factors. Data engineering is crucial for ensuring that the training data accurately represents the real-world environment in which the AI will be deployed. It involves defining the data requirements, collecting relevant data from various sources, and cleaning and labeling that data to create a high-quality dataset.

Model selection is another vital aspect, as it entails choosing the right algorithm and tuning hyperparameters to optimize the performance of the AI model. By benchmarking different models and evaluating them against specific performance metrics, businesses can identify the best fit for their use case.

The training process itself involves adjusting model parameters based on the training data and fine-tuning hyperparameters using a validation dataset. Ongoing maintenance is crucial to monitor the performance of AI models, address any issues that arise, and ensure their continued accuracy and reliability.

By following these steps—carefully considering data engineering, selecting the appropriate model, and implementing an effective training process—businesses can improve the accuracy and effectiveness of their AI models in various machine learning applications.

FAQ

What is the importance of data engineering in AI model training?

Data engineering is crucial for AI model training because it covers defining, collecting, cleaning, and labeling the data. It ensures that the training data is representative of the real-world environment where the AI will be deployed.

How can I collect relevant data for AI model training?

Relevant data can be collected from various sources such as public datasets, open-source datasets, and synthetic data. Public datasets can be accessed through platforms like Google Dataset Search, GitHub, Kaggle, and UCI Machine Learning Repository.

How do I choose the right model for AI training?

The choice of model depends on the specific learning goals and data requirements of the project. Different machine learning algorithms and deep learning algorithms are available, each with its own strengths and weaknesses. Benchmarking and performance metrics can be used to evaluate and compare different models.

What are the best practices for setting realistic performance expectations for AI models?

It is important to define the minimum acceptable performance level and communicate it to project owners and stakeholders. A single performance metric should be chosen to evaluate the model throughout its lifecycle. Common performance metrics include classification accuracy for classification tasks and root mean squared error for regression tasks.

What is the training process for AI models?

The training process involves adjusting model parameters and hyperparameters based on the training data. Parameters are learned from the input data during training, while hyperparameters are set externally and define how the model operates. The training dataset is used to fit the model parameters, and the validation dataset is used to evaluate model performance and fine-tune hyperparameters. The testing dataset is used to estimate how the trained model will perform on unseen, real-life data.

How do I maintain AI models after training?

Ongoing maintenance is necessary to ensure optimal performance of AI models. Model inputs can change over time, leading to data drift, which can reduce the accuracy of the model. Continuous monitoring is essential to validate model performance in real-life tasks and make any necessary adjustments such as fine-tuning hyperparameters or updating the training data.

How can I create custom data for AI models?

Custom data creation can be done through data sourcing and annotation services, where existing datasets are labeled or new datasets are created. Synthetic data can also be generated using distribution models or deep learning techniques. Data mining is another option for collecting data from online sources, but privacy regulations and ethical concerns should be considered.

What are the benefits of partnering with a data sourcing and labeling provider?

Partnering with a data sourcing and labeling provider offers benefits such as the expertise to source or generate custom datasets, specialized labeling skills, and domain knowledge. Working with a provider can save time and resources, allowing businesses to focus on other aspects of their AI projects.

What challenges and considerations should be taken into account in model training?

Model training can face challenges related to bias and misrepresentation in the training data, which can lead to inaccurate or biased models with ethical and regulatory implications. It is important to actively address these issues and ensure that models are trained on diverse and representative datasets. Compliance with data privacy regulations should also be considered when collecting and using training data.

What is the best approach for finding the best training data for AI models?

Finding the best training data for AI models involves considering data engineering, model selection, and the training process. Data engineering ensures the training data is representative of the real-world environment. Model selection involves choosing the right algorithm and tuning hyperparameters for optimal performance. The training process involves adjusting model parameters based on the training data and fine-tuning hyperparameters using validation data.