Generating synthetic data for annotation training

Machine learning systems need large amounts of data, but real-world datasets come with obstacles such as regulatory constraints and inherent biases.

That's where artificial datasets come in. Creating realistic simulated data that reflects real-world patterns helps teams get around these bottlenecks. Such datasets preserve the statistical properties of the original while leaving out sensitive details, which makes them well suited to scenarios where original data is scarce or legally restricted.

This approach is transforming industries from healthcare to autonomous vehicles. The flexibility of tailored datasets allows teams to stress-test systems under critical conditions for which no real-world examples exist.

Quick Take

- Artificially generated content addresses privacy and scarcity issues in AI development.

- Tuned datasets improve AI model performance through controlled scenario testing and effective training augmentation.

- Modeled information reduces the risks of bias inherent in traditional collection methods.

- Regulatory compliance becomes easier when sensitive original records are not used.

- Cost-effective generation accelerates project timelines compared to manual data collection.

The role of synthetic data in AI training

Synthetic data, the output of a process often called data synthesis, is artificially generated information that mimics the properties of real datasets but does not contain real-world records. It is created using machine learning algorithms, simulations, or generative models that reproduce the original data's patterns, distributions, and structure. Synthetic data makes it possible to work safely with information in cases where real data is restricted due to confidentiality, ethics, or legal requirements. It is used to train AI models, test software systems, model rare scenarios, and create balanced samples that reduce bias in AI models.
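As a minimal sketch of what "reproducing distributions" can mean, the example below fits a normal distribution to one numeric column and samples new values from it. The column (`real_ages`) and its values are hypothetical, and a single Gaussian is an assumption chosen for brevity; real generators model many columns and their relationships.

```python
import random
import statistics

def fit_gaussian(values):
    """Estimate the mean and standard deviation of a numeric column."""
    return statistics.mean(values), statistics.stdev(values)

def sample_synthetic(mean, stdev, n, seed=0):
    """Draw n synthetic values from the fitted normal distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean, stdev) for _ in range(n)]

# Hypothetical "real" column (illustrative values only).
real_ages = [34, 45, 29, 61, 52, 38, 47, 55, 41, 36]

mean, stdev = fit_gaussian(real_ages)
synthetic_ages = sample_synthetic(mean, stdev, n=1000)
```

The synthetic values follow the fitted distribution rather than copying individual records from the original column.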

Advantages of using artificially generated data

- Privacy and security. Allows you to train AI models without disclosing personal data.

- Unlimited availability. You can create data in any volume if real examples are lacking.

- Sample balancing. Helps avoid bias by generating additional examples for underrepresented groups.

- Rare scenario simulations. Recreates situations that are difficult or dangerous to capture in reality.

- Cost and time savings. Reduces the need for lengthy collection of real data.

- Safe testing. Lets people and businesses test algorithms without risk.
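Sample balancing from the list above can be sketched as follows. This is a simplified interpolation between random pairs of minority-class points, in the spirit of SMOTE but not the full algorithm; the function name and the toy data are assumptions for illustration.

```python
import random

def oversample_minority(minority, target_size, seed=0):
    """Grow a minority class by interpolating between random pairs of its points."""
    rng = random.Random(seed)
    synthetic = []
    while len(minority) + len(synthetic) < target_size:
        a, b = rng.sample(minority, 2)
        t = rng.random()  # position along the segment from a to b
        synthetic.append(tuple(x + t * (y - x) for x, y in zip(a, b)))
    return minority + synthetic

# Toy two-feature dataset: 20 majority points, 3 minority points.
majority = [(0.1 * i, 1.0) for i in range(20)]
minority = [(5.0, 2.0), (5.5, 2.4), (6.0, 2.1)]

balanced_minority = oversample_minority(minority, target_size=len(majority))
```

Because every new point lies between two existing minority points, the generated examples stay inside the region the minority class already occupies.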

Synthetic Data Generation Methods

Four primary methods allow teams to create tailored data while maintaining statistical accuracy. Each approach addresses different industry needs, from healthcare diagnostics to fraud detection systems.

Generative Methods

Generative adversarial networks (GANs) pit two neural networks against each other. A generator creates samples while a discriminator evaluates them, which drives continuous improvement. This method can create realistic medical images for training diagnostic tools without compromising patient privacy.

Variational autoencoders (VAEs) compress the original data into simplified latent representations and decode new variations from them. Financial institutions use VAEs to create transaction patterns that help detect new fraud tactics. In controlled tests, both methods can closely match the statistical properties of real-world patterns.

Rule-based and simulation-based strategies

Structured rule systems control input parameters. Developers define specific constraints, such as driving patterns for autonomous vehicle testing, to create data that matches particular scenarios. This approach is important for training safety systems, which require failure conditions to be reproduced accurately.
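A rule-based generator can be sketched as a set of parameter ranges plus constraints that every sample must satisfy. The rules below (`RULES`, `MAX_SPEED_ON_ICE`) are hypothetical values invented for the example, not taken from any real test specification.

```python
import random

# Hypothetical constraints for a braking scenario; values are illustrative.
RULES = {
    "speed_kmh": (30, 130),
    "road": ["dry", "wet", "icy"],
    "obstacle_distance_m": (5, 150),
}
MAX_SPEED_ON_ICE = 60  # assumed rule: icy scenarios are capped at lower speeds

def generate_scenario(rng):
    """Sample one scenario that satisfies every rule."""
    road = rng.choice(RULES["road"])
    low, high = RULES["speed_kmh"]
    if road == "icy":
        high = min(high, MAX_SPEED_ON_ICE)
    return {
        "road": road,
        "speed_kmh": rng.randint(low, high),
        "obstacle_distance_m": rng.randint(*RULES["obstacle_distance_m"]),
    }

rng = random.Random(42)
scenarios = [generate_scenario(rng) for _ in range(500)]
```

Since the constraints are applied at generation time, every scenario is valid by construction and no filtering pass is needed afterwards.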

Simulation methods create complex environments using physics engines or digital twins. Robotics teams use them to create manufacturing defect scenarios in virtual factories. Hybrid approaches combine multiple methods, pairing creative variation with deterministic control.
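To illustrate the simulation idea at a very small scale, the sketch below uses a toy braking model in place of a physics engine or digital twin. The parameter ranges and the fixed one-second reaction time are assumptions; the braking term uses the standard formula v² / (2μg).

```python
import random

G = 9.81  # gravitational acceleration in m/s^2

def stopping_distance(speed_ms, friction, reaction_s):
    """Reaction distance plus braking distance v^2 / (2 * mu * g)."""
    return speed_ms * reaction_s + speed_ms ** 2 / (2 * friction * G)

def simulate_run(rng):
    """Simulate one braking event and label it from the physics, not by hand."""
    speed = rng.uniform(8.0, 36.0)       # m/s, assumed range
    friction = rng.uniform(0.1, 0.9)     # assumed range, icy to dry road
    obstacle = rng.uniform(10.0, 150.0)  # metres to the obstacle, assumed range
    needed = stopping_distance(speed, friction, reaction_s=1.0)
    return {
        "speed_ms": speed,
        "friction": friction,
        "obstacle_m": obstacle,
        "collision": needed > obstacle,
    }

rng = random.Random(7)
runs = [simulate_run(rng) for _ in range(1000)]
```

The label (`collision`) comes directly from the simulated outcome, which is one reason simulated data is attractive for annotation: the ground truth is known without manual labeling.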

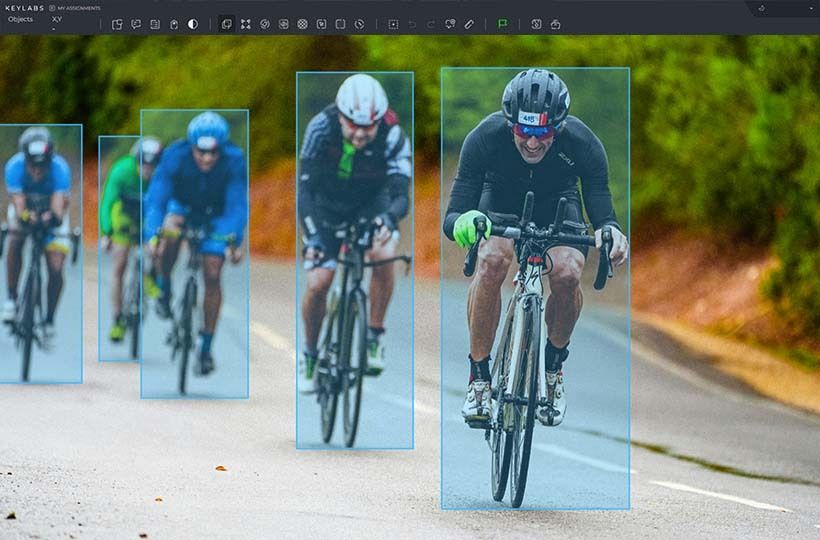

Synthetic Data Annotation Methods

A three-step verification process sets the quality standard for annotations:

- Double Tagging. Two annotators label each sample.

- Discrepancy Analysis. Algorithms detect samples where the annotators' labels conflict.

- Template Audit. Regular checks for labeling drift over time.

This approach reduces the risk of errors, maintains confidence in the results, and supports regulatory compliance.
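The first two steps can be sketched in a few lines: compare the two annotators' labels, list the samples where they differ, and summarize agreement with Cohen's kappa, a common chance-corrected agreement measure. The label lists are made up for the example.

```python
from collections import Counter

def find_disagreements(labels_a, labels_b):
    """Return the indices where the two annotators chose different labels."""
    return [i for i, (a, b) in enumerate(zip(labels_a, labels_b)) if a != b]

def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1:  # both annotators used a single identical label
        return 1.0
    return (observed - expected) / (1 - expected)

# Hypothetical labels from two annotators on the same eight samples.
annotator_a = ["cat", "dog", "dog", "cat", "dog", "cat", "cat", "dog"]
annotator_b = ["cat", "dog", "cat", "cat", "dog", "cat", "dog", "dog"]

to_review = find_disagreements(annotator_a, annotator_b)  # [2, 6]
kappa = cohens_kappa(annotator_a, annotator_b)            # 0.5
```

Samples listed in `to_review` would go to a third reviewer, and a kappa that falls over successive batches is one possible signal of the labeling drift that the audit step looks for.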

Specialized Verification Networks

Specialized verification networks are aimed at improving the quality and reliability of artificially generated datasets. They act as an additional control stage that checks whether synthetic data matches real-world patterns and is suitable for training artificial intelligence. After synthetic examples are generated, they are passed through a verification model trained on verified data. This model assesses the extent to which the generated samples retain the desired characteristics, are free of anomalies, and match the expected statistical indicators. When it finds deviations, the network automatically rejects the incorrect data or flags it for manual verification.

This approach is helpful in areas where quality and accuracy matter, such as medicine or finance. Specialized verification networks make the annotation of synthetic data more reliable, automated, and scalable, which increases the effectiveness of AI applications in practical tasks.
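The accept, reject, or flag-for-review flow can be sketched with a simple statistical check standing in for a trained verification model. The z-score thresholds and the reference values are assumptions chosen for illustration; a real verifier would be a learned model working on far richer features.

```python
import statistics

def build_verifier(reference, review_z=2.0, reject_z=3.0):
    """Return a function that routes a sample by its distance from verified data."""
    mean = statistics.mean(reference)
    stdev = statistics.stdev(reference)

    def verify(value):
        z = abs(value - mean) / stdev
        if z > reject_z:
            return "reject"  # too far from verified data, drop automatically
        if z > review_z:
            return "review"  # borderline, send to a human
        return "accept"

    return verify

# Hypothetical verified measurements used as the reference.
reference = [98, 101, 100, 99, 102, 100, 97, 103, 100, 100]
verify = build_verifier(reference)

decisions = [verify(v) for v in (100.5, 104, 110)]
# ['accept', 'review', 'reject']
```

Keeping a middle "review" band means only borderline samples reach human reviewers, which is what lets the process scale.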

Improving data quality and reducing bias

Quality is achieved by tuning generation algorithms, verifying outputs at multiple levels, and using methods that preserve the statistical regularities of the original datasets. Bias is reduced through sample balancing strategies, metrics that detect disparities, and regularization techniques that keep the AI model from reproducing social or structural biases.

Ensuring high accuracy of generated materials

Advanced generation methods, such as GANs and VAEs, can achieve high consistency with the patterns found in real-world data. A quality assessment evaluates three dimensions:

- Distribution matching. Comparison of statistical profiles by key indicators.

- Correlation preservation. Maintaining variable relationships within tolerance limits.

- Edge case coverage. Stress testing of rare scenarios.

Models trained on validated data tend to behave more consistently in production environments.
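The first two dimensions can be checked with standard statistics. The sketch below computes the two-sample Kolmogorov-Smirnov statistic (the largest gap between two empirical distributions) for distribution matching and compares Pearson correlations for correlation preservation. The tolerance of 0.1 and the sample data are assumptions, and edge case coverage is left out because it depends on how rare scenarios are defined for a given dataset.

```python
import bisect
import math

def ks_statistic(a, b):
    """Largest gap between the two empirical CDFs (two-sample KS statistic)."""
    a, b = sorted(a), sorted(b)
    return max(
        abs(bisect.bisect_right(a, x) / len(a) - bisect.bisect_right(b, x) / len(b))
        for x in a + b
    )

def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((p - mx) * (q - my) for p, q in zip(x, y))
    var_x = sum((p - mx) ** 2 for p in x)
    var_y = sum((q - my) ** 2 for q in y)
    return cov / math.sqrt(var_x * var_y)

def quality_report(real, synthetic, tolerance=0.1):
    """Compare two-column datasets on distribution and correlation."""
    real_x, real_y = zip(*real)
    syn_x, syn_y = zip(*synthetic)
    corr_gap = abs(pearson(real_x, real_y) - pearson(syn_x, syn_y))
    return {
        "ks_x": ks_statistic(real_x, syn_x),
        "ks_y": ks_statistic(real_y, syn_y),
        "corr_gap": corr_gap,
        "corr_ok": corr_gap <= tolerance,
    }

# Hypothetical paired measurements.
real = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 8.1), (5, 9.8)]
synthetic = [(1.2, 2.5), (2.1, 4.2), (2.9, 5.8), (4.2, 8.4), (4.8, 9.9)]
report = quality_report(real, synthetic)
```

A KS statistic near 0 means the two columns are distributed similarly, while a value near 1 means they barely overlap.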

Integrating synthetic and real data for optimal model performance

Integrating synthetic and real data combines the flexibility of generative methods with the reliability of real examples. Real data provides authenticity and reflects natural patterns, while synthetic data complements it where information is lacking, samples are biased, or rare scenarios are absent. This approach expands the diversity of the sample, reduces the risk of overfitting, and makes the model more robust to new situations. Combining sources also allows systems to be tested in controlled conditions without risk to user privacy, which is important in medicine and finance. As a result, integrating real and synthetic datasets creates a balanced learning environment, improves the generalization ability of the AI model, and leads to more accurate and safer solutions.
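One simple way to combine the two sources is to keep every real row and add synthetic rows until they make up a chosen share of the training set. The function name, the 30% share, and the placeholder rows are assumptions; the right ratio depends on the task and should be validated on held-out real data.

```python
import random

def mix_datasets(real, synthetic, synthetic_share=0.3, seed=0):
    """Keep all real rows and add synthetic rows up to the requested share."""
    if not 0 <= synthetic_share < 1:
        raise ValueError("synthetic_share must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_synth / (n_real + n_synth) = synthetic_share for n_synth.
    n_synth = round(len(real) * synthetic_share / (1 - synthetic_share))
    n_synth = min(n_synth, len(synthetic))
    chosen = rng.sample(synthetic, n_synth)
    mixed = [(row, "real") for row in real] + [(row, "synthetic") for row in chosen]
    rng.shuffle(mixed)
    return mixed

# Placeholder rows standing in for actual records.
real_rows = [f"real_{i}" for i in range(70)]
synthetic_rows = [f"syn_{i}" for i in range(100)]

training_set = mix_datasets(real_rows, synthetic_rows, synthetic_share=0.3)
```

Tagging each row with its source makes it possible to evaluate later on real rows only, so that synthetic data does not inflate the reported accuracy.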

Future Trends and Emerging Technologies in Synthetic Data Generation

Next-generation systems learn through reinforcement learning loops. These frameworks analyze gaps in model performance and automatically generate targeted data to address weaknesses.

Three developments define this direction:

- Neural networks that mimic human intuition to predict rare scenarios.

- Real-time feedback systems that adjust generation parameters during training.

- Cross-industry template sharing through secure knowledge graphs.
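The gap-driven generation loop described above can be reduced to one core step: measure where the model is weakest and spend the generation budget there. The sketch below splits a budget in proportion to per-class error rates. The class names, error rates, and the proportional rule are assumptions, not a description of any specific framework.

```python
def allocate_generation_budget(error_rates, budget):
    """Split a synthetic-sample budget across classes in proportion to error."""
    total = sum(error_rates.values())
    if total == 0:
        return {label: 0 for label in error_rates}
    return {
        label: round(budget * error / total)
        for label, error in error_rates.items()
    }

# Hypothetical per-class validation error rates from the current model.
error_rates = {"pedestrian": 0.30, "cyclist": 0.15, "car": 0.05}

plan = allocate_generation_budget(error_rates, budget=1000)
# {'pedestrian': 600, 'cyclist': 300, 'car': 100}
```

In a full loop, the model would be retrained on the new samples, the error rates measured again, and the allocation repeated.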

Next-generation generative adversarial networks and diffusion models are expected to generate detailed and diverse datasets for complex medical research or autonomous transportation scenarios. At the same time, integrating differential privacy and federated learning methods will combine personal information protection with synthetic examples. A new direction will be the generation of multimodal data, which combines text, images, video, and sensor data to train artificial intelligence. Synthetic data is expected to become a leading resource for innovation in medicine, finance, manufacturing, and the public sector.
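As a minimal illustration of the differential privacy idea, the sketch below applies the Laplace mechanism to a count before it would be handed to a generator. A counting query has sensitivity 1, so the noise scale is 1 / epsilon. The function names and the example values are assumptions, and a production system would also need to track the total privacy budget across queries.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(true_count, epsilon, rng):
    """Release a count with epsilon-differential privacy (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(1)
# Hypothetical statistic: number of patients with a given diagnosis.
noisy_count = private_count(100, epsilon=1.0, rng=rng)
```

Smaller values of epsilon add more noise and give stronger privacy, at the cost of less accurate statistics for the generator to learn from.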