How to Use a Confusion Matrix to Evaluate an AI Model

Confusion matrices help evaluate an AI model's performance. They divide predictions into true positives, true negatives, false positives, and false negatives. This breakdown shows where a model performs well and where it falls short.

The confusion matrix provides the counts needed to calculate precision, recall, and F1 scores. These metrics are important for improving algorithms and deciding how to deploy an AI model.

Quick Take

- Confusion matrices provide a detailed analysis of AI model predictions.

- Confusion matrices help calculate precision, recall, and F1 scores.

- They work for both binary and multi-class classification problems.

- Understanding confusion matrices helps you evaluate AI models correctly.

Understanding the Basics of Model Evaluation

AI model evaluation tests a model's accuracy, reliability, and ability to generalize to new data. It lets you assess the model's predictive power and quality. The results guide both the choice of the best model and the work of improving it.

Common Evaluation Metrics

Different machine learning tasks require different evaluation metrics. For supervised classification, accuracy, precision, recall, and F1-score are derived from the confusion matrix. Unsupervised learning uses metrics that measure cohesion, separation, and error, such as the silhouette score for clustering.

Introduction to the Confusion Matrix

A confusion matrix is a table that shows how well an AI model classifies data by comparing the actual and predicted classes. For binary classification it contains four key elements: true positives, false positives, true negatives, and false negatives. Together they allow a detailed assessment of model quality. The matrix is especially useful with imbalanced data sets or when the costs of different errors vary significantly.

Structure of the Confusion Matrix

The basic structure of a confusion matrix for binary classification includes four main components:

- True positive (TP). Correctly predicted positive cases.

- True negative (TN). Correctly predicted negative cases.

- False positive (FP). Incorrectly predicted positive cases (Type I error).

- False negative (FN). Incorrectly predicted negative cases (Type II error).
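As a minimal sketch of how these four counts arise, the snippet below tallies them from paired actual and predicted labels. The function name `count_outcomes` and the sample labels are illustrative assumptions, with 1 standing for the positive class and 0 for the negative class.

```python
def count_outcomes(y_true, y_pred, positive=1):
    """Tally TP, TN, FP, and FN for a binary problem."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            # Predicted positive: correct only if the case really is positive.
            counts["TP" if actual == positive else "FP"] += 1
        else:
            # Predicted negative: correct only if the case really is negative.
            counts["TN" if actual != positive else "FN"] += 1
    return counts


# Hypothetical labels, invented for illustration (1 = positive, 0 = negative).
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
print(count_outcomes(y_true, y_pred))
# {'TP': 2, 'TN': 2, 'FP': 1, 'FN': 1}
```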

Key Components

Consider an example confusion matrix with 86 true positives, 79 true negatives, 12 false positives (Type I errors), and 10 false negatives (Type II errors). These numbers allow us to calculate various performance measures:

- Accuracy: 88.24%

- Precision: 87.76%

- Recall: 89.58%

- F1-score: 88.66%
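The arithmetic behind these percentages can be checked directly from the four counts. This is a small sketch using the counts quoted above; the helper name `binary_metrics` is an assumption made for illustration.

```python
def binary_metrics(tp, tn, fp, fn):
    """Derive the standard metrics from the four confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Counts from the example above.
metrics = binary_metrics(tp=86, tn=79, fp=12, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```

The sketch assumes every denominator is non-zero. A model that never predicts the positive class, for instance, would need an explicit guard before computing precision.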

Confusion Matrix for Binary Classification

A two-class confusion matrix for binary classification helps assess an AI model's accuracy.

Components of a binary confusion matrix:

- True Positives (TP). Correctly identified positive cases.

- True Negatives (TN). Correctly identified negative cases.

- False Positives (FP). Negative cases incorrectly labeled as positive.

- False Negatives (FN). Positive cases incorrectly labeled as negative.

Consider a spam detection system evaluated on 100 emails. The model correctly identified 15 spam emails (TP) and 50 non-spam emails (TN). It incorrectly labeled 10 non-spam emails as spam (FP) and 25 spam emails as non-spam (FN).

The confusion matrix makes it quick to calculate key metrics such as accuracy, precision, and recall. For example, the accuracy here is (15 + 50) / 100 = 65%.
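The spam example can be laid out as a 2 x 2 array to make the arithmetic explicit. The sketch below assumes rows are actual classes and columns are predicted classes, ordered non-spam then spam; that ordering is a choice made here for illustration, not something the example fixes.

```python
import numpy as np

# Rows: actual class, columns: predicted class, ordered [non-spam, spam].
# The ordering is an assumption made for this sketch.
cm = np.array([
    [50, 10],   # actual non-spam: 50 kept (TN), 10 flagged as spam (FP)
    [25, 15],   # actual spam: 25 missed (FN), 15 caught (TP)
])

tn, fp = cm[0]
fn, tp = cm[1]

accuracy = (tp + tn) / cm.sum()      # (15 + 50) / 100
precision = tp / (tp + fp)           # 15 / 25
recall = tp / (tp + fn)              # 15 / 40

print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.3f}")
# accuracy=0.65 precision=0.60 recall=0.375
```

A recall of 0.375 shows what the 65% accuracy hides: most spam emails slip through the filter.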

Multi-Class Confusion Matrix Extension

Multi-class classification extends the confusion matrix to handle more than two outcomes. This approach helps determine how well an AI model performs across different classes.

Structure of Multi-Class Classification Matrices

A confusion matrix for multi-class classification is an N x N grid, where N is the number of classes. Each row represents an actual class, and each column represents a predicted class. This layout shows the correct and incorrect predictions for each class.

Interpreting Multi-Class Results

Correct predictions lie on the diagonal, and misclassifications lie in the off-diagonal cells. This layout shows which classes are confused with which others.
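To illustrate reading the diagonal and off-diagonal cells, here is a short sketch with NumPy. The three class names and all counts are hypothetical, invented only for this example; rows are actual classes and columns are predicted classes.

```python
import numpy as np

labels = ["cat", "dog", "bird"]  # hypothetical classes

# Hypothetical counts: rows are actual classes, columns are predicted classes.
cm = np.array([
    [40,  8,  2],   # actual cat
    [ 6, 45,  4],   # actual dog
    [ 3,  5, 37],   # actual bird
])

correct = np.trace(cm)   # sum of the diagonal = correct predictions
total = cm.sum()
print(f"overall accuracy: {correct / total:.3f}")
# overall accuracy: 0.813

# Zero the diagonal so that only misclassifications remain.
errors = cm.copy()
np.fill_diagonal(errors, 0)

# Locate the largest off-diagonal cell: the most frequent confusion.
actual_idx, predicted_idx = np.unravel_index(errors.argmax(), errors.shape)
print(f"most common error: {labels[actual_idx]} predicted as {labels[predicted_idx]}")
# most common error: cat predicted as dog
```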

One-vs-All Approach

The one-vs-all method treats one class as positive and all the others as negative. Repeating this for every class produces one binary problem per class. This strategy makes it easier to calculate class-specific metrics and helps identify where problems arise in the AI model.
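One way to apply the one-vs-all view is to derive per-class counts from an existing multi-class matrix rather than training separate classifiers. The sketch below does that with NumPy; the function name `one_vs_all_counts` and the matrix values are assumptions for illustration, and rows are taken to be actual classes.

```python
import numpy as np


def one_vs_all_counts(cm):
    """Return per-class TP, FP, FN, TN arrays from an N x N confusion matrix.

    Assumes rows are actual classes and columns are predicted classes.
    """
    cm = np.asarray(cm)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as the class, but actually another
    fn = cm.sum(axis=1) - tp   # actually the class, but predicted as another
    tn = cm.sum() - (tp + fp + fn)
    return tp, fp, fn, tn


# Hypothetical three-class matrix, invented for illustration.
cm = [
    [40,  8,  2],
    [ 6, 45,  4],
    [ 3,  5, 37],
]
tp, fp, fn, tn = one_vs_all_counts(cm)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
for i in range(len(cm)):
    print(f"class {i}: precision={precision[i]:.3f} recall={recall[i]:.3f}")
```

Each class gets its own TP, FP, FN, and TN, so the binary formulas for precision and recall apply unchanged.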

Key Confusion Matrix Metrics

Accuracy measures the share of all predictions that are correct. Precision measures the share of positive predictions that are actually positive. Recall measures the ability of an AI model to detect all positive cases. The F1 score combines precision and recall into a single metric by taking their harmonic mean.
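Written in terms of the four binary counts TP, TN, FP, and FN, these metrics take the following standard forms:

```latex
\begin{aligned}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
F_1 &= 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
     = \frac{2\,TP}{2\,TP + FP + FN}
\end{aligned}
```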

Specificity and Sensitivity

Specificity and sensitivity are central to medical diagnostics. Sensitivity, another name for recall, measures the true positive rate, while specificity measures the true negative rate.
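Both rates follow directly from the four counts. The sketch below uses hypothetical screening numbers, invented for illustration; the function name `sensitivity_specificity` is likewise an assumption.

```python
def sensitivity_specificity(tp, tn, fp, fn):
    """Return (sensitivity, specificity) from binary confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    return sensitivity, specificity


# Hypothetical screening results, invented for illustration:
# 90 sick patients detected, 10 missed, 850 healthy cleared, 50 false alarms.
sens, spec = sensitivity_specificity(tp=90, tn=850, fp=50, fn=10)
print(f"sensitivity={sens:.2f} specificity={spec:.3f}")
# sensitivity=0.90 specificity=0.944
```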

Micro-, Macro-, and Weighted Averages

Micro-, macro-, and weighted averages summarize an AI model's performance in multi-class scenarios. They combine per-class results into a single score in different ways.

- The micro-average assesses the metric globally, counting the total number of true positives, false negatives, and false positives across all classes.

- The macro-average computes the metric for each class and averages the results without weighting.

- The weighted average is similar to the macro-average but weights each class's metric by its support, the number of actual instances of that class.

Knowing how each of these metrics is derived from the confusion matrix helps you choose the one that fits a specific application.
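The three averaging schemes can be compared side by side with scikit-learn's `precision_score`, which accepts an `average` argument. The labels below are hypothetical and deliberately imbalanced so that the three averages differ.

```python
from sklearn.metrics import precision_score

# Hypothetical three-class labels with imbalanced class sizes (6, 3, and 1).
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 2]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 2, 2]

scores = {}
for average in ("micro", "macro", "weighted"):
    scores[average] = precision_score(y_true, y_pred, average=average)
    print(f"{average:>8} precision: {scores[average]:.3f}")
```

With these labels the per-class precisions are 1.0, 2/3, and 0.5, so the micro, macro, and weighted averages come out at 0.800, 0.722, and 0.850. The macro-average is pulled down by the two small classes, while the weighted average is dominated by the large one.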

Visualizing Confusion Matrices

Visualizing confusion matrices helps you understand the performance of your AI model. Heatmaps and color coding highlight patterns and areas for improvement.

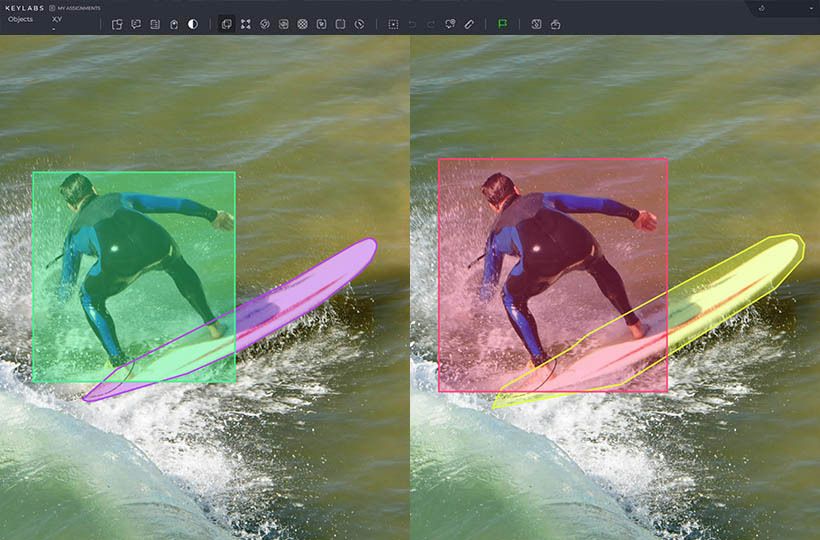

Heatmaps and Color Coding

Heatmaps display the values in a confusion matrix using color intensity. Which shades mark high values depends on the color map: in some, bright colors indicate high values and dark colors low values, while others reverse this. Either way, the method helps you identify the strengths and weaknesses of your AI model.

Color coding visually represents information by assigning colors to different values or categories. In a confusion matrix, correct predictions can be colored green and errors red, allowing you to assess the quality of your AI model quickly.

Visualization Tools and Libraries

Python libraries such as Matplotlib and Seaborn are easy to use and include functions for creating confusion matrix plots.

They help you present your results clearly to stakeholders and quickly identify areas for improvement.
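A heatmap of this kind can be sketched with Matplotlib alone. The helper name `plot_confusion_matrix` is an assumption for this example, the `Blues` color map is one choice among many, and the counts are taken from the spam example earlier in the article.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so this also runs without a display
import matplotlib.pyplot as plt
import numpy as np


def plot_confusion_matrix(cm, labels):
    """Draw a confusion matrix as an annotated heatmap and return (fig, ax)."""
    cm = np.asarray(cm)
    fig, ax = plt.subplots(figsize=(4, 4))
    image = ax.imshow(cm, cmap="Blues")   # in this color map, larger counts are darker
    fig.colorbar(image, ax=ax)

    ax.set_xticks(range(len(labels)))
    ax.set_xticklabels(labels)
    ax.set_yticks(range(len(labels)))
    ax.set_yticklabels(labels)
    ax.set_xlabel("Predicted class")
    ax.set_ylabel("Actual class")

    # Write each count into its cell so exact values stay readable.
    for row in range(cm.shape[0]):
        for col in range(cm.shape[1]):
            ax.text(col, row, str(cm[row, col]), ha="center", va="center")
    return fig, ax


# Counts from the spam example, ordered [non-spam, spam].
fig, ax = plot_confusion_matrix([[50, 10], [25, 15]], ["non-spam", "spam"])
# fig.savefig("confusion_matrix.png")  # uncomment to write the image to disk
```

Returning the figure and axes leaves it to the caller to save, show, or further style the plot.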

Practical Implementation in Python

Implementing confusion matrices in Python is easy with libraries like scikit-learn (imported as sklearn), TensorFlow, and Keras. Let's examine an example of using scikit-learn to evaluate the performance of a movie recommendation system.

First, import the core modules:

from sklearn.metrics import confusion_matrix, accuracy_score, precision_score, recall_score, f1_score

import numpy as np

Next, prepare arrays for true and predicted labels:

y_true = np.array([1, 1, 0, 1, 0, 0, 1, 0, 1, 0])

y_pred = np.array([1, 1, 0, 0, 0, 1, 1, 0, 1, 0])

Then, generate the confusion matrix:

cm = confusion_matrix(y_true, y_pred)

print("Confusion Matrix:\n", cm)

Calculate key metrics:

accuracy = accuracy_score(y_true, y_pred)

precision = precision_score(y_true, y_pred)

recall = recall_score(y_true, y_pred)

f1 = f1_score(y_true, y_pred)

print(f"Accuracy: {accuracy:.2f}")

print(f"Precision: {precision:.2f}")

print(f"Recall: {recall:.2f}")

print(f"F1 Score: {f1:.2f}")

Running this code prints an accuracy of 0.80, a precision of 0.80, a recall of 0.80, and an F1 score of 0.80, because the predictions contain four true positives, four true negatives, one false positive, and one false negative. These metrics give an idea of the AI model's performance and help improve the recommendation system.
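To see which cells produce these scores, the 2 x 2 matrix can be unpacked into its four counts. For binary labels 0 and 1, scikit-learn orders the matrix so that `ravel()` yields TN, FP, FN, TP. The snippet repeats the arrays from above so that it runs on its own.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

y_true = np.array([1, 1, 0, 1, 0, 0, 1, 0, 1, 0])
y_pred = np.array([1, 1, 0, 0, 0, 1, 1, 0, 1, 0])

# Rows are actual classes and columns are predicted classes, in label order [0, 1].
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()
print(f"TN={tn} FP={fp} FN={fn} TP={tp}")
# TN=4 FP=1 FN=1 TP=4
```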

For deep learning models, TensorFlow and Keras have similar features. These tools allow you to evaluate and improve your machine-learning models in different applications.

Summary

A confusion matrix is central to evaluating and analyzing AI model performance. It offers a detailed overview of a model's strengths and weaknesses. Understanding the relationships between true positives, true negatives, false positives, and false negatives helps you make informed decisions about how to improve your AI model.

Confusion matrix metrics such as precision, recall, and F1-score provide deep insight into the behavior of a model. Precision, which is equal to TP / (TP + FP), is key when false positives are costly. Recall, or TP / (TP + FN), is important when missing positive cases leads to serious consequences. The F1-score, the harmonic mean of precision and recall, balances the two in a single number.

Tools such as ROC curves and the AUC metric help visualize and measure an AI model's ability to distinguish between classes at different thresholds. Effective assessment requires a comprehensive approach tailored to the project's specific needs and to the cost of misclassifications in the given industry.

FAQ

What is a confusion matrix?

A confusion matrix is a table that shows how well a model classifies data, comparing the actual and predicted classes.

What are the components of a confusion matrix?

The parts of a confusion matrix include true positives (TP), true negatives (TN), false positives (FP or Type I error), and false negatives (FN or Type II error).

How is a confusion matrix used for binary classification?

For binary classification, a confusion matrix is a 2x2 table showing each class's correct and incorrect predictions.

What metrics can be obtained from a confusion matrix?

Various performance metrics such as accuracy, precision, recall (also called sensitivity), F1-score, and specificity can be obtained.

Why are confusion matrices important for model evaluation?

Confusion matrices provide insight beyond accuracy, showing patterns in misclassifications and class-specific performance.

How can confusion matrices be visualized?

Visualizing confusion matrices as color-coded heat maps improves their interpretability. This makes it easier to identify patterns and areas of poor performance.

How can confusion matrices be implemented in Python?

Use libraries such as scikit-learn, TensorFlow, and Keras.