Human-in-the-Loop: Balancing Automation and Expert Labelers

Human-in-the-loop (HITL) is an approach to AI and machine learning that integrates human expertise into automated systems to improve accuracy, reliability, and adaptability. While AI is great at processing large amounts of data, it often struggles with ambiguity, rare events, and ethical considerations.

HITL plays an important role in content moderation on digital platforms. AI can filter out harmful or inappropriate content on a large scale, but moderators intervene to check ambiguous cases, preventing over-filtering or misinterpretation that could violate users' rights.

Understanding Human-in-the-Loop Refinement

HITL refinement is a machine learning approach that combines automation with annotator expertise to improve accuracy and address ethical concerns in AI. Rather than relying solely on automation, it introduces human oversight at key stages, so that models learn from expert corrections and get better over time.

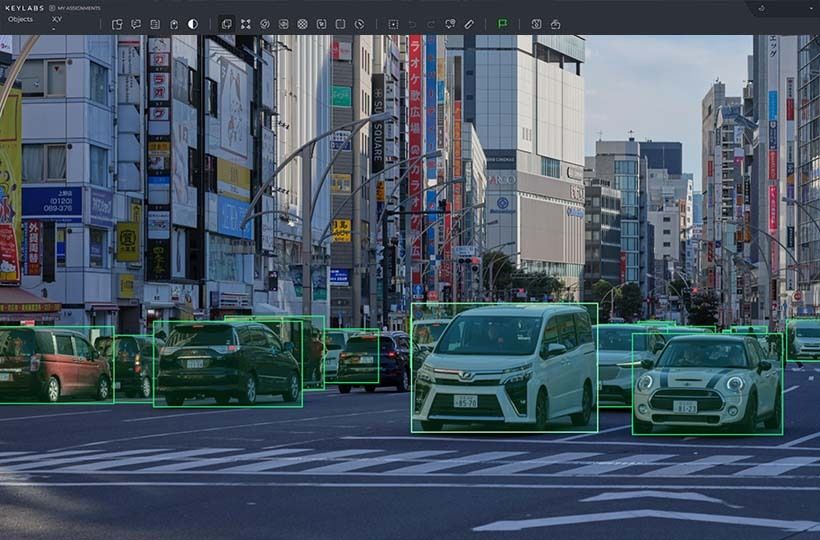

By its very nature, HITL refinement is a semi-automated process. This is especially important in supervised learning, where annotators label large data sets to train algorithms to recognize patterns. Automated systems often have trouble with nuanced decisions, such as detecting bias in data or making ethical judgments.

The Role of Automation in Data Processing

Modern businesses and organizations create massive data sets, making manual processing impractical. Automated data processing technologies based on artificial intelligence and machine learning simplify data collection, cleansing, transformation, and analysis tasks. This reduces human error, speeds decision-making, and allows professionals to focus on higher-level tasks rather than repetitive data management.

One of the main benefits of data automation is its ability to ensure consistency and accuracy. Automated systems apply standardized rules to validate data, identifying and correcting inconsistencies that could otherwise jeopardize analytical results. In addition to efficiency, automation provides real-time data processing, essential for fraud detection, predictive analytics, and personalized recommendations.
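As a rough illustration of rule-based validation, the sketch below applies the same checks to every record and reports which fields fail. The field names and rules are hypothetical and chosen only for the example.

```python
from typing import Callable

# Hypothetical rules: each field maps to a check that returns True for a valid value.
RULES: dict[str, Callable[[object], bool]] = {
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
    "email": lambda v: isinstance(v, str) and "@" in v,
}


def validate(record: dict) -> list[str]:
    """Return the names of fields that are missing or fail their rule."""
    return [
        field
        for field, check in RULES.items()
        if field not in record or not check(record[field])
    ]


print(validate({"age": 34, "email": "ana@example.com"}))  # []
print(validate({"age": -5, "email": "not-an-email"}))     # ['age', 'email']
```

Records that fail a rule could be corrected automatically when the fix is unambiguous, or passed to a reviewer when it is not.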

Limitations of Fully Automated Systems

Fully automated systems offer speed, efficiency, and scalability but have significant limitations, especially when dealing with complex, ambiguous, or ethically sensitive situations. One of the main drawbacks is their inability to fully understand a person's context, nuances, or intentions. AI models and algorithms work based on patterns in the data, but they struggle with unpredictable scenarios or rare edge cases beyond their training.

Another key limitation is bias in automated decision-making. Since AI models are trained on historical data, they can inadvertently reinforce existing biases in the data set. Without human intervention, automated systems can produce unfair or discriminatory outcomes, especially in areas such as hiring, lending, or law enforcement.

Challenges in Human-in-the-Loop Systems

One of the main challenges is scalability. While automation allows AI systems to process vast amounts of data, adding human oversight can quickly slow down throughput and increase costs. Annotators, reviewers, or decision-makers cannot match the speed of artificial intelligence, making it difficult to maintain efficiency, especially when dealing with large-scale or real-time applications such as fraud detection, content moderation, or medical diagnostics.

Another challenge is ensuring consistency and minimizing human bias. Different reviewers may interpret the same data differently, leading to inconsistencies in training data and decision-making. This can affect the performance of artificial intelligence models, as inconsistent labels or feedback can cause errors rather than improve accuracy. In addition, human biases, whether conscious or unconscious, can affect HITL processes, potentially reinforcing societal prejudices rather than mitigating them.
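One common way to quantify how consistently two reviewers label the same items is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The function below is a minimal sketch; the label values are made up for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Fraction of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random with their own label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

A value near 1 indicates strong agreement, while a value near 0 means the reviewers agree about as often as chance would predict, which usually points to unclear guidelines.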

Balancing Cost and Efficiency

Automation alone is cost-effective for processing large-scale data, but it can have problems with edge cases and nuanced decision-making. Conversely, excessive human intervention increases reliability but significantly increases operating costs.

One way to manage costs while maintaining efficiency is strategically allocating human involvement. Instead of having annotators review every data point or decision, organizations can use artificial intelligence to handle routine or simple cases while saving human input for complex, ambiguous, or high-stakes situations.
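A simple way to sketch this allocation is confidence-based routing: predictions above a threshold are accepted automatically, and the rest go to a review queue. The `Prediction` type, the `route` function, and the 0.9 threshold are assumptions made for this example, not a prescribed setup.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for `label`, in [0, 1]


def route(predictions, threshold=0.9):
    """Split predictions into auto-accepted items and items queued for human review."""
    auto, review = [], []
    for p in predictions:
        (auto if p.confidence >= threshold else review).append(p)
    return auto, review


preds = [
    Prediction("a", "spam", 0.98),
    Prediction("b", "ham", 0.55),
    Prediction("c", "spam", 0.91),
]
auto, review = route(preds)
print([p.item_id for p in auto])    # ['a', 'c']
print([p.item_id for p in review])  # ['b']
```

In practice the threshold would be tuned against the cost of a wrong automatic decision, and high-stakes categories might be sent to review regardless of confidence.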

HITL systems require skilled annotators who can provide high-quality input. However, training these individuals can be expensive, especially in specialized fields such as medical diagnosis or legal document review. Organizations must weigh the trade-offs between hiring experienced professionals, who can be costly but provide better knowledge, and training less skilled workers, who require more supervision but reduce labor costs.

Impact on Machine Learning Model Performance

One of HITL's most important contributions to model performance is its role in reducing bias and improving generalization. Automated systems often inherit biases from training data, leading to skewed predictions that exacerbate social inequalities. Annotators can help identify and correct these biases, ensuring that AI models produce fairer and more accurate results. Furthermore, by continuously incorporating human feedback, HITL allows models to adapt to changing conditions, making them more reliable in dynamic environments such as fraud detection, content moderation, and medical diagnosis.

However, HITL can also introduce inconsistencies if not appropriately managed. Annotators may interpret data differently, leading to inconsistencies in labels and training inputs. If left unaddressed, these inconsistencies can negatively impact model performance by introducing noise into the training process.
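One way to limit this kind of label noise is to collect several labels per item and keep only those with sufficient agreement. The sketch below uses a majority vote and returns `None` when agreement is too low, signaling that the item should be escalated to an expert. The function name and the 0.6 agreement threshold are illustrative assumptions.

```python
from collections import Counter


def aggregate(votes, min_agreement=0.6):
    """Return the majority label, or None if agreement is below `min_agreement`."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) >= min_agreement else None


print(aggregate(["toxic", "toxic", "ok"]))    # toxic
print(aggregate(["toxic", "ok"]))             # None
print(aggregate(["toxic", "ok", "unclear"]))  # None
```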

Ethical Considerations in Human-in-the-Loop

One of the main ethical concerns is data bias. While HITL is often used to reduce algorithmic bias, annotators may introduce subjective judgments that add new biases to the learning process or amplify existing ones.

Another important ethical issue is the transparency and accountability of HITL processes. In many AI applications, users and stakeholders may be unaware of the extent to which human decisions influence model outcomes. To maintain trust and prevent abuse, it is necessary to document clearly when and how human intervention occurs and to be able to explain how AI decisions are made.

Data privacy and labor ethics are also key issues in HITL systems. Annotators often work with sensitive data, increasing the risk of breaches or misuse if proper security measures are not implemented. Ethical HITL practices should include robust data security protocols, fair compensation, and protection of workers' rights.

Measuring Success in Refinement Processes

One of the main indicators of success is the model's performance, which can be measured by metrics such as accuracy, precision, recall, and F1 score. Beyond performance metrics, the amount of human involvement itself indicates how effective HITL refinement is. If the system is properly balanced, the need for human intervention should decrease over time as the model learns from expert corrections.
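For reference, precision, recall, and F1 for a binary task can be computed directly from true positives, false positives, and false negatives. The sketch below uses made-up labels and assumes `1` marks the positive class.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(round(precision, 3), round(recall, 3), round(f1, 3))  # 0.667 0.667 0.667
```

Running the same calculation on a held-out set before and after a round of human corrections gives a direct view of whether the corrections helped.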

Another key indicator of success is fairness and the reduction of bias. HITL processes should help AI systems produce fairer results by minimizing biases in training data and decision-making. Regular audits and fairness assessments can determine whether HITL interventions successfully mitigate biases rather than create new ones.
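One simple check that a fairness assessment might include is the demographic parity difference: the gap between groups in the rate of positive predictions. The sketch below assumes binary predictions and a hypothetical group attribute for each item. It is only one of several possible fairness measures.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    if not predictions or len(predictions) != len(groups):
        raise ValueError("inputs must be non-empty and of equal length")
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


preds = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(demographic_parity_difference(preds, groups))  # 0.25
```

Comparing this value before and after a round of human review shows whether the intervention narrowed or widened the gap.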

Key Performance Indicators

Metrics help to assess accuracy, efficiency, fairness, and overall impact, guiding continuous improvement. Below are the main KPIs used to measure success in HITL systems:

- Model accuracy and error reduction: measures improvements in precision, recall, F1 score, and overall accuracy before and after human intervention. Reduced false positives and false negatives indicate successful improvement.

- Human intervention rate: measures how often human input is required for AI decision-making. A decrease over time suggests that the model is learning effectively and relying less on manual corrections.

- Time to annotation or review: tracks the average time it takes annotators or reviewers to process data points. Faster annotation times without compromising quality indicate a more efficient HITL workflow.

- Bias and fairness metrics: assesses whether human intervention reduces bias in AI results. This can be measured using demographic parity, equalized odds, or fairness audits to ensure that the model does not disproportionately favor or disadvantage certain groups.

- Cost per human interaction: calculates the financial impact of human involvement, balancing labor costs against model performance improvements. Lower costs with consistent model accuracy indicate a well-optimized HITL process.

- User trust and satisfaction: collects feedback from end users or stakeholders on the reliability and transparency of AI solutions. Higher satisfaction scores signal that HITL interventions are leading to more reliable results.
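Two of the KPIs above, human intervention rate and cost per human interaction, reduce to simple ratios that can be tracked per reporting period. The sketch below uses hypothetical monthly figures, and the function names are illustrative.

```python
def intervention_rate(total_decisions, human_reviewed):
    """Share of decisions that required human input."""
    return human_reviewed / total_decisions if total_decisions else 0.0


def cost_per_interaction(total_labor_cost, human_reviewed):
    """Average labor cost of one human review."""
    return total_labor_cost / human_reviewed if human_reviewed else 0.0


# Hypothetical monthly figures: (total decisions, human-reviewed decisions, labor cost)
months = [(10_000, 2_000, 3_000.0), (10_000, 1_500, 2_400.0), (10_000, 1_000, 1_700.0)]

rates = [intervention_rate(total, reviewed) for total, reviewed, _ in months]
costs = [cost_per_interaction(cost, reviewed) for _, reviewed, cost in months]
declining = all(later < earlier for earlier, later in zip(rates, rates[1:]))

print(rates)      # [0.2, 0.15, 0.1]
print(costs)      # [1.5, 1.6, 1.7]
print(declining)  # True
```

In this made-up data the intervention rate falls while the cost per review rises slightly, which is why the KPIs are best read together rather than in isolation.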

Feedback Loops for Optimization

Feedback loops allow artificial intelligence models to continuously improve by incorporating human feedback at key process stages. Automation and human insight contribute to system improvement through this iterative approach, leading to better decision-making and increased efficiency over time. Below are the key steps in creating effective feedback loops for optimization:

- Gathering human feedback: The first step involves gathering input from human experts who review and correct AI decisions. This can include annotating data, correcting errors, or providing information on ambiguous cases.

- Data integration and model adjustment: Once the human feedback is collected, it is integrated into the model's training dataset, allowing the AI to learn from these corrections. This process helps to tune the model parameters and improves its ability to make accurate predictions, especially in complex or changing scenarios.

- Model evaluation and performance metrics: After human input has been incorporated, model performance is evaluated using metrics such as accuracy, precision, recall, and F1 score. This helps to determine whether the feedback loop is improving the system's output and whether further human intervention is required.

- Automated learning with human supervision: As the model improves through feedback, more routine tasks can be performed automatically, allowing human involvement to be focused on more complex cases. The goal is to create an optimal balance where automation handles the bulk of the tasks and human expertise intervenes when needed, resulting in greater efficiency.

- Continuous iteration: The feedback loop is a dynamic, ongoing process. As the AI model continues to learn from new data and human corrections, it adapts to changing conditions, improving over time. Continuous evaluation and fine-tuning ensure the model aligns with real-world requirements and human values.
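The steps above can be sketched as a small loop. The example below is a toy under stated assumptions: the "model" is just a lookup table that remembers corrected items, the human reviewer is simulated by a function, and all names are hypothetical. A real system would retrain an actual model and evaluate it on held-out data.

```python
def feedback_loop(model_predict, human_label, retrain, batches, threshold=0.8):
    """For each batch: predict, send low-confidence items to a human, retrain on corrections.

    Returns the share of items in each batch that needed human review.
    """
    intervention_rates = []
    for batch in batches:
        corrections = []
        for item in batch:
            _label, confidence = model_predict(item)
            if confidence < threshold:
                corrections.append((item, human_label(item)))
        retrain(corrections)
        intervention_rates.append(len(corrections) / len(batch) if batch else 0.0)
    return intervention_rates


# Toy stand-ins: the model memorizes corrected items, the reviewer knows the true label.
memory = {}


def toy_predict(item):
    return (memory[item], 1.0) if item in memory else ("unknown", 0.0)


def toy_human(item):
    return "even" if item % 2 == 0 else "odd"


def toy_retrain(corrections):
    memory.update(corrections)


rates = feedback_loop(toy_predict, toy_human, toy_retrain, [[1, 2, 3, 4], [1, 2, 5, 6], [1, 2, 5, 6]])
print(rates)  # [1.0, 0.5, 0.0]
```

The falling intervention rate mirrors the intended effect of the loop: as corrections accumulate, fewer items need human review.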

Summary

Human-in-the-loop (HITL) systems combine the strengths of automation and human expertise to improve AI model performance, accuracy, and ethical decision-making. By integrating human feedback into the learning process, HITL systems can handle complex scenarios and edge cases that purely automated systems may not be able to handle. This collaborative approach ensures continuous improvement of AI systems through human oversight, especially in applications where accuracy, integrity, and accountability are critical.

FAQ

What is human-in-the-loop (HITL) in machine learning and AI?

HITL combines automated processes with human expertise in machine learning and AI. It involves integrating human judgment into AI model development and refinement.

Why is HITL important in AI development?

HITL is vital for improving model accuracy and reducing errors. It adapts AI systems to complex real-world scenarios.

What are the key components of a HITL approach?

HITL includes automated systems for data processing and initial analysis. It also involves expert annotators and integrated workflows.

How does HITL impact machine learning model performance?

HITL significantly improves machine learning model performance. Human input corrects errors, reduces biases, and provides context. This hybrid approach leads to more robust and adaptable AI models.

What are some challenges in implementing HITL systems?

Implementing HITL systems involves challenges such as balancing cost and efficiency, ensuring labeling consistency, and managing scalability.

What are the best practices for effective HITL processes?

Effective HITL processes require comprehensive training and ongoing support for annotators. Implementing quality assurance measures and clear guidelines for data annotation is crucial.

What ethical considerations are essential in HITL systems?

Ethical considerations include mitigating bias and ensuring fairness in AI outcomes. Transparency and accountability in decision-making processes are also critical.