Improving Your AI Model's Accuracy: Expert Tips

Enhancing accuracy is a top priority for data scientists working with AI models. Higher accuracy means more reliable predictions and lets a model deliver on its potential. In this article, we will explore eight proven ways to improve the accuracy of your AI models through effective data optimization and model refinement techniques.

Key Takeaways:

- Adding more data to your training set can improve model performance and reduce reliance on assumptions.

- Treating missing and outlier values is essential for reducing bias and enhancing model accuracy.

- Feature engineering enables the creation of new variables that better explain the variance in the data.

- Feature selection helps to minimize overfitting and improve model interpretability.

- Exploring multiple algorithms allows for the identification of the best fit for your data and problem.

What is Model Accuracy in Machine Learning?

Model accuracy is a fundamental performance metric in machine learning. It measures how well a machine learning model is performing by quantifying the proportion of correct classifications made by the model. Accuracy is represented as a value between 0 and 1 (often reported as a percentage), where 0 signifies that the model always predicts the wrong label, and 1 indicates that the model always predicts the correct label.

Calculating model accuracy involves dividing the number of correct predictions by the total number of predictions across all classes. This metric provides valuable insights into the effectiveness of the model's predictions and its ability to accurately classify data points.
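The calculation described above can be sketched in a few lines of plain Python. The function name `accuracy` and the sample labels are illustrative assumptions, not part of any particular library.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels (0.0 to 1.0)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on an empty list")
    # Count the positions where the predicted label equals the true label.
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical labels for illustration only.
y_true = ["cat", "dog", "dog", "cat", "bird"]
y_pred = ["cat", "dog", "cat", "cat", "bird"]
print(accuracy(y_true, y_pred))  # 4 correct out of 5 -> 0.8
```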

Accuracy plays a crucial role in various machine learning tasks, including binary classification. It helps evaluate the model's performance and determine its reliability and suitability for real-world applications. By maximizing the accuracy of a machine learning model, data scientists can enhance its predictive capabilities and ensure more accurate and reliable outcomes.

"Model accuracy is a key performance metric that allows data scientists to quantify the correctness of predictions made by a machine learning model."

The concept of model accuracy is closely linked to the confusion matrix, which provides a comprehensive summary of the model's predictions. The confusion matrix enables data scientists to analyze the distribution of predicted and actual labels and identify any patterns or discrepancies.
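As a rough illustration of that link, the sketch below counts (actual, predicted) pairs, which is the content of a confusion matrix, and then recovers accuracy from the diagonal entries. The helper names and the sample labels are assumptions made for this example.

```python
from collections import Counter


def confusion_counts(y_true, y_pred):
    """Count how often each (actual, predicted) label pair occurs."""
    return Counter(zip(y_true, y_pred))


def accuracy_from_confusion(counts):
    """Correct predictions are the pairs where actual == predicted."""
    total = sum(counts.values())
    diagonal = sum(n for (actual, predicted), n in counts.items()
                   if actual == predicted)
    return diagonal / total


# Hypothetical binary labels for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

counts = confusion_counts(y_true, y_pred)
print(counts[(1, 1)], counts[(0, 0)])   # correct positives and negatives: 3 3
print(counts[(1, 0)], counts[(0, 1)])   # missed positives and false alarms: 1 1
print(accuracy_from_confusion(counts))  # 6 correct out of 8 -> 0.75
```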

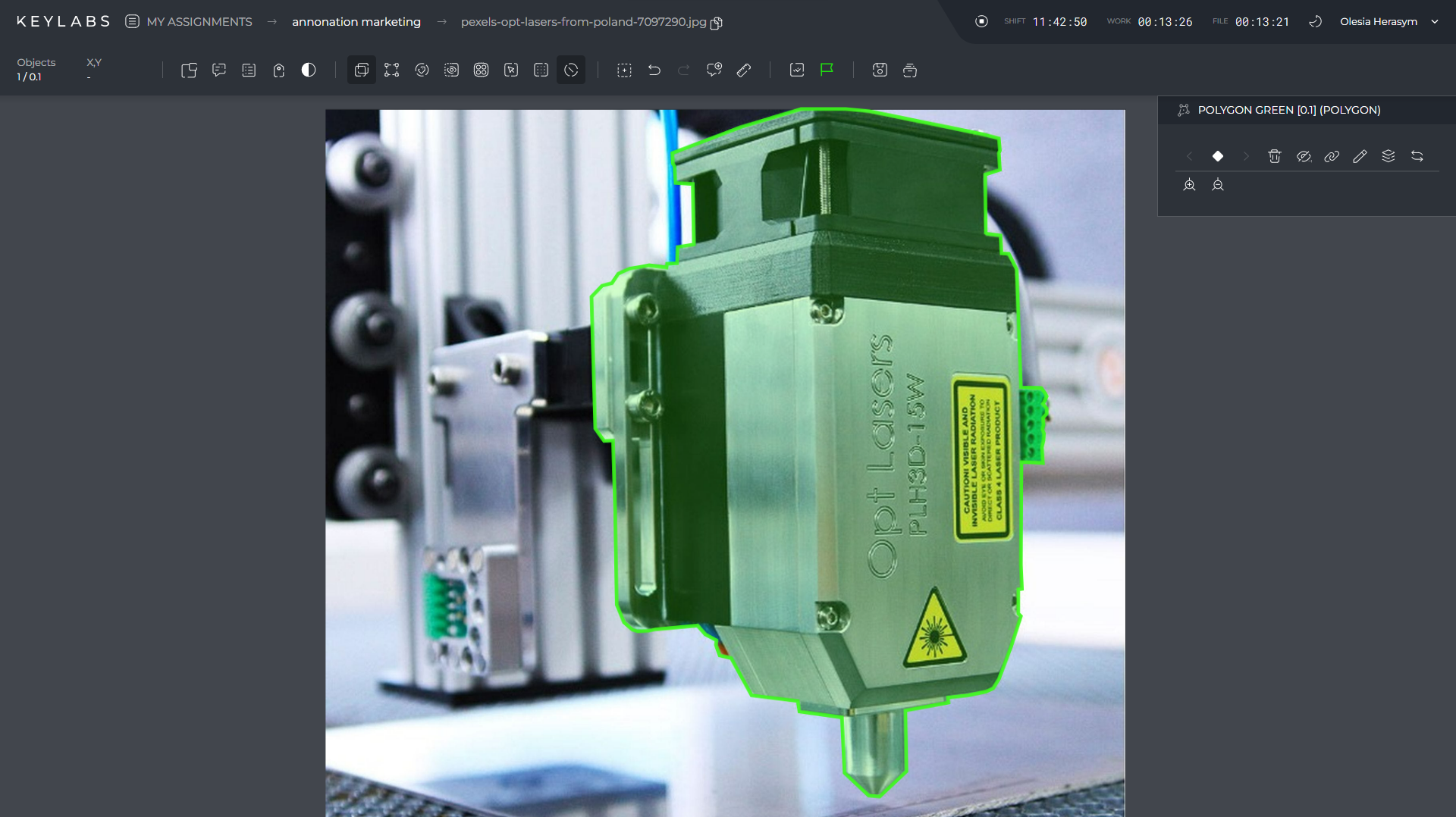

To illustrate the importance of model accuracy in machine learning, consider an image classification task. A high accuracy score indicates that the model can correctly identify and classify images, enabling applications such as facial recognition, object detection, and medical image analysis to provide accurate results.

Key Takeaways:

- Model accuracy measures how well a machine learning model performs by quantifying the percentage of correct classifications.

- Accuracy values range from 0 to 1, with 0 meaning every prediction is wrong and 1 meaning every prediction is correct.

- Calculating accuracy involves dividing the number of correct predictions by the total number of predictions across all classes.

- Model accuracy is closely related to the confusion matrix, which summarizes the model's predictions.

Why is Model Accuracy Important?

Model accuracy plays a crucial role in machine learning, serving as a fundamental metric to evaluate the performance and reliability of AI models. The significance of model accuracy stems from several key factors that contribute to its importance.

Firstly, model accuracy provides a straightforward and easy-to-understand measure of how well a model is making correct predictions. It represents the percentage of accurate predictions made by the model, offering a clear indication of its effectiveness in solving the given problem.

Moreover, accuracy is the complement of the error rate: error rate = 1 - accuracy. Measuring accuracy therefore gives the error rate directly. A high accuracy corresponds to a low error rate, meaning fewer incorrect predictions. This complementary relationship simplifies the evaluation process, allowing data scientists and stakeholders to quickly assess the model's performance.

Furthermore, accuracy is computationally cheap to calculate, making it a widely used metric in machine learning research. Its simplicity enables practitioners to compare and benchmark different models effectively, aiding in the selection of the most appropriate model for a specific task.

In real-life applications, accuracy serves as a vital benchmark for assessing the practical utility and value of machine learning models. By aligning with various business objectives and metrics, accuracy facilitates effective communication with stakeholders, conveying the benefits and potential impact of the deployed model. This enables stakeholders to make informed decisions based on the model's performance and applicability.

Overall, model accuracy's importance lies in its simplicity, its complementary relationship to the error rate, its computational efficiency, and its alignment with real-life applications. By striving for higher accuracy, data scientists can enhance the effectiveness and usefulness of their AI models in various domains.

Add More Data

Adding more data is a proven way to improve the accuracy of machine learning models. With a larger and more diverse dataset, the model can learn from a greater variety of examples, reducing reliance on assumptions and weak correlations. The additional data provides valuable insights that may not have been captured in smaller datasets, leading to more accurate predictions.

While adding more data is not always feasible, for example in data science competitions with fixed datasets, it is highly recommended to consider requesting additional data in real-world projects. More data means more opportunities for the model to identify patterns, generalize better, and make more accurate predictions.

By leveraging more data, machine learning practitioners can optimize their models for improved performance and obtain more reliable results. The increased volume and diversity of data enable the model to capture a wider range of scenarios and produce more accurate predictions in real-world applications.

Harness the Power of Data

"The more data you can gather, the more complete and accurate your model will become. It's like having a larger sample size to draw insights from, resulting in more robust predictions."

Treat Missing and Outlier Values

Missing and outlier values in training data can have a significant impact on the accuracy of trained machine learning models, potentially leading to biased predictions. Effective treatment of missing and outlier values is essential for improving the reliability and accuracy of these models.

When dealing with missing values, one common approach is to impute them using statistical measures: the mean or median for continuous variables, and the mode for categorical variables. Alternatively, missing values in a categorical variable can be treated as a separate class. By imputing missing values appropriately, the model can better capture the patterns and relationships in the data.
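A minimal sketch of these imputation strategies, using only the Python standard library. Missing entries are assumed to be encoded as `None`; the function names and the sample columns are hypothetical.

```python
from statistics import median, mode


def impute(values, strategy):
    """Replace None entries with a statistic of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    if strategy == "mean":
        fill = sum(observed) / len(observed)
    elif strategy == "median":
        fill = median(observed)
    elif strategy == "mode":
        fill = mode(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


def missing_as_category(values, label="missing"):
    """Treat missing categorical entries as a class of their own."""
    return [label if v is None else v for v in values]


# Hypothetical columns for illustration only.
ages = [25, None, 31, 40, None, 28]
cities = ["Paris", None, "Lima", "Paris"]

print(impute(ages, "mean"))         # None -> 31.0
print(impute(ages, "median"))       # None -> 29.5
print(impute(cities, "mode"))       # None -> 'Paris'
print(missing_as_category(cities))  # None -> 'missing'
```

Which strategy fits best depends on the column: the median is less sensitive to extreme values than the mean, and the separate class keeps the information that a value was missing.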

Outlier values, on the other hand, can be handled in different ways. One approach is to delete the observations containing outliers, as they may significantly deviate from the expected patterns. Alternatively, transformations can be applied to normalize the data and mitigate the impact of outliers. In some cases, outliers can be treated as a separate category if they contain valuable information.
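The sketch below illustrates two of these options, deleting outliers and flagging them as a separate category. It assumes the common 1.5 x IQR rule for deciding what counts as an outlier, which is one convention among several and is not prescribed by this article; the function names and data are hypothetical.

```python
from statistics import quantiles


def iqr_bounds(values, k=1.5):
    """Lower and upper fences based on the interquartile range (IQR)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def drop_outliers(values, k=1.5):
    """Keep only the observations that fall inside the fences."""
    low, high = iqr_bounds(values, k)
    return [v for v in values if low <= v <= high]


def flag_outliers(values, k=1.5):
    """Boolean indicator per observation, usable as an extra feature."""
    low, high = iqr_bounds(values, k)
    return [not (low <= v <= high) for v in values]


# Hypothetical measurements; 95 is far from the rest.
values = [10, 12, 11, 13, 12, 11, 95]
print(drop_outliers(values))  # [10, 12, 11, 13, 12, 11]
print(flag_outliers(values))  # only the last entry is True
```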

By effectively treating missing and outlier values, data scientists can optimize the quality of their training data, reduce bias, and ultimately improve the accuracy of machine learning models. This process plays a crucial role in ensuring reliable predictions and enhancing the overall performance of these models.

Feature Engineering

Feature engineering is a crucial step in data optimization and machine learning. By extracting more information from existing data and creating new features, data scientists can significantly improve the accuracy of their models. These new features have a higher ability to explain the variance in the training data, leading to more precise predictions and enhanced model performance.

Feature engineering is not a random process. It is influenced by hypothesis generation and structured thinking, guiding the exploration of potential relationships and patterns in the data. By leveraging domain knowledge and understanding the problem at hand, data scientists can engineer features that capture the most relevant information for accurate predictions.

Feature transformation techniques further contribute to model accuracy improvement. Data normalization, for example, ensures that features are transformed into a common range, preventing certain features from dominating the model training process due to their magnitude. Handling skewness in data distribution is also essential for optimal model performance, reducing the impact of extreme values that can introduce bias. Data discretization, on the other hand, allows for the creation of categorical features from continuous variables, providing valuable insights into non-linear relationships.
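The three transformations mentioned above can be sketched with the standard library alone. Min-max scaling is used here as one form of normalization and a log transform as one way to reduce right skew; both are assumptions chosen for illustration, as are the function names, bin edges, and sample values.

```python
import bisect
import math


def min_max_scale(values):
    """Rescale linearly so the smallest value maps to 0.0 and the largest to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def log_transform(values):
    """Compress large values in non-negative, right-skewed data."""
    return [math.log1p(v) for v in values]


def discretize(values, edges, labels):
    """Map each value to a labeled bin; edges must be sorted ascending."""
    if len(labels) != len(edges) + 1:
        raise ValueError("need exactly one more label than edges")
    return [labels[bisect.bisect_right(edges, v)] for v in values]


# Hypothetical feature columns for illustration only.
print(min_max_scale([20, 30, 40]))  # [0.0, 0.5, 1.0]
print(log_transform([20_000, 35_000, 50_000, 1_200_000]))
print(discretize([25, 30, 45, 70], edges=[30, 60],
                 labels=["young", "middle", "senior"]))
# ['young', 'middle', 'middle', 'senior']
```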

Overall, feature engineering plays a pivotal role in the success of machine learning projects. It empowers data scientists to extract the most relevant information from the data, identify hidden patterns, and create new features that optimize model performance. By incorporating feature engineering techniques and leveraging domain knowledge, data scientists can unlock the full potential of their machine learning models.

Feature Selection

Feature selection plays a crucial role in improving the performance of machine learning models. By selecting the most relevant features from a dataset, data scientists can enhance model accuracy, reduce dimensionality, minimize overfitting, and improve model interpretability. The goal of feature selection is to focus on the most informative variables that have a significant impact on model performance.

There are several techniques available for feature selection, each with its own advantages and limitations. Some common methods include filter methods, wrapper methods, and embedded methods. Filter methods evaluate the relevance of each feature based on statistical measures like correlation or mutual information. Wrapper methods use trial and error by evaluating the model's performance with different feature subsets. Embedded methods incorporate the feature selection process within the model training.

Choosing the right feature selection technique is essential to ensure the best possible outcome in model performance. By leveraging these methods, data scientists can select the most relevant features and optimize the model's accuracy. This process not only improves prediction accuracy but also enhances the efficiency and interpretability of the model.

For example, in a predictive analytics project, feature selection helps identify the key variables that drive the outcome of interest. Let's say we are building a model to predict customer churn. By analyzing various customer attributes such as age, income, and purchase history, we can determine which factors have the most significant impact on churn. By selecting these crucial features, the model can focus on the most influential factors, leading to improved predictions.
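A filter method for the churn scenario might look like the sketch below, which ranks features by the absolute Pearson correlation with the target. The feature names, the values, and the choice of Pearson correlation are assumptions made for this example; a real project would likely use a library implementation and a larger dataset.

```python
import math


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0
    return cov / (spread_x * spread_y)


def rank_features(features, target):
    """Feature names sorted by |correlation with target|, strongest first."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores, key=scores.get, reverse=True)


def select_top_k(features, target, k):
    """Names of the k features most correlated with the target."""
    return rank_features(features, target)[:k]


# Hypothetical customer data: 1 means the customer churned.
churned = [0, 0, 1, 1, 0, 1]
features = {
    "age": [34, 51, 29, 47, 38, 42],
    "support_calls": [0, 1, 4, 5, 1, 6],
    "constant": [1, 1, 1, 1, 1, 1],
}
print(rank_features(features, churned))
# ['support_calls', 'age', 'constant']
```

Correlation only captures linear, one-feature-at-a-time relationships, which is why wrapper and embedded methods are often tried as well.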

Multiple Algorithms

Trying multiple algorithms is a strategy that can significantly improve the accuracy of machine learning models. Each algorithm has its own strengths and weaknesses, making it important to compare and benchmark them to find the best fit for the data and problem at hand.

During the model selection process, data scientists evaluate the performance metrics, scalability, interpretability, and robustness of different algorithms. By exploring various algorithms, they can iterate and refine their models, resulting in more successful AI implementations tailored to specific needs.
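As a toy illustration of benchmarking, the sketch below trains two deliberately simple classifiers on the same training split and compares their accuracy on the same held-out split. The two algorithms (a majority-class baseline and a 1-nearest-neighbor rule), the function names, and the data are all assumptions chosen to keep the example self-contained.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def fit_majority(X, y):
    """Baseline: always predict the most frequent training label."""
    label = Counter(y).most_common(1)[0][0]
    return lambda x: label


def fit_nearest_neighbor(X, y):
    """Predict the label of the closest training point (squared Euclidean)."""
    def predict(x):
        dists = [sum((a - b) ** 2 for a, b in zip(x, xi)) for xi in X]
        return y[dists.index(min(dists))]
    return predict


def benchmark(candidates, X_train, y_train, X_test, y_test):
    """Fit every candidate on the same split and score it the same way."""
    results = {}
    for name, fit in candidates.items():
        predict = fit(X_train, y_train)
        results[name] = accuracy(y_test, [predict(x) for x in X_test])
    return results


# Hypothetical two-cluster data for illustration only.
X_train = [(1, 1), (1, 2), (2, 1), (2, 2), (8, 8), (8, 9), (9, 8)]
y_train = [0, 0, 0, 0, 1, 1, 1]
X_test = [(1.5, 1.5), (8.5, 8.5), (9, 9), (0, 1)]
y_test = [0, 1, 1, 0]

results = benchmark(
    {"majority": fit_majority, "nearest_neighbor": fit_nearest_neighbor},
    X_train, y_train, X_test, y_test,
)
print(results)                        # {'majority': 0.5, 'nearest_neighbor': 1.0}
print(max(results, key=results.get))  # nearest_neighbor
```

Keeping the split and the metric identical for every candidate is what makes the comparison fair.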

"Comparing and benchmarking multiple algorithms enables data scientists to identify the best fit for their data and problem, improving the accuracy of their machine learning models."

With multiple algorithms at their disposal, data scientists have the flexibility to adapt and optimize their models based on the unique characteristics of their data. This approach ensures that the chosen algorithm is capable of delivering accurate predictions and meeting the specific requirements of the problem domain.

Furthermore, exploring a variety of algorithms allows data scientists to gain a deeper understanding of how different approaches work and how they perform under different circumstances. This knowledge is invaluable in building robust and reliable machine learning models.

Algorithm Tuning

Algorithm tuning plays a crucial role in optimizing the performance of machine learning models and improving overall model accuracy. By adjusting the hyperparameters of the algorithm, data scientists can fine-tune the learning process and achieve better results. Hyperparameters control various aspects of the algorithm, such as the learning rate, regularization strength, and kernel parameters, among others.

To optimize these hyperparameters, different techniques can be utilized, such as grid search, random search, or Bayesian optimization. Grid search exhaustively searches through a predefined set of hyperparameter values, while random search randomly samples values from the search space. Bayesian optimization, on the other hand, uses Bayesian inference to update a probabilistic model of the hyperparameter space based on previous evaluations, effectively guiding the search towards promising regions.
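Grid search and random search can be sketched as follows. The scoring function here is a stand-in for "train the model with these hyperparameters and return its validation score"; it, the hyperparameter names, and the value ranges are hypothetical. Bayesian optimization is not shown because it needs a surrogate model that is beyond a short example.

```python
import itertools
import random


def grid_search(score_fn, grid):
    """Try every combination in the grid; return (best_params, best_score)."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def random_search(score_fn, ranges, n_iter, seed=0):
    """Sample each hyperparameter uniformly from its (low, high) range."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(learning_rate, reg_strength):
    """Hypothetical score surface that peaks at learning_rate=0.1, reg_strength=1.0."""
    return 1.0 - (learning_rate - 0.1) ** 2 - 0.01 * (reg_strength - 1.0) ** 2


grid = {"learning_rate": [0.01, 0.1, 1.0], "reg_strength": [0.1, 1.0, 10.0]}
best_params, best_score = grid_search(toy_validation_score, grid)
print(best_params, best_score)  # {'learning_rate': 0.1, 'reg_strength': 1.0} 1.0

ranges = {"learning_rate": (0.001, 1.0), "reg_strength": (0.1, 10.0)}
print(random_search(toy_validation_score, ranges, n_iter=20))
```

Grid search only ever evaluates the listed values, while random search can land between them, which is one reason it is often preferred when only a few hyperparameters matter.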

By finding the optimal values for these hyperparameters, the machine learning model can be fine-tuned to achieve better accuracy and align with the specific problem domain. However, the process of algorithm tuning does not end with simply finding the optimal values; continuous monitoring and evaluation of the model's performance during and after training are crucial.

Effective algorithm tuning involves evaluating the model's performance using appropriate metrics and making adjustments as needed. This iterative process allows for the refinement of the model, ensuring that it continues to perform optimally even as new data becomes available or the problem domain evolves.

"Algorithm tuning is a key step in achieving optimal model performance. By adjusting the hyperparameters and continuously evaluating the model's performance, we can fine-tune the algorithm to achieve better accuracy and solve real-world problems more effectively."

Ensemble Methods

In the realm of machine learning, ensemble methods have gained prominence as a powerful technique for enhancing overall accuracy and performance. These methods involve combining multiple models to leverage their individual strengths and achieve superior prediction outcomes.

One popular approach to implementing ensemble methods is aggregating the predictions made by each individual model. This can be done through techniques such as majority voting, where the most commonly predicted class is selected, or averaging, where the average of the predicted values is computed. By aggregating predictions, ensemble methods benefit from the diversity of models, resulting in improved accuracy and robustness.
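Both aggregation rules are short enough to sketch directly. The model outputs below are made up for illustration, and the function names are not taken from any library.

```python
from collections import Counter


def majority_vote(predictions):
    """Most common label per sample.

    predictions: one list of labels per model, all of equal length.
    With an odd number of models and two classes there are no ties;
    otherwise Counter keeps the label that was seen first.
    """
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]


def average_predictions(predictions):
    """Mean of the predicted values per sample (for regression outputs)."""
    return [sum(vals) / len(vals) for vals in zip(*predictions)]


# Hypothetical class predictions from three models on four samples.
model_a = [1, 0, 1, 1]
model_b = [1, 1, 0, 1]
model_c = [0, 0, 1, 1]
print(majority_vote([model_a, model_b, model_c]))  # [1, 0, 1, 1]

# Hypothetical regression outputs from three models on two samples.
print(average_predictions([[2.0, 4.0], [4.0, 4.0], [3.0, 7.0]]))  # [3.0, 5.0]
```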

Ensemble methods encompass various techniques, including bagging, boosting, and stacking. Bagging involves training multiple models independently on different bootstrap samples (random subsets drawn with replacement) of the same data, while boosting trains models sequentially, with each new model focusing on the errors of its predecessors. Stacking trains a final meta-model that takes the predictions of several base models as its input features.
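Of the three, bagging is the simplest to sketch: draw several resamples of the training data with replacement, fit one model per resample, and let the models vote. The base learner below (a threshold halfway between the two class means, for 1-D inputs where class 1 has the larger values) is a hypothetical stand-in chosen only to keep the example short.

```python
import random
from collections import Counter


def bootstrap_sample(X, y, rng):
    """Draw len(X) training examples with replacement."""
    idx = [rng.randrange(len(X)) for _ in range(len(X))]
    return [X[i] for i in idx], [y[i] for i in idx]


def fit_mean_threshold(X, y):
    """Hypothetical base learner for 1-D inputs with labels 0 and 1."""
    zeros = [x for x, label in zip(X, y) if label == 0]
    ones = [x for x, label in zip(X, y) if label == 1]
    if not zeros or not ones:  # the resample contained a single class
        only = y[0]
        return lambda x: only
    threshold = (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2
    return lambda x: int(x > threshold)


def bagging_fit(fit, X, y, n_models=5, seed=0):
    """Fit one model per bootstrap sample."""
    rng = random.Random(seed)
    return [fit(*bootstrap_sample(X, y, rng)) for _ in range(n_models)]


def bagging_predict(models, x):
    """Aggregate the individual predictions by majority vote."""
    return Counter(m(x) for m in models).most_common(1)[0][0]


# Hypothetical 1-D data for illustration only.
X = [1, 2, 3, 4, 10, 11, 12, 13]
y = [0, 0, 0, 0, 1, 1, 1, 1]
models = bagging_fit(fit_mean_threshold, X, y, n_models=5)
print(bagging_predict(models, 2.5), bagging_predict(models, 11.5))
```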

Employing ensemble methods presents a compelling strategy for boosting AI model accuracy and enhancing overall performance. By harnessing the collective power of multiple models and exploiting their complementary strengths, data scientists can achieve better results, improve predictions, and unlock the full potential of machine learning applications.

Realizing the Power of Ensemble Methods

"Ensemble methods offer a unique opportunity to capitalize on the strengths of individual models and optimize overall performance. By combining diverse models, we can obtain more accurate predictions, reduce bias, and enjoy greater robustness in AI applications."

Ensemble methods have gained popularity across various domains due to their effectiveness in improving accuracy and performance. They provide an avenue for data scientists to go beyond the limitations of individual models and achieve remarkable results. With ensemble methods, model combination becomes a strategic step towards optimal performance.

Cross Validation

Cross validation is a crucial technique used in machine learning for evaluating and estimating the performance of models. By splitting the data into multiple subsets or folds, cross validation allows for thorough validation and assessment of how well the model generalizes to unseen data. It gives a more reliable estimate of the AI model's accuracy than a single train/test split.

During k-fold cross validation, the data is split into k folds. The model is trained on k-1 of the folds, which together form the training set, and then validated on the one remaining fold. This process is repeated k times, so that each fold serves as the validation set exactly once. By averaging the performance measures obtained from each iteration, a more robust and accurate estimate of the model's performance can be obtained.
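A minimal sketch of k-fold cross validation, in which each fold is held out for validation once while the remaining folds are used for training. The splitter does not shuffle, which real projects usually do first; the majority-class learner, the function names, and the labels are hypothetical.

```python
from collections import Counter


def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


def cross_val_score(fit, score, X, y, k):
    """Return (mean score, per-fold scores) over k train/validate rounds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(X), k):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        preds = [model(X[i]) for i in val_idx]
        scores.append(score([y[i] for i in val_idx], preds))
    return sum(scores) / len(scores), scores


def fit_majority(X, y):
    """Hypothetical learner: always predict the most frequent training label."""
    label = Counter(y).most_common(1)[0][0]
    return lambda x: label


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical data for illustration only.
X = list(range(9))
y = [1, 1, 1, 0, 1, 1, 1, 0, 1]
mean_score, fold_scores = cross_val_score(fit_majority, accuracy, X, y, k=3)
print(fold_scores, mean_score)
```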

One of the key benefits of cross validation is that it helps in detecting potential biases in the model and allows for better understanding of its robustness. It enables data scientists to assess how well the model is expected to perform on real-world, unseen data. By evaluating the model's performance across different subsets of the data, cross validation provides valuable insights into its overall effectiveness and potential for overfitting or underfitting.

By implementing cross validation in the machine learning workflow, practitioners can make more informed decisions regarding model selection, hyperparameter tuning, and overall model evaluation. It provides a more comprehensive understanding of the model's strengths and weaknesses, allowing for better optimization and improvement of its performance.

Conclusion

Improving the accuracy of AI models is a crucial step in maximizing their potential in the field of machine learning. By following expert tips and employing proven methods, data scientists can significantly enhance the accuracy of their AI models and unlock their full capabilities.

One of the key strategies is to add more data to the training set. More data allows the model to learn from a larger and more diverse set of examples, resulting in improved accuracy. Treating missing and outlier values in the data is also important, as it helps to ensure the reliability and accuracy of the trained models.

Furthermore, feature engineering and feature selection techniques play a vital role in enhancing model performance. By creating new features and selecting the most relevant ones, data scientists can improve the model's ability to explain the variance in the training data, leading to better accuracy.

Trying multiple algorithms and tuning their hyperparameters are valuable approaches to finding the best-fit algorithm for the data. Additionally, ensemble methods, which combine the predictions of multiple models, can further improve prediction accuracy. Finally, implementing cross-validation is essential for assessing the model's generalization capabilities and estimating its performance on unseen data.

To optimize model performance, reduce bias, increase interpretability, and ensure reliable predictions, data scientists should focus on accuracy improvement. By implementing these expert tips and data optimization techniques, the full potential of AI models in predictive analytics can be realized.

FAQ

What is model accuracy in machine learning?

Model accuracy is a measure of how well a machine learning model is performing. It quantifies the percentage of correct classifications made by the model.

Why is model accuracy important?

Model accuracy is important because it represents the percentage of correct predictions made by a model. It is a straightforward and easy-to-understand metric that is aligned with various business objectives and metrics in real-life applications.

How can adding more data improve the accuracy of machine learning models?

Adding more data allows the model to learn from a larger and more diverse set of examples, reducing the reliance on assumptions and weak correlations.

How can treating missing and outlier values improve model accuracy?

Treating missing values and outlier values in training data is essential for improving the reliability and accuracy of machine learning models. Proper treatment can involve imputing missing values with mean, median, or mode, and handling outlier values through deletion, transformations, or separate treatment.

What is feature engineering and how does it improve model accuracy?

Feature engineering involves extracting more information from existing data by creating new features. These new features have a higher ability to explain the variance in the training data, resulting in improved model accuracy.

How does feature selection enhance model performance?

Feature selection is the process of selecting the most relevant features from a dataset to improve model performance. It helps to reduce dimensionality, minimize overfitting, and enhance model interpretability.

How does trying multiple algorithms improve model accuracy?

Trying multiple algorithms allows for comparison and benchmarking to find the best fit for the data and problem. Different algorithms have different strengths and weaknesses, and exploring various options allows for iterative learning and refinement.

What is algorithm tuning and how does it optimize model performance?

Algorithm tuning involves adjusting the hyperparameters of a machine learning algorithm to optimize its performance and improve model accuracy. By finding the optimal hyperparameter values, the model can achieve better accuracy and align with the specific problem domain.

How do ensemble methods improve model accuracy?

Ensemble methods involve combining multiple models to improve overall accuracy. These methods harness the diversity of different models and exploit their complementary strengths, resulting in improved prediction accuracy and robustness.

What is cross-validation and why is it important for model accuracy?

Cross-validation is a technique used to assess the generalization capabilities of a machine learning model and estimate its performance. It helps to evaluate how well the model is expected to perform on unseen data and to detect overfitting or bias before the model is deployed.