Integration Testing for Labeled Data: Ensuring Consistency Across the Pipeline

Integration testing for labeled data looks at how different parts of a system process data together rather than separately. In machine learning pipelines, data often flows through multiple stages, some automated, some manual, and integration testing helps ensure this flow occurs without unexpected changes. It's not about testing how individual components work but how they interact when connected. This type of testing focuses on the points where data is exchanged or transformed. Problems can still occur at the boundaries even when each part functions correctly.

Key Takeaways

- Identifies hidden flaws in multi-component data workflows.

- Validates API and module compatibility before deployment.

- Reduces project risks by catching errors early.

- Enhances output quality through systematic cross-checks.

- Supports scalable AI solutions with robust pipelines.

Understanding Integration Testing

Understanding integration testing means examining how different system parts work together once connected. Unlike unit testing, which checks small, individual pieces, integration testing focuses on the flow between those pieces. It's concerned with how data moves, how components respond to one another, and whether the system behaves as expected when all the parts interact.

In a labeled-data pipeline, each step might rely on specific formats, naming conventions, or assumptions about the data. If one part of the pipeline changes or behaves unexpectedly, it can affect everything that comes after it. Integration testing helps surface these issues by treating the pipeline as a connected whole.
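
As a minimal sketch of treating the pipeline as a connected whole, the Python example below chains three hypothetical stages and checks that every record's label survives the full trip. The stage names (`preprocess`, `to_annotation_format`, `load_for_training`) and the record layout are assumptions made for illustration, not a prescribed API.

```python
from typing import Dict, List, Tuple

Record = Dict[str, str]

def preprocess(records: List[Record]) -> List[Record]:
    # Hypothetical stage 1: normalize text, leave id and label untouched.
    return [{**r, "text": r["text"].strip().lower()} for r in records]

def to_annotation_format(records: List[Record]) -> List[dict]:
    # Hypothetical stage 2: reshape into a layout an annotation tool might export.
    return [{"item_id": r["id"], "data": {"text": r["text"]}, "annotation": r["label"]}
            for r in records]

def load_for_training(items: List[dict]) -> List[Tuple[str, str, str]]:
    # Hypothetical stage 3: produce (id, text, label) tuples for a trainer.
    return [(it["item_id"], it["data"]["text"], it["annotation"]) for it in items]

def run_pipeline(records: List[Record]) -> List[Tuple[str, str, str]]:
    # The stages wired together exactly as they would run in production.
    return load_for_training(to_annotation_format(preprocess(records)))

def test_labels_survive_pipeline() -> None:
    raw = [{"id": "1", "text": "  Cat photo ", "label": "cat"},
           {"id": "2", "text": "Dog photo", "label": "dog"}]
    out = run_pipeline(raw)
    # The integration check: every input id comes out with its original label.
    expected = {r["id"]: r["label"] for r in raw}
    assert {rid: label for rid, _, label in out} == expected

test_labels_survive_pipeline()
```

Each stage could pass its own unit tests; the value of this test is that it fails as soon as any stage renames or drops a field the next one depends on.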

Definition and Scope

Integration testing is a software testing approach that verifies how different system components work together as a unified whole. Rather than focusing on the internal correctness of each part, it examines the connections between them, checking whether data is passed, interpreted, and processed correctly across interfaces. In systems that rely on labeled data, integration testing ensures that labels remain consistent and meaningful across pipeline stages, including the transitions between preprocessing, annotation, storage, model training, and evaluation.

The scope of integration testing can vary depending on the complexity of the pipeline and the number of systems involved. It may cover direct interactions between two modules or span broader workflows involving multiple stages and data formats. Integration tests typically run after unit tests and before end-to-end tests, acting as a middle layer that focuses on the relationships between parts. For pipelines that process labeled data, the scope usually includes validating data formats, label structures, and schema alignment across tools and services.

Role in the Software Development Lifecycle

Integration testing bridges early-stage component testing and later-stage system testing in the software development lifecycle. After individual units or modules have been verified in isolation, integration testing checks how those units work together in a realistic environment. It's where assumptions about shared inputs, outputs, and interfaces are tested against actual behavior. This stage helps catch mismatches or miscommunications before they affect the entire system.

Integration testing usually occurs after unit tests pass and before complete system or user acceptance testing begins. It reduces the risk of errors propagating through the system by verifying data exchange and coordination early. In projects involving labeled data, this is often where issues like schema drift, version mismatches, or broken label references are first detected. Testing at this stage focuses on workflows, interactions, and the fidelity of the contracts between parts of the codebase.
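
Broken label references are one of these issues that a small check can catch. The sketch below assumes annotations store a numeric `label_id` that points into a versioned label taxonomy; `TAXONOMY_V1`, `TAXONOMY_V2`, and `broken_references` are hypothetical names. It flags annotations whose label id no longer exists after the taxonomy changes.

```python
from typing import Dict, List

# Hypothetical taxonomy versions: label id -> label name.
TAXONOMY_V1: Dict[int, str] = {1: "cat", 2: "dog", 3: "bird"}
# In this assumed v2, id 3 ("bird") was split into two finer-grained labels.
TAXONOMY_V2: Dict[int, str] = {1: "cat", 2: "dog", 4: "small_bird", 5: "large_bird"}

def broken_references(annotations: List[dict], taxonomy: Dict[int, str]) -> List[dict]:
    # Return every annotation whose label id is missing from the given taxonomy.
    return [a for a in annotations if a["label_id"] not in taxonomy]

annotations = [{"item": "img_1", "label_id": 1}, {"item": "img_2", "label_id": 3}]
assert broken_references(annotations, TAXONOMY_V1) == []
print(broken_references(annotations, TAXONOMY_V2))  # [{'item': 'img_2', 'label_id': 3}]
```

Running the same check against each taxonomy version a pipeline might load is one way to surface the problem before training starts.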

Why Integration Testing is Crucial

Each part of a system may follow its own logic and expectations, but when these parts interact, hidden assumptions often surface. A component might interpret its inputs differently than the upstream step intended, or produce outputs in a format that the next step doesn't fully understand. These small disconnects can lead to larger issues, especially in systems where accuracy and consistency are critical, such as those involving labeled data.

Changes in one part of the pipeline, like an updated schema or new formatting rule, can easily break something downstream. Integration testing helps catch these effects early by regularly checking how components behave together. It supports smoother collaboration between teams working on different system parts and gives more confidence that updates won't introduce regressions.

Early Detection of Interface Errors

Integration testing helps catch interface errors early, before they cause larger issues downstream. When two components interact, they rely on shared expectations like the format of inputs, the order of fields, or the type of response returned. If one side changes and the other isn't updated to match, the system might still run but produce incorrect or unstable results. These errors are often subtle and easy to miss during unit testing, which focuses only on internal logic.
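
The sketch below illustrates this kind of subtle interface error. It assumes a hypothetical upstream module that renamed its `label` field to `class_name`, and a downstream module that reads fields with `dict.get` and a default value, so nothing crashes. A simple contract check on the handoff exposes the mismatch; all names here are illustrative.

```python
from typing import Dict, List

def producer_v2(items: List[str]) -> List[Dict[str, str]]:
    # Hypothetical upstream module that renamed "label" to "class_name" in v2.
    return [{"text": t, "class_name": "spam" if "win" in t else "ham"} for t in items]

def consumer(rows: List[Dict[str, str]]) -> List[str]:
    # Hypothetical downstream module still written against the old field name.
    # The .get() default hides the mismatch: nothing crashes, labels just degrade.
    return [row.get("label", "unknown") for row in rows]

REQUIRED_FIELDS = {"text", "label"}  # the contract the consumer assumes

def check_contract(rows: List[Dict[str, str]]) -> List[str]:
    # Collect every violation instead of stopping at the first one.
    problems = []
    for i, row in enumerate(rows):
        missing = REQUIRED_FIELDS - set(row)
        if missing:
            problems.append(f"row {i}: missing {sorted(missing)}")
    return problems

rows = producer_v2(["win a prize", "meeting at 3"])
print(consumer(rows))        # ['unknown', 'unknown'] (runs, but silently wrong)
print(check_contract(rows))  # reports the missing 'label' field for both rows
```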

Catching these issues early saves time and effort later in the development process. Once a system is fully built or deployed, tracing problems back to a broken interface can be difficult and expensive.

Enhancing Overall System Stability

Integration testing contributes directly to system stability by ensuring that all parts of a pipeline work together reliably. Even when individual components function correctly, their interactions can introduce unpredictable behavior. Integration testing checks these interactions, ensuring changes in one area don't lead to cascading failures in others. It is a buffer against fragility in complex systems, where minor issues can proliferate.

This leads to fewer interruptions, more predictable performance, and greater confidence during updates or scaling. As systems grow in size and complexity, the risk of integration-related failures also increases. Regular integration testing helps manage this risk by catching problems early and encouraging better coordination between teams and tools. It supports a more resilient development process, where stability isn't just a final check but an ongoing priority.

Integration Testing for Data

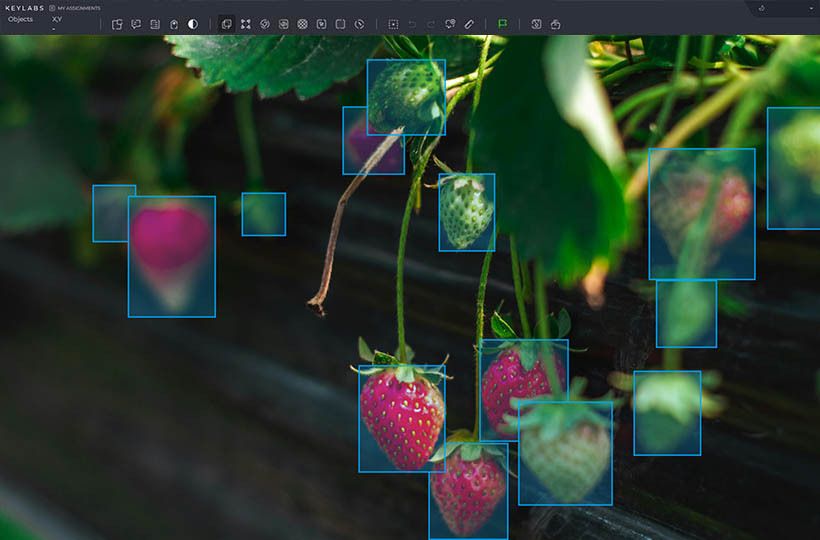

Data integration testing focuses on how data flows through different parts of a system and whether it remains correct, complete, and consistent along the way. Unlike tests that verify code behavior in isolation, this type of testing looks at how data is passed, transformed, and stored between components. It checks that formats match, required fields are preserved, and data isn't unintentionally changed or lost. Even if each step works independently, the data flow across them can introduce subtle, hard-to-detect problems.

This testing often includes comparing input and output data at different checkpoints, validating schema compatibility, and confirming that records remain aligned throughout the process. It's also helpful in detecting version mismatches or accidental overwrites when systems are updated. In setups involving labeled data, integration testing helps ensure the labels stay accurate and linked to the correct inputs, even as formats or sources change.
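
One way to compare data at checkpoints is to snapshot each record's label by a stable identifier and diff the snapshots. This is a minimal sketch that assumes every record carries a unique `id` field; `snapshot` and `diff_checkpoints` are hypothetical helpers, not part of any particular tool.

```python
from typing import Dict, List

def snapshot(records: List[dict]) -> Dict[str, str]:
    # Capture the id -> label mapping at one checkpoint in the pipeline.
    return {r["id"]: r["label"] for r in records}

def diff_checkpoints(before: Dict[str, str], after: Dict[str, str]) -> Dict[str, List[str]]:
    # Report records that disappeared, appeared, or changed label between checkpoints.
    return {
        "dropped": sorted(before.keys() - after.keys()),
        "added": sorted(after.keys() - before.keys()),
        "relabeled": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
    }

before = snapshot([{"id": "a", "label": "cat"}, {"id": "b", "label": "dog"},
                   {"id": "c", "label": "cat"}])
after = snapshot([{"id": "a", "label": "cat"}, {"id": "b", "label": "cat"}])
print(diff_checkpoints(before, after))
# {'dropped': ['c'], 'added': [], 'relabeled': ['b']}
```

An integration test would typically assert that all three lists are empty, or that they match an expected, documented change such as intentional deduplication.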

Aligning Data Pipelines for Consistency

Aligning data pipelines for consistency means ensuring that data remains accurate, structured, and traceable as it moves from one processing stage to the next. In complex systems, data often flows through multiple tools, services, and storage formats, each with its own rules and expectations. Minor differences like a renamed column, a changed data type, or a reordered label can disrupt this flow and lead to downstream inconsistencies. Integration testing helps catch these issues by checking how well each part of the pipeline communicates with the next, focusing on the transitions where data is most likely to be misinterpreted or lost.

This alignment is critical when the data includes labels used for training or evaluation. A mismatch in how labels are stored or interpreted can introduce silent errors that degrade model performance or skew results. Integration tests can be designed to verify that data schemas are respected, that mappings between inputs and labels are preserved, and that no step alters the meaning or structure of the data.
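
A schema check at a stage boundary can catch the renamed columns and changed data types mentioned above. The sketch assumes records are plain dictionaries and that the two stages agree on a hypothetical `EXPECTED_SCHEMA` mapping field names to Python types; production pipelines often use a dedicated schema or validation library instead.

```python
from typing import Any, Dict, List

# Hypothetical contract between two stages: field name -> expected Python type.
EXPECTED_SCHEMA: Dict[str, type] = {"id": str, "text": str, "label": str}

def schema_violations(records: List[Dict[str, Any]],
                      schema: Dict[str, type] = EXPECTED_SCHEMA) -> List[str]:
    problems = []
    for i, rec in enumerate(records):
        for field, expected_type in schema.items():
            if field not in rec:
                problems.append(f"record {i}: missing field '{field}'")
            elif not isinstance(rec[field], expected_type):
                problems.append(f"record {i}: '{field}' is "
                                f"{type(rec[field]).__name__}, expected {expected_type.__name__}")
        # Fields the schema doesn't know about often signal a rename upstream.
        for field in sorted(rec.keys() - schema.keys()):
            problems.append(f"record {i}: unexpected field '{field}'")
    return problems

records = [
    {"id": "1", "text": "hello", "label": "greeting"},
    {"id": "2", "text": "bye", "label_name": "farewell"},  # renamed column
    {"id": 3, "text": "ok", "label": "ack"},               # changed data type
]
for problem in schema_violations(records):
    print(problem)
```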

Measuring Data Integrity Across Modules

Measuring data integrity across modules involves checking that data stays complete, accurate, and consistent as it moves through different system parts. Each module in a pipeline might apply transformations, validations, or reformatting steps, and even minor changes can affect how the data is used later on. Integration testing helps monitor this by comparing data before and after it passes through key points in the pipeline. It looks for unexpected changes, missing values, or broken links between related data elements, such as labels and their corresponding inputs.

To do this effectively, teams often define integrity rules based on schema definitions, reference datasets, or expected relationships within the data. Tests might track row counts, field values, or label distributions to detect silent shifts that could otherwise go unnoticed. This kind of monitoring is beneficial in pipelines that span multiple services or formats, where assumptions can easily drift apart.
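
Row-count and label-distribution rules like these can be expressed in a few lines. This is a sketch under assumptions: labels are compared as flat lists of strings taken before and after a module, and the 5% tolerance (`max_shift`) is an arbitrary placeholder rather than a recommended value. The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List

def label_shares(labels: List[str]) -> Dict[str, float]:
    # Fraction of records carrying each label (empty input -> empty dict).
    total = len(labels)
    if total == 0:
        return {}
    return {label: n / total for label, n in Counter(labels).items()}

def integrity_issues(before: List[str], after: List[str],
                     max_shift: float = 0.05) -> List[str]:
    # max_shift is an assumed tolerance; tune it per dataset.
    issues = []
    if len(before) != len(after):
        issues.append(f"row count changed: {len(before)} -> {len(after)}")
    p, q = label_shares(before), label_shares(after)
    for label in sorted(p.keys() | q.keys()):
        shift = abs(p.get(label, 0.0) - q.get(label, 0.0))
        if shift > max_shift:
            issues.append(f"label '{label}' share moved by {shift:.3f}")
    return issues

before = ["cat"] * 50 + ["dog"] * 50
after = ["cat"] * 50 + ["dog"] * 30   # 20 "dog" rows silently lost between modules
for issue in integrity_issues(before, after):
    print(issue)
```

Checking both signals matters: a module that drops rows evenly across labels changes the count but not the distribution, while one that relabels rows changes the distribution but not the count.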

Comparing Integration Testing and Unit Testing

Integration and unit testing serve different purposes in software development, especially when working with data pipelines. Unit testing focuses on individual functions or components in isolation, checking that each behaves as expected under a range of controlled inputs. It's fast, precise, and helpful in catching logic errors within a single module. Integration testing, on the other hand, examines how these components interact when connected, focusing on the flow of data and the correctness of their communication.

In a data pipeline, for example, a unit test might validate how a function cleans or transforms a dataset, while an integration test checks that the cleaned data is still compatible with the next stage in the pipeline. Unit tests are often easier to automate and maintain, but they can miss problems that arise only when systems work together. Integration testing fills this gap by verifying the assumptions each part makes about the others.
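
The contrast can be sketched with two tests over the same code. The cleaning function and the `LABEL_TO_INDEX` mapping below are hypothetical; the point is only which assumption each test can see.

```python
from typing import Dict, List

def clean_labels(records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    # Cleaning stage: strip whitespace and lowercase label strings.
    return [{**r, "label": r["label"].strip().lower()} for r in records]

# Hypothetical mapping owned by the training stage.
LABEL_TO_INDEX: Dict[str, int] = {"cat": 0, "dog": 1}

def encode_for_training(records: List[Dict[str, str]]) -> List[int]:
    # Training stage: convert label strings to integer class ids.
    # Raises KeyError if it receives a label spelling it does not know.
    return [LABEL_TO_INDEX[r["label"]] for r in records]

def test_clean_labels_unit() -> None:
    # Unit test: exercises the cleaning logic alone.
    assert clean_labels([{"label": " Cat "}]) == [{"label": "cat"}]

def test_clean_then_encode_integration() -> None:
    # Integration test: cleaned output must be accepted by the next stage.
    raw = [{"label": " Cat "}, {"label": "DOG"}]
    assert encode_for_training(clean_labels(raw)) == [0, 1]

test_clean_labels_unit()
test_clean_then_encode_integration()
```

If someone later changed `clean_labels` to title-case labels and updated its unit test to match, the unit test would still pass, while the integration test would fail with a `KeyError`. That cross-stage assumption is invisible to the unit test alone.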

Key Differences in Objectives

- Focus of Testing. Unit testing targets individual functions or modules in isolation, while integration testing focuses on the interaction between multiple components.

- Scope. Unit tests cover small, self-contained pieces of logic; integration tests span across modules, services, or stages in a pipeline.

- Goal. Unit testing aims to verify internal correctness, whereas integration testing aims to ensure proper communication and data flow between parts.

- Error Detection. Unit testing catches internal logic errors or invalid assumptions within a single component; integration testing identifies mismatches, interface issues, and data inconsistencies across components.

- Dependencies. Unit tests typically mock or isolate dependencies; integration tests rely on real or closely simulated interactions between components to validate their behavior in context.

Approaches to Integration Testing

- Big Bang Integration. All components are combined at once and tested as a whole. This approach is simple to set up but makes it harder to isolate the source of errors when something goes wrong.

- Top-Down Integration. Testing starts from high-level modules and gradually adds lower-level components, often using stubs to simulate unfinished parts. It helps verify control flow and overall logic early in development.

- Bottom-Up Integration. Testing begins with the lowest-level modules and integrates upward through the system. Drivers are used to simulate higher-level calls, making this approach suitable for validating foundational components before complete assembly.

- Sandwich (Hybrid) Integration. Combines top-down and bottom-up testing to cover high-level logic and low-level processing simultaneously. It allows for more parallel testing but requires careful coordination.

- Incremental Integration. Modules are added and tested one at a time or in small groups. This method makes it easier to pinpoint errors and track how system behavior changes as new parts are introduced.
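
Incremental integration can be sketched as running a growing prefix of the pipeline and checking the data after each newly added stage. The helper below is hypothetical and rests on simple assumptions: each stage is a named function from a list of records to a list of records, and a single check function captures the invariant being protected (here, that every record keeps a non-empty label).

```python
from typing import Callable, List, Optional, Tuple

Records = List[dict]
Stage = Tuple[str, Callable[[Records], Records]]

def integrate_incrementally(stages: List[Stage], sample: Records,
                            check: Callable[[Records], bool]) -> Optional[str]:
    """Add stages one at a time and return the name of the first stage after
    which `check` fails, or None if every partial pipeline passes."""
    data = sample
    for name, stage in stages:
        data = stage(data)
        if not check(data):
            return name
    return None

def has_labels(records: Records) -> bool:
    # Invariant under test: no record ends up with an empty label.
    return all(r["label"] for r in records)

stages: List[Stage] = [
    ("dedupe", lambda rs: list({r["id"]: r for r in rs}.values())),
    ("strip", lambda rs: [{**r, "label": r["label"].strip()} for r in rs]),
    # Deliberately buggy mapping: labels missing from the table become "".
    ("rename", lambda rs: [{**r, "label": {"cat": "feline"}.get(r["label"], "")} for r in rs]),
]
sample = [{"id": 1, "label": " cat"}, {"id": 2, "label": "dog "}]
print(integrate_incrementally(stages, sample, has_labels))  # rename
```

Because the helper stops at the first failing stage, the error is localized to the newest integration step, which is the main advantage of incremental integration over the Big Bang approach.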

Integration Testing Techniques: Black Box, White Box, Gateway's Box

Integration testing techniques can be grouped by how much visibility the tester has into the system's internal workings. This section covers three approaches: black box, white box, and a more informal, practical concept referred to here as Gateway's box. Each offers different strengths depending on the goal of the test.

Black Box Testing Techniques

This technique treats each component as a closed system, focusing only on inputs and expected outputs without knowledge of how the component works internally. It is well suited to testing data flow, input validation, and output consistency between modules. Because it mirrors how users or downstream systems interact with the pipeline, it is especially useful when components are developed independently or by different teams.
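
A black-box integration test only needs a callable entry point and a table of inputs with expected outputs. In the sketch below, `toy_pipeline` is a hypothetical stand-in for a connected pipeline that maps a raw annotation string to a class id; the test never looks at how it does so.

```python
from typing import Callable, List, Tuple

def toy_pipeline(raw_label: str) -> int:
    # Stand-in for several connected stages; the test treats it as opaque.
    cleaned = raw_label.strip().lower()
    return {"cat": 0, "dog": 1}[cleaned]

def black_box_failures(pipeline: Callable[[str], int],
                       cases: List[Tuple[str, int]]) -> List[Tuple[str, int, object]]:
    # Compare actual outputs with expected ones; no internal state is inspected.
    failures = []
    for given, expected in cases:
        try:
            actual: object = pipeline(given)
        except Exception as exc:  # a crash also counts as a failed case
            actual = exc
        if actual != expected:
            failures.append((given, expected, actual))
    return failures

cases = [(" Cat", 0), ("DOG", 1), ("Dog\n", 1)]
print(black_box_failures(toy_pipeline, cases))  # []
```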

White Box and Gateway's Box Strategies

In white box testing, the tester has complete knowledge of the internal logic and structure of the components. This approach allows for more detailed testing of specific integration points, internal data handling, and edge cases. It's valuable when testing how tightly coupled systems behave together, particularly if subtle errors might arise from shared logic, data structures, or execution order.
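
White-box tests can compare internal structures that the modules never expose through their public behavior. The hypothetical `Encoder` and `Decoder` below build their label tables differently (sorted order versus first-seen order); a round trip on one label can pass by coincidence, but comparing the internal tables directly reveals the mismatch. The private attributes `_index` and `_names` are assumptions of this sketch.

```python
from typing import Dict, List, Tuple

class Encoder:
    def __init__(self, labels: List[str]) -> None:
        # Internal detail: ids are assigned in sorted label order.
        self._index: Dict[str, int] = {
            label: i for i, label in enumerate(sorted(set(labels)))}

    def encode(self, label: str) -> int:
        return self._index[label]

class Decoder:
    def __init__(self, labels: List[str]) -> None:
        # Internal detail: ids are assigned in first-seen order (a latent mismatch).
        self._names: List[str] = list(dict.fromkeys(labels))

    def decode(self, idx: int) -> str:
        return self._names[idx]

def table_mismatches(enc: Encoder, dec: Decoder) -> List[Tuple[str, int, str]]:
    # White-box check: read both internal tables and compare them entry by entry.
    return [(label, i, dec._names[i]) for label, i in enc._index.items()
            if dec._names[i] != label]

labels = ["dog", "cat", "bird"]
enc, dec = Encoder(labels), Decoder(labels)
assert dec.decode(enc.encode("cat")) == "cat"  # round trip passes by coincidence
print(table_mismatches(enc, dec))  # [('bird', 0, 'dog'), ('dog', 2, 'bird')]
```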

Gateway's box is not a formal category like black box or white box; the term refers here to focusing tests on the interfaces or boundaries where one module hands data to another, an idea close to what is more commonly called grey-box testing. It emphasizes validating protocols, formats, schemas, and version expectations across modules. This technique is especially important in data pipelines, where schema mismatches or encoding issues can break downstream processing without obvious signs. It's a practical middle ground: the test may not look deeply into internals but still requires awareness of how interfaces are designed.
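
A boundary-focused check validates the handoff itself: encoding, structure, and version expectations. The sketch below assumes modules exchange UTF-8 JSON messages carrying a `schema_version` and a `records` list; the field names, the `check_handoff` function, and the set of supported versions are hypothetical.

```python
import json
from typing import List

# Hypothetical schema versions the downstream module knows how to read.
SUPPORTED_SCHEMA_VERSIONS = {"1.0", "1.1"}

def check_handoff(payload: bytes) -> List[str]:
    """Validate a message at the boundary where one module hands data to another."""
    try:
        text = payload.decode("utf-8")
    except UnicodeDecodeError:
        return ["payload is not valid UTF-8"]
    try:
        message = json.loads(text)
    except json.JSONDecodeError:
        return ["payload is not valid JSON"]
    if not isinstance(message, dict):
        return ["payload must be a JSON object"]
    problems = []
    version = message.get("schema_version")
    if version not in SUPPORTED_SCHEMA_VERSIONS:
        problems.append(f"unsupported schema_version: {version!r}")
    if not isinstance(message.get("records"), list):
        problems.append("'records' must be a list")
    return problems

ok = json.dumps({"schema_version": "1.1", "records": []}).encode("utf-8")
print(check_handoff(ok))                                          # []
print(check_handoff(b'{"schema_version": "2.0", "records": []}'))  # ["unsupported schema_version: '2.0'"]
print(check_handoff("café".encode("latin-1")))                     # ['payload is not valid UTF-8']
```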

Challenges and Common Issues in Integration Testing

Integration testing often surfaces challenges that don't appear during unit testing, mainly because it involves coordinating multiple components, each with its own assumptions and dependencies. One common issue is interface mismatch, where connected modules expect different formats, data types, or parameter structures. These mismatches can lead to silent failures or data corruption that's hard to trace. Another frequent challenge is incomplete or unstable test environments, where mocked services or missing dependencies prevent realistic testing of integration points. Without a fully representative environment, it's easy to overlook problems that would only appear in production.
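
One way to reduce reliance on mocks is to stand up a lightweight but real dependency inside the test. The sketch below uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for a label store; the table layout and the `save_labels`/`load_labels` functions are hypothetical. An in-memory SQLite database will not reproduce every behavior of a production database, so this narrows the gap rather than closing it.

```python
import sqlite3
from typing import Dict, List, Optional, Tuple

def save_labels(conn: sqlite3.Connection, rows: List[Tuple[str, Optional[str]]]) -> None:
    # Hypothetical storage stage: writes (item_id, label) pairs.
    conn.executemany("INSERT INTO labels (item_id, label) VALUES (?, ?)", rows)
    conn.commit()

def load_labels(conn: sqlite3.Connection) -> Dict[str, str]:
    # Hypothetical downstream stage: reads labels back for training.
    return dict(conn.execute("SELECT item_id, label FROM labels").fetchall())

def test_storage_round_trip() -> None:
    # In-memory SQLite: a real SQL engine with no external service to provision.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE labels (item_id TEXT PRIMARY KEY, label TEXT NOT NULL)")
    save_labels(conn, [("img_1", "cat"), ("img_2", "dog")])
    assert load_labels(conn) == {"img_1": "cat", "img_2": "dog"}
    # Unlike a permissive mock, the engine enforces the table's constraints.
    try:
        save_labels(conn, [("img_3", None)])
        raise AssertionError("NULL label should have been rejected")
    except sqlite3.IntegrityError:
        pass
    conn.close()

test_storage_round_trip()
```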

Summary

Integration testing for labeled data focuses on verifying the consistency and correctness of data as it flows through a connected pipeline. Unlike unit testing, which checks components in isolation, integration testing ensures that modules interact reliably, handle data accurately, and maintain alignment in structure and meaning. It is key in detecting interface errors early, preserving data integrity, and keeping pipelines stable as systems evolve. Techniques like black box, white box, and interface-focused testing help uncover mismatches, schema drift, and other issues arising when components exchange data. Despite challenges like environment complexity and subtle inconsistencies, integration testing remains essential for building trustworthy, end-to-end data systems.

FAQ

What is the primary goal of integration testing for labeled data?

The goal is to ensure that labeled data remains consistent, accurate, and correctly structured as it passes through the stages of a data pipeline. It verifies how components interact and how data is handled across those connections.

How does integration testing differ from unit testing?

Unit testing checks individual components in isolation, while integration testing examines how those components work together. It focuses on data flow and interactions between modules rather than their internal logic.

Why is integration testing important in data pipelines?

Data pipelines involve multiple steps where labeled data is transformed, stored, or consumed. Integration testing helps catch issues like mismatches, lost data, or structural changes affecting downstream processes or model performance.

What kinds of issues does integration testing help detect?

It can uncover interface mismatches, schema inconsistencies, incorrect data formatting, and broken links between inputs and labels. These issues are often invisible in isolated testing but can cause significant errors when modules are connected.

What is black box integration testing?

Black box testing treats each module as a closed system and focuses only on inputs and outputs without considering internal logic. It helps validate that connected components produce the correct results when given real or simulated input.

What does white box integration testing involve?

White box testing gives the tester full access to the internal structure of the components. This allows for more detailed validation of data processing and helps identify edge case errors across module boundaries.

What is the purpose of the Gateway's box approach in integration testing?

It tests the interfaces between components, such as data formats, schemas, and protocols. This approach is especially useful in data pipelines, where minor differences at the boundaries can lead to larger downstream issues.

What challenges are common in integration testing for data systems?

Challenges include interface mismatches, unstable test environments, data inconsistencies, and insufficient test coverage. These issues can lead to errors that are hard to detect without a full view of the data pipeline.

How can integration testing help ensure data integrity?

It monitors how data is passed between modules, checking for loss, alteration, or corruption. Tests can validate schema consistency, track label alignment, and confirm that data transformations preserve meaning and structure.

When should integration testing be performed in the development cycle?

It should be performed after unit testing and before complete system or acceptance testing. This helps catch problems in how components work together early, reducing the risk of hidden failures later in the process.