Maintaining Consistency in NLP Dataset Development

In AI and machine learning, managing NLP datasets is a major challenge. Large datasets need constant updating as products and customer behavior change. These changes cause concept drift and data drift, which degrade AI model performance.

NLP projects need a version control system. Such a system tracks changes, ensures reproducibility, and maintains data quality. Without one, teams can run into inconsistencies in data formats, structures, and annotations, which can seriously impact the accuracy and reliability of AI models.

Quick Take

- NLP dataset version control maintains data integrity and consistency.

- Changing products and customer behavior cause concept and data drift, so datasets need frequent updates.

- Version control systems track changes and ensure reproducibility.

- Documentation and metadata help teams understand what changed between dataset versions.

- Team collaboration and communication are required for dataset management.

Understanding NLP Dataset Versioning

NLP data versioning is a way to document which version of a dataset was used to train AI models. This includes recording changes to text corpora, annotated data, and model output.

The Importance of Versioning in NLP

Versioning is essential in NLP dataset management:

- Reproducibility. Allows you to reproduce specific versions of datasets to repeat an experiment and verify results.

- Collaboration. Gives a team working on the same dataset a single source of truth and shows which version of the dataset each team member is working on.

- Quality control. Tracks changes to the data so that issues in AI model performance can be traced back to them.

Common versioning practices

NLP teams use several versioning techniques:

- Snapshot-based. Saves complete copies of the dataset at specific points in time.

- Incremental. Records only the changes between versions.

- Metadata-based. Uses descriptive information to track changes in datasets.
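The three techniques can be illustrated with a minimal Python sketch. It assumes the dataset is a list of unique text records; the function names (`snapshot_id`, `incremental_delta`, `apply_delta`, `metadata_entry`) are hypothetical and not part of any versioning tool.

```python
import hashlib
from typing import Optional


def snapshot_id(records: list[str]) -> str:
    """Snapshot-based: identify a complete copy of the dataset by its content hash."""
    digest = hashlib.sha256()
    for record in records:
        digest.update(record.encode("utf-8"))
        digest.update(b"\n")  # separator, so ["ab"] and ["a", "b"] hash differently
    return digest.hexdigest()


def incremental_delta(old: list[str], new: list[str]) -> dict[str, list[str]]:
    """Incremental: record only the records added or removed between versions."""
    old_set, new_set = set(old), set(new)
    return {
        "added": sorted(new_set - old_set),
        "removed": sorted(old_set - new_set),
    }


def apply_delta(old: list[str], delta: dict[str, list[str]]) -> list[str]:
    """Rebuild the newer version (in sorted order) from the older one plus its delta."""
    removed = set(delta["removed"])
    return sorted([r for r in old if r not in removed] + delta["added"])


def metadata_entry(version: str, parent: Optional[str], records: list[str], note: str) -> dict:
    """Metadata-based: describe a version without storing its records."""
    return {
        "version": version,
        "parent": parent,
        "num_records": len(records),
        "sha256": snapshot_id(records),
        "note": note,
    }
```

The snapshot hash identifies a full copy, the delta stores only what changed, and the metadata entry describes a version without holding the data itself. Real tools combine these ideas in more elaborate ways.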

Issues in NLP Dataset Versioning

Managing NLP datasets raises issues of varying complexity, such as data loss, inconsistent formats, and scalability problems, all of which affect the consistency and reliability of the data.

Addressing these issues requires a comprehensive approach to NLP dataset management. Teams achieve consistency and reliability in NLP projects by building robust versioning strategies and using specialized tools.

NLP Dataset Management Methods

Data Version Control (DVC) works with Git to manage versions of data and AI models. By linking each model to the specific dataset version it was trained on, DVC makes results reproducible.
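DVC itself keeps small pointer files in Git and stores the data in a cache or remote storage. The sketch below only illustrates the underlying idea of linking a model to a dataset hash; it does not use DVC's API or reproduce its file format, and the manifest layout and function names are assumptions.

```python
import hashlib
import json
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a dataset file in chunks so large corpora need not fit in memory."""
    digest = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_training_run(model_name: str, dataset_path: Path, manifest_path: Path) -> dict:
    """Write a small manifest (meant to be committed to Git) linking a model to a dataset hash."""
    entry = {"model": model_name, "dataset": str(dataset_path), "md5": file_md5(dataset_path)}
    manifest_path.write_text(json.dumps(entry, indent=2), encoding="utf-8")
    return entry


def dataset_matches_manifest(manifest_path: Path) -> bool:
    """Before reproducing a result, check that the dataset on disk is the version the model used."""
    entry = json.loads(manifest_path.read_text(encoding="utf-8"))
    return file_md5(Path(entry["dataset"])) == entry["md5"]
```

If the dataset file changes after training, `dataset_matches_manifest` returns `False`, which signals that the experiment cannot be reproduced from the current data.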

Clear Documentation Standards

Establishing documentation standards includes:

- Creating comprehensive metadata.

- Maintaining detailed change logs.

- Tracing the provenance of data.

These steps make changes to the data traceable and help teams work together on NLP projects.
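One way to keep metadata, change logs, and provenance together is a small structured record stored next to each dataset version. The following Python sketch assumes a hypothetical `DatasetVersionInfo` class; the field names are illustrative, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ChangeLogEntry:
    version: str
    date: str  # ISO 8601 date, e.g. "2024-05-01"
    author: str
    summary: str


@dataclass
class DatasetVersionInfo:
    name: str
    version: str
    sources: list[str]  # provenance: where the raw text came from
    annotation_guidelines: str  # which guideline revision annotators followed
    label_set: list[str]
    changelog: list[ChangeLogEntry] = field(default_factory=list)

    def add_change(self, new_version: str, date: str, author: str, summary: str) -> None:
        """Record why the dataset changed and move to the new version."""
        self.changelog.append(ChangeLogEntry(new_version, date, author, summary))
        self.version = new_version

    def to_json(self) -> str:
        """Serialize for storage next to the data (nested dataclasses become dicts)."""
        return json.dumps(asdict(self), indent=2)
```

Committing the resulting JSON alongside the data means every version carries its own history and sources.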

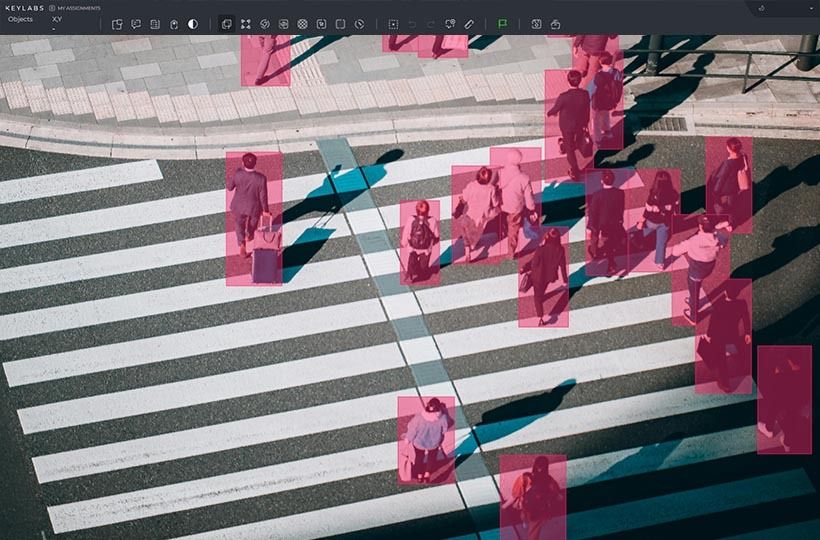

Using Automated Tools

Automated tools simplify and speed up work that annotators previously did by hand. Their functions include collecting, cleaning, preprocessing, annotating, and analyzing text data. Annotation platforms let you add labels to text quickly and accurately, for example marking parts of speech or tone. This reduces manual labor, improves accuracy, and makes projects easier to scale.
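As an example of automated cleaning and preprocessing, here is a minimal sketch, assuming the raw data is a list of strings that may contain HTML tags, irregular whitespace, and duplicates. The function name and the `min_tokens` threshold are hypothetical choices.

```python
import re
import unicodedata


def clean_corpus(texts: list[str], min_tokens: int = 3) -> list[str]:
    """Normalize, filter, and deduplicate raw text records, keeping first occurrences."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for text in texts:
        text = unicodedata.normalize("NFKC", text)  # unify look-alike Unicode forms
        text = re.sub(r"<[^>]+>", " ", text)  # strip leftover HTML tags
        text = re.sub(r"\s+", " ", text).strip()  # collapse whitespace
        if len(text.split()) < min_tokens:
            continue  # drop fragments too short to label
        key = text.lower()
        if key in seen:
            continue  # drop case-insensitive duplicates
        seen.add(key)
        cleaned.append(text)
    return cleaned
```

Running the same cleaning function on every new batch keeps formatting consistent across dataset versions.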

Version Control Tools and Frameworks

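Three widely used tools simplify the data version control process:

- DVC. Versions datasets and models alongside Git, as described above.

- Git LFS. Stores large files outside the Git repository and keeps lightweight pointer files in their place.

- MLflow. Tracks experiments, including parameters, metrics, and artifacts such as datasets and models.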

Monitoring Dataset Changes Over Time

Tracking quality metrics over time is a practical way to monitor NLP datasets. These metrics indicate the health of the dataset and help maintain consistency. They include:

- Vocabulary Size.

- Sentence Length Distribution.

- Entity Coverage.

- Data Drift Percentage.

Monitoring these metrics helps identify problems early and keeps the dataset reliable for NLP tasks.
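The metrics above can be computed with a short Python sketch. It assumes lowercase whitespace tokenization, entity annotations given as a flat list of entity type labels, and a non-empty dataset. It also defines data drift percentage, which has no single standard definition, as the share of tokens whose word type does not appear in a reference version's vocabulary.

```python
from statistics import mean, median


def tokenize(text: str) -> list[str]:
    # Simplifying assumption: lowercase whitespace tokenization.
    return text.lower().split()


def dataset_metrics(
    sentences: list[str],
    entity_labels: list[str],
    expected_entity_types: set[str],
    reference_vocab: set[str],
) -> dict:
    """Compute simple health metrics; assumes at least one non-empty sentence."""
    tokenized = [tokenize(s) for s in sentences]
    lengths = [len(tokens) for tokens in tokenized]
    all_tokens = [tok for tokens in tokenized for tok in tokens]
    unseen = sum(1 for tok in all_tokens if tok not in reference_vocab)
    return {
        "vocabulary_size": len(set(all_tokens)),
        "sentence_length": {
            "min": min(lengths),
            "median": median(lengths),
            "mean": mean(lengths),
            "max": max(lengths),
        },
        # Share of expected entity types that occur at least once.
        "entity_coverage": len(set(entity_labels) & expected_entity_types)
        / len(expected_entity_types),
        # Assumed drift definition: % of tokens unseen in the reference vocabulary.
        "drift_pct": 100 * unseen / len(all_tokens),
    }
```

Storing these numbers with each dataset version makes it easy to compare versions side by side.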

Using Change Detection Algorithms

Change detection algorithms help keep natural language processing models stable and accurate. They automatically detect changes in the data, so teams can respond before model performance degrades.

Such algorithms analyze new or updated parts of the corpus and compare them with older versions using metrics such as word frequency, sentence length, part-of-speech distribution, or named entities. A significant deviation may indicate data drift, which calls for retraining the model or updating annotations. This helps maintain the consistency of the dataset and catch errors or inconsistencies in time.
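A minimal change detection sketch follows, comparing the word frequency distributions of two dataset versions. It uses Jensen-Shannon divergence as the comparison metric, which is one common choice rather than a method prescribed here; the tokenization and the `threshold=0.1` value are assumptions to tune per project.

```python
import math
from collections import Counter


def word_distribution(texts: list[str]) -> Counter:
    """Word frequencies, using lowercase whitespace tokenization (an assumption)."""
    return Counter(tok for text in texts for tok in text.lower().split())


def js_divergence(p_counts: Counter, q_counts: Counter) -> float:
    """Jensen-Shannon divergence with log base 2, so the result lies in [0, 1]."""
    vocab = set(p_counts) | set(q_counts)
    p_total, q_total = sum(p_counts.values()), sum(q_counts.values())
    jsd = 0.0
    for word in vocab:
        p = p_counts[word] / p_total  # Counter returns 0 for missing words
        q = q_counts[word] / q_total
        m = (p + q) / 2
        if p > 0:
            jsd += 0.5 * p * math.log2(p / m)
        if q > 0:
            jsd += 0.5 * q * math.log2(q / m)
    return jsd


def detect_drift(old_texts: list[str], new_texts: list[str], threshold: float = 0.1) -> tuple[float, bool]:
    """Return the divergence score and whether it exceeds the drift threshold."""
    score = js_divergence(word_distribution(old_texts), word_distribution(new_texts))
    return score, score > threshold
```

Identical distributions score 0 and completely disjoint vocabularies score 1, so the threshold sits on an interpretable scale. The same comparison works for other distributions, such as part-of-speech tags or entity types.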

Collaborate with teams to manage dataset versions

Effective NLP dataset management and version control require seamless teamwork, and clearly defined roles keep that work running smoothly. Assign specific responsibilities to team members:

- Dataset managers oversee data integrity and updates.

- QA professionals monitor and verify data accuracy and consistency.

- Administrators control versions and workflows.

Ensure communication

Establish protocols for dataset changes and updates. Regular team meetings help the team agree on version control practices. Review data modifications the way you review code, so errors are caught before the changes are merged.
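A data change can be checked automatically before a reviewer looks at it. This sketch assumes records are dictionaries with hypothetical `id`, `text`, and `label` fields and a fixed label set; a real project would adapt the checks to its own schema.

```python
def validate_records(records: list[dict], allowed_labels: set[str]) -> list[str]:
    """Return human-readable problems; an empty list means the change can go to review."""
    problems: list[str] = []
    seen_ids: set = set()
    for i, rec in enumerate(records):
        rec_id = rec.get("id")
        if rec_id is None:
            problems.append(f"record {i}: missing 'id'")
        elif rec_id in seen_ids:
            problems.append(f"record {i}: duplicate id {rec_id!r}")
        else:
            seen_ids.add(rec_id)
        text = rec.get("text")
        if not isinstance(text, str) or not text.strip():
            problems.append(f"record {i}: empty or missing 'text'")
        if rec.get("label") not in allowed_labels:
            problems.append(f"record {i}: unknown label {rec.get('label')!r}")
    return problems
```

Running such a check in a continuous integration job, and blocking the merge when the returned list is non-empty, is one way to make it part of the review process.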

Use collaboration platforms

Platforms designed for NLP datasets streamline the workflow. They provide shared access to the corpus, let you distribute tasks across the team, track progress, and discuss conflicting cases as you work. They control quality through double annotation, consistency checks, and automatic error detection. Many platforms also include tools for data visualization, statistical analysis, change logging, and integration with machine learning tools, which makes collecting and processing datasets easier.
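Double annotation is often summarized with an agreement score. The sketch below computes Cohen's kappa, a standard chance-corrected agreement measure for two annotators; it is offered as one possible consistency check, not as what any particular platform implements.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Chance-corrected agreement between two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("annotators must label the same, non-empty set of items")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected by chance, given each annotator's label frequencies.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label throughout; kappa is undefined
    return (observed - expected) / (1 - expected)
```

For example, two annotators who agree on three of four sentiment labels, with label frequencies that give a chance agreement of 0.5, get a kappa of 0.5. A low score on a new batch is a signal to revisit the annotation guidelines.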

Legal and ethical considerations

You must comply with data protection laws such as the GDPR (the EU General Data Protection Regulation) or the CCPA (the California Consumer Privacy Act). Among other things, this means the data must be collected lawfully.

Consider biases in the data, which can lead AI models to produce discriminatory or unfair results. Carefully verify the data sources and how balanced they are, and implement mechanisms to detect and reduce bias.
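Checking balance can start with something as simple as measuring how much of the dataset each group contributes. This sketch assumes every record carries a group tag (for example a dialect or data source) and uses a hypothetical minimum share threshold; balance is only one signal and does not by itself rule out bias.

```python
from collections import Counter


def group_shares(group_tags: list[str], expected_groups: set[str]) -> dict[str, float]:
    """Share of records per group, including expected groups with no records at all."""
    counts = Counter(group_tags)
    total = len(group_tags)
    return {group: counts[group] / total for group in expected_groups}


def underrepresented_groups(
    group_tags: list[str], expected_groups: set[str], min_share: float = 0.1
) -> list[str]:
    """Groups whose share falls below the (hypothetical) min_share threshold."""
    shares = group_shares(group_tags, expected_groups)
    return sorted(group for group, share in shares.items() if share < min_share)
```

Listing expected groups explicitly matters: a group with no records at all would otherwise never show up in the counts.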

Privacy and compliance

Common data protection measures include:

- Data encryption.

- Access controls that limit which personnel can reach the data.

- Regular security reviews to identify and address potential threats.

- Data retention policies that limit how long data is kept, reducing the risk of unauthorized access.
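A retention policy can be enforced with a filter applied when a new dataset version is built. This sketch assumes each record has a hypothetical `collected_at` field holding a timezone-aware ISO 8601 timestamp and a retention window measured in days.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def apply_retention(
    records: list[dict], max_age_days: int, now: Optional[datetime] = None
) -> list[dict]:
    """Keep only records collected within the retention window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    # Assumes timezone-aware timestamps such as "2024-05-20T00:00:00+00:00".
    return [r for r in records if datetime.fromisoformat(r["collected_at"]) >= cutoff]
```

Because earlier snapshots may still contain expired records, a retention policy for a versioned dataset also has to cover stored past versions, not just the latest one.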

Future trends in NLP dataset versioning

Dynamic datasets that are regularly updated with new examples, shifts in language use, and new context help keep models up to date.

There is a growing demand for datasets that cover not only widely spoken languages but also dialects and minority languages with fewer speakers. This makes NLP models more broadly applicable and fair.

New dataset versions are likely to undergo additional checks for toxic content, bias, and ethical risks, and open annotation guidelines, codes of ethics, and frameworks for responsible use are likely to become more common.

A growing number of datasets combine text with other types of data, such as images, audio, and video. This opens the way to AI models that can work with multiple types of information.

FAQ

What are NLP dataset versions, and why are they important?

NLP dataset versions are saved versions of a dataset at various stages of its updates or changes. They help track changes, ensure experiment reproducibility, and control the quality of AI models.

What are the common issues with NLP dataset versions?

Issues include data loss, inconsistent formats, and scalability problems. These compromise data integrity, hurt AI model performance, and slow down the project's progress.

What are the best practices for managing an NLP dataset?

NLP dataset management practices include thorough documentation, versioning, and regular data quality monitoring.

What tools are available for versioning NLP datasets?

Popular tools include DVC, Git LFS, and MLflow. These tools support effective version control.

How can teams effectively collaborate on NLP dataset versions?

Clear roles, communication protocols, and collaboration platforms are required.

What legal and ethical considerations should be considered when versioning NLP datasets?

It is important to comply with data protection laws such as the GDPR and CCPA. Ethical issues, such as bias in the data, also need attention.

What are the emerging trends in NLP dataset versioning?

Emerging trends include dynamic and updatable datasets, multilingualism and cultural inclusiveness, ethical annotation and filtering, and multimodality.