Mean Average Precision (mAP): Differences and Applications

mAP is central to evaluating leading object detection algorithms such as Faster R-CNN, MobileNet SSD, and YOLO, and it is the preferred metric for benchmark challenges such as Pascal VOC and COCO. In these settings, a model succeeds only if it both classifies objects correctly and localizes them accurately.

Grasping mAP is vital for those in computer vision or information retrieval. It offers a balanced view of a model's object identification accuracy and false positive rate. By examining precision and recall across various confidence levels, mAP paints a detailed performance picture that single metrics can't.

Key Takeaways

- mAP is essential for evaluating object detection models like Faster R-CNN and YOLO

- It combines precision and recall metrics for comprehensive performance assessment

- mAP is widely used in benchmark challenges like Pascal VOC and COCO

- Precision-recall curves visualize model performance at different confidence thresholds

- Improving mAP involves enhancing data quality and optimizing detection algorithms

Understanding Mean Average Precision in Machine Learning

Mean Average Precision (mAP) is key in evaluating object detection and information retrieval systems. It combines precision and recall to offer a detailed look at model performance across multiple classes. This metric is essential for assessing how well models perform in these areas.

Definition of Mean Average Precision

mAP is the mean of the Average Precision (AP) scores computed for each class in a dataset. Because a detection counts as correct only when both its class and its location are right, mAP reflects localization accuracy and classification performance together. This makes it a standard choice for object detection evaluation.
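Written out, the definition is a plain average. The symbols here are chosen for illustration: N is the number of classes and AP_i is the average precision of class i.

```latex
\mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{AP}_i
```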

Importance in Object Detection and Information Retrieval

In object detection, mAP assesses a model's ability to identify and locate objects in images. For information retrieval, it evaluates the system's capacity to return relevant documents based on user queries. mAP is especially useful when dealing with multiple classes and varying confidence thresholds.

Key Components of mAP Calculation

Several elements are crucial for mAP calculation:

- Precision: The ratio of true positives to total predicted positives

- Recall: The ratio of true positives to total actual positives

- Intersection over Union (IoU): Measures overlap between predicted and actual bounding boxes

- Confusion Matrix: Provides insights into true positives, false positives, and false negatives

| Metric | Formula | Application |
|---|---|---|
| Precision | TP / (TP + FP) | Measures accuracy of positive predictions |
| Recall | TP / (TP + FN) | Assesses ability to find all positive instances |
| IoU | Overlap / Union | Evaluates localization accuracy in object detection |

By integrating these components, mAP offers a comprehensive metric for assessing model performance in various machine learning tasks. It is particularly valuable in object detection and information retrieval contexts.

The Fundamentals of Precision and Recall

Precision and recall are foundational metrics for evaluating classification in machine learning. They are essential for gauging model performance and the trade-offs involved.

Precision gauges the accuracy of positive predictions. It's calculated by dividing true positives by the sum of true and false positives. High precision signifies that your model's positive predictions are mostly correct.

Recall, however, measures the model's ability to identify all positive instances. It's computed by dividing true positives by the sum of true positives and false negatives. High recall indicates your model's effectiveness in detecting most positive cases.

The relationship between precision and recall is a trade-off: improving one often lowers the other. Lowering the confidence threshold, for instance, admits more detections, which tends to raise recall while letting in more false positives and reducing precision. In object detection, the Intersection over Union (IoU) threshold also matters, because a stricter threshold turns more detections into false positives.

Grasping this trade-off is crucial when comparing mAP with a single precision value. A single precision value describes the model at one threshold, whereas mAP summarizes precision across the full range of recall levels and across classes. This makes mAP a preferred metric for evaluating object detection models.

"Precision without recall is like a sports car without fuel - impressive but not very useful."

To visualize model performance, a precision-recall curve is used. This graph plots precision against recall at different threshold settings. The area under this curve represents the Average Precision (AP), a key component in calculating mAP.

Confusion Matrix: The Foundation of mAP

The confusion matrix is essential for calculating mAP in machine learning. It categorizes predictions into four main areas, offering a detailed view of model performance.

True Positives and True Negatives

True Positives (TP) are instances correctly identified as positive. True Negatives (TN) are instances correctly identified as negative. These elements demonstrate your model's predictive accuracy.

False Positives and False Negatives

False Positives (FP) occur when the model incorrectly labels something as positive. False Negatives (FN) happen when it misses a positive instance. These errors point out areas for model enhancement.

| Predicted \ Actual | Actual Positive | Actual Negative |
|---|---|---|
| Predicted Positive | 4 (TP) | 1 (FP) |
| Predicted Negative | 2 (FN) | 3 (TN) |

Impact on mAP Calculation

The confusion matrix affects precision and recall, key for mAP calculation. Precision shows the accuracy of positive predictions. Recall measures the model's ability to identify all positive instances.

For the given data:

- Precision: 0.8

- Recall: 0.67

These metrics are foundational for mAP calculation. They offer a thorough assessment of your model's performance at various confidence levels.
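The precision and recall figures for this confusion matrix can be reproduced in a few lines. This is a minimal sketch; the helper names `precision` and `recall` are chosen here for illustration and do not come from any particular library.

```python
def precision(tp: int, fp: int) -> float:
    """Share of predicted positives that are actually positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual positives that the model found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Counts taken from the confusion matrix above.
tp, fp, fn, tn = 4, 1, 2, 3

p = precision(tp, fp)
r = recall(tp, fn)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.80 recall=0.67
```

Note that the true negative count does not enter either formula, which is one reason these metrics suit object detection, where true negatives are hard to define.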

By examining the confusion matrix, you uncover your model's strengths and weaknesses. This insight enables targeted improvements and more precise mAP calculations.

Intersection over Union (IoU): Measuring Localization Accuracy

Intersection over Union (IoU) is vital for assessing object localization accuracy. It evaluates the overlap between predicted and actual bounding boxes. This metric offers insights into the effectiveness of object detection algorithms.

The IoU score ranges from 0 to 1, with higher values indicating better accuracy. An IoU of 0 signifies no overlap, while 1 means a perfect match. The formula for calculating IoU is:

IoU = Area of Intersection / Area of Union
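As a rough sketch, the formula can be computed from box coordinates as follows. It assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`; that format and the function name `iou` are assumptions made for this example.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


# A box shifted by half its width: intersection 50, union 150.
print(round(iou((0, 0, 10, 10), (5, 0, 15, 10)), 3))  # 0.333
```

The example shows that a box covering half of the ground truth can still score well below 0.5, because the union grows as the boxes drift apart.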

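In object detection, the IoU threshold is key in determining prediction correctness. A common threshold is 0.5: a prediction counts as correct only if its IoU with the ground truth box is at least 0.5. This is stricter than requiring the prediction to cover half of the ground truth box, since the intersection is divided by the union of both boxes. The threshold balances how tightly boxes must fit against how many detections are rejected as false positives.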

IoU is crucial for distinguishing between true positives, false positives, and false negatives in object detection. It complements other evaluation metrics to offer a comprehensive model performance assessment.
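One common way to turn IoU values into true positives, false positives, and false negatives is greedy matching in order of confidence. The sketch below illustrates that idea for a single image and class. The function name `match_detections`, the box format, and the tie-handling are assumptions for this example, and benchmark toolkits differ in the details.

```python
def iou(a, b):
    """IoU of two (x_min, y_min, x_max, y_max) boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(predictions, ground_truths, iou_threshold=0.5):
    """Label predictions as TP/FP and count unmatched ground truths as FN.

    predictions: list of (confidence, box); ground_truths: list of boxes.
    Returns (labels, fn_count), where labels is a list of
    (confidence, is_true_positive) in descending confidence order.
    """
    matched = set()  # indices of ground truths already claimed
    labels = []
    for conf, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truths):
            if idx in matched:
                continue  # each ground truth can justify only one detection
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            labels.append((conf, True))
        else:
            labels.append((conf, False))
    return labels, len(ground_truths) - len(matched)


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [
    (0.9, (1, 1, 10, 10)),    # good fit on the first object
    (0.8, (0, 0, 9, 9)),      # duplicate detection of the first object
    (0.6, (50, 50, 60, 60)),  # background
]
labels, fn = match_detections(preds, gts)
print(labels, fn)  # [(0.9, True), (0.8, False), (0.6, False)] 1
```

In this sketch the duplicate detection becomes a false positive because its ground truth is already claimed, and the second object, which no prediction covers, is the single false negative.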

Training and evaluating object detection models requires annotated ground truth boxes, typically split into training, validation, and test sets. IoU is computed against these annotations, so their quality limits the quality of the evaluation. In Python, IoU can be computed directly from bounding box coordinates.

Understanding and applying IoU helps measure and enhance your model's localization accuracy. This leads to more reliable object detection results.

Mean Average Precision: Calculation and Interpretation

Mean Average Precision (mAP) is a key metric for assessing object detection models. It's vital for machine learning experts to grasp how to calculate mAP and understand its scores.

Step-by-Step mAP Calculation Process

The mAP calculation involves several critical steps:

- Generate prediction scores for each object in the image

- Convert scores to class labels using a threshold

- Calculate the confusion matrix (True Positives, False Positives, False Negatives)

- Compute precision and recall values

- Plot the precision-recall curve

- Calculate the area under the curve (AUC) to get the Average Precision (AP)

- Repeat for all classes and take the mean to obtain mAP
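The steps above can be sketched in plain Python. This is an illustrative version, not a benchmark implementation: it assumes each detection has already been labeled as a true or false positive, it sums the area under the curve step by step without the interpolation some benchmarks apply, and the names `average_precision` and `mean_average_precision` are hypothetical.

```python
def average_precision(detections, num_ground_truths):
    """Area under the precision-recall curve for one class.

    detections: list of (confidence, is_true_positive).
    num_ground_truths: number of annotated objects of this class.
    """
    if num_ground_truths == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    ap = 0.0
    prev_recall = 0.0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precision = tp / (tp + fp)
        recall = tp / num_ground_truths
        # Add the rectangle gained since the previous recall level.
        ap += precision * (recall - prev_recall)
        prev_recall = recall
    return ap


def mean_average_precision(per_class):
    """per_class maps class name -> (detections, num_ground_truths)."""
    aps = {name: average_precision(d, n) for name, (d, n) in per_class.items()}
    return sum(aps.values()) / len(aps), aps


per_class = {
    "cat": ([(0.9, True), (0.8, False), (0.7, True)], 2),
    "dog": ([(0.95, True), (0.6, True), (0.3, False)], 3),
}
map_score, aps = mean_average_precision(per_class)
print({k: round(v, 3) for k, v in aps.items()})  # {'cat': 0.833, 'dog': 0.667}
print(round(map_score, 3))                       # 0.75
```

The "dog" class loses a third of its score because one of its three objects is never detected, even though its first two detections are correct.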

Interpreting mAP Scores

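Higher mAP scores signify better model performance. An mAP of 1.0 indicates perfect object detection with precise bounding boxes. Real-world models often score between 0.5 and 0.9, but the range depends heavily on the dataset and the IoU threshold, so scores are comparable only when computed under the same evaluation protocol.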

Advantages and Limitations of mAP

mAP has several advantages. It offers a comprehensive performance measure across multiple classes and handles class imbalances effectively. It balances precision and recall for a fair evaluation.

However, mAP has its limitations. It's sensitive to the Intersection over Union (IoU) threshold. It may not fully reflect real-world performance in certain scenarios. When evaluating mAP, consider these factors for a thorough assessment of your model's capabilities.

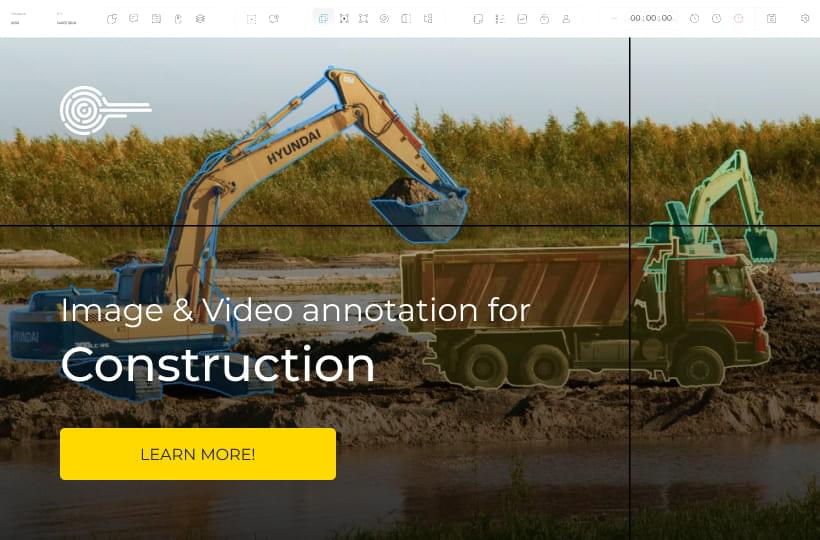

Applications of mAP in Object Detection

Mean Average Precision (mAP) is vital for evaluating object detection algorithms in computer vision tasks. It assesses the performance of models like Faster R-CNN, YOLO, and MobileNet SSD. mAP's applications span beyond simple object detection, touching on self-driving cars, visual search, and medical imaging.

In autonomous vehicles, high mAP scores are critical for safety. Object detection algorithms must accurately identify pedestrians, vehicles, and road signs to prevent accidents. The precision and recall trade-offs captured by mAP directly affect these systems' reliability.

Visual search algorithms heavily rely on mAP to gauge their accuracy and completeness. A high mAP score indicates the algorithm's ability to find relevant results while reducing false positives. This is especially valuable in e-commerce and content management systems.

Medical image analysis also benefits from mAP. In tumor detection models, mAP averages precision-recall curves for all classes. This is crucial for early diagnosis and treatment planning, where accuracy can significantly impact patient outcomes.

- Self-driving cars: mAP ensures accurate object detection for safety

- Visual search: mAP evaluates accuracy in retrieving relevant images and videos

- Medical imaging: mAP assesses tumor detection model performance

mAP's versatility makes it essential across various computer vision tasks. It provides a comprehensive evaluation of object detection algorithms. This helps researchers and developers enhance model performance, creating more robust systems for real-world applications.

The Precision-Recall Curve: Visualizing Performance

The precision-recall curve is a powerful tool for visualizing model performance in binary classification tasks. It helps understand the trade-off between precision and recall at various confidence thresholds.

Understanding the Precision-Recall Trade-off

Precision measures the accuracy of positive predictions, while recall indicates the proportion of actual positives identified. The trade-off between these metrics is crucial for model evaluation. Precision-recall curves plot these values against each other, revealing how changes in the classification threshold affect performance.
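A small, made-up example illustrates how moving the threshold shifts both metrics. The scores and labels below are invented for illustration, and returning a precision of 1.0 when nothing is predicted positive is a convention assumed here.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when scores >= threshold are predicted positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if (tp + fp) else 1.0  # assumed convention
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical confidence scores and true labels (1 = actual positive).
scores = [0.95, 0.85, 0.70, 0.55, 0.40, 0.20]
labels = [1, 1, 0, 1, 0, 0]

for t in (0.9, 0.6, 0.3):
    p, r = precision_recall_at(scores, labels, t)
    print(f"threshold={t:.1f} precision={p:.2f} recall={r:.2f}")
# threshold=0.9 precision=1.00 recall=0.33
# threshold=0.6 precision=0.67 recall=0.67
# threshold=0.3 precision=0.60 recall=1.00
```

Each printed pair is one point on the precision-recall curve: as the threshold drops, recall climbs from 0.33 to 1.00 while precision falls from 1.00 to 0.60.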

Analyzing Curve Shapes and Areas

The shape of a precision-recall curve provides insights into model behavior. A curve that maintains high precision as recall increases indicates a strong model. The area under the curve (AUC) is a single-value metric for overall performance, with larger areas signifying better models.

| Curve Shape | Interpretation |
|---|---|
| Steep initial drop | Precision falls quickly as recall rises; high precision only at low recall |
| Gradual slope | Balanced precision-recall trade-off |
| Flat line | Consistent precision across recall values |

Using PR Curves to Compare Models

Precision-recall curves excel in model comparison, especially for imbalanced datasets. By plotting curves for different models on the same graph, you can visually assess their relative strengths. This performance visualization technique helps in selecting the best model for your specific use case.

Remember, the ideal model achieves high precision and recall simultaneously. Use these curves to fine-tune your models and make informed decisions about threshold selection for optimal performance.

mAP vs. Other Evaluation Metrics

In the realm of machine learning, you'll come across numerous metrics to assess model performance. Mean Average Precision (mAP) is particularly crucial in object detection tasks. However, it's not the sole metric in this field. Let's delve into a comparison of mAP with other prominent metrics to grasp their distinct advantages.

The F1 score is a well-liked metric for classification tasks, balancing precision and recall. It's ideal when you seek a single metric that weighs both false positives and negatives. Unlike mAP, the F1 score doesn't consider multiple thresholds, making it less suitable for object detection scenarios.
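For comparison, here is a minimal sketch of the F1 score as the harmonic mean of precision and recall, applied to the counts from the confusion matrix example earlier (TP = 4, FP = 1, FN = 2). The function name `f1_score` is chosen for illustration.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall at one fixed threshold."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(4, 1, 2), 3))  # 0.727
```

The result describes the model at a single operating point, whereas AP integrates over every threshold on the precision-recall curve.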

AUC-ROC (Area Under the Curve - Receiver Operating Characteristic) is another notable metric. It measures a model's ability to differentiate between classes. Though valuable for binary classification, it falls short in multi-class object detection, where mAP excels: the ROC curve depends on true negatives, which are not well defined when any region of an image could be a candidate detection.

- mAP: Ideal for multi-class object detection, handling various thresholds and classes

- F1 Score: Balances precision and recall, best for binary classification

- AUC-ROC: Excellent for assessing class separation, primarily used in binary classification

Improving mAP Scores in Machine Learning Models

Boosting Mean Average Precision (mAP) scores is crucial for enhancing machine learning model performance. The metric ranges from 0 to 1 and is also used to assess ranking and recommender systems, where a score of 1 signifies a perfect ranking with all relevant items at the top. Let's delve into strategies to elevate mAP scores and optimize your models.
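For the ranking case, a short sketch shows why placing relevant items first yields a score of 1. It assumes binary relevance flags listed in ranked order and that every relevant item appears somewhere in the list. The names `ranking_average_precision` and `ranking_map` are hypothetical.

```python
def ranking_average_precision(relevance):
    """AP for one ranked list of 0/1 relevance flags (best-ranked first)."""
    hits = 0
    total = 0.0
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            total += hits / rank  # precision at this rank
    return total / hits if hits else 0.0


def ranking_map(queries):
    """Mean of the per-query AP values."""
    return sum(ranking_average_precision(q) for q in queries) / len(queries)


perfect = [1, 1, 0, 0]  # both relevant items ranked first
mixed = [0, 1, 0, 1]    # relevant items at ranks 2 and 4

print(ranking_average_precision(perfect))  # 1.0
print(ranking_average_precision(mixed))    # 0.5
print(ranking_map([perfect, mixed]))       # 0.75
```

Both lists contain the same two relevant items, and only their positions differ, which is exactly what the metric rewards.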

Data Quality Enhancement Strategies

Enhancing data quality is essential for boosting mAP scores. It's important to diversify your training data and balance class representations. This strategy aids models in learning more robust features, enhancing performance across various scenarios.

Algorithm Optimization Techniques

Model optimization is key to increasing mAP. Fine-tune hyperparameters, leverage transfer learning, or employ ensemble methods. These approaches can notably enhance your model's capacity to rank relevant items at the forefront of recommendations or search results.

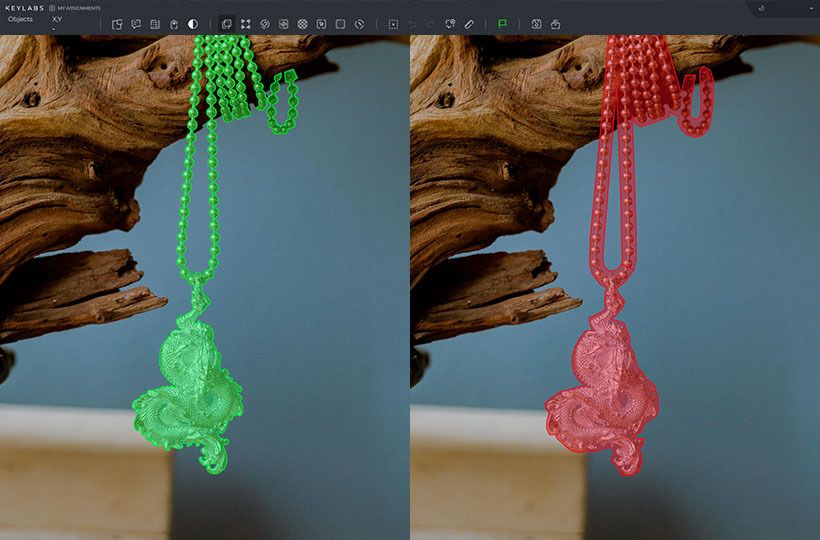

Annotation Process Refinement

Refining the annotation process is critical for precise ground truth data. Develop clear guidelines for annotators and conduct rigorous quality checks. This refinement directly affects mAP scores by offering more accurate data for model training and evaluation.

Remember, mAP focuses on placing relevant items at the top of the list. While it may be challenging to interpret intuitively, focusing on these improvement strategies will enhance model performance. The computer vision community frequently employs mAP for comparing object detection systems, making it a valuable metric to master.

Future Trends and Developments in mAP Usage

Object detection technology is advancing rapidly, with mAP playing a pivotal role in these advancements. The future of object detection metrics will see mAP adapting to new challenges in 3D object detection and point cloud data analysis. This evolution will enhance the accuracy of complex spatial relationships in autonomous driving and robotics.

Researchers are working to improve mAP's handling of occlusions and small objects. These enhancements will significantly boost the metric's effectiveness in crowded scenes and long-range detection tasks. Expect future mAP versions to offer more detailed performance insights, especially in real-world scenarios.

The integration of temporal information in video analysis is another significant development for mAP. As object detection moves beyond static images, mAP will evolve to assess tracking consistency and object persistence across video frames. This will be essential for surveillance, sports analytics, and automated video content analysis.

FAQ

What is Mean Average Precision (mAP)?

Mean Average Precision (mAP) is a key metric for evaluating object detection and information retrieval models. It assesses a model's performance across various classes and confidence levels. It considers both the accuracy of localization and the precision of classification.

Why is mAP important in object detection and information retrieval?

mAP is crucial for evaluating models with multiple classes and different confidence thresholds. It evaluates both the accuracy of localization (through IoU) and the precision of classification. This makes it a single-number metric for assessing overall model performance.

What are the key components of mAP calculation?

The calculation of mAP involves precision, recall, Intersection over Union (IoU), and the confusion matrix. These elements directly influence the mAP score. They help evaluate different aspects of model performance.

How does the confusion matrix contribute to mAP calculation?

The confusion matrix is used to calculate precision and recall. It includes True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN). These values are essential for determining mAP.

What is the role of Intersection over Union (IoU) in mAP?

Intersection over Union (IoU) measures the overlap between predicted and ground truth boxes. It indicates localization accuracy. Different IoU thresholds can significantly affect precision values and, consequently, the mAP score.

How is mAP calculated, and how do you interpret the scores?

mAP calculation involves several steps. First, prediction scores are generated and converted to class labels. Then, true positives, false positives, and false negatives are counted. Precision and recall are computed, and the area under the precision-recall curve gives the average precision for that class. The process is repeated for each class, and the mean is taken. Higher mAP scores indicate better overall model performance.

What are some applications of mAP in object detection?

mAP is widely used in evaluating object detection algorithms like Faster R-CNN, YOLO, and MobileNet SSD. It's crucial in benchmark challenges like COCO and ImageNet. mAP is also applied in segmentation systems, search engine and visual search evaluation, and medical imaging for tumor detection.

How does the precision-recall curve relate to mAP?

The precision-recall curve visualizes the trade-off between precision and recall at various confidence thresholds. The area under the precision-recall curve (AUC) is used in mAP calculation. The curve shape provides insights into model performance.

How does mAP compare to other evaluation metrics like F1 score and AUC-ROC?

While F1 score balances precision and recall, it doesn't consider multiple thresholds. AUC-ROC focuses on the model's ability to distinguish between classes. mAP is preferred in object detection due to its ability to handle multiple classes and thresholds. Each metric has its strengths, and the choice depends on the specific task and dataset characteristics.

How can mAP scores be improved in machine learning models?

Strategies to improve mAP scores include enhancing data quality and optimizing algorithms. Increasing diversity, balancing class representations, and ensuring accurate annotations are key. Fine-tuning hyperparameters, using transfer learning, or implementing ensemble methods can also help. Additionally, refining the annotation process through clear guidelines and quality checks is crucial.