Multi-Sensor Labeling: LiDAR, Camera & Radar

In the world of autonomous systems, relying on a single type of sensor is like trying to navigate a noisy city with your eyes closed or your ears plugged. Every sensor has its strengths, but also critical limitations. Cameras provide incredible detail, reading colors, textures, and text, yet they become nearly helpless in the dark or when blinded by the sun.

LiDAR, in turn, builds precise 3D geometry of the space but cannot distinguish color. Radar remains a reliable guide in heavy rain or fog when other sensors go "blind", although it lacks the resolution to recognize small objects. Multi-sensor labeling emerges as the technological answer to these individual weaknesses.

Instead of analyzing each data stream in isolation, this method allows them to be combined into a single, unified model of reality. Labeling data from multiple sources simultaneously creates a context for AI where the weakness of one sensor is compensated for by the strength of another. This creates a kind of "digital hypersensitivity" that allows self-driving systems to make safe decisions in the most complex real-world conditions.

Quick Take

- Multi-sensor fusion combines the strengths of different devices into a single, ultra-precise model of reality.

- Sensor calibration and time alignment of data are the foundation; without them, it is impossible to correctly merge 2D and 3D spaces.

- The use of track IDs and synchronized annotation ensures that an object is perceived as a single entity across all projections.

- A labeling error of just a few centimeters can lead to a wrong prediction of a pedestrian's intent or a dangerous maneuver.

- High-quality labeling on the first try reduces the cost of retraining AI models and speeds up the product's time to market.

How Artificial Intelligence Perceives the World Through Sensors

For a self-driving car or a robot to move safely, it needs to see the space around it as clearly as a human does. Since no single device is perfect, engineers use a whole set of different sensors. This process is called multi-sensor fusion, which means merging data from all sources into one clear picture where each sensor helps the other better understand the situation.

The Role of Each Sensor in the Big Picture

Cameras provide rich colors and fine details, making them essential for recognizing road signs or the color of a traffic light. However, a camera struggles in fog or at night, and it cannot accurately measure how far away an object is.

In such cases, LiDAR helps by scanning the space with lasers to create a precise "point cloud." It doesn't see colors, but it measures the distance to surrounding surfaces with centimeter-level accuracy. Radar keeps working in bad weather and directly measures the relative speed of other vehicles. Thus, different views of the same scene are obtained: LiDAR provides volume, the camera provides color, and radar provides speed.

Synchronization and Data Alignment

This is the most critical stage, as data from different devices must work like a single, coordinated organism. The first step is sensor calibration: measuring the exact position and orientation of every sensor relative to the others, so the computer understands where the camera is located compared to the LiDAR. Without this, the data will be offset, and the system won't be able to merge the information correctly.
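To make the effect of calibration concrete, here is a minimal sketch of an extrinsic transform, assuming both sensor frames share the same axis orientation and differ only by a yaw angle and a mounting offset. The function names (`make_extrinsic`, `transform_point`) and all numbers are hypothetical, chosen only for illustration.

```python
import math

def make_extrinsic(yaw_deg, translation):
    """Build a 4x4 rigid transform: rotation about the z-axis plus a translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def transform_point(T, point):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    hom = (point[0], point[1], point[2], 1.0)
    return tuple(sum(T[r][c] * hom[c] for c in range(4)) for r in range(3))

# Hypothetical setup: the sensors are mounted 1.2 m apart vertically.
# The "bad" calibration carries a 1-degree yaw error.
point_lidar = (50.0, 0.0, 0.0)  # an object 50 m ahead of the LiDAR
T_good = make_extrinsic(0.0, (0.0, 0.0, -1.2))
T_bad = make_extrinsic(1.0, (0.0, 0.0, -1.2))

offset_m = math.dist(transform_point(T_good, point_lidar),
                     transform_point(T_bad, point_lidar))
print(f"Offset caused by a 1-degree yaw error at 50 m: {offset_m:.2f} m")
```

With these made-up values, a yaw error of a single degree moves the object by roughly 0.87 m at a range of 50 m, which is why calibration has to be settled before any labels are merged.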

The next step is time synchronization, because every camera frame and laser scan must be matched to the same moment in time. If the data is off by even a fraction of a second, the car will see an obstacle where it isn't actually located: at 20 m/s (72 km/h), a 50 ms offset shifts an object by a full meter. Only after full alignment in time and space does synchronized annotation begin, where specialists mark objects simultaneously on all types of data.
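One simple way to align streams in time is nearest-timestamp matching. The sketch below assumes each sensor delivers a sorted list of timestamps in seconds; the function name `match_nearest`, the sample rates, and the 20 ms tolerance are illustrative assumptions, not values from a specific system.

```python
from bisect import bisect_left

def match_nearest(lidar_ts, camera_ts, max_gap_s=0.02):
    """Pair each LiDAR timestamp with the closest camera timestamp.

    camera_ts must be sorted. Pairs further apart than max_gap_s are dropped.
    """
    pairs = []
    for t in lidar_ts:
        i = bisect_left(camera_ts, t)
        # The closest camera frame sits just before or just after position i.
        candidates = camera_ts[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda c: abs(c - t))
        if abs(best - t) <= max_gap_s:
            pairs.append((t, best))
    return pairs

camera_ts = [0.000, 0.033, 0.067, 0.100, 0.133]  # roughly 30 Hz camera
lidar_ts = [0.000, 0.100, 0.200]                 # 10 Hz LiDAR

pairs = match_nearest(lidar_ts, camera_ts)
print(pairs)  # the scan at 0.200 s has no camera frame within 20 ms and is dropped
```

Dropping unmatched scans instead of pairing them with a stale frame keeps badly aligned data out of the annotation queue.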

Merging 2D and 3D Spaces

The final stage involves correctly combining the flat image from the camera with the volumetric scan from the LiDAR. For this, a 2D-3D projection is used: each 3D point is mapped to the pixel where the camera sees it, so the photo can be overlaid onto a 3D model of the world. It’s like stretching a drawing over a 3D shape to make it look realistic.

This results in complete information for every object. You can find the exact height of a pedestrian, their speed, and the distance to them in 3D space. This level of accuracy allows the system to make correct decisions on the road and avoid errors that would occur if only one type of sensor were used.
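The 2D-3D projection described above can be sketched with the standard pinhole camera model. This example assumes the point is already expressed in the camera frame (x right, y down, z forward) and ignores lens distortion; the intrinsic values are hypothetical.

```python
def project_to_image(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel coordinates.

    Returns None for points behind the camera, which cannot appear in the image.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v)

# Hypothetical intrinsics for a 1920x1080 camera.
fx, fy, cx, cy = 1000.0, 1000.0, 960.0, 540.0

# A LiDAR return 20 m ahead, 2 m to the right, 0.5 m below the optical axis.
pixel = project_to_image((2.0, 0.5, 20.0), fx, fy, cx, cy)
print(pixel)  # (1060.0, 565.0)
```

Running the same function over every point in a scan yields the overlay that annotators see when a point cloud is drawn on top of a camera image.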

Features and Value of Labeling in Combined Systems

When data from different sensors are merged, the process of creating training data becomes more complex but also more valuable. It is important to understand which types of labels exist and how the quality of this work affects business and safety.

Types of Annotations in a Multi-Sensor Approach

The main advantage is that one annotation is automatically reflected across all sensor projections. This ensures data integrity and allows you to cover all characteristics of the object.

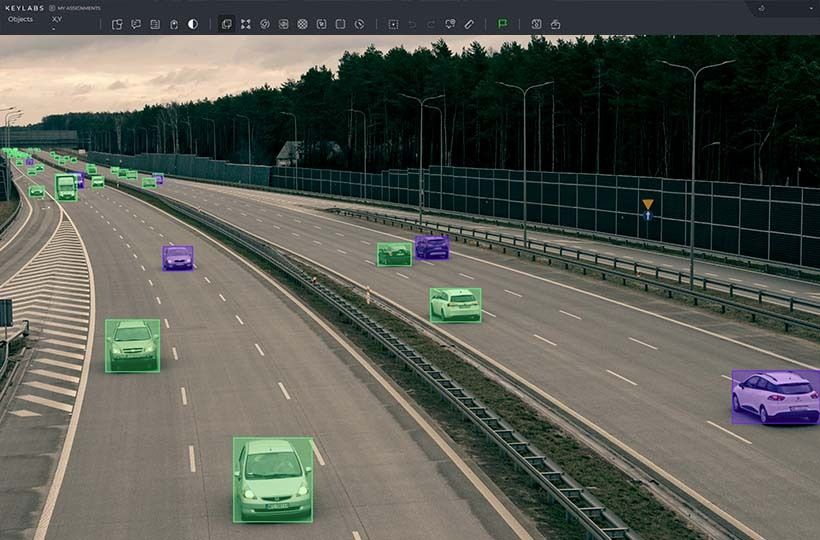

- 2D Bounding Boxes. Standard frames on flat photos from cameras. They help the system identify the type of object, such as distinguishing a car from a pedestrian.

- 3D Bounding Boxes. These look like transparent cuboids around objects in the LiDAR point cloud. They provide an understanding of volume and exact position in space.

- Point-level Labeling. Every single point of a laser scan gets its own class label, usually displayed as a color. This helps the computer understand exactly where the asphalt, grass, or building wall is.

- Track IDs. Every object is assigned a unique number. This allows the system to understand that the object in the video and in the point cloud is the same car moving along a specific path.
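The four annotation types above can live in one record per object, so that a single track ID links every projection. The following is only a sketch of such a structure; the class and field names are hypothetical and do not follow any particular dataset format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class Box3D:
    center: Tuple[float, float, float]  # x, y, z in meters
    size: Tuple[float, float, float]    # length, width, height in meters
    yaw: float                          # heading in radians

@dataclass
class ObjectAnnotation:
    track_id: int                       # same ID in every frame and every sensor
    category: str
    box3d: Box3D
    # One 2D box (u_min, v_min, u_max, v_max) per camera that sees the object.
    boxes2d: Dict[str, Tuple[float, float, float, float]] = field(default_factory=dict)

@dataclass
class FrameAnnotation:
    timestamp: float
    objects: List[ObjectAnnotation] = field(default_factory=list)
    point_labels: List[int] = field(default_factory=list)  # one class ID per LiDAR point

car = ObjectAnnotation(
    track_id=17,
    category="car",
    box3d=Box3D(center=(12.0, -1.5, 0.8), size=(4.5, 1.8, 1.5), yaw=0.05),
    boxes2d={"front_camera": (850.0, 400.0, 1010.0, 520.0)},
)
frame = FrameAnnotation(timestamp=0.1, objects=[car], point_labels=[0, 0, 2, 2, 1])
print(frame.objects[0].track_id, list(car.boxes2d))
```

Keeping the 2D boxes inside the same record as the 3D box means that an edit to one object cannot silently leave the other projections out of date.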

Impact of Multi-Sensor Labeling Quality on ML Models

The quality of labeling directly determines how smart the final AI model will be. Every stage of the algorithm's operation depends on the accuracy of the input data.

- Perception System. Correct labels allow the system to see objects clearly even in difficult conditions, such as during rain or at night.

- Object Tracking. High-quality labeling helps track targets without errors. This eliminates situations where the system loses sight of a car or confuses it with another.

- Action Prediction. Accurate data about past movement allows the system to predict where a cyclist or pedestrian is likely to move in the next second.

An annotation error of even a few centimeters can lead to an incorrect calculation of the braking distance or a dangerous maneuver. Therefore, high-quality data is of great value to the project.

Quality Control and Consequences of Labeling Errors

To create a reliable AI, it isn't enough to just mark objects. Every element must match reality to within a few centimeters.

Methods for Verifying Complex Labeling

Since data comes from multiple sources, the verification process is multi-layered. Experts use special algorithms and manual checks to confirm that information matches everywhere. During cross-projection checks, inspectors verify that the 3D cuboid aligns with the object in the 2D video.
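A cross-projection check can be partly automated by projecting the 3D box into the image and comparing it with the labeled 2D box. The sketch below assumes an axis-aligned box already expressed in the camera frame, a distortion-free pinhole camera, and a hypothetical review threshold of 0.7 IoU.

```python
from itertools import product

def box_corners(center, size):
    """Eight corners of an axis-aligned box given in the camera frame."""
    cx, cy, cz = center
    sx, sy, sz = size
    return [(cx + dx * sx / 2, cy + dy * sy / 2, cz + dz * sz / 2)
            for dx, dy, dz in product((-1, 1), repeat=3)]

def project(point, fx, fy, cx, cy):
    """Pinhole projection; assumes the point is in front of the camera (z > 0)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)

def enclosing_rect(pixels):
    """Smallest (u_min, v_min, u_max, v_max) rectangle containing all pixels."""
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    return (min(us), min(vs), max(us), max(vs))

def iou(a, b):
    """Intersection over union of two (u_min, v_min, u_max, v_max) rectangles."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

intrinsics = dict(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)  # hypothetical camera
corners = box_corners(center=(0.0, 0.0, 20.0), size=(2.0, 2.0, 4.0))
projected = enclosing_rect([project(p, **intrinsics) for p in corners])

labeled_ok = projected
labeled_shifted = (projected[0] + 40.0, projected[1], projected[2] + 40.0, projected[3])

MIN_IOU = 0.7  # hypothetical threshold below which a pair goes to manual review
for name, box in [("ok", labeled_ok), ("shifted", labeled_shifted)]:
    score = iou(projected, box)
    print(name, round(score, 2), "PASS" if score >= MIN_IOU else "REVIEW")
```

A check like this does not replace the inspector; it only narrows down which frames deserve a closer look.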

Movement logic control is also performed, where the system automatically looks for errors in Track IDs. For example, if a car is marked as a sedan in one frame and suddenly becomes a truck in the next, it is a clear annotator error. Special attention is paid to edge cases – difficult scenarios like a car entering a tunnel or moving through a heavy downpour.
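The sedan-to-truck example above translates into a simple automated check: group annotations by track ID and flag any frame where the category changes. The input format `(frame, track_id, category)` and the function name are assumptions made for this sketch.

```python
from collections import defaultdict

def find_class_flips(annotations):
    """Return (track_id, frame, previous_category, new_category) for every change.

    annotations: iterable of (frame, track_id, category) tuples in any order.
    """
    by_track = defaultdict(list)
    for frame, track_id, category in annotations:
        by_track[track_id].append((frame, category))

    flips = []
    for track_id, items in sorted(by_track.items()):
        items.sort()  # order each track by frame index
        for (_, prev_cat), (frame, cat) in zip(items, items[1:]):
            if cat != prev_cat:
                flips.append((track_id, frame, prev_cat, cat))
    return flips

annotations = [
    (0, 7, "sedan"), (1, 7, "sedan"), (2, 7, "truck"),
    (0, 9, "pedestrian"), (1, 9, "pedestrian"),
]
flips = find_class_flips(annotations)
print(flips)  # [(7, 2, 'sedan', 'truck')]
```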

How Annotation Errors Damage the System

Errors in labeling have a direct impact on the three main functions of a self-driving vehicle. Each such inaccuracy creates a chain reaction that can lead to incorrect vehicle behavior on the road.

Perception problems occur when an annotator incorrectly specifies an object's boundaries. In this case, the system may think the obstacle is smaller than it actually is. This creates a risk that the car will drive too close to another vehicle or even hit its side mirror.

Tracking failures appear when the same track ID number accidentally jumps from one car to another. Because of this, the algorithm loses the real object, and the system may suddenly "forget" about a vehicle it just saw in front of it.
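One way to catch a track ID that has jumped to a different car is to look for physically impossible motion between consecutive frames. This sketch assumes each track is a time-sorted list of `(timestamp_s, x_m, y_m)` positions; the 70 m/s speed limit is a hypothetical threshold.

```python
import math

def find_id_jumps(track, max_speed_mps=70.0):
    """Return timestamps at which the implied speed exceeds max_speed_mps."""
    jumps = []
    for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        speed = math.hypot(x1 - x0, y1 - y0) / dt
        if speed > max_speed_mps:
            jumps.append(t1)
    return jumps

# A car moving at 15 m/s whose ID lands on a neighboring vehicle at t = 0.3 s.
track = [
    (0.0, 10.0, 0.0),
    (0.1, 11.5, 0.0),
    (0.2, 13.0, 0.0),
    (0.3, 25.0, 3.5),
    (0.4, 26.5, 3.5),
]
jumps = find_id_jumps(track)
print(jumps)  # [0.3]
```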

Prediction errors happen due to uneven labeling of a pedestrian’s movement history. If the data has gaps, the model will not be able to understand the person’s intentions. Instead of expecting a calm road crossing, the system might recognize it as a sudden jump to the side and trigger emergency braking without a real need.
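Gaps in a movement history are easy to detect before the data reaches a prediction model. The sketch below assumes each track is represented by the list of frame indices in which the object was labeled; the function name is hypothetical.

```python
def find_gaps(frames, max_step=1):
    """Return (last_labeled, next_labeled) pairs where frames are missing in between."""
    ordered = sorted(set(frames))
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > max_step]

pedestrian_frames = [0, 1, 2, 5, 6, 9]
gaps = find_gaps(pedestrian_frames)
print(gaps)  # [(2, 5), (6, 9)]
```

Each reported pair marks a stretch that should be re-labeled or interpolated before the track is used for training.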

Business and Engineering Value of Accurate Data

High-quality labeling significantly saves company resources and increases trust in the product. It becomes the foundation for the entire project for several reasons:

- Savings on computing. Training large models is very expensive, so getting high-quality data right the first time avoids the cost of retraining the system.

- Speed to market. Companies with clean data pass safety checks faster because their system works stably, thanks to accurate 2D-3D projection and synchronized annotation.

- Safety and reputation. For the average user, quality labeling means smooth driving without sudden movements, which creates the image of a reliable technology.

FAQ

Is it possible for a system to operate using only cameras without relying on LiDAR?

Some companies try to use only cameras, but this requires much more complex algorithms to estimate depth. Many developers still consider LiDAR necessary for safety redundancy.

How do algorithms handle situations where sensor data contradict each other?

Methods of "weighted trust" are used. For example, in heavy rain, the system automatically gives higher priority to radar data, as cameras and LiDAR may provide noisy data.
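One common form of weighted trust is inverse-variance weighting, where each sensor's estimate counts in proportion to how certain it is. This is a sketch of that technique, not a description of any particular system's fusion logic; the distance estimates and variances below are invented to mimic heavy rain.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs with inverse-variance weights.

    Returns (fused_value, fused_variance). Variances must be positive.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total

# Hypothetical distance estimates (meters) to the same car in heavy rain.
camera = (32.0, 4.0)   # very noisy in rain
lidar = (30.5, 1.0)    # degraded by rain
radar = (30.0, 0.25)   # least affected

fused_value, fused_variance = fuse_estimates([camera, lidar, radar])
print(round(fused_value, 2), round(fused_variance, 2))
```

With these numbers the fused distance lands close to the radar reading, because the radar carries most of the weight when the other sensors are noisy.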

What are "ghost objects" in the context of labeling?

These are false sensor triggers, such as radar reflections from metallic surfaces. The task of the annotator and the filter system is to distinguish a real obstacle from such a "ghost."

How does automation affect the labeling process?

Modern systems use powerful neural networks for automatic pre-labeling, which a human inspector then verifies and corrects. This significantly speeds up the preparation of massive datasets.