Optimizing Batch Selection for Annotation: Techniques and Tips

Advanced strategies such as batch size optimization reduce redundant work while preserving sample diversity, a key challenge when managing covariance in large datasets.

Modern frameworks now integrate multi-parameter estimation to prioritize valuable samples, which reduces the number of experimental iterations in distributed computing environments. Our team combines active learning principles with computational modeling to address the trade-off between sampling uncertainty and representative coverage.

Quick Take

- Strategic annotation grouping reduces project costs and accelerates timelines.

- Active learning principles maximize information gain per annotation cycle.

- Sample covariance management prevents overprocessing of data.

- Hybrid approaches combine diversity with model uncertainty metrics.

- Distributed frameworks enable scalable implementation across teams.

Introduction to Batch Selection for Data Annotation

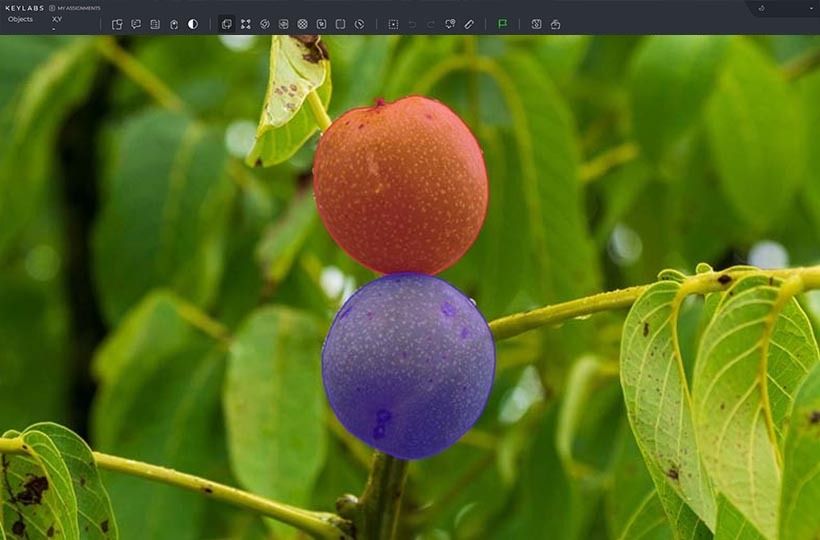

Batch selection is a data annotation strategy in which a whole group of samples is selected for labeling at once. This approach is helpful in active learning tasks with limited resources. Instead of selecting at random, the system uses specific criteria to determine which examples will most improve the quality of the AI model once they are annotated.

The main advantage of batch selection is that it optimizes the model training process and reduces the number of annotated samples required to achieve the desired accuracy. This is especially relevant for complex or specialized domains where annotation is expensive and requires expert intervention. The batch approach also adapts to different scenarios, from manual workflows to semi-automated pipelines involving AI agents.

Batch selection methods for optimized model training

There are several approaches to batch selection. The simplest is uniform selection, where examples are drawn uniformly at random from the dataset. This method is easy to implement but often inefficient, especially with large or imbalanced datasets.

Informative selection focuses on the examples for which the model is least confident in its predictions. This lets the model learn quickly from complex or edge cases, improving its performance.
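As an illustration, the following minimal Python sketch contrasts uniform random selection with a least-confidence version of informative selection. It assumes the model already outputs class probabilities for each unlabeled sample; the function names and probability values are hypothetical.

```python
import random

def least_confidence_batch(probs, batch_size):
    """Indices of the batch_size samples whose top-class probability is lowest."""
    # Confidence = probability the model assigns to its most likely class.
    confidence = [max(p) for p in probs]
    ranked = sorted(range(len(probs)), key=lambda i: confidence[i])
    return ranked[:batch_size]

def uniform_random_batch(n_samples, batch_size, seed=0):
    """Baseline: batch_size distinct indices drawn uniformly at random."""
    rng = random.Random(seed)
    return rng.sample(range(n_samples), batch_size)

# Hypothetical softmax outputs for five unlabeled samples (three classes).
probs = [
    [0.98, 0.01, 0.01],
    [0.40, 0.35, 0.25],
    [0.70, 0.20, 0.10],
    [0.34, 0.33, 0.33],
    [0.90, 0.05, 0.05],
]
print(least_confidence_batch(probs, 2))   # [3, 1]
print(uniform_random_batch(len(probs), 2))
```

Least confidence is only one way to quantify uncertainty; margin- and entropy-based scores are common alternatives.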

Cluster selection first groups the data into clusters and then selects representatives from each cluster to ensure diversity in the training set. This helps prevent the model from overfitting to a few dense regions of the data.
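A minimal sketch of cluster selection, assuming samples are already embedded as numeric feature vectors. It uses plain k-means (one common clustering choice, not one the text prescribes) and takes the member nearest each centroid as that cluster's representative; all names and data are illustrative.

```python
import math
import random

def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns (centroids, assignments)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: attach each point to its nearest centroid.
        assign = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assign

def cluster_representatives(points, k, seed=0):
    """From each cluster, pick the sample closest to the cluster centroid."""
    centroids, assign = kmeans(points, k, seed=seed)
    reps = []
    for c in range(k):
        members = [i for i, a in enumerate(assign) if a == c]
        if members:
            reps.append(min(members, key=lambda i: math.dist(points[i], centroids[c])))
    return sorted(reps)

# Two hypothetical, well-separated groups of 2-D embeddings.
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9), (4.8, 5.2)]
print(cluster_representatives(points, k=2))
```

Picking the point nearest the centroid favors typical members of each cluster; sampling several points per cluster is a simple extension when clusters are large.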

Adaptive methods combine several criteria, such as the complexity of an example, its uniqueness, or its potential to reduce the loss function.
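One way to combine criteria is a weighted score applied greedily, one batch slot at a time. The sketch below assumes a precomputed uncertainty score per sample (standing in for "complexity") and measures uniqueness as the distance to the nearest sample already labeled or picked; the weight `alpha`, the function names, and the data are hypothetical, and loss-reduction estimates are left out for brevity.

```python
import math

def uniqueness(i, features, chosen):
    """Distance from sample i to the nearest already-chosen sample (0.0 if none yet)."""
    if not chosen:
        return 0.0
    return min(math.dist(features[i], features[j]) for j in chosen)

def hybrid_batch(features, uncertainty, batch_size, alpha=0.5, labeled=()):
    """Greedily fill a batch using alpha * uncertainty + (1 - alpha) * uniqueness."""
    chosen = list(labeled)  # already-labeled samples count toward uniqueness
    candidates = [i for i in range(len(features)) if i not in chosen]
    batch = []
    for _ in range(min(batch_size, len(candidates))):
        best = max(
            candidates,
            key=lambda i: alpha * uncertainty[i]
            + (1 - alpha) * uniqueness(i, features, chosen),
        )
        batch.append(best)
        chosen.append(best)
        candidates.remove(best)
    return batch

features = [(0.0, 0.0), (0.1, 0.0), (3.0, 3.0), (3.1, 3.0)]
uncertainty = [0.9, 0.85, 0.5, 0.4]
print(hybrid_batch(features, uncertainty, batch_size=2))  # [0, 2]
```

With `alpha=1.0` the same call reduces to pure uncertainty ranking and returns `[0, 1]`, two nearly identical samples; the uniqueness term is what moves the second slot to the distant sample 2. In practice both terms should be normalized to comparable scales before weighting.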

In deep learning, batch selection is combined with active learning methods, which interactively select the next batch of data that will benefit the AI model the most. This is especially relevant for data annotation, where human resources are limited and labeling costs are high. These methods improve labeling efficiency by reducing redundant annotations and focusing on the most informative samples.

Methods for effective and diverse data selection

Specialized methods ensure that the system is trained on the most relevant examples, keeping the selected set both informative and diverse. Let's consider the main methods that help optimize this process.

1. Uncertainty Sampling. This method is based on the idea that the AI model learns most from the examples it is least confident about. Training on them increases accuracy in difficult cases and refines the decision boundaries between closely related categories.

2. Diversity Sampling. To avoid training on near-duplicate examples, this approach selects the most diverse samples from the set. This covers a wide range of variations in the data, which is important when data comes from heterogeneous sources.

3. Clustering-Based Selection. The data is pre-grouped by similarity, and representative examples are selected from each cluster. This maintains a balance in the representation of all data subtypes and reduces the bias of the AI model.

4. Core-Set Selection. This method finds a subset of the data whose learning dynamics are similar to those of the complete set. Core-set algorithms reduce the size of the required sample without sacrificing training effectiveness.

5. Active Learning with Budget Constraints. This approach combines informativeness and diversity under a fixed budget. The AI model dynamically evaluates each sample's potential contribution to improving performance and selects the samples with the best value-to-cost ratio.

6. Entropy-Based Selection. Entropy is used as a measure of uncertainty in the model's predictions. Examples with high entropy signal ambiguous situations where the AI model has no clear favorite among the classes. This makes training sensitive to complex or rare patterns.

7. Representativeness Scoring. Assessing the representativeness of the data allows you to consider how well an individual sample corresponds to the structure of the overall dataset. This is important for tasks where individual classes or contexts may be underrepresented.
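Items 4 and 6 can be sketched in a few lines of Python. The example assumes class probabilities and feature vectors are already computed; it scores uncertainty with Shannon entropy and approximates core-set selection with the greedy k-center heuristic, one widely used approach rather than the only one. Function names and data are illustrative.

```python
import math

def entropy(p):
    """Shannon entropy (natural log) of one predicted class distribution."""
    return -sum(q * math.log(q) for q in p if q > 0)

def top_entropy(probs, batch_size):
    """Indices of the batch_size predictions with the highest entropy."""
    ranked = sorted(range(len(probs)), key=lambda i: entropy(probs[i]), reverse=True)
    return ranked[:batch_size]

def k_center_greedy(features, batch_size, labeled=()):
    """Greedy k-center core-set: repeatedly add the point farthest from every selected point."""
    # min_dist[i] = distance from point i to its nearest labeled or selected point
    min_dist = [
        min((math.dist(f, features[j]) for j in labeled), default=math.inf)
        for f in features
    ]
    batch = []
    for _ in range(min(batch_size, len(features) - len(labeled))):
        far = max(range(len(features)), key=min_dist.__getitem__)
        batch.append(far)
        for i, f in enumerate(features):
            min_dist[i] = min(min_dist[i], math.dist(f, features[far]))
    return batch

probs = [[0.5, 0.5, 0.0], [0.9, 0.05, 0.05], [0.4, 0.3, 0.3]]
print(top_entropy(probs, 1))  # [2]

points = [(0.0,), (1.0,), (2.0,), (10.0,), (11.0,)]
print(k_center_greedy(points, 2, labeled=[0]))  # [4, 2]
```

Entropy ranks samples independently, so a batch may still contain near-duplicates; the k-center step addresses exactly that by spreading selections across the feature space.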

Combined or adapted to the task at hand, these methods produce a training set that lowers processing and annotation costs and improves the overall quality of AI models.
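The budget-constrained variant (item 5 in the list above) can be approximated with a greedy value-per-cost rule. In this sketch the per-sample "value" (expected contribution) and "cost" (annotation effort) are assumed to be estimated elsewhere; the numbers and the function name are hypothetical.

```python
def budget_batch(values, costs, budget):
    """Greedy selection by value-to-cost ratio until the annotation budget is spent."""
    order = sorted(range(len(values)), key=lambda i: values[i] / costs[i], reverse=True)
    batch, spent = [], 0.0
    for i in order:
        # Skip any sample that would push spending over the budget.
        if spent + costs[i] <= budget:
            batch.append(i)
            spent += costs[i]
    return batch, spent

values = [0.9, 0.6, 0.5, 0.2]   # hypothetical expected contribution per sample
costs = [3.0, 1.0, 1.0, 0.5]    # hypothetical annotation cost per sample
print(budget_batch(values, costs, budget=2.5))  # ([1, 2, 3], 2.5)
```

This is the standard greedy heuristic for a knapsack-style problem, so it is not guaranteed to be optimal; sample 0 has the highest raw value but is skipped because it alone exceeds the budget.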

Using in silico models to improve decision-making

In silico modeling is the computer simulation of biological, chemical, or physiological processes.

Predicting absorption, distribution, metabolism, excretion, and toxicity (ADMET) properties is a foundation of drug development. For this, teams use machine learning models trained on chemical databases containing millions of structured data points. These systems:

- Identify bioavailability patterns across molecular structures.

- Flag potential toxicity risks using similarity scoring.

- Optimize lead compounds using iterative simulations.
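Similarity scoring for toxicity screening is often based on the Tanimoto coefficient between binary molecular fingerprints. The sketch below assumes fingerprints are already available as sets of "on" bit indices (in practice a cheminformatics toolkit would generate them); the compound names, bit sets, and the 0.7 threshold are hypothetical.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity of two binary fingerprints given as sets of on-bits."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def flag_toxicity(candidates, known_toxic, threshold=0.7):
    """Map each candidate that resembles a known toxic compound to its best similarity."""
    flagged = {}
    for name, fp in candidates.items():
        best = max(tanimoto(fp, tox) for tox in known_toxic.values())
        if best >= threshold:
            flagged[name] = round(best, 2)
    return flagged

known_toxic = {"tox_A": {1, 4, 7, 9, 12}}
candidates = {"cand_1": {1, 4, 7, 9, 12, 15}, "cand_2": {2, 3, 5, 8}}
print(flag_toxicity(candidates, known_toxic))  # {'cand_1': 0.83}
```

A high score only means structural resemblance to a known toxic compound, so flagged candidates are prioritized for closer review rather than rejected outright.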

Modern frameworks can model complex interactions within these datasets. Graph neural networks, for instance, can represent 3D molecular configurations while remaining computationally efficient, which matters when screening more than 100,000 compounds.

Improved model accuracy directly impacts decision quality. Teams achieve faster optimization cycles by combining predictive information with experimental validation.

Monitoring Model Performance and Convergence of Root Mean Square Error

Root mean square error (RMSE) is the square root of the mean squared difference between a model's predictions and the actual values. Because it is expressed in the units of the target variable, it gives an interpretable estimate of how well the model describes the data.
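For n samples with actual values y_i and predictions ŷ_i, RMSE = sqrt((1/n) Σ (y_i − ŷ_i)²). A minimal Python version of that formula (the function name is illustrative):

```python
import math

def rmse(y_true, y_pred):
    """Root mean square error between actual values and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))

# Errors are 1, 0 and -2, so RMSE = sqrt((1 + 0 + 4) / 3) ≈ 1.291.
print(rmse([3.0, 5.0, 2.0], [2.0, 5.0, 4.0]))
```

Because errors are squared before averaging, a single large miss (the -2 here) raises RMSE more than several small ones would.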

During training, RMSE is monitored at each epoch for both the training and validation sets. A decrease in RMSE with each epoch indicates that the quality of predictions is improving and the model is getting closer to the optimal solution. RMSE convergence means that the metric is stabilizing and the model is reaching a state where further training does not yield significant improvements. This can be a signal to end training or change hyperparameters.

Monitoring RMSE helps detect overfitting and underfitting. Training RMSE is usually somewhat lower than validation RMSE; if it is substantially lower, or if validation RMSE starts rising while training RMSE keeps falling, the model is overfitting. If both values remain high, the model is underfitting: it may lack sufficient capacity or need a different architecture.

From a technical perspective, quality monitoring involves plotting RMSE curves, using callback functions, and saving a checkpoint whenever validation RMSE reaches a new minimum.
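The monitoring logic described above can be sketched framework-agnostically: checkpoint on each new validation minimum, stop once RMSE has stopped improving for a few epochs, and check the train/validation gap. The `patience`, `min_delta`, target, and gap thresholds are hypothetical choices, the RMSE histories are made-up numbers, and "saving a checkpoint" is simulated by recording the epoch index.

```python
def monitor(val_rmse, patience=3, min_delta=1e-3):
    """Return (best_epoch, stopped_epoch, checkpoint_epochs) for a validation RMSE history."""
    best, best_epoch, waited = float("inf"), -1, 0
    checkpoints = []
    for epoch, va in enumerate(val_rmse):
        if va < best - min_delta:       # meaningful improvement: new minimum
            best, best_epoch, waited = va, epoch, 0
            checkpoints.append(epoch)   # stand-in for saving model weights
        else:
            waited += 1
            if waited >= patience:      # RMSE has plateaued or is rising
                return best_epoch, epoch, checkpoints
    return best_epoch, len(val_rmse) - 1, checkpoints

def fit_diagnosis(train, val, target_rmse, gap_tolerance=0.2):
    """Rough heuristic with hypothetical thresholds; tune them per task."""
    if train > target_rmse and val > target_rmse:
        return "underfitting"           # both errors stay high
    if val - train > gap_tolerance * train:
        return "overfitting"            # validation error far above training error
    return "ok"

train_hist = [1.0, 0.7, 0.5, 0.4, 0.35, 0.3, 0.28]
val_hist = [1.1, 0.8, 0.6, 0.58, 0.59, 0.6, 0.62]
best_epoch, stopped, ckpts = monitor(val_hist)
print(best_epoch, stopped, ckpts)  # 3 6 [0, 1, 2, 3]
print(fit_diagnosis(train_hist[best_epoch], val_hist[best_epoch], target_rmse=0.5))  # overfitting
```

In a real training loop, the same checks would typically run inside an epoch-end callback, with the best checkpoint restored after stopping.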

Integration of experimental and active learning results

This approach combines empirical observations with adaptive data selection strategies to maximize training efficiency with as few iterations as possible.

In practice, two streams of information interact. The first consists of results from real experiments or tests, which provide accurate but limited data that is expensive to acquire. The second comes from active learning, where the model itself determines which data samples are most informative for its improvement and requests annotation only for those. This focuses human effort on the most important samples and reduces the overall cost of labeling.

The two streams are combined through prioritization and re-evaluation systems, in which experimental results serve as a validation basis for adjusting the active learning selection criteria.

As a result, AI models reach high accuracy quickly and generalize better to new conditions. They handle data heterogeneity better and are less sensitive to noise because they rest on a strong empirical basis supported by active adaptation.

This approach is actively used in biomedicine, agrotechnology, industrial visual inspection, and autonomous systems.

FAQ

How does annotation quality affect AI model results?

Annotation quality determines the accuracy and reliability of AI models; inaccurate or inconsistent annotations lead to incorrect predictions.

What distinguishes active learning from random sampling?

In active learning, the AI model selects the most informative samples for annotation, whereas random sampling draws samples without regard to how much they would help the model.

Why integrate ADMET models into molecule optimization?

Integrating ADMET models allows you to quickly assess the pharmacokinetic properties and safety of molecules at the design stage. This reduces the risk of failure in later stages of development and shortens the drug's time to market.

What metrics indicate successful RMSE convergence?

Successful convergence shows as validation RMSE that decreases and then levels off without significant fluctuations. The gap between training and validation RMSE should also stay small, which indicates that the AI model generalizes well.