Sample Selection Strategies for Efficient Data Labeling

In modern AI development, there is a prevailing myth that simply collecting as much data as possible is enough to create a high-quality model. However, in practice, randomly labeling all available information almost always proves to be an inefficient strategy. When thousands of identical images or repetitive text fragments land on the annotators' desks, the company wastes huge budgets on labeling "informational noise" that brings no new value to the algorithm.

If a model already confidently recognizes an object in typical conditions, the hundredth or thousandth similar copy of that sample practically does not improve its accuracy. As a result, the process becomes too slow, and the final model becomes overloaded with unnecessary repetitions, which can even lead to a decline in its ability to generalize knowledge.

True training speed and final accuracy depend directly on the quality of the selection. Strategic sample selection allows for the isolation of exactly those cases that are on the edge of the model's understanding or represent rare scenarios. Thus, sample selection transforms from a technical stage into a key tool for managing the efficiency of the entire AI development lifecycle.

Quick Take

- Smart sample selection reduces labeling costs without losing model accuracy.

- The model itself points out its "weak spots", directing annotators' attention to the most valuable data.

- It is necessary to combine complex cases with typical examples to avoid bias in the model's logic.

- Selection strategies must evolve as the project grows, moving from manual control to full automation.

Active Learning as a Practical Tool

Integrating active learning into data preparation workflows has been a real breakthrough in the development of intelligent systems. It changes the paradigm of human-machine interaction, turning labeling from passive accumulation into a dialogue.

Evolution from Passive to Active Labeling

In the classical approach to labeling, the human acts as a teacher who methodically explains every single example to the model in order. This is like reading a textbook from the first to the last page without considering which topics the student has already mastered and which they haven't. Active learning changes this scheme, turning the model into an active participant. Now, the algorithm itself analyzes the unlabeled data array and points the specialist toward samples that are the most informative or difficult for it.

This evolution makes the entire process far more targeted. The model no longer waits to be fed data; it "asks" for exactly the information it lacks to get past its current accuracy limit. Training becomes purposeful: instead of spending months labeling obvious and repetitive cases, the team focuses on the nuances that actually affect final quality.

The main advantage is the ability to sharply reduce annotation volume without losing quality. Because the system selects only the most useful examples, developers can often reach target accuracy by labeling only a fraction of the available data. This also avoids oversaturating the model with identical examples.

Balance Between Complexity and Data Value

During selection, it is important to remember that not every complex sample is equally useful. There is a risk of getting stuck searching only for the hardest examples, which can lead to unexpected problems in system behavior.

True dataset quality is built on a balance of three types of samples:

- Complex samples. They help the model clearly distinguish between similar objects or operate in harsh conditions.

- Typical examples. They serve as the "foundation", ensuring the model doesn't forget what the world looks like in standard situations.

- Rare cases. They teach the system to react to non-trivial scenarios that happen rarely but carry a high cost of error.

Over-biasing toward only complex samples can make the model too "suspicious." For example, the system might start seeing anomalies where there are none or lose accuracy on ordinary, everyday data.
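The balance described above can be sketched as a simple budget split. This is a minimal illustration, not a prescribed recipe: the function name `build_batch`, the share values, and the idea that rare samples arrive as a pre-flagged list of ids are all assumptions made for the example.

```python
import random

def build_batch(uncertainty, rare_ids, budget, hard_share=0.5, rare_share=0.2, seed=0):
    """Mix complex, rare, and typical samples into one labeling batch.

    uncertainty: dict of sample_id -> score (higher means harder for the model)
    rare_ids:    ids flagged as rare by some external rule (hypothetical input)
    The shares are illustrative defaults, not recommended values.
    """
    rng = random.Random(seed)
    n_hard = round(budget * hard_share)
    n_rare = round(budget * rare_share)

    # 1. Complex samples: the highest uncertainty scores.
    by_score = sorted(uncertainty, key=uncertainty.get, reverse=True)
    batch = by_score[:n_hard]
    taken = set(batch)

    # 2. Rare cases that were not already picked as complex.
    rare_pool = [i for i in rare_ids if i in uncertainty and i not in taken]
    rare_pick = rng.sample(rare_pool, min(n_rare, len(rare_pool)))
    batch += rare_pick
    taken.update(rare_pick)

    # 3. Typical examples: a random draw from everything that is left.
    rest = [i for i in uncertainty if i not in taken]
    batch += rng.sample(rest, min(budget - len(batch), len(rest)))
    return batch

# Toy pool: 100 samples, ids ending in 9 are the hardest.
uncertainty = {i: (i % 10) / 10 for i in range(100)}
rare_ids = [3, 14, 15, 92]
batch = build_batch(uncertainty, rare_ids, budget=20)
```

The random fill in step 3 is what keeps ordinary data in the mix; shrinking it toward zero reproduces the "suspicious" model described above.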

Impact of Context and Sample Selection Logic

Data preparation efficiency depends on understanding exactly what we are trying to explain to the algorithm. Each type of information has its own specifics, so the approach to sample selection must be adapted to the specific structure and purpose of the project.

Impact of Data Types on Selection Strategy

There is no universal way to select data, as working with text is fundamentally different from processing video or sensor metrics. Each type of media dictates its own criteria for the value of information:

- Text data. Focus on rare linguistic structures, specific terminology, and complex semantic connections.

- Video streams. Searching for key changes between frames, the appearance of new objects in the scene, or changes in lighting to avoid duplication.

- Time series. Capturing anomalies, sharp value drops, and cyclical patterns in the digit stream.

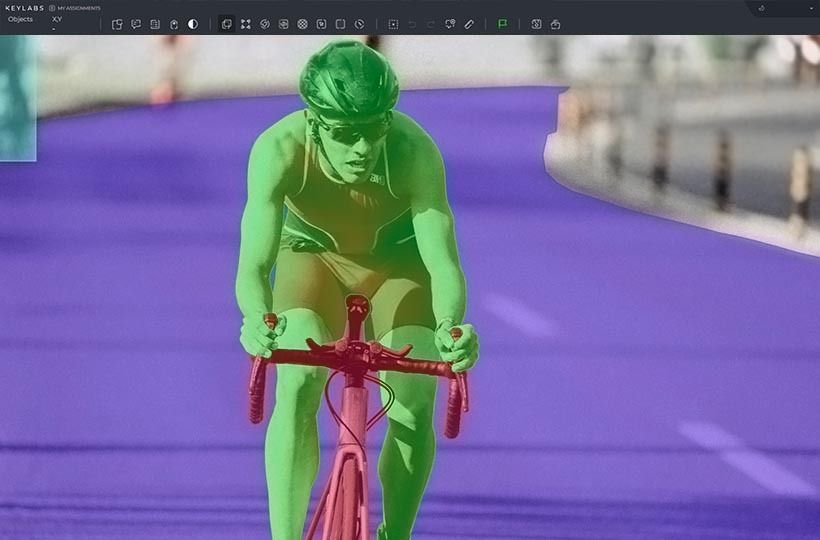

- Images. Ensuring maximum visual diversity, including different angles and backgrounds.

The context of the task always dictates the rules. While face recognition needs visual variety, medical diagnosis prioritizes the smallest deviations from the norm. This is why specialists analyze the nature of the data before starting work to choose the appropriate method.

Main Approaches to Sample Selection

The most popular method in modern development is uncertainty sampling. The logic is simple: we ask the model to check new data and return the examples it is most unsure about. This model-guided labeling is based on several principles:

- Searching for the boundary. Selecting samples where the model wavers between the two most likely answers.

- Entropy analysis. Identifying examples that cause the most "chaos" or confusion for the algorithm.

- Minimizing the obvious. Ignoring samples that the model has already learned to recognize with high accuracy.
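The three principles above correspond to the margin, entropy, and least-confidence scores commonly used in uncertainty sampling. The sketch below assumes the model exposes a list of class probabilities per sample; the function names and the toy `pool_probs` values are illustrative.

```python
import math

def least_confidence(probs):
    """1 minus the top class probability: near 0 for samples the model finds obvious."""
    return 1.0 - max(probs)

def margin(probs):
    """Gap between the two most likely classes: a small gap means the sample sits near the boundary."""
    top_two = sorted(probs, reverse=True)[:2]
    return top_two[0] - top_two[1]

def entropy(probs):
    """Shannon entropy in nats: larger when probability is spread over many classes."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_most_uncertain(pool_probs, k):
    """Return the indices of the k samples with the highest entropy."""
    ranked = sorted(range(len(pool_probs)),
                    key=lambda i: entropy(pool_probs[i]),
                    reverse=True)
    return ranked[:k]

pool_probs = [
    [0.98, 0.01, 0.01],  # confident prediction
    [0.40, 0.35, 0.25],  # confusion across all classes
    [0.50, 0.49, 0.01],  # wavering between two answers
]
queried = select_most_uncertain(pool_probs, k=2)
```

Here the entropy ranking skips the first sample and sends the other two to annotators. Ranking by `margin` instead would put the third sample first, which is why the choice of score matters.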

Often, these approaches are combined into a single query strategy, balancing between looking for confusing examples and covering new categories. This iterative annotation, where labeling and training happen in cycles, allows the model to gradually become smarter by receiving exactly the portion of knowledge it lacks at that moment.

Strategic Management of Selection

Even the most advanced selection algorithms can fail if the human factor and project dynamics are not taken into account. Understanding common pitfalls and strategic planning helps turn theoretical methods into a reliable production process.

Typical Errors in Sample Selection

In practice, teams often face problems that negate the benefits of active learning. The most common error is excessive trust in the model during the early stages. When an algorithm lacks basic knowledge, its assessment of its own uncertainty can be wrong, leading to the selection of irrelevant or noisy data. Other common pitfalls include:

- Ignoring edge cases. By focusing only on what the model suggests, it’s easy to miss unique scenarios that haven't entered the algorithm's field of view at all.

- Cyclical bias. If the model keeps choosing similar complex samples, it may overfit to them and lose accuracy on the base data.

- Lack of a control group. Abandoning random sampling for testing prevents an objective evaluation of real-world accuracy progress.

A practical approach requires regular intervention by experts to check whether the sampling has become too narrow or specific.
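One way to keep a control group is to set aside a random slice of the data before any model-guided selection starts. The sketch below is an assumption about how that might look; `split_control_group` and the 10% fraction are illustrative choices.

```python
import random

def split_control_group(sample_ids, control_fraction=0.1, seed=42):
    """Reserve a random control group before active selection touches the pool.

    The control group is labeled like any other data but is never chosen
    by the model, so it reflects the real data distribution.
    """
    rng = random.Random(seed)
    ids = list(sample_ids)
    rng.shuffle(ids)
    n_control = max(1, round(len(ids) * control_fraction))
    return ids[:n_control], ids[n_control:]

control, pool = split_control_group(range(1000))
```

Accuracy measured on `control` after each cycle gives a progress number that is not skewed toward the hard cases the model picked for itself.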

Scaling Strategies

Approaches that work perfectly for a prototype often become inefficient when scaling to production. At the pilot stage, one can afford deep analysis of every sample, but when data volumes reach millions, the strategy must change.

At large scales, automating the query strategy becomes critical. Instead of just suggesting samples, the system must independently filter duplicates before they even reach the uncertainty assessment. The frequency of iterative annotation cycles also changes: instead of constant retraining after every hundred samples, companies move to batch processing to optimize server and annotator workloads.
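A rough sketch of the two ideas above, duplicate filtering before scoring and batch processing, might look like this for text data. It only catches exact duplicates after simple normalization; near-duplicate detection (for example, via embeddings) is outside this example. The helper names and sample strings are hypothetical.

```python
import hashlib

def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return " ".join(text.lower().split())

def drop_exact_duplicates(texts):
    """Keep the first occurrence of each normalized text, preserving order."""
    seen = set()
    unique = []
    for text in texts:
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(text)
    return unique

def batches(items, batch_size):
    """Yield fixed-size batches; the last one may be shorter."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

raw = ["Order shipped", "order   shipped", "Refund requested",
       "Order shipped", "Item damaged"]
unique = drop_exact_duplicates(raw)          # runs before any uncertainty scoring
queue = list(batches(unique, batch_size=2))  # one retraining cycle per batch
```

Filtering first means the more expensive model inference runs only on samples that can actually add information.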

Future Trends in Data Selection

The focus is shifting from the era of big data to the era of quality data. The main trend is using self-supervised learning and LLMs to automatically assess information value.

Modern systems are starting to use "judge models" that analyze data without human intervention to estimate how much a new sample will enrich the main system's knowledge. Automated selection can produce datasets that are far smaller in volume yet more effective per labeled sample. This paves the way for personalized AI models that can learn on the fly, choosing only the most important experiences from an endless stream of input.

FAQ

How can choosing samples that are too complex harm the stability of the model?

An excessive concentration on anomalies or "hard" examples forces the model to focus on noise, which leads to a loss of accuracy in basic, everyday scenarios. This creates an overfitting effect on rare cases, causing the algorithm to start seeing errors where there are none. An optimal dataset must always maintain a balance between edge cases and typical representative data.

Does changing the model architecture affect previously selected samples?

Yes. If you decide to change the neural network itself, its "zones of uncertainty" will be different. However, data selected by the previous model usually remains useful: many of those samples are hard because of the data itself (ambiguous, rare, or close to a class boundary), not only because of a specific architecture.

How exactly does using multiple models improve the objectivity of selection?

Instead of relying on the opinion of a single algorithm, the system uses a group of models that "vote" on the complexity of each sample. If models with different architectures cannot reach an agreement on the result, such a sample is considered the most informative for training. This helps filter out the subjective errors of a specific neural network and find truly complex patterns.
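The voting idea can be illustrated with vote entropy, one common disagreement measure for a committee of models. In this sketch the committee's predictions are given as plain label lists; the sample ids and labels are made up for the example.

```python
import math
from collections import Counter

def vote_entropy(votes):
    """Entropy of the committee's label votes: 0 when all models agree."""
    total = len(votes)
    return -sum((count / total) * math.log(count / total)
                for count in Counter(votes).values())

# Each list holds one predicted label per committee member.
committee_votes = {
    "img_001": ["cat", "cat", "cat"],  # full agreement
    "img_002": ["cat", "dog", "cat"],  # one dissenting model
    "img_003": ["cat", "dog", "fox"],  # no agreement at all
}
ranked = sorted(committee_votes,
                key=lambda sample: vote_entropy(committee_votes[sample]),
                reverse=True)
```

Samples at the front of `ranked` are the ones the committee disagrees on most, so they go to annotators first.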

What role does the confidence threshold play in automating real-time selection?

The confidence threshold defines the limit at which data is either automatically accepted by the model or sent to a human for verification. Setting the threshold too high will lead to high costs for annotators, while setting it too low will lead to the accumulation of errors in the knowledge base.
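A minimal routing sketch, assuming each prediction arrives as a `(sample_id, label, confidence)` tuple. The function name `route` and the threshold values are illustrative.

```python
def route(predictions, threshold=0.9):
    """Split predictions into auto-accepted labels and items for human review."""
    auto_accepted, needs_review = [], []
    for sample_id, label, confidence in predictions:
        if confidence >= threshold:
            auto_accepted.append((sample_id, label))
        else:
            needs_review.append(sample_id)
    return auto_accepted, needs_review

predictions = [("a", "cat", 0.99), ("b", "dog", 0.62), ("c", "cat", 0.91)]

accepted, review = route(predictions, threshold=0.90)
strict_accepted, strict_review = route(predictions, threshold=0.95)
```

Raising the threshold from 0.90 to 0.95 moves sample `c` from the accepted list to the review queue, which is the cost-versus-error trade-off described above.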

Does sample selection work for regression tasks?

Yes. Instead of confidence in a class, we use variance. If different versions of the model give very different predictions for the same input, that sample is an ideal candidate for manual review.
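As a small illustration, the variance can be computed across the predictions of several model versions for each sample. The ensemble outputs below are invented numbers, and `disagreement` is a hypothetical helper name.

```python
from statistics import pvariance

def disagreement(predictions_per_model):
    """Variance of the predicted value across models, one score per sample."""
    per_sample = zip(*predictions_per_model)  # regroup by sample
    return [pvariance(values) for values in per_sample]

# Rows are model versions, columns are samples (toy numbers).
ensemble_predictions = [
    [10.0, 52.0, 7.1],  # model A
    [10.2, 40.0, 7.0],  # model B
    [9.9, 61.0, 7.2],   # model C
]
scores = disagreement(ensemble_predictions)
most_uncertain = max(range(len(scores)), key=scores.__getitem__)
```

The second sample, where the three versions disagree by more than 20 units, gets the highest score and would be sent for manual review.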