Semantic Segmentation for 3D Point Clouds

It is a rapidly growing field of computer vision and 3D data analysis focused on assigning a meaningful label to each point in a 3D scene. Unlike traditional object detection or classification, semantic segmentation provides a detailed understanding of the spatial environment, enabling machines to distinguish between roads, vehicles, pedestrians, buildings, vegetation, and other elements at the point level. Semantic segmentation goes beyond traditional point cloud classification, providing a more detailed per-point understanding that enables accurate scene interpretation.

With the increasing popularity of autonomous vehicles, robotics, smart cities, and AR/VR applications, accurate segmentation of point clouds has become essential for scene understanding, navigation, and decision-making.

Quick Take

- Transforms raw point cloud data into structured maps that support autonomy and workflows.

- Models and methods range from classical clustering to extended neural architectures.

- Clear labels, masks, and consistent terminology speed annotation and reduce rework.

- Practical data and feature planning help produce production-grade results.

What is semantic segmentation, and why is it important

Semantic segmentation of point clouds is a method of processing 3D data, in which each point in space is assigned a semantic class that describes its meaning in the scene. The point cloud is formed from data collected by LiDAR sensors, 3D scanners, drones, or photogrammetry. It consists of millions of points with X, Y, and Z coordinates, as well as additional attributes.

Unlike classic object recognition, semantic segmentation operates at the level of each point, creating a comprehensive semantic representation of the environment without dividing it into separate instances. This enables artificial intelligence systems to comprehend the spatial structure and the role of each element within the scene. This process is implemented using deep models that work with irregular 3D data or their voxelized representations, enabling the real-time analysis of complex, dynamic environments.

Why semantic segmentation of point clouds is essential today

- Ensures safe navigation and decision-making in autonomous transport thanks to an accurate understanding of the 3D scene. Supports specialized tasks such as road surface detection, improving vehicle navigation and safety.

- Allows robots and drones to navigate complex spaces and interact correctly with objects.

- The basis for creating digital twins of cities and developing innovative city solutions.

- Reduces manual processing of LiDAR data in construction, surveying, and infrastructure projects.

- Enhances the realism and accuracy of AR/VR scenes that require an understanding of the physical environment.

Types of point cloud segmentation

Depending on the level of detail and the tasks set, different types of segmentation are used, each of which solves its part of the scene understanding problem.

Basic algorithms and methods for 3D point cloud segmentation

3D point cloud segmentation combines geometric, statistical, and deep spatial data analysis methods. For this purpose, specialized algorithms are used that enable the highlighting of objects, classes, and boundaries in three-dimensional space.

Basic methods and algorithms for 3D point cloud segmentation

- Clustering methods (DBSCAN, Euclidean Clustering) group points by spatial proximity and density without prior knowledge of the shape of objects.

- Regional Growth combines neighboring points with similar geometric characteristics, such as normals or surface curvature.

- Model-oriented methods (RANSAC, Hough Transform) find planes, cylinders, and spheres in a point cloud.

- Voxelization and 3D CNN transform the point cloud into a regular 3D grid for the application of convolutional neural networks.

- Point-based neural networks (PointNet, PointNet++) work with unstructured points and store spatial information.

- Graph Neural Networks (GNN) model a point cloud as a graph, taking into account the connections between neighboring points.

- Projection-based methods project 3D data into 2D space (BEV, range image) for use with classical segmentation methods.

- Multimodal approaches combine LiDAR, camera, and radar data to improve segmentation accuracy.

- Hybrid algorithms combine classical geometric methods with deep learning to strike a balance between accuracy and performance.

Training data, datasets, and benchmarks for point cloud segmentation

Training data and datasets are used to train machine learning models. The quality of this data affects the accuracy and reliability of the algorithms, as point clouds are characterized by irregularity, varying densities, and noise. These noises arise during sensor scanning. Training data should include a variety of scenes, objects, and lighting conditions, and be thoroughly annotated at the point or object level for different types of segmentation.

Evaluating the performance of segmentation models

Tools and Platforms for Annotating and Managing Point Cloud Data

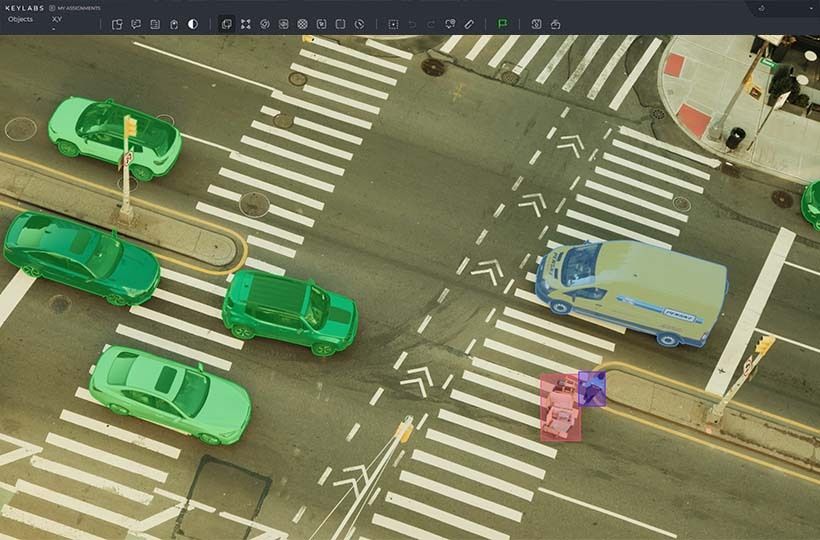

To generate high-quality training data for segmentation and other 3D computer vision tasks, specialized tools are required to annotate, organize, and manage large datasets. Such platforms combine interactive UI tools, machine learning algorithms for accelerated annotation, and project management tools to reduce manual labor.

A key requirement for working with point clouds is support for 3D VCs, including tools for visualization, 3D object construction, and the ability to adapt the process to specific tasks. Such software should be optimized for large amounts of LiDAR data, incorporate quality control mechanisms, and integrate flexibly into the machine learning pipeline.

Keylabs is a data annotation platform for 2D/3D data annotation with support for:

- comprehensive annotation for LiDAR and 3D data, including 3D point cloud tools;

- ML-assisted annotation to optimize and automate routine annotation tasks, reducing data preparation time;

- convenient data management, collaboration, and progress review tools that allow teams to organize projects and maintain quality standards;

- a wide range of annotation types (bounding box, cuboid, segmentation, etc.) and the ability to adapt to specific customer tasks.

An example of the practical application of these technologies is a case involving an automotive company, where our team performed comprehensive annotation of 3D LiDAR data for road markings and scene structures in autonomous driving applications. As part of the project, we built continuous polylines for road signs and edges, optimized the processing of aggregated 3D scenes, and developed a converter for verifying and validating data in the final format. This ensured high accuracy, scalability, and structural consistency of the annotation, which is important for training autopilot systems.

Current Challenges and Solutions

Segmentation and annotation of 3D point clouds face numerous technical and organizational challenges, as well as the need for accurate labeling. Combined solutions are used to achieve automation and high data quality.

FAQ

What is semantic segmentation for 3D point clouds, and why is it important?

Semantic segmentation of 3D point clouds is the process of assigning each point to a specific semantic class. This process involves multi-class labeling, assigning each point to a specific category such as road, vehicle, pedestrian, or vegetation, enabling AI systems to fully understand the environment.

What is the difference between point-based labeling and instance segmentation?

Point-based labeling assigns a class to a point without distinguishing between objects of the same type, while instance segmentation separates each specific object individually.

Which classical algorithms are still crucial for segmentation?

Classical algorithms, such as RANSAC, DBSCAN, Region Growing, and the Hough Transform, are crucial for point cloud segmentation due to their robustness in extracting geometric primitives and clusters, even in the presence of noisy data.

Which deep learning families are most commonly used for point data?

Point-based networks (PointNet, PointNet++), voxel-based 3D CNN, graph neural networks (GNN), and projection-based CNN families are used to work with point data.

How should teams collect domain-specific scans and labels?

Teams should collect domain-specific scans and labels through purposeful planning of acquisition scenarios, accurate annotation of data, and the use of application-specific class taxonomy standards.