Aligning predictions with reference data

In data science prediction-ground truth alignment is critical for validating and improving AI model performance against actual outcomes. Reference data, the actual data, serves as the example against which predictive AI models are evaluated.

Next, we will examine the relationship between predictions and reference data in data analysis. We will see how annotation analysis improves the performance of an AI model. And how accurate predictions affect the success of AI in various fields.

Quick Take

- Optional data is needed to validate machine learning predictions.

- Annotation analysis improves AI model performance.

- Prediction vs. fundamental data analysis involves several evaluation metrics.

Understanding Predictions in Data Analysis

Predictions in data analytics is the process of using historical data and mathematical models to predict future events, trends, or behavior.

Type of Analytics What it does Example

- Descriptive analysis of what happened. "Sales fell 12% last month"

- Diagnostics finds why it happened. "Sales fell due to a decrease in website traffic"

- Predictive predicts what might happen. "We expect demand to increase next quarter"

- Prescriptive recommends what to do next. "To increase sales, run a promotion on the weekend"

Why predictions are important

Predictions can:

- Identify customer churn based on historical data.

- Improve customer service with a personalized approach.

- Detect fraud by identifying suspicious patterns.

- Optimize resource utilization and predict future trends.

- Simplify routine decisions.

Good predictive analytics requires accurate, up-to-date data to avoid bias and incorrect choices. Important baseline data serves as an indicator of an AI model's performance during forecasting.

The Concept of Baseline Confidence

Baseline confidence is a simple level of accuracy that can be achieved without using complex AI models by predicting the most frequent class in the data. It verifies the reality of the AI model's results and whether they match real data.

The Role of Baseline Confidence in Machine Learning

The quality of data annotation affects the level of agreement of predictions with the baseline confidence. Baseline confidence can be divided into three types:

- Available instantly. Provides an estimate based on real-time data.

- Delayed. Confidence becomes available after a specific period

- Unavailable. It makes analysis difficult and requires proxy metrics or annotators.

Defining baseline confidence helps reduce error matrices and increase accuracy rates.

Intersection of predictions and reference data

Intersection over union (IoU) is the primary metric for this assessment. IoU scores range from 0 to 1, with higher scores indicating better agreement. A score above 0.5 is considered a good prediction in object detection tasks. This accuracy is important in industries like autonomous vehicles, where object detection helps prevent accidents.

Methods for assessing agreement

Accuracy and completeness. Tests the accuracy of positive predictions, while completeness measures the ability of the AI model to find all actual objects.

- Average accuracy (AP). AP is calculated for each class, giving a detailed picture of the AI model's performance.

- Mean average accuracy (mAP). The average of AP across all classes provides a comprehensive measure of performance.

By identifying where predictions and actual data do not match, areas for improvement are identified. This helps in industries like medical imaging, where accurate segmentation detects diseases at an early stage and facilitates tailored treatment.

The Importance of Annotation in Data Prediction

Data annotation is the process of adding labels or tags to raw data. These labels provide context and meaning, allowing machines to learn patterns and make predictions.

Types of Data Annotation

Data Analysis Methods

Data collection is divided into manual and automated methods. The manual method involves human experts, which is accurate but requires time and resources. The automated method uses algorithms for rapid annotation, which leads to some loss of accuracy. The choice depends on the project requirements and available resources.

Data Analysis Tools

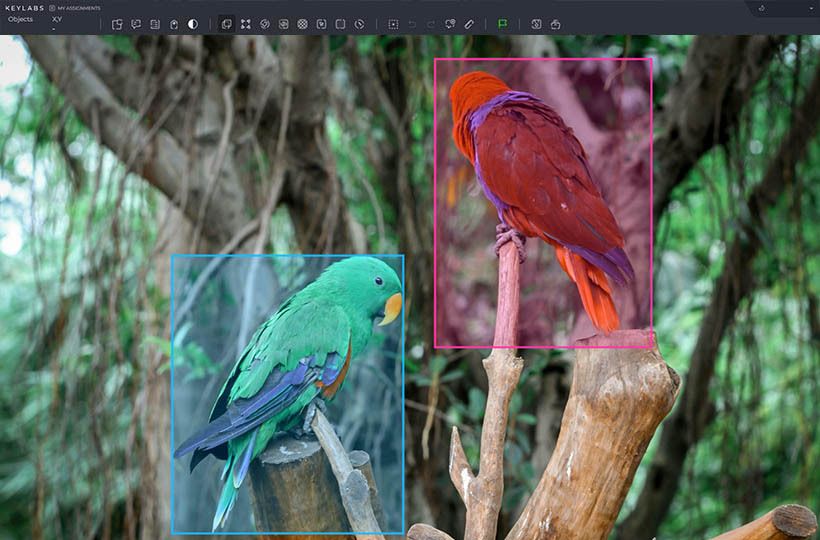

The Keylabs data annotation platform provides data annotation tools and uses manual and automated data annotation methods.

Some organizations develop their tools to adapt to their specific needs. These tools improve forecasting compared to terrestrial data analysis. They have functions for data management, annotation, and quality control.

Terrestrial data analysis requires detailed planning and the right tools. The right methods and innovative platforms increase forecasting accuracy, contributing to the creation of reliable machine learning models.

Problems in matching predictions with actual data

Low data quality is an obstacle in matching predictions with actual data. Noise, bias, and incomplete data sets lead to inaccurate predictions and distorted results.

Ambiguity arises from incomplete, inaccurate, inconsistent, or ambiguous data information, which makes it difficult to interpret or process it correctly. Ambiguous data misleads the AI model, reduces the accuracy of predictions, or distorts analytical conclusions.

Forecasting accuracy with ground data

Improving model performance requires improving the agreement of predictions with ground data. This is achieved through effective annotation strategies and the use of feedback loops. These techniques significantly improve accuracy in machine learning applications.

Strategies for effective annotation

- Establish clear annotation rules to maintain consistency.

- Involve multiple annotators to reduce bias and improve accuracy.

- Validate annotation quality using inter-annotator consensus methods.

- Combine human expertise with machine learning tools for efficiency.

- These strategies enhance the quality of annotation.

Using feedback loops

Refining through feedback loops helps maintain the accuracy of an AI model. This process includes:

- Regularly comparing predictions with real-world data.

- Identifying and analyzing prediction errors.

- Updating the AI model based on the results of error analysis.

Iterate the cycle to ensure continuous improvement. Implementing robust feedback mechanisms maintains and improves the AI model's performance over time.

Future Trends in Predictions and Ground Truth Analysis

One direction is deep learning for processing remote sensing (RS) data. This allows you to automatically detect landscape changes, classify surface cover, predict crop yields, or monitor urban expansion. Systems use integrated approaches that combine satellite images, IoT sensor data, and meteorological parameters. This allows you to build accurate forecasts adapted to local characteristics.

The role of cloud-based geoanalytical platforms that quickly process images in real time will grow. Neural networks running on edge devices are also emerging. This allows you to perform preliminary analysis at the site of data collection. Transformers in geospatial analytics will enable you to consider spatial and temporal context. This detects climate anomalies, predicts disasters, and monitors sustainable land use.

FAQ

What is the importance of benchmarking in machine learning?

It allows you to assess how well a model reproduces real-world results. It is a key step in validating an AI model's accuracy, robustness, and usefulness in real-world settings.

How do annotations help in benchmarking?

Annotations provide accurate data labeling, allowing an AI model to learn from well-defined examples.

What are some common challenges in benchmarking?

Data quality issues such as noise, bias, and incompleteness affect the accuracy of predictions and the benchmark data.

How can I improve forecasting accuracy using ground-based data?

Strategies such as developing clear annotation guidelines and continuously using feedback loops to improve an AI model can help improve forecasting accuracy.

What is the difference between manual and automated ground-based data collection?

Manual terrestrial data collection is the annotation of data by humans. Automated collection uses algorithms or existing datasets.