Vision-Language Model Training - Scaling Up and Down

The first generations of AI handled narrow tasks: computer vision recognized objects, and natural language processing handled text. They could not connect a photo of a cat with the word "cat" the way a human could. Human learning, by contrast, is inherently multimodal: we combine sight, hearing, and touch to understand the world. That contrast motivated the creation of AI that could do the same.

Aligning streams of visual and textual data in shared neural structures was a major breakthrough. It gave rise to the Vision-Language Model (VLM), a type of artificial intelligence that can understand and work with two different types of data at once: visual (images, video) and language (text).

That is, while a typical single-purpose system can either read text (like Google Translate) or recognize objects (like an image search function), a VLM combines these abilities. Loosely speaking, it gives a computer both the ability to see and the ability to reason logically about what it sees.

Integration of Sensory Input and Logic

The core idea of VLMs is that they align the space of vision with the space of language. They learn the connection between an object's appearance and how it is named or described. Many of today's systems combine vision and language through dual-encoder architectures built on transformer models, with one encoder for images and one for text:

The Vision Transformer (ViT)

Thanks to the ViT, the VLM doesn't just see pixels. The ViT breaks the image into patches (visual tokens) and converts them into numerical representations, so that the model can learn that:

- "Red" is associated with color,

- "Sphere" is associated with shape,

- And the combination of a "red sphere" must be close to the concept of an "apple" or a "ball."
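As a rough illustration of the first step, the sketch below splits a tiny image into fixed-size patches and flattens each one into a list of numbers. It is a minimal sketch in plain Python, not how any particular ViT is implemented: the function name `patchify` and the toy 4x4 image are assumptions made for this example, and a real model would follow this step with a learned projection and position information.

```python
def patchify(image, patch_size):
    """Split an H x W grid of pixel values into flattened square patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    patches = []
    # Walk over the image in patch-sized steps, row-major order.
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# Toy 4x4 grayscale "image" whose pixel values are 0..15 (illustrative only).
image = [[row * 4 + col for col in range(4)] for row in range(4)]
tokens = patchify(image, patch_size=2)
print(len(tokens))  # 4
print(tokens[0])    # [0, 1, 4, 5]
```

Each entry in `tokens` plays the role of one visual token before it is embedded.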

The Language Model (LLM)

This part of the model uses a generative pretrained transformer (the family of models behind ChatGPT) to process text, understand queries, and generate responses. As a result, it can understand context and generate coherent, natural text.

Thus, VLMs don't just compare text and images. Their goal also includes natural language understanding (NLU): recognizing the user's context and intent.

For example, when you ask the model: "What emotion is reflected on this person's face?", the model must:

- Recognize the face (the visual part).

- Interpret abstract concepts like "emotion" and "reflected" (the language part).

- Connect these concepts to provide an adequate answer.

And thanks to this ability to interpret, VLMs can perform complex tasks, such as answering questions about images.

The "Bridge" – The Key Element

The most important component is a special fusion mechanism that builds a bridge between these two transformer systems, allowing them to communicate and share knowledge. It ensures that when the model "sees" an image of a cat, it can correctly associate it with the textual representation "cat" or "cute fluffy animal."
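One widely used way to build such a bridge in dual-encoder models is contrastive training: embeddings of matching image-text pairs are pulled together, and embeddings of mismatched pairs are pushed apart. The sketch below computes a symmetric contrastive loss for a tiny batch. The vectors, the function names, and the temperature value of 0.1 are illustrative assumptions, and other designs connect the two sides with projection layers or cross-attention instead.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cross_entropy_on_diagonal(rows):
    """Average cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(rows):
        peak = max(row)  # subtract the max for numerical stability
        log_sum = peak + math.log(sum(math.exp(v - peak) for v in row))
        total += log_sum - row[i]
    return total / len(rows)


def contrastive_loss(image_vecs, text_vecs, temperature=0.1):
    """Symmetric loss over the image-text similarity matrix (temperature is assumed)."""
    images = [normalize(v) for v in image_vecs]
    texts = [normalize(v) for v in text_vecs]
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    transposed = [list(col) for col in zip(*logits)]
    image_to_text = cross_entropy_on_diagonal(logits)
    text_to_image = cross_entropy_on_diagonal(transposed)
    return 0.5 * (image_to_text + text_to_image)


# Hypothetical embeddings: image i is supposed to match text i.
image_vecs = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[0.9, 0.1], [0.1, 0.9]]
shuffled_texts = [[0.1, 0.9], [0.9, 0.1]]

aligned = contrastive_loss(image_vecs, aligned_texts)
shuffled = contrastive_loss(image_vecs, shuffled_texts)
print(aligned < shuffled)  # True
```

Training lowers this loss, which is what moves the image of a cat close to the text "cat" in the shared space.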

Thus, unlike traditional "single-channel" AI, VLMs understand the connection between photos and captions, X-rays and medical reports, or even video streams and security protocols.

These modern approaches underpin many of the AI functions we encounter today.

The 3 Dimensions of Scaling

Modern scaling methods adapt AI systems to new challenges without extensive retraining. They allow models to be efficiently deployed in environments with varying resources while maintaining high performance.

The goal of scaling is to maximize capabilities while minimizing computational costs. This is a search for the optimal balance between three critically important factors: model size, data volume, and computational resources.

Model Size

This means increasing the capacity of the model itself by scaling the number of parameters, which is measured in millions or billions.

This matters because a larger model can memorize more, find subtler patterns, and retain broader knowledge. Larger models have also been reported to show new abilities, such as multi-step logical reasoning, that appear only after a certain scale threshold.
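To give a feel for how parameter counts grow, here is a rough estimate for a standard transformer stack. It is a sketch under stated assumptions: each layer is taken to hold about 4·d² attention weights and 8·d² feed-forward weights (hidden size 4·d), with biases and normalization layers ignored. The function name `transformer_params` is made up for this example.

```python
def transformer_params(num_layers, d_model, vocab_size=0):
    """Approximate parameter count: 12 * d_model^2 per layer plus embeddings."""
    per_layer = 12 * d_model ** 2       # attention (4*d^2) + feed-forward (8*d^2)
    embeddings = vocab_size * d_model   # token embedding table, if any
    return num_layers * per_layer + embeddings


# A base-sized encoder: 12 layers, width 768.
print(f"{transformer_params(12, 768):,}")  # 84,934,656
```

Doubling the width roughly quadruples the count, which is why costs climb so quickly with model size.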

The larger the model, the more expensive and complex it is to train and deploy.

Data Volume

This refers to the quantity and quality of "image-text" pairs used to train the model.

No model, regardless of its size, will be smart without enough data. Vast amounts of data allow the model to cover a wide range of concepts, making it more universal and robust to new tasks. Therefore, VLMs use both massive, but imperfect, web data for general alignment and smaller, high-quality curated data.

Computation

These are the physical resources and time required to complete the training process. The speed and availability of computation determine whether the desired model size and data volume can be realized.

Research on scaling laws suggests that these three elements should grow in proportion to one another. Performance quickly saturates if you only increase the model size but do not give it more data. Optimal scaling requires that all three factors remain in balance to create today's most powerful AI systems.
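The link between the three factors can be made concrete with a rule of thumb from language-model scaling work: training a dense transformer costs roughly 6 floating-point operations per parameter per training token. The sketch below applies it. Treat every number as an assumption for illustration: the rule is only approximate for VLMs, and the GPU count, per-GPU throughput, and 40% utilization are hypothetical.

```python
def training_flops(num_params, num_tokens):
    """Rule-of-thumb training cost: about 6 FLOPs per parameter per token."""
    return 6 * num_params * num_tokens


def training_days(num_params, num_tokens, num_gpus, flops_per_gpu, utilization=0.4):
    """Wall-clock estimate given a cluster size and sustained utilization."""
    effective_rate = num_gpus * flops_per_gpu * utilization  # FLOP/s actually achieved
    seconds = training_flops(num_params, num_tokens) / effective_rate
    return seconds / 86400


# Hypothetical run: 1B parameters, 100B tokens, 64 GPUs at 1e14 FLOP/s each.
days = training_days(10**9, 10**11, num_gpus=64, flops_per_gpu=1e14)
print(f"{days:.1f} days")  # 2.7 days
```

Growing the model without growing the data or the cluster shows up here directly as a longer or less effective run.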

Scaling Up and Down for the Real World

There’s a gap between creating a giant, super-intelligent Vision-Language Model in the lab and running it on your mobile app. The success of VLMs in the real world depends on the perfect balance between two stages: scaling up for intelligence and scaling down for efficiency.

Creating Intelligence

This stage requires enormous resources and focuses on building the most versatile and powerful model. The larger the model, the better it can generalize.

To make a VLM versatile, for example, able to recognize both a cat and a rare tool, it's trained on billions of "image-text" pairs. This gives the model broad knowledge of the world and allows it to work in a zero-shot manner: performing tasks it was never specifically trained for.

Naturally, scaling up can require thousands of GPUs and millions of dollars. It is expensive, but so far it has been the most reliable path to more capable AI.
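Zero-shot classification can be pictured as follows: the candidate labels are written as text prompts, both the image and the prompts are embedded, and the label whose embedding lies closest to the image wins. The sketch below assumes the embeddings already exist. The vectors are hand-made stand-ins for what trained encoders would produce, and the function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_vec, prompt_vecs):
    """Return the prompt most similar to the image, plus all similarity scores."""
    scores = {prompt: cosine(image_vec, vec) for prompt, vec in prompt_vecs.items()}
    return max(scores, key=scores.get), scores


# Hand-made embeddings for illustration only.
image_vec = [0.9, 0.1, 0.2]
prompt_vecs = {
    "a photo of a cat": [0.8, 0.2, 0.1],
    "a photo of a torque wrench": [0.1, 0.9, 0.3],
}

label, scores = zero_shot_classify(image_vec, prompt_vecs)
print(label)  # a photo of a cat
```

No classifier was trained on these two labels. Adding a new category only requires writing a new prompt.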

Product Implementation

After creating the giant "brain," it needs to be made suitable for use in daily products where speed, cost, and privacy are important.

Giant models are too large for a phone. Quantization addresses this by reducing the precision of the numbers the model operates with, for example, from 32-bit floating point to 4-bit integers. The practical result is that the model becomes up to eight times smaller in file size and typically runs faster, usually at the cost of a small loss in accuracy.
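The idea can be sketched with a simple symmetric scheme: weights are divided by a shared scale, rounded to one of 16 integer levels, and multiplied back when needed. This is a minimal illustration, not the method of any specific toolkit. The function names, the sample weights, and the 7-billion-parameter model size are assumptions, and production schemes usually quantize in small groups with extra tricks.

```python
def quantize_int4(weights):
    """Map floats to integer codes in [-8, 7] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


def model_size_gb(num_params, bits_per_weight):
    """Storage needed for the weights alone, in gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


weights = [0.70, -0.35, 0.10, 0.02, -0.61]  # illustrative values
codes, scale = quantize_int4(weights)
restored = dequantize(codes, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, f"worst error {worst_error:.3f}")

# Hypothetical 7-billion-parameter model: 28.0 GB at 32-bit, 3.5 GB at 4-bit.
print(model_size_gb(7e9, 32), model_size_gb(7e9, 4))
```

The rounding error per weight is bounded by half the scale, which is why accuracy usually degrades only slightly while the file shrinks by a factor of eight.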

Even an innovative model needs to be taught to be helpful. This is achieved by fine-tuning the model on a small sample of high-quality instructions. The practical result is that the model learns to provide clear and relevant answers to user queries, transforming it into an effective assistant.

Optimized models can run locally on the device. This provides instant response speed, confidentiality (your data doesn't leave the phone), and the ability to use the AI without an internet connection.

This engineering approach allows the most expensive and complex academic breakthroughs to be turned into useful, fast, and cost-effective products we use daily.

FAQ

What are the main goals of training Vision-Language models?

The primary goal is to create systems that can understand and combine information from visual data and natural language. This involves forming joint representations that allow the model to solve tasks such as visual question answering (VQA) or generating captions for images.

How does multimodal AI differ from traditional single-channel systems?

Multimodal AI processes visual and textual data together, enabling a deeper understanding of context. Traditional systems analyze images or text separately. Multimodal architectures connect the two: CLIP trains separate image and text encoders to map into a shared embedding space, while Flamingo uses cross-attention layers to feed visual features into a language model.

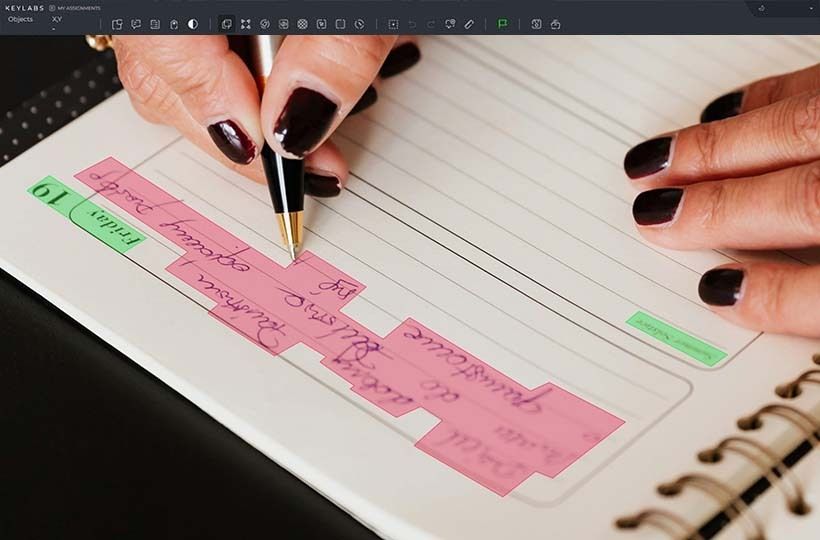

How is high data quality ensured for training?

Data quality is a priority in model training. Curated datasets go through annotation, noise filtering, and expert review to minimize bias and errors. This typically involves specialized multimodal annotation tools.

Which industries benefit most from the integration of vision and language?

- Medicine: automatic generation of X-ray or MRI reports.

- E-commerce: generating product descriptions and visual search.

- Automotive: camera-based driver-assistance systems such as Tesla Autopilot, where multimodal models can add real-time object recognition and voice warnings.

What challenges arise when scaling these systems for business?

Training requires massive datasets (e.g., LAION-5B) and powerful computational resources. The main problem is finding the balance between inference speed and accuracy. Even with frameworks such as NVIDIA NeMo, tuning this trade-off remains a key challenge when deploying multimodal models in real-world applications.