Detecting and reducing bias in labeled datasets

Bias in training data can carry over into automated decisions, so fairness has to be addressed explicitly when building AI systems. Reducing that bias helps produce fair outcomes and prevents discrimination. Doing so requires documented processes for detecting bias and technical methods for mitigating it.

Quick Take

- Bias in datasets can lead to discriminatory outcomes in AI.

- Gender, racial, and socioeconomic biases are among the most common.

- Methods for mitigating bias include data augmentation, resampling, and training for fair representation.

- Creating ethical and unbiased AI is both a moral and technical imperative.

Understanding Bias in Datasets

Fairness in AI systems is an important issue because biases in data undermine their impartiality. Identifying and analyzing these biases helps reduce the risk that machine learning models reproduce them.

What is Bias?

Bias is a systematic error that affects the fairness of decisions. It arises when algorithms are trained on skewed data, which leads to discriminatory and unfair results.

Types of Bias in Data

- Selection bias occurs when a data sample does not represent the entire population it is drawn from.

- Measurement bias arises from errors in recording or classifying data.

- Algorithmic bias occurs when the algorithm amplifies or creates a bias in data processing and decision-making.

- Representation bias occurs when information in the data reflects a biased or incomplete view of the real world. For example, publicly available facial recognition algorithms often fail to recognize African-American men and women, which highlights problems in data representation.

Impacts of Bias on Machine Learning Models

AI algorithms can misclassify people in ways that favor one racial group over another, which leads to discrimination in AI systems. To check that all groups are treated fairly, it is essential to compare the true positive rate (TPR) and positive predictive parity across groups.

TPR (True Positive Rate) is a metric that shows the proportion of actual positive cases (e.g., sick people in healthcare) that the model correctly identifies.

Positive predictive parity means that the model's positive predictions are equally likely to be correct for every group, i.e., precision (positive predictive value) is the same across groups.
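
As a rough illustration, both quantities can be computed per group from labels and predictions. The sketch below assumes binary labels with 1 as the positive class; the function name `rates_by_group` is made up for this example and does not come from any particular library.

```python
from collections import defaultdict


def rates_by_group(y_true, y_pred, groups):
    """Return {group: {"tpr": ..., "ppv": ...}} for binary labels (1 = positive)."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]  # touch the entry so every group appears in the result
        if t == 1 and p == 1:
            c["tp"] += 1
        elif t == 0 and p == 1:
            c["fp"] += 1
        elif t == 1 and p == 0:
            c["fn"] += 1

    result = {}
    for g, c in counts.items():
        actual_pos = c["tp"] + c["fn"]      # denominator of TPR
        predicted_pos = c["tp"] + c["fp"]   # denominator of PPV (precision)
        result[g] = {
            "tpr": c["tp"] / actual_pos if actual_pos else None,
            "ppv": c["tp"] / predicted_pos if predicted_pos else None,
        }
    return result
```

Comparing `tpr` across groups checks whether positives are found equally often, while comparing `ppv` checks positive predictive parity. A group with no actual positives or no positive predictions gets `None` instead of a rate.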

Importance of Bias Detection

Detecting bias in data and AI algorithms helps avoid discrimination, improve model performance, and build user trust in technology. In addition, it helps comply with ethical standards and legislation and contributes to eliminating social inequalities.

Real-World Implications of Bias

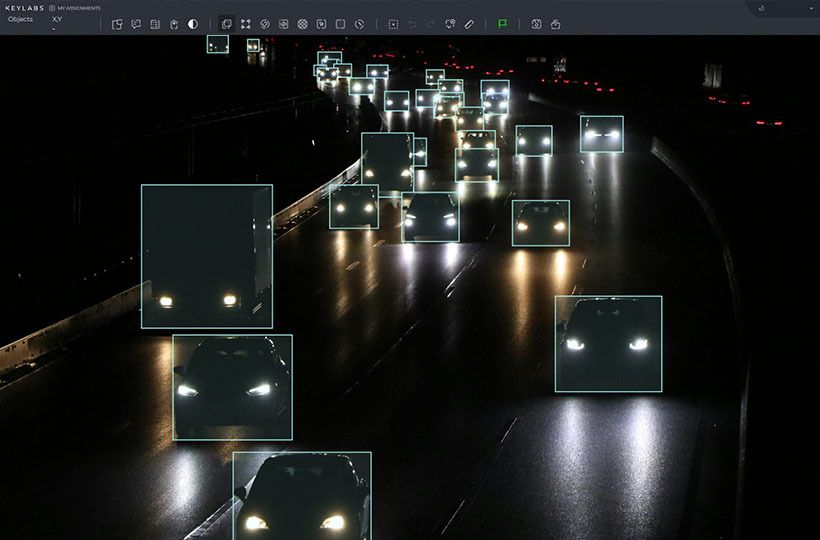

Bias in autonomous vehicles can lead to dangerous situations. Reducing bias in natural language processing (NLP) improves the accuracy of detecting hate speech and toxicity. Eliminating these biases increases alignment with ethical and societal values.

Strategies such as baseline bias mitigation (UBM) and tuning to reduce gender bias are used to achieve this, and they have improved AI performance across a range of domains. Baseline bias mitigation (UBM) adjusts data, parameters, or models to minimize discriminatory or unfair results that stem from bias in the training data.

Legal and Ethical Considerations

Implementing ethical practices through bias detection ensures fairness, equality, and the protection of human rights in automated systems.

- Data bias detection identifies and analyzes distorted aspects of the data.

- Impact analysis assesses how biases affect machine learning models and their results.

- Accountability means ensuring compliance with legal standards and public ethics.

Techniques for Bias Detection

Statistical methods detect bias by finding discrepancies in how data or outcomes are distributed across groups. The main methods include:

- Disparate Impact (DI) captures the idea that a model may negatively affect one group (for example, based on race, gender, or age) even without direct discrimination. It is typically measured as the ratio of positive-outcome rates between an unprivileged group and a privileged group.

- Equal Opportunity Difference (EOD) measures the difference in true positive rates between groups.

- Statistical Parity Difference (SPD) measures the difference in the probability of a positive result between groups and is typically used for binary classification.

- The chi-square test is a statistical method for determining the independence of two categorical variables. It measures the difference between the observed and expected frequencies in the categories.
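
The first three metrics in the list above can be sketched in a few lines. This is a minimal illustration, assuming binary predictions (1 = positive outcome) and the common convention of reporting the unprivileged group relative to the privileged one; the function names and the sign convention are choices made for this example.

```python
def positive_rate(y_pred, groups, group):
    """Share of members of `group` that received a positive prediction."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)  # assumes the group is not empty


def true_positive_rate(y_true, y_pred, groups, group):
    """Share of actual positives in `group` that were predicted positive."""
    preds = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    return sum(preds) / len(preds)  # assumes the group has actual positives


def fairness_report(y_true, y_pred, groups, unprivileged, privileged):
    pr_u = positive_rate(y_pred, groups, unprivileged)
    pr_p = positive_rate(y_pred, groups, privileged)
    tpr_u = true_positive_rate(y_true, y_pred, groups, unprivileged)
    tpr_p = true_positive_rate(y_true, y_pred, groups, privileged)
    return {
        "disparate_impact": pr_u / pr_p,               # ratio, 1.0 means parity
        "statistical_parity_difference": pr_u - pr_p,  # difference, 0.0 means parity
        "equal_opportunity_difference": tpr_u - tpr_p, # difference, 0.0 means parity
    }
```

A disparate impact ratio below roughly 0.8 is often treated as a warning sign (the "four-fifths" rule of thumb), though the right threshold depends on the application.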

Machine Learning Approaches

Bias detection and mitigation methods include:

- Supervised learning helps identify patterns associated with biases.

- Anomaly detection automates the identification of data that may indicate bias.

- Subgroup analysis evaluates a model's performance on each subgroup separately, which reveals gaps that an aggregate metric would hide.

- Fair regression and classification are techniques that build fairness objectives into AI models that predict or classify data.
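
Subgroup analysis, for instance, amounts to computing the same metric once per subgroup and comparing the results. The sketch below uses accuracy and allows subgroups to be any hashable value, such as a tuple of attributes for intersectional groups; the helper names are hypothetical.

```python
from collections import defaultdict


def accuracy_by_subgroup(y_true, y_pred, subgroups):
    """Return {subgroup: accuracy}; a subgroup can be any hashable key."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, s in zip(y_true, y_pred, subgroups):
        total[s] += 1
        correct[s] += int(t == p)
    return {s: correct[s] / total[s] for s in total}


def largest_gap(scores):
    """Difference between the best and worst subgroup score."""
    return max(scores.values()) - min(scores.values())
```

Small subgroups give noisy estimates, so in practice it helps to report the subgroup size alongside each score.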

Tools and Frameworks for Analysis

AI tools and frameworks have been developed to help data scientists identify and rectify bias. The techniques they implement include:

- Reweighting and resampling are preprocessing techniques that adjust the data to reflect fairer distributions before model training.

- Reject Option-based Classification (ROC) is a postprocessing method that promotes fairness by adjusting decisions close to the decision threshold to balance outcomes across groups.

- In adversarial debiasing, a model is paired with an adversary trained to detect bias in its predictions. The model is penalized when the adversary succeeds, which promotes fairer outputs.

Statistical analysis combined with advanced AI tools facilitates effective bias prevention.
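
To make the reweighting idea concrete, here is one common formulation, sketched under the assumption that each example has a single group attribute and a label. Each example receives the weight P(group) * P(label) / P(group, label), so that group and label look statistically independent in the weighted data. The function name is illustrative only.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights that decouple group membership from the label."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        # expected count under independence divided by observed count
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]
```

The weights can then be passed to any training routine that accepts per-sample weights. Over-represented (group, label) combinations get weights below 1 and under-represented ones get weights above 1.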

Assessing Bias in Labeled Datasets

Data assessment metrics are important for measuring bias. These metrics include demographic parity, prediction consistency, and error-rate balance across groups.
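
For labeled data specifically, one simple check is whether the label is statistically independent of a group attribute, using the chi-square test of independence. The sketch below computes only the test statistic and the degrees of freedom with the standard library; it assumes every group and every label value occurs at least once, and the function name is made up for this example.

```python
from collections import Counter


def chi_square_statistic(groups, labels):
    """Chi-square statistic and degrees of freedom for group vs. label."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    observed = Counter(zip(groups, labels))

    stat = 0.0
    for g in group_counts:
        for y in label_counts:
            # count expected in this cell if group and label were independent
            expected = group_counts[g] * label_counts[y] / n
            stat += (observed[(g, y)] - expected) ** 2 / expected

    dof = (len(group_counts) - 1) * (len(label_counts) - 1)
    return stat, dof
```

With two groups and two labels (one degree of freedom), a statistic above about 3.84 corresponds to significance at the 5% level. A significant result says the labels differ by group, not why, so it is a prompt for investigation rather than proof of unfair labeling.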

Assessment methods include:

- Regular checks to maintain fairness.

- Diverse team input.

- Feedback to improve the model.

Bias Mitigation Strategies

Bias mitigation strategies in machine learning aim to reduce or eliminate discriminatory results that can arise from skewed data.

Preprocessing Techniques

Preprocessing focuses on the initial steps of cleansing and rebalancing datasets before training. Techniques such as synthetic data generation are used to balance minority classes. Measures like Demographic Parity and Disparate Impact help identify the biases that need correcting.
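
A minimal rebalancing sketch follows. Note that it uses plain random oversampling (duplicating existing rows), which is a simpler stand-in for synthetic data generation, and that the `key` callback and function name are assumptions made for this example.

```python
import random
from collections import defaultdict


def oversample_to_balance(rows, key, seed=0):
    """Duplicate randomly chosen rows until every key value is equally frequent."""
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    buckets = defaultdict(list)
    for row in rows:
        buckets[key(row)].append(row)

    target = max(len(bucket) for bucket in buckets.values())
    balanced = []
    for bucket in buckets.values():
        balanced.extend(bucket)
        # sample with replacement to fill the gap up to the largest bucket
        balanced.extend(rng.choices(bucket, k=target - len(bucket)))
    return balanced
```

For example, `oversample_to_balance(rows, key=lambda r: (r["group"], r["label"]))` would balance every group and label combination. Oversampling should be applied to the training split only, so that duplicated rows do not leak into evaluation data.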

In-Processing Adjustments

In-processing adjustments build fairness constraints and regularization techniques into the training algorithm. Fairness constraints minimize the model's dependence on sensitive features.
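
One way to picture a fairness regularizer is as an extra term added to the training loss. The sketch below is an assumption about how such a term might look, not a specific published method: it adds the squared gap between the mean predicted scores of two groups to the usual log loss. The names `fair_log_loss` and `strength` are hypothetical.

```python
import math


def _mean_score(scores, groups, group):
    values = [s for s, g in zip(scores, groups) if g == group]
    return sum(values) / len(values)  # assumes the group is not empty


def fair_log_loss(y_true, scores, groups, group_a, group_b, strength=1.0):
    """Log loss plus a penalty on the gap in mean predicted score between groups."""
    eps = 1e-12  # guards against log(0)
    log_loss = -sum(
        t * math.log(max(s, eps)) + (1 - t) * math.log(max(1 - s, eps))
        for t, s in zip(y_true, scores)
    ) / len(y_true)

    gap = _mean_score(scores, groups, group_a) - _mean_score(scores, groups, group_b)
    return log_loss + strength * gap ** 2
```

Setting `strength` to 0 recovers the ordinary loss, and larger values trade predictive accuracy for a smaller gap between groups. An optimizer minimizing this quantity is pushed toward predictions that depend less on group membership.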

Postprocessing Solutions

Postprocessing methods adjust model outputs, for example to ensure equal opportunity. They are complemented by regular governance and auditing practices, including AI ethics committees and user feedback. Together, these steps help keep the model's predictions fair across all demographic groups.
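
As an illustration of adjusting outputs for equal opportunity, the sketch below picks a separate decision threshold for each group so that every group reaches at least the same true positive rate. It assumes the model produces a score per example, that a score at or above the threshold counts as a positive prediction, and that `target_tpr` lies between 0 and 1; all names are hypothetical.

```python
import math
from collections import defaultdict


def threshold_for_tpr(y_true, scores, target_tpr):
    """Highest threshold at which at least target_tpr of positives are accepted."""
    positives = sorted((s for t, s in zip(y_true, scores) if t == 1), reverse=True)
    if not positives:
        raise ValueError("group has no positive examples")
    needed = max(1, math.ceil(target_tpr * len(positives)))
    return positives[needed - 1]


def group_thresholds(y_true, scores, groups, target_tpr):
    """Compute one threshold per group on held-out labeled data."""
    by_group = defaultdict(lambda: ([], []))
    for t, s, g in zip(y_true, scores, groups):
        by_group[g][0].append(t)
        by_group[g][1].append(s)
    return {g: threshold_for_tpr(ts, ss, target_tpr) for g, (ts, ss) in by_group.items()}


def predict(scores, groups, thresholds):
    """Apply each example's group-specific threshold."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]
```

Thresholds should be chosen on validation data rather than on the test set. Using group-specific thresholds can itself raise legal or policy questions in some settings, so this is a design choice to review with the relevant stakeholders.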

Continuous Monitoring and Maintenance

Continuously monitoring data and AI algorithms for changes has several benefits:

- Preventing discrimination.

- Improving the accuracy of AI models.

- Adhering to ethical standards and legislation.

- Eliminating social inequalities.

Continuous monitoring is therefore an important part of creating ethical and unbiased AI models.

Developing a Monitoring Framework

Robust monitoring is essential for keeping a deployed system fair. A monitoring framework includes data checks, algorithm reviews, and fairness assessments, and it allows organizations to address biases as they arise.
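
A recurring fairness assessment can be as simple as recomputing one metric on each new batch of predictions and flagging batches that drift past a tolerance. The sketch below assumes binary predictions, two named groups that both appear in every batch, and an arbitrary default tolerance of 0.1; none of these values come from a standard.

```python
def parity_gap(y_pred, groups, unprivileged, privileged):
    """Statistical parity difference for one batch of binary predictions."""
    def rate(group):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        return sum(preds) / len(preds)  # assumes the group appears in the batch
    return rate(unprivileged) - rate(privileged)


def monitor_batches(batches, unprivileged, privileged, tolerance=0.1):
    """Return (batch_index, gap) for every batch whose gap exceeds the tolerance."""
    alerts = []
    for index, (y_pred, groups) in enumerate(batches):
        gap = parity_gap(y_pred, groups, unprivileged, privileged)
        if abs(gap) > tolerance:
            alerts.append((index, gap))
    return alerts
```

In a real deployment the alerts would feed a dashboard or paging system, and the tolerance would be agreed on with the people accountable for the model.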

Tools for Continuous Assessment

Evaluation tools support fair AI models by providing features to detect, evaluate, and correct biases on an ongoing basis.

Collaboration Across Disciplines

Effective bias mitigation in AI systems requires strong interdisciplinary collaboration. Data scientists, ethicists, legal experts, and other stakeholders must work together to address biases in historical data and AI models. Input from different fields makes discussions more detailed and practical, which leads to well-rounded strategies.

Contributions from Data Scientists and Ethicists

Reducing bias in AI requires collaboration between data scientists, ethicists, legal experts, and the public to correct problems in data and models. Data scientists provide technical expertise, while ethicists ensure that moral standards are followed.

Building a Cross-Functional Team

A cross-functional team brings together experts from different fields, from data science to ethics and law, to solve complex problems. By combining diverse perspectives, such a team helps create fair and ethical technologies.

Future Directions in Bias Research

A promising direction is using AI systems that can self-audit and self-correct. This could revolutionize bias detection and improve algorithm fairness.

Machine learning can be used both to detect hidden biases in datasets and to train models that reduce them. For example, word association analysis shows that European names are associated with more favorable terms than African-American names. This points to problems in AI models and calls for better systems that detect and eliminate such discrepancies as soon as they occur.

Diverse data is needed to reduce discrepancies. As awareness grows, so will support for ethical AI that ensures fair representation and equitable treatment.
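
A word association analysis of this kind is usually run on word embeddings. The sketch below assumes each word is already represented as a numeric vector and measures how much closer a word sits to a set of "pleasant" attribute words than to a set of "unpleasant" ones, using cosine similarity. The function names are hypothetical and no real embedding model is loaded here.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(word, pleasant, unpleasant):
    """Mean similarity to pleasant words minus mean similarity to unpleasant ones."""
    mean_pleasant = sum(cosine(word, p) for p in pleasant) / len(pleasant)
    mean_unpleasant = sum(cosine(word, u) for u in unpleasant) / len(unpleasant)
    return mean_pleasant - mean_unpleasant


def name_set_gap(names_x, names_y, pleasant, unpleasant):
    """Difference in average association between two sets of name vectors."""
    score_x = sum(association(n, pleasant, unpleasant) for n in names_x) / len(names_x)
    score_y = sum(association(n, pleasant, unpleasant) for n in names_y) / len(names_y)
    return score_x - score_y
```

A gap near zero suggests the two name sets are treated similarly by the embedding, while a large positive or negative gap indicates that one set is more strongly tied to favorable terms.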

Innovations in Bias Mitigation Methods

Recent approaches include adaptive bias correction algorithms, data validation techniques, and the integration of ethical principles into the model training process. Combining an interdisciplinary approach with modern technologies helps minimize bias at all stages of artificial intelligence development.

FAQ

What is Bias?

Bias refers to systematic deviations in data or algorithms that lead to unfair or discriminatory outcomes. It can stem from historical inequalities, data collection methods, or inaccuracies in data annotation.

What Types of Bias Occur in Data?

Bias can be explicit or implicit, affecting results for specific demographic groups.

What is the impact of bias in a machine learning model?

Bias in datasets can lead to discriminatory results. If left unchecked, it can negatively impact decision-making in healthcare, hiring, and litigation.

Why Detect Bias?

Detecting bias in data and AI algorithms prevents discrimination, increases trust in AI, and improves the quality of models.

What are the statistical methods for detecting bias?

Statistical methods involve analyzing data distributions, measuring disparities, and using metrics to identify biased patterns.

What are the machine learning approaches to detecting bias?

Supervised learning and anomaly detection automate the detection of bias in data.

What are the metrics for measuring bias?

Metrics such as fairness between groups, prediction consistency, and balanced error rates assess bias in labeled datasets.

What are the practices for evaluation?

Practices include routine audits, engaging diverse teams, and continuous feedback mechanisms.

What is a preprocessing technique?

Preprocessing methods, such as rebalancing and reweighting, adjust the data before the models are trained.

What is an in-processing technique?

In-processing strategies involve fairness constraints, adversarial debiasing, and reweighted loss functions.

What is a postprocessing technique?

Postprocessing involves threshold adjustments, reject option classification, and calibration techniques.

What are the strategies for achieving diversity?

Strategies include obtaining data from diverse populations and taking cultural differences into account.

Why is continuous analysis needed?

Continuous analysis is necessary to adapt to new data and societal changes.

What tools are used for continuous assessment?

Tools like Fairness Indicators and continuous auditing platforms provide ongoing evaluations of AI systems.

What is the contribution of data scientists and ethicists?

Collaboration between data scientists and ethicists ensures that AI models are both technically robust and consistent with moral standards.

Why do you need a cross-functional team?

Cross-functional teams bring diverse experiences and perspectives to reduce bias.

What innovations will there be in bias mitigation methods?

Future systems are expected to be trained to self-correct and maintain fairness.