DIY AI Image Recognition: A Beginner's Guide to Building Your First Project

The CIFAR-10 dataset holds 60,000 color images in 10 categories, each only 32x32 pixels. Small, well-labeled datasets like this are a common starting point for learning deep learning. With the rise of DIY AI, computer vision and deep learning have caught the eye of many hobbyists and developers. TensorFlow, released by Google in 2015, makes tasks such as image classification far more approachable. This tutorial is your guide to creating an image recognition model with TensorFlow.

Getting started with TensorFlow can feel overwhelming, especially for beginners. Navigating Android development on top of that can be pretty challenging, and many tutorials lack in-depth explanations. Our guide bridges this gap. It covers everything from setting up TensorFlow to creating and deploying your model in an Android app. We'll use Google's codelabs and educational materials to prepare you to build a top-notch image recognition model.

Key Takeaways

- Understand the essentials of DIY AI projects in computer vision.

- Learn the fundamental concepts of deep learning and TensorFlow.

- Step-by-step guidance in setting up your development environment.

- Detailed tutorial on training a custom image classification model.

- Deploy your model in an Android app that provides real-world applications.

Introduction to AI Image Recognition

Artificial Intelligence (AI) image recognition uses algorithms to identify items, scenes, and actions in pictures. The field traces back to 1963 and Lawrence Roberts' work on extracting 3D information from 2D images. Later milestones include the Hough Transform, which was refined and generalized through the 1970s and 1980s and became a standard way to detect lines and other shapes in images.

Fast forward to today, and neural networks, especially Convolutional Neural Networks (CNNs), are key. They've enhanced our ability to find objects and faces in images. CNNs are exceptional at recognizing complex patterns because they build up from simple features, such as edges, to higher-level features, such as shapes and whole objects.

What is AI Image Recognition?

At the heart of it, AI image recognition depends on deep learning to dissect and comprehend visual information. It falls under supervised learning, where a model refines its accuracy through continuous training on labeled images. Central to this are CNN network structures, which consist of various layers, each serving a vital role in feature extraction and recognition.

Major algorithms in AI image recognition, like Faster R-CNN, SSD, and YOLO, are object detectors that balance speed and precision in different ways. This field has opportunities for those looking to dive into machine learning and deep learning.

Why Choose Image Recognition for Your First AI Project?

Embarking on an AI image recognition project opens up various applications, including OCR for extracting text and facial recognition for security or user verification. It also plays a critical role in medical imaging, aiding in identifying health issues. This versatility makes it a sought-after skill.

The image recognition market is projected to reach USD 15.37 billion in 2025. It is expected to grow at an annual rate (CAGR 2025-2031) of 14.39%, resulting in a market volume of USD 34.44 billion by 2031.

Understanding TensorFlow and Its Benefits

TensorFlow is Google's breakthrough for AI projects. Its extensive features and flexibility are highly favored in machine learning.

What is TensorFlow?

It's a leading open-source library specializing in deep learning operations like image and speech recognition. TensorFlow aids in managing data, cleaning, and pre-processing, facilitating efficient machine learning at scale. With support for multiple languages and mobile integration, it's immensely versatile.

Why Use TensorFlow for Image Recognition?

TensorFlow excels in image recognition because it supports easier and swifter distributed training. This is amplified by seamless error-spotting through the Keras API. Another strong point is its adaptability to various environments, from servers to mobile devices to TPUs.

Moreover, it promotes key data and model management practices that help maintain model performance and accuracy. One example is a flower image classification tutorial, in which the dataset is split into training and validation subsets before training.

The model in that example has three convolution layers and is trained for 10 epochs. Visualizing the training results then shows how well the model is learning and where it can be improved.

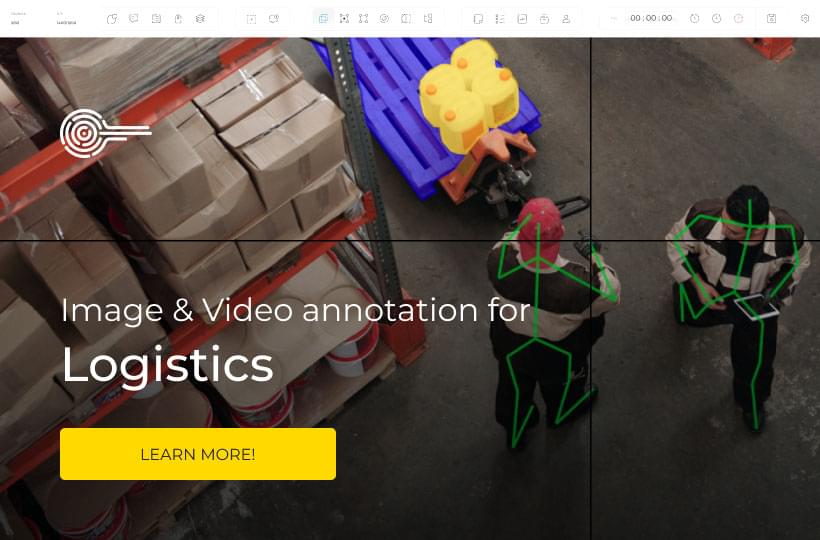

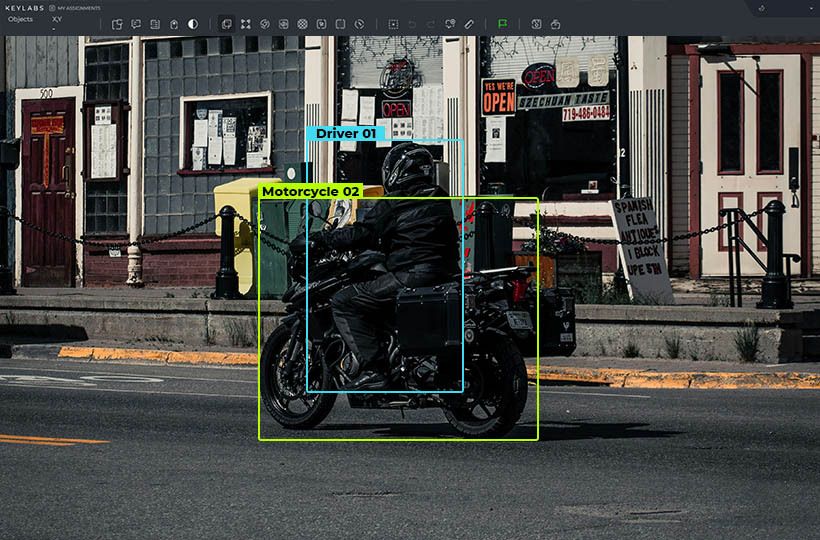

TensorFlow's benefits are key for aspiring machine learning enthusiasts. They pave the way for developing advanced and scalable image recognition systems. If you're interested in creating robust systems, you need great annotated datasets, which is why this article from Keylabs is handy.

Setting Up Your Development Environment

A robust environment is key before diving into TensorFlow and AI image recognition. This includes the crucial step of installing Python and TensorFlow. Then, it entails configuring an Integrated Development Environment (IDE) for smooth coding.

Installing Python and TensorFlow

The core development environment relies on installing Python with TensorFlow. Python's simplicity and vast libraries make it a prime choice for machine-learning projects. A staggering 90% of AI image recognition efforts use Python, placing it at the forefront of AI technologies.

Begin by downloading Python from its official website. Then install TensorFlow with pip, Python's standard package manager. A single command starts the installation:

pip install tensorflow

Statistics suggest 87.6% of developers rely on TensorFlow for AI model implementations. This figure underlines TensorFlow's efficiency in handling sophisticated image recognition challenges.

Setting Up an IDE

After establishing Python with TensorFlow, the focus shifts to an IDE. This tool is vital for efficient AI project management. It aids debugging and seamlessly interacts with TensorFlow, facilitating code writing and testing.

Top IDE choices include PyCharm, VS Code, and Jupyter Notebook, with varied benefits. A well-configured IDE enhances your ability to manage project essentials, run tests, and improve your AI work.

Selecting an optimal IDE configuration significantly smooths your AI project workflow. It lets you focus on model development and refinement rather than grappling with setup challenges.

Data Pre-processing Essentials

Data pre-processing is a cornerstone in AI image recognition projects. It ensures that the data fed into a model is clean and ready for training. Understanding image data handling and strategic pre-processing is key to model efficiency.

Understanding Image Data

Due to their diverse origins, real-world datasets often face issues like missing, inconsistent, and noisy data. Proper data handling is essential for solving these challenges. Data pre-processing minimizes the impact of outliers and inconsistencies, which is necessary for accurate predictions.

In machine learning, feature extraction focuses on independent variables. It's crucial to understand which features are influencing model predictions. Thus, thorough data pre-processing is vital in ensuring optimal feature quality. Methods for this include:

- Data Cleaning: Filling in missing values, smoothing noisy data, and removing outliers.

- Data Integration: Merging data from various sources, such as healthcare databases, into a coherent platform.

- Data Transformation: Utilizing techniques like normalization and reduction to prepare data for analysis.

Data Normalization Techniques

Normalization techniques scale pixel values into a standardized range, such as 0 to 1, which makes the data model-ready. For 8-bit images, this usually means dividing each pixel value by 255, for example with a Keras Rescaling layer. Consistently scaled inputs generally help a model train more reliably.

Additional normalization methods include:

- Resizing images to standard sizes such as 224x224 or 256x256 pixels to ease algorithm processing.

- Grayscaling simplifies the data and reduces computation costs by converting RGB to grayscale, for example with OpenCV's cvtColor().

- Adjusting contrast through histogram equalization, for example with OpenCV's equalizeHist(), improves contrast in low-contrast images.
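The resizing, grayscaling, and scaling steps above can be sketched with TensorFlow's image utilities. This is a minimal sketch, assuming a batch of 8-bit RGB images held in a NumPy array; the function name `preprocess_batch`, the random stand-in images, and the 224x224 target size are illustrative assumptions, and histogram equalization is left out.

```python
import numpy as np
import tensorflow as tf


def preprocess_batch(images, size=(224, 224), grayscale=False):
    """Resize a batch of uint8 RGB images and scale pixels to the 0..1 range.

    `images` is assumed to have shape (batch, height, width, 3).
    """
    x = tf.convert_to_tensor(images)
    x = tf.image.resize(x, size)          # bilinear by default, returns float32
    if grayscale:
        x = tf.image.rgb_to_grayscale(x)  # collapses 3 channels into 1
    return x / 255.0                      # map 0..255 to 0..1


# Hypothetical input: four random 32x32 RGB images standing in for real data.
fake_images = np.random.randint(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)
batch = preprocess_batch(fake_images, grayscale=True)
print(batch.shape)  # (4, 224, 224, 1)
```

Keeping the steps in one function makes it easier to apply exactly the same preprocessing at training time and at prediction time.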

Augmenting and Cleaning Data

Data augmentation enhances training set diversity by applying realistic transformations. It boosts model generalization and helps avoid overfitting. Common augmentations include rotation, flipping, and zooming.
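As a rough illustration of rotation, flipping, and zooming, the sketch below chains Keras preprocessing layers. It assumes a recent TensorFlow 2 release where `RandomFlip`, `RandomRotation`, and `RandomZoom` live under `tf.keras.layers`; the factors of 0.1 and the random stand-in images are arbitrary choices for the example.

```python
import numpy as np
import tensorflow as tf

# Random flip, rotation, and zoom, matching the augmentations named above.
augment = tf.keras.Sequential([
    tf.keras.layers.RandomFlip("horizontal"),
    tf.keras.layers.RandomRotation(0.1),  # up to 10% of a full turn either way
    tf.keras.layers.RandomZoom(0.1),      # zoom in or out by up to 10%
])

# Hypothetical input: eight random 32x32 RGB images with values in 0..1.
images = np.random.rand(8, 32, 32, 3).astype("float32")

# training=True is needed here: these layers pass images through unchanged
# when called in inference mode.
augmented = augment(images, training=True)
print(augmented.shape)  # (8, 32, 32, 3)
```

The transformations change pixel content but not image shape or labels, so the augmented batch can be fed to the same model as the originals.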

Cleaning data is essential to rid the dataset of irrelevant or misleading information. Strategies for cleaning involve:

- Filling in missing values for a complete dataset.

- Smoothing noisy data to ensure coherence in the learning process.

- Removing outliers to maintain the dataset's statistical integrity.

Evaluating and assuring data quality is pivotal for high-quality models. This includes continuous profiling, cleaning, and monitoring. A strong approach to data pre-processing lays a reliable foundation for models, ensuring accurate predictions and robust performance.

Choosing the Right Image Dataset

Choosing the best image dataset is critical for AI image recognition. It directly impacts the accuracy and performance of your model. We'll explore popular datasets suitable for newcomers and how to prepare your dataset efficiently.

Popular Image Datasets for Beginners

Starting with well-structured, renowned datasets can smooth out the learning journey. Datasets like MNIST and CIFAR-10 are highly recommended for beginners. MNIST holds 70,000 labeled images of handwritten digits, and CIFAR-10 offers 60,000 color images organized into 10 groups.

Preparing Your Dataset for Training

Several essential steps should be followed to prepare your dataset for AI. Start by ensuring the data's relevance and quality. Eliminate any redundant features, as they could lead to inaccurate predictions. Having at least 200 images for training is crucial, but collecting more is better.

The next step is to divide your dataset into three parts:

- Training Set: The largest portion of the data, used to teach your model.

- Validation Set: It's vital for adjusting model settings and avoiding overfitting.

- Test Set: Used as a final evaluation to check how well the model performs on new data.
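The three-way split above can be sketched with NumPy alone. The helper name `split_dataset`, the 10% fractions, and the placeholder arrays are assumptions made for illustration, not values prescribed by this guide.

```python
import numpy as np


def split_dataset(images, labels, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Shuffle once, then cut the data into train, validation, and test parts."""
    n = len(images)
    order = np.random.default_rng(seed).permutation(n)
    n_test = int(n * test_fraction)
    n_val = int(n * val_fraction)
    test_idx = order[:n_test]
    val_idx = order[n_test:n_test + n_val]
    train_idx = order[n_test + n_val:]
    return (
        (images[train_idx], labels[train_idx]),
        (images[val_idx], labels[val_idx]),
        (images[test_idx], labels[test_idx]),
    )


# Hypothetical data: 200 blank 32x32 images with labels from 10 classes.
images = np.zeros((200, 32, 32, 3), dtype=np.uint8)
labels = np.arange(200) % 10
train, val, test = split_dataset(images, labels)
print(len(train[0]), len(val[0]), len(test[0]))  # 160 20 20
```

Shuffling before cutting matters when the files are stored class by class, since an unshuffled split could leave whole classes out of the training set.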

Additionally, data augmentation can significantly improve your dataset. Because each augmented image inherits the label of its source image, you gain more labeled examples without additional manual labeling.

Lastly, remember that the quality and structure of your training datasets significantly affect your model's learning and generalization capabilities. Therefore, meticulous preparation is key to obtaining reliable and accurate outcomes.

Building an AI Image Recognition Project

Undertaking the challenge of building an AI image recognition project requires several key steps. These steps merge technical skills with an understanding of the project's goals. This approach ensures the final product is both valuable and applicable.

The first key step is acquiring and initially handling a dataset. Datasets like CIFAR-10, with 60,000 images sorted into 10 classes, are often used because of their broad scope. Each image is 32 x 32 pixels with three color channels, which gives 32 x 32 x 3 = 3,072 values per image. Thus, handling these images effectively is critical for your computer vision project.

Next in the deep learning process is model selection and development. TensorFlow, a library supported by Google since 2015, is a valuable resource. It helps construct neural network components like Conv2D, MaxPooling2D, and dense layers. Python is commonly employed to ensure the seamless incorporation of these steps.

The model is then trained using supervised learning, in which it learns from labeled data points. Key training settings include a batch size of 32, 10 epochs, and a 10% sample held out for validation. The loss function and optimizer drive the learning: binary cross-entropy suits a two-class problem such as cats versus dogs, categorical cross-entropy suits a multi-class dataset such as CIFAR-10, and the Adam optimizer is a common default for both. Together they improve the model's ability to identify images accurately, a central objective in the training phase.
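The training settings described above might look like this in Keras. Treat it as a sketch under stated assumptions: the tiny architecture and the random stand-in data are placeholders so the snippet runs without downloading anything, and it swaps in sparse categorical cross-entropy (the integer-label form of cross-entropy for multi-class problems) because CIFAR-10 has 10 classes. A two-class cats-versus-dogs model would use binary cross-entropy instead.

```python
import numpy as np
import tensorflow as tf

NUM_CLASSES = 10  # CIFAR-10 has 10 categories

# Deliberately small placeholder network built from the layer types named above.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(32, 32, 3)),
    tf.keras.layers.Conv2D(16, 3, activation="relu"),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(NUM_CLASSES, activation="softmax"),
])

model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",  # labels are integers 0..9
    metrics=["accuracy"],
)

# Synthetic stand-in data; replace with real images and labels.
x = np.random.rand(64, 32, 32, 3).astype("float32")
y = np.random.randint(0, NUM_CLASSES, size=(64,))

history = model.fit(
    x, y,
    batch_size=32,         # 32 images per gradient step
    epochs=10,             # 10 passes over the training data
    validation_split=0.1,  # hold out 10% for validation
    verbose=0,
)
print(sorted(history.history.keys()))
```

The returned `history` object holds per-epoch loss and accuracy for both the training and validation data, which is what you would plot to spot overfitting.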

The final phases focus on assessing and using the model. The key is the model's capability to identify different classes accurately. For instance, the model can be tested with an image like "test_image.jpg" to evaluate its capacity to distinguish cats from dogs. Successful deployment involves making the model available to users through a friendly interface. This approach renders the computer vision project not only operational but also user-accessible.

Creating the Training Model

The journey to create an effective model for image recognition starts with gaining insight into neural networks. These networks serve as the fundamental design of any AI model. They comprise interconnected nodes that algorithmically process data across layers. Among these, convolutional neural networks (CNNs) excel in managing visual data.

Understanding Neural Networks

Neural networks mirror the human brain and underpin AI model constructions. They consist of layers that process data, identify patterns, and forecast outcomes. Each layer successively refines the information, simplifying the identification of intricate features. This process is pivotal for precise image interpretation.

Designing a Simple Convolutional Neural Network

Creating a CNN model involves composing it of diverse layers. Convolutional layers extract features from images using filters. Following this, activation layers modify these features into a more understandable form for the network. Pooling layers then condense the spatial properties of features, reducing overfitting risks and enhancing efficiency.
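To make the three layer types concrete, here is a from-scratch NumPy sketch of one convolution, one activation, and one pooling step on a tiny image. It illustrates the idea only and is not how TensorFlow implements these layers; the vertical-edge kernel and the 6x6 test image are made up for the example.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding (cross-correlation, as in CNN layers)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Activation step: keep positive responses, zero out negative ones."""
    return np.maximum(x, 0)


def max_pool_2x2(x):
    """Pooling step: keep the largest value in each non-overlapping 2x2 block."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


# 6x6 image: dark left half, bright right half.
image = np.array([[0, 0, 0, 1, 1, 1]] * 6, dtype=float)
# Filter that responds to dark-to-bright vertical edges.
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)

features = conv2d_valid(image, kernel)  # shape (4, 4), strongest at the edge
activated = relu(features)
pooled = max_pool_2x2(activated)        # shape (2, 2)
print(pooled.tolist())  # [[3.0, 3.0], [3.0, 3.0]]
```

The filter responds only where the dark and bright halves meet, and pooling keeps that response while shrinking the feature map from 4x4 to 2x2.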

Designing models for tasks such as tumor detection in healthcare demands careful layer planning to pinpoint cancerous areas in scans. Similarly, CNNs are critical for airport security and autonomous vehicles to spot risks and obstacles. Their success underscores the importance of meticulous AI model design and testing.

Using Transfer Learning for Efficiency

Transfer learning is instrumental in refining training processes. It allows models to reuse knowledge from pre-trained networks like ResNet or VGG, which have already been trained on large datasets. Training can be faster and more accurate when you adapt these models to your own dataset, for example images collected from Google Images or gathered with a scraping tool such as Scrapy.

Notably, transfer learning markedly decreases the time and computational demands of training. Fast training is possible even at scale: with the resources of Fast.ai, training on a vast dataset like ImageNet has been reported in a mere 18 minutes. Starting from a pre-trained network is especially advantageous in new undertakings with scant data, since it supports rapid yet robust model performance.
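One common way to apply transfer learning in Keras is to freeze a pre-trained network and train only a small new classifier on top. The sketch below assumes ResNet50 from `tf.keras.applications` and a 224x224 input; the helper name `build_transfer_model` and the five-class head are hypothetical. Passing `weights="imagenet"` downloads the pre-trained weights, while `weights=None` builds the same architecture with random weights, which is only useful for checking that the code runs.

```python
import tensorflow as tf


def build_transfer_model(num_classes, weights="imagenet"):
    """Frozen ResNet50 feature extractor plus a small trainable classifier head."""
    base = tf.keras.applications.ResNet50(
        include_top=False,          # drop the original 1000-class ImageNet head
        weights=weights,            # "imagenet" downloads pre-trained weights
        input_shape=(224, 224, 3),
        pooling="avg",              # global average pooling: one vector per image
    )
    base.trainable = False          # keep the pre-trained features fixed

    # Inputs are assumed to be preprocessed beforehand with
    # tf.keras.applications.resnet50.preprocess_input.
    return tf.keras.Sequential([
        tf.keras.Input(shape=(224, 224, 3)),
        base,
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])


# weights=None avoids the download; use the default "imagenet" for real training.
model = build_transfer_model(num_classes=5, weights=None)
print(model.output_shape)  # (None, 5)
```

Because the base is frozen, only the final dense layer's kernel and bias are updated during training, which is why this approach needs far less data and compute than training from scratch.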

In summary, insights into neural network functioning, innovative CNN construction, and transfer learning applications form the bedrock of advanced image recognition. This trio guarantees both the efficacy and efficiency of models, addressing the highly exacting needs of varied real-world contexts.

Training Your AI Model

Starting the AI model training process is pivotal in any image recognition endeavor. It is recommended to begin with a dataset with a minimum of 200 images per category. Data prep is key here. You'll want to use various techniques like resizing and enhancing to get your dataset ready.

Data augmentation can be a game changer: it expands a small training set with realistic variations and reduces the need to collect and label more images. This makes it a practical step before training begins.

As your model trains, it goes through multiple epochs. An epoch means the model has seen the entire training set once. This guide uses 10 epochs as a starting point, but the right number depends on the dataset and model and is best judged from validation accuracy. Convolutional neural networks (CNNs) are the preferred architecture because they extract important image features. They use specific layers to focus on essential details, boosting the overall model's capability.

Three datasets are essential: the training set for fitting the model, the validation set for tracking its progress, and the test set for a final assessment. A common practice is to reserve 10% of your data for validation. A batch size of 32 is a reasonable default that balances memory use and training speed. Tools like TensorFlow, Keras, PyTorch, and OpenCV are crucial for this step. They streamline the model optimization process.

It's noted that spending more time on training often leads to superior results. Regularly optimizing hyperparameters is a must. When testing your model, accuracy assessments are used to gauge its efficiency. Achieving high accuracy is essential, as is ensuring the model works well with new, unseen data. This emphasizes carefully dividing your data into training, validation, and test sets.

Implementing GPU Acceleration

Integrating GPU acceleration is key for efficient deep-learning model training. The parallel processing power of GPUs is pivotal for managing complex calculations and big datasets, and it has made a remarkable impact on AI research. GPUs played a significant role in the success of Alex Krizhevsky's AlexNet in the 2012 ImageNet competition, where it outperformed hand-coded software.

Why GPU is Important for Deep Learning

The role of GPUs in deep learning is significant. In 2011, it was found that 12 NVIDIA GPUs equaled 2,000 CPUs in performance. By 2015, deep learning models powered by GPUs reached superhuman levels, surpassing human ability in the ImageNet challenge. NVIDIA GPUs can speed up the training of deep neural networks by 10-20 times, reducing weeks of work to days.

Setting Up GPU Support in TensorFlow

To set up GPU support in TensorFlow, you need compatible hardware and software. For example, an NVIDIA GeForce GTX 1080 Ti with matching versions of CUDA and cuDNN can greatly speed up computation. One example setup uses Python 3.11, Ubuntu 23.10, and OpenCV 4.9.0 built with CUDA support. You can confirm that OpenCV sees the GPU by checking the CUDA-enabled device count with `cv2.cuda.getCudaEnabledDeviceCount()`. This check covers OpenCV only; TensorFlow reports the GPUs it can use through `tf.config.list_physical_devices('GPU')`.
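To check what TensorFlow itself can see, a short script like the following is usually enough. It is a minimal sketch that assumes TensorFlow 2.x is installed; on a machine without a supported GPU or driver it simply reports zero devices.

```python
import tensorflow as tf

# Ask TensorFlow which GPUs it can use; an empty list means CPU only.
gpus = tf.config.list_physical_devices("GPU")
print(f"TensorFlow {tf.__version__} sees {len(gpus)} GPU(s)")
for gpu in gpus:
    print(" ", gpu.name)
```

If the list is empty on a machine that has a GPU, mismatched CUDA or cuDNN versions are a common cause.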

The partnership between NVIDIA and other companies has grown tremendously. This is due to the significant advantages GPU acceleration offers in deep learning. GPUs are expected to bring substantial changes to technologies like computerized driver assistance. This may lead to an 80% reduction in car accidents in the next 20 years, saving about 1 million lives yearly.

Evaluating Model Performance

Once the AI model is trained, its performance evaluation is key. This step ensures its efficiency. Evaluating the model involves examining specific metrics and deep analysis. This helps us understand how effectively the model classifies data.

Accuracy and Loss Metrics

Accuracy shows how often your model makes correct predictions: it is the percentage of correct predictions out of all predictions. Loss, in contrast, measures how far the model's predictions are from the true labels. Both are crucial since they quickly show whether your AI is learning well. Lower loss signals smaller errors, and higher accuracy means more correct predictions.
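Both metrics are simple enough to compute by hand, which can help build intuition. The NumPy sketch below uses made-up probabilities for four images and three classes; the function names are illustrative, and cross-entropy is assumed as the loss.

```python
import numpy as np


def accuracy(probs, labels):
    """Fraction of rows whose highest-probability class matches the label."""
    return float(np.mean(np.argmax(probs, axis=1) == labels))


def cross_entropy(probs, labels, eps=1e-12):
    """Mean negative log-probability assigned to the correct class."""
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(np.clip(picked, eps, 1.0))))


# Hypothetical model outputs: one row per image, one column per class.
probs = np.array([
    [0.8, 0.1, 0.1],
    [0.2, 0.7, 0.1],
    [0.4, 0.5, 0.1],  # true class is 0, so this prediction is wrong
    [0.1, 0.1, 0.8],
])
labels = np.array([0, 1, 0, 2])

print(accuracy(probs, labels))                 # 0.75
print(round(cross_entropy(probs, labels), 2))  # 0.43
```

Accuracy only counts right and wrong answers, while the loss also reflects confidence: the third image contributes most of the loss because the model gave the correct class a probability of only 0.4.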

Confusion Matrix

The confusion matrix offers detailed insight into your model's performance. It tabulates predictions against true labels, showing strengths and weaknesses class by class. The matrix distinguishes between true positives, true negatives, false positives, and false negatives. This view is vital for targeted improvements, because it reveals errors that a single accuracy figure hides.
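A confusion matrix is just a table of counts, as the NumPy sketch below shows. The cat-versus-dog labels and predictions are invented for the example, and the convention of true classes in rows and predicted classes in columns is an assumption (some tools use the transpose).

```python
import numpy as np


def confusion_matrix(true_labels, predicted_labels, num_classes):
    """Count predictions; rows are true classes, columns are predicted classes."""
    matrix = np.zeros((num_classes, num_classes), dtype=int)
    for t, p in zip(true_labels, predicted_labels):
        matrix[t, p] += 1
    return matrix


# Hypothetical results: 0 = cat, 1 = dog.
y_true = [0, 0, 0, 1, 1, 1, 1, 0]
y_pred = [0, 1, 0, 1, 1, 0, 1, 0]

cm = confusion_matrix(y_true, y_pred, num_classes=2)
tn, fp, fn, tp = cm.ravel()  # treating "dog" (class 1) as the positive class
print(tn, fp, fn, tp)        # 3 1 1 3

precision = tp / (tp + fp)   # of the images predicted "dog", how many were dogs
recall = tp / (tp + fn)      # of the actual dogs, how many were found
print(precision, recall)     # 0.75 0.75
```

With more than two classes, the off-diagonal cells show exactly which pairs of classes the model confuses.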

Using accuracy and loss metrics alongside a confusion matrix provides a strong framework. It aids in pinpointing areas for improvement. This framework steers you towards a model that is both effective and precise.

Deploying Your AI Image Recognition Model

Deployment marks your AI image recognition project's critical shift from theoretical to practical. It involves key actions: model exporting and interface development for AI projects. These steps are vital for the model to function outside controlled development environments.

Exporting the Model

AI model deployment requires exporting the trained model into a suitable format. For instance, if your model targets mobile devices, it should be converted to TensorFlow Lite. Keras makes the process easier because it can save the model's architecture, weights, and optimizer state.
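The two export paths might be sketched as follows. This assumes a recent TensorFlow 2 release that supports the `.keras` file format; the helper name `export_model`, the file names, and the tiny placeholder model are illustrative only, and converter behavior can vary between TensorFlow versions.

```python
import os
import tempfile

import tensorflow as tf


def export_model(model, directory):
    """Save `model` in Keras format and as a TensorFlow Lite flatbuffer."""
    keras_path = os.path.join(directory, "model.keras")
    tflite_path = os.path.join(directory, "model.tflite")

    model.save(keras_path)  # full model for later reloading or further training

    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    tflite_bytes = converter.convert()  # compact format for mobile devices
    with open(tflite_path, "wb") as f:
        f.write(tflite_bytes)
    return keras_path, tflite_path


# Tiny placeholder model; substitute your trained model here.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(32, 32, 3)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(10, activation="softmax"),
])

with tempfile.TemporaryDirectory() as out_dir:
    keras_path, tflite_path = export_model(model, out_dir)
    print(os.path.basename(keras_path), os.path.basename(tflite_path))
```

The `.tflite` file is the one to bundle with an Android app, while the `.keras` file is the one to keep for retraining.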

When exporting, the model must meet the performance goals set during testing. Validation datasets help confirm the model's reliability. Data augmentation during training also helps here, since a model trained on more varied images tends to hold up better after deployment.

Building a Simple Interface

After exporting, the focus shifts to creating a user-friendly interface. Developing a straightforward interface for AI projects is crucial. It lets users interact smoothly with the model. The interface should support uploading images, conducting analyses, and easily displaying findings.

Consider tools like Flask or Django for web apps and Android Studio for mobile applications to create this interface. These frameworks empower you to build personalized interfaces that work seamlessly with your model.
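For the web route, a Flask endpoint that accepts an uploaded image could start out like this. It is a sketch under stated assumptions: the `/predict` route, the `image` form field, and the `classify` function are hypothetical, and `classify` is a stub that returns a fixed answer where a real app would decode the image and call the trained model.

```python
import io

from flask import Flask, jsonify, request

app = Flask(__name__)


def classify(image_bytes):
    """Placeholder for real inference; returns a fixed label and score."""
    # Hypothetical stub: a real app would decode the bytes and run the model.
    return {"label": "cat", "confidence": 0.5, "bytes_received": len(image_bytes)}


@app.route("/predict", methods=["POST"])
def predict():
    upload = request.files.get("image")
    if upload is None:
        return jsonify(error="attach a file under the 'image' field"), 400
    return jsonify(classify(upload.read()))


# Exercise the endpoint with Flask's test client, without starting a server.
client = app.test_client()
response = client.post(
    "/predict",
    data={"image": (io.BytesIO(b"fake image bytes"), "test_image.jpg")},
    content_type="multipart/form-data",
)
print(response.status_code, response.get_json())
```

Returning JSON keeps the endpoint usable from both a web page and a mobile client.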

Complete the process with extensive image testing, including images not part of the training. This step is vital to verify the interface's performance in real-world scenarios. Regular tests and feedback are necessary to keep the AI model deployment smooth and beneficial for users.

"Deployment is the final step that brings the AI image recognition project to practical, real-world use. Ensuring seamless integration and user accessibility is key to a successful deployment."

Mastering AI model deployment and building a user-friendly interface distinguish superior AI projects. By adhering to these suggestions, your AI product stands a better chance of broad acceptance and efficient use.

Summary

Finishing your DIY AI image recognition project is a significant step in exploring deep learning, using TensorFlow, and applying machine learning. This guide has taken you from the beginning, setting up your environment, to the end, with a complete model. As AI progresses, the ways we can use image recognition are growing fast, thanks to new models such as YOLOv9, released in early 2024.

You've learned the key parts of creating a strong image recognition model in this project. This included preparing data, training the model, and measuring its success with various tests. Everything from accuracy metrics to confusion matrices has helped make your AI model work. The skills you've developed are a strong basis for future AI projects, no matter how complex.

As tech focuses more on AI and seeing as humans do, staying current is vital. This guide has given you the tools to succeed in this exciting, ever-changing area. The true success of an image recognition project is in its real-world application. So, go forward into your next AI adventure knowing you're prepared to make a difference.

FAQ

What is AI Image Recognition?

AI Image Recognition refers to software that identifies objects, scenes, and actions in images using algorithms and machine learning. The process uses supervised learning, where the model improves by training on labeled images over time.

Why Choose Image Recognition for Your First AI Project?

For beginners, image recognition is a clear and engaging entry to AI. It holds value for personal uses, like sorting photos or identifying content. It's accessible and offers practical applications to learn from.

What is TensorFlow?

TensorFlow, a Google creation, is a powerhouse in machine learning. It stands out for deep learning methods in image recognition. This library supports multiple languages, including Java and Python, making it a favorite in technology.

Why Use TensorFlow for Image Recognition?

TensorFlow shines in training deep learning models efficiently, perfect for image recognition. It provides the necessary infrastructure and tools for creating, testing, and deploying computer vision models. This streamlines the development of image recognition algorithms.

How Do You Set Up a Development Environment?

To prepare your environment, install Python and TensorFlow. These are key for your machine learning projects. Also, configure an IDE. It eases project management, integrates with TensorFlow, and simplifies debugging.

What Are the Essentials of Data Pre-processing?

Data pre-processing involves readying image data for analysis. Steps may include normalization, data augmentation, and cleaning. Each is crucial for practical model training, ensuring your model can make sense of the data it's fed.

What Are Neural Networks?

Neural networks model the brain's neural connections to detect data patterns. CNNs excel at image recognition. They use layers to sift through image data and pinpoint essential features.

Why Is GPU Acceleration Important for Deep Learning?

GPU acceleration drastically cuts down training time over CPUs. This is essential for image recognition models dealing with vast amounts of data. It allows for the efficient processing of complex tasks.