How to Train an Image Classification Model

Image classification has transformed industries from e-commerce to self-driving cars. An AI model learns to classify images through deep learning, typically with convolutional neural networks. It can then recognize and categorize objects in new images.

In this guide, you will analyze a dataset, build an input pipeline, design a convolutional neural network (CNN), train an AI model, and iteratively improve its performance. Along the way, you will learn the machine learning workflow and techniques for preventing overfitting, such as data augmentation and dropout.

Quick Take

- You can train an image classification model using example datasets.

- The machine learning workflow includes data analysis, input pipeline construction, AI model construction, training, and evaluation.

- Overfitting reduction techniques include data augmentation and dropout.

Introduction to Image Classification

Image classification is a computer vision task in which an artificial intelligence model determines an image's class.

There are several types of image classification methods, including:

- Binary classification. Categorizing elements into two distinct categories.

- Multiclass classification. Categorizing elements into three or more classes.

- Multilabel classification. Assigning elements to multiple labels at the same time.

- Hierarchical classification. Organizing classes into a hierarchical structure based on similarity.
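Multiclass and multilabel classification differ mainly in how labels are encoded and how predictions are read back. The minimal NumPy sketch below shows one-hot versus multi-hot targets. It also shows argmax decoding versus per-class thresholding. The four-class setup, the 0.5 threshold, and all function names are illustrative assumptions. Binary classification is the two-class case, usually handled with a single output and a threshold.

```python
import numpy as np

NUM_CLASSES = 4  # hypothetical number of classes, for illustration only

def one_hot(class_index: int, num_classes: int = NUM_CLASSES) -> np.ndarray:
    """Multiclass target: exactly one class is marked 1."""
    target = np.zeros(num_classes)
    target[class_index] = 1.0
    return target

def multi_hot(class_indices, num_classes: int = NUM_CLASSES) -> np.ndarray:
    """Multilabel target: any number of classes can be marked 1."""
    target = np.zeros(num_classes)
    target[np.asarray(list(class_indices), dtype=int)] = 1.0
    return target

def decode_multiclass(scores: np.ndarray) -> int:
    """Multiclass prediction: the single highest-scoring class."""
    return int(np.argmax(scores))

def decode_multilabel(probabilities: np.ndarray, threshold: float = 0.5) -> list:
    """Multilabel prediction: every class whose probability passes the threshold."""
    return np.flatnonzero(probabilities >= threshold).tolist()

print(one_hot(2))          # target for an image of class 2
print(multi_hot([0, 3]))   # target for an image tagged with classes 0 and 3
print(decode_multiclass(np.array([0.1, 0.2, 0.6, 0.1])))   # 2
print(decode_multilabel(np.array([0.9, 0.2, 0.4, 0.7])))   # [0, 3]
```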

The image classification process has three main steps: image preprocessing, feature extraction, and classification. Preprocessing techniques such as resizing and normalization prepare the image for analysis. Feature extraction identifies visual patterns using methods such as edge detection or deep learning algorithms. The extracted features are then used to classify the image into predefined categories.
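The three steps can be illustrated with a deliberately tiny, hand-crafted pipeline. In a CNN, feature extraction and classification are learned from data. Here they are hard-coded so each step stays visible. The two features (mean brightness and a crude edge-strength measure), the nearest-centroid classifier, and the "plain"/"striped" class centroids are all hypothetical choices for this sketch.

```python
import numpy as np

def preprocess(image: np.ndarray) -> np.ndarray:
    """Step 1: scale 8-bit pixel values to [0, 1]."""
    return image.astype(np.float32) / 255.0

def extract_features(image: np.ndarray) -> np.ndarray:
    """Step 2: mean brightness plus edge strength.

    Edge strength is the mean absolute difference between neighboring pixels,
    a very simple form of edge detection.
    """
    horizontal_edges = np.abs(np.diff(image, axis=1)).mean()
    vertical_edges = np.abs(np.diff(image, axis=0)).mean()
    return np.array([image.mean(), horizontal_edges + vertical_edges])

def classify(features: np.ndarray, centroids: dict) -> str:
    """Step 3: pick the class whose mean feature vector (centroid) is closest."""
    return min(centroids, key=lambda name: np.linalg.norm(features - centroids[name]))

# Hypothetical centroids, e.g. averaged from labeled training images.
centroids = {"plain": np.array([0.5, 0.0]), "striped": np.array([0.5, 1.0])}

plain_image = np.full((8, 8), 128, dtype=np.uint8)
striped_image = np.zeros((8, 8), dtype=np.uint8)
striped_image[:, ::2] = 255  # alternating white and black columns

print(classify(extract_features(preprocess(plain_image)), centroids))    # plain
print(classify(extract_features(preprocess(striped_image)), centroids))  # striped
```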

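Real-world applications of image classification

In self-driving cars, image classification helps detect and respond to road objects such as pedestrians, traffic signs, and other vehicles. In e-commerce, it sorts products and suggests items based on their visual similarity.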

Prerequisites for building an image classification model

Understand the structure of image data

To train an image classification model effectively, you need to organize your data. Create separate folders for your training and test sets. The training set folder should contain a CSV file that lists each image's file name and label. It should also contain a subfolder holding the training images themselves.

Your initial training set should contain at least 30 images per tag (label). Use formats such as .jpg, .png, .bmp, or .gif, and keep each file under 6 MB. For best performance, the shortest edge of each image should be at least 256 pixels.
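Checking these requirements before training can catch problems early. The sketch below reads a labels CSV with the standard `csv` module. It reports unsupported formats, oversized files, small images, and under-represented tags. It assumes a hypothetical CSV layout with `filename`, `label`, `size_bytes`, `width`, and `height` columns. In practice you might compute the size and dimensions from the files themselves. It also treats 6 MB as 6 × 1024 × 1024 bytes.

```python
import csv
import io
from collections import Counter
from pathlib import PurePath

MIN_IMAGES_PER_TAG = 30
ALLOWED_EXTENSIONS = {".jpg", ".png", ".bmp", ".gif"}
MAX_FILE_BYTES = 6 * 1024 * 1024   # 6 MB, assuming 1 MB = 1024 * 1024 bytes
MIN_SHORT_EDGE = 256               # pixels

def check_dataset(csv_file) -> list:
    """Return human-readable problems found in a labels CSV.

    Assumes hypothetical columns: filename, label, size_bytes, width, height.
    """
    problems = []
    counts = Counter()
    for row in csv.DictReader(csv_file):
        name = row["filename"]
        counts[row["label"]] += 1
        if PurePath(name).suffix.lower() not in ALLOWED_EXTENSIONS:
            problems.append(f"{name}: unsupported format")
        if int(row["size_bytes"]) >= MAX_FILE_BYTES:
            problems.append(f"{name}: file is 6 MB or larger")
        if min(int(row["width"]), int(row["height"])) < MIN_SHORT_EDGE:
            problems.append(f"{name}: shortest edge below {MIN_SHORT_EDGE}px")
    for label, count in sorted(counts.items()):
        if count < MIN_IMAGES_PER_TAG:
            problems.append(f"label '{label}': only {count} image(s)")
    return problems

sample = io.StringIO(
    "filename,label,size_bytes,width,height\n"
    "shirt_001.jpg,shirt,120000,512,384\n"
    "shoe_001.tiff,shoe,90000,300,300\n"
    "shoe_002.png,shoe,7000000,200,640\n"
)
problems = check_dataset(sample)
for problem in problems:
    print(problem)
```

For a real folder, you would open the CSV with `open(path, newline="")` and pass the file object to `check_dataset`.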

A well-structured dataset improves the accuracy of your AI model and simplifies development.

Prepare your development environment

Google Colab provides a cloud-based Jupyter Notebook environment with access to GPUs and TPUs.

Alternatively, you can create a local environment by installing the necessary libraries and frameworks. The main components of a local environment include:

- TensorFlow and Keras for building and training deep learning models.

- NumPy and Pandas for data manipulation and analysis.

- Matplotlib and OpenCV for visualization and image processing.

Keep these libraries up to date to get the latest features and fixes.
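A quick way to confirm which of the libraries listed above are installed, and at which versions, is the standard-library `importlib.metadata` module. The package names below are the usual PyPI distribution names. This is an assumption: OpenCV, for example, may be installed as `opencv-python-headless` instead.

```python
from importlib.metadata import PackageNotFoundError, version

# PyPI distribution names for the libraries listed above (adjust to your setup).
PACKAGES = ["tensorflow", "keras", "numpy", "pandas", "matplotlib", "opencv-python"]

def installed_versions(packages=PACKAGES) -> dict:
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = version(name)
        except PackageNotFoundError:
            versions[name] = None
    return versions

for name, ver in installed_versions().items():
    print(f"{name:15} {ver or 'not installed'}")
```

Packages that are missing or outdated can then be installed or upgraded with `pip install --upgrade` followed by the package names.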

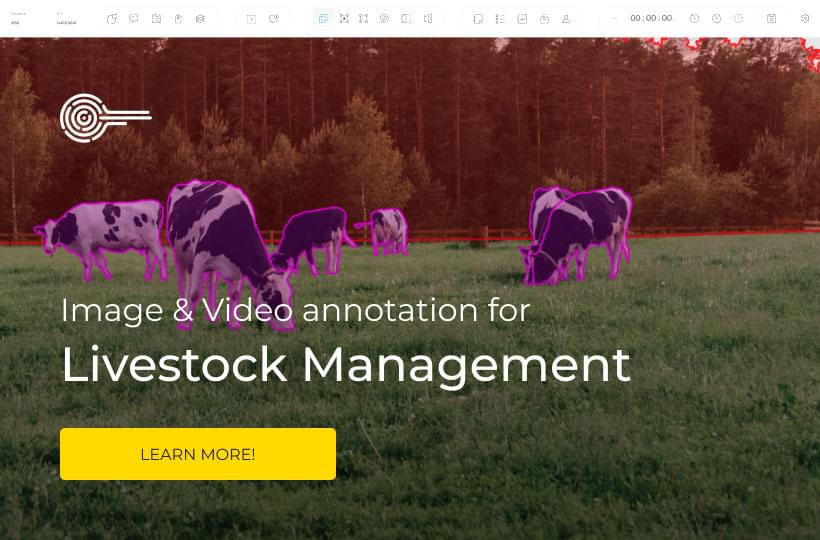

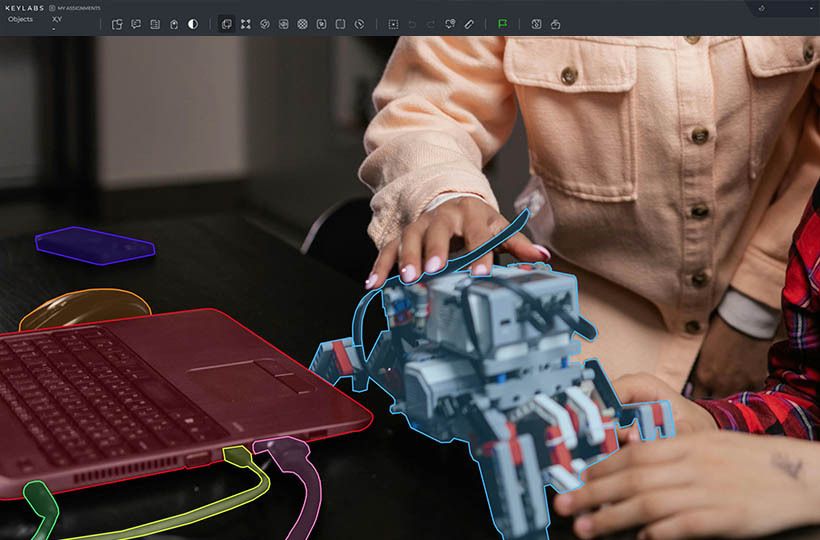

You can also use ready-made tools and services for building image classification models. For example, Amazon Rekognition offers pre-trained models for various use cases. At Keylabs, we maintain detailed documentation for our data labeling and annotation platform for computer vision datasets.

Obtaining and Preparing the Dataset

To get started, you'll need the right dataset. The Fashion MNIST dataset, from Zalando Research, contains 70,000 grayscale images of clothing and accessories, all 28x28 pixels in size.

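Next, download the dataset and save its contents to a folder on your computer. If you use Keras, `tf.keras.datasets.fashion_mnist.load_data()` downloads the dataset for you. It returns the data as NumPy arrays, already split into training and test sets.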
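If you download the raw files instead, they come in the gzipped IDX format (for example, `train-images-idx3-ubyte.gz`). An IDX file starts with two zero bytes, a data-type code (0x08 for unsigned bytes), and the number of dimensions. Next come the dimension sizes as big-endian 32-bit integers, followed by the raw data. The parser below is a minimal sketch of that layout. The folder path in the commented usage lines is hypothetical. The demo builds a tiny fake IDX file in memory so the parser can be checked without downloading anything.

```python
import gzip
import struct

import numpy as np

def parse_idx(raw: bytes) -> np.ndarray:
    """Parse an uncompressed IDX file whose values are unsigned bytes."""
    zero, dtype_code, ndim = struct.unpack(">HBB", raw[:4])
    if zero != 0 or dtype_code != 0x08:
        raise ValueError("not an unsigned-byte IDX file")
    shape = struct.unpack(f">{ndim}I", raw[4:4 + 4 * ndim])   # big-endian dimension sizes
    data = np.frombuffer(raw, dtype=np.uint8, offset=4 + 4 * ndim)
    return data.reshape(shape)

def load_idx_gz(path: str) -> np.ndarray:
    """Decompress and parse a gzipped IDX file."""
    with gzip.open(path, "rb") as f:
        return parse_idx(f.read())

# Hypothetical paths; point them at wherever you saved the files.
# images = load_idx_gz("data/fashion/train-images-idx3-ubyte.gz")   # shape (60000, 28, 28)
# labels = load_idx_gz("data/fashion/train-labels-idx1-ubyte.gz")   # shape (60000,)

# Self-check with a fake 3-D IDX file: header (0, 0x08, 3 dims, 2x2x2), then 8 data bytes.
fake = struct.pack(">HBBIII", 0, 0x08, 3, 2, 2, 2) + bytes(range(8))
print(parse_idx(fake).shape)  # (2, 2, 2)
```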

This dataset is divided into two parts:

- Training set. Contains 60,000 images to train the AI model.

- Test set. Includes 10,000 images to test the performance of the AI model.

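Each image in the Fashion MNIST dataset has a label, an integer from 0 to 9, that identifies the class it belongs to. The dataset contains 10 classes of clothing and accessories:

- 0: T-shirt/top

- 1: Trouser

- 2: Pullover

- 3: Dress

- 4: Coat

- 5: Sandal

- 6: Shirt

- 7: Sneaker

- 8: Bag

- 9: Ankle boot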

Splitting the data into training and test sets

Splitting the data lets you test how well the AI model performs on new data. The AI model learns from the annotated images in the training set. The test set measures how well it generalizes to images it has not seen.

Keeping the two sets separate ensures a fair evaluation, so the test score reflects the AI model's ability to classify new images.
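Fashion MNIST already ships with a fixed train/test split. With your own dataset, you have to make the split yourself. A minimal sketch, assuming a 20% test fraction and a fixed random seed (both arbitrary choices): shuffle the sample indices once, then cut them into two disjoint sets. The same function can be applied again to the training indices to carve out a validation set. This simple version does not stratify by class.

```python
import numpy as np

def split_indices(n_samples: int, test_fraction: float = 0.2, seed: int = 42):
    """Shuffle sample indices once and cut them into disjoint train/test sets."""
    rng = np.random.default_rng(seed)        # fixed seed -> reproducible split
    shuffled = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

train_idx, test_idx = split_indices(1000, test_fraction=0.2)
print(len(train_idx), len(test_idx))  # 800 200
```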

Defining Convolutional Neural Network Architecture

The architecture of a convolutional neural network (CNN) is built around convolutional layers. These layers apply filters to the input image to detect local patterns and features. Each filter slides across the image. At every position, the filter values are multiplied element-wise with the underlying pixels and summed, producing a feature map. Filter size is a design choice that depends on the task, and 3x3 filters are the most common.
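The sliding multiply-and-sum can be written out directly. This NumPy sketch applies one 3x3 filter with stride 1 and no padding. Like convolution layers in TensorFlow and PyTorch, it computes cross-correlation, meaning the kernel is not flipped. The vertical-edge filter and the toy image are illustrative choices. The resulting feature map is non-zero only where the image changes from dark to bright.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` with stride 1 and no padding."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            feature_map[i, j] = np.sum(patch * kernel)  # element-wise multiply, then sum
    return feature_map

# Toy image: dark (0) on the left, bright (1) on the right.
image = np.array([[0, 0, 0, 1, 1, 1]] * 5, dtype=float)
vertical_edge = np.array([[-1, 0, 1],
                          [-1, 0, 1],
                          [-1, 0, 1]], dtype=float)
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)  # each row responds only around the dark-to-bright boundary
```

A 5x6 input and a 3x3 filter give a 3x4 feature map, because no padding is added at the borders.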

After each convolution, an activation function introduces nonlinearity, which lets the network learn complex patterns. The most common choice is ReLU (rectified linear unit), which replaces negative values with zero. Because its gradient is 1 for every positive input, ReLU speeds up learning and reduces the risk of vanishing gradients.
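The vanishing-gradient point can be made concrete. During backpropagation, the local gradients of stacked layers are multiplied together. The sketch compares ReLU with the sigmoid function, which is used here only as a point of comparison. The "10 layers, same input at every layer" setup is a deliberate simplification.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(0.0, x)           # negative values become 0

def relu_grad(x: np.ndarray) -> np.ndarray:
    return (x > 0).astype(float)        # 1 for positive inputs, 0 otherwise

def sigmoid_grad(x: np.ndarray) -> np.ndarray:
    s = 1.0 / (1.0 + np.exp(-x))
    return s * (1.0 - s)                # never larger than 0.25

x = np.array([-2.0, -0.5, 0.5, 2.0])
print(relu(x), relu_grad(x))

# Multiply the local gradient at x = 2.0 across 10 stacked layers.
depth = 10
relu_chain = relu_grad(np.array([2.0]))[0] ** depth        # stays 1.0
sigmoid_chain = sigmoid_grad(np.array([2.0]))[0] ** depth  # about 1.6e-10
print(relu_chain, sigmoid_chain)
```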

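Batch normalization (BatchNorm2d in PyTorch, BatchNormalization in Keras) and max pooling (MaxPool2d in PyTorch, MaxPooling2D in Keras) improve CNN performance. BatchNorm2d normalizes the previous layer's activations, reduces internal covariate shift, and speeds up learning. MaxPool downsamples each feature map by keeping the largest value in each window. This preserves the strongest features while reducing the spatial dimensions, and the number of feature maps stays the same.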
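Both operations are short in NumPy. This sketch uses the PyTorch-style (N, C, H, W) layout, whereas Keras defaults to channels-last. It shows training-mode batch normalization, which normalizes each channel with statistics taken over the batch and spatial dimensions. It does not show the running averages that frameworks use at inference time. The pooling function is 2x2 max pooling with stride 2. It halves height and width while leaving the channel count unchanged.

```python
import numpy as np

def batch_norm_2d(x: np.ndarray, gamma: np.ndarray, beta: np.ndarray, eps: float = 1e-5):
    """Training-mode batch norm for activations shaped (N, C, H, W)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)   # one mean per channel
    var = x.var(axis=(0, 2, 3), keepdims=True)     # one variance per channel
    x_hat = (x - mean) / np.sqrt(var + eps)
    # Learnable per-channel scale (gamma) and shift (beta).
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)

def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """2x2 max pooling with stride 2 on (N, C, H, W); H and W must be even."""
    n, c, h, w = x.shape
    # Group pixels into 2x2 windows, then take the maximum inside each window.
    return x.reshape(n, c, h // 2, 2, w // 2, 2).max(axis=(3, 5))

rng = np.random.default_rng(0)
activations = rng.normal(loc=3.0, scale=2.0, size=(8, 4, 6, 6))  # batch of 8, 4 channels
normalized = batch_norm_2d(activations, gamma=np.ones(4), beta=np.zeros(4))
pooled = max_pool_2x2(normalized)
print(pooled.shape)  # (8, 4, 3, 3)
```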

As features pass through successive convolution and pooling layers, they become more abstract and high-level. The final feature maps are flattened into a vector and fed into fully connected (linear) layers for classification. These layers learn how the extracted features relate to the target classes. The last layer, typically followed by a softmax, outputs the predicted probability for each class.
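A worked example helps show how the spatial size shrinks before flattening and how the final layer produces probabilities. The architecture below is hypothetical: two blocks of 3x3 convolution (no padding) plus 2x2 pooling on a 28x28 Fashion MNIST image, with 64 filters in the last convolution. It is followed by a dense layer with random weights that maps the flattened vector to 10 class scores and a softmax.

```python
import numpy as np

def conv_out(size: int, kernel: int = 3, stride: int = 1, padding: int = 0) -> int:
    """Output height/width of a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size: int, window: int = 2) -> int:
    """Output height/width of non-overlapping pooling."""
    return size // window

size = 28
size = pool_out(conv_out(size))   # conv 3x3: 28 -> 26, pool: 26 -> 13
size = pool_out(conv_out(size))   # conv 3x3: 13 -> 11, pool: 11 -> 5
filters = 64                      # channels from the last conv layer (assumption)
flattened = size * size * filters
print(flattened)  # 1600

def softmax(logits: np.ndarray) -> np.ndarray:
    exp = np.exp(logits - logits.max())   # subtract the max for numerical stability
    return exp / exp.sum()

# Dense layer: 1600 features -> 10 class scores -> probabilities.
rng = np.random.default_rng(0)
features = rng.normal(size=flattened)             # stands in for the flattened feature maps
weights = rng.normal(scale=0.01, size=(flattened, 10))
bias = np.zeros(10)
probabilities = softmax(features @ weights + bias)
predicted_class = int(np.argmax(probabilities))
```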

The choice of CNN architecture depends on the complexity of the task and the resources available. Popular architectures for image classification include ResNet50, VGG19, Xception, and Inception. They are available pre-trained on large datasets such as ImageNet and can be fine-tuned for specific tasks using transfer learning.

Image Data Loading and Preprocessing

Understanding the structure and format of your image files is key to success. During training, images are loaded in batches and resized to a standard size.
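Batch loading usually means shuffling the sample order and then handing the model fixed-size slices. For real image folders, Keras's `tf.keras.utils.image_dataset_from_directory` handles this, with `batch_size` and `image_size` arguments. The NumPy generator below is only a minimal sketch of the idea, assuming images that are already loaded and resized into one array. The final batch is allowed to be smaller.

```python
import numpy as np

def iterate_batches(images: np.ndarray, labels: np.ndarray, batch_size: int = 32,
                    shuffle: bool = True, seed: int = 0):
    """Yield (image_batch, label_batch) pairs; the last batch may be smaller."""
    order = np.arange(len(images))
    if shuffle:
        np.random.default_rng(seed).shuffle(order)   # pass a different seed each epoch
    for start in range(0, len(order), batch_size):
        idx = order[start:start + batch_size]
        yield images[idx], labels[idx]

images = np.zeros((100, 28, 28), dtype=np.uint8)   # placeholder images, already 28x28
labels = np.arange(100) % 10
batch_sizes = [len(x) for x, _ in iterate_batches(images, labels, batch_size=32)]
print(batch_sizes)  # [32, 32, 32, 4]
```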

Data Augmentation Methods

Data augmentation exposes the AI model to more variations of the data and reduces overfitting. It applies random transformations to training images, artificially expanding the size and diversity of the dataset. Methods include:

- Random flips (horizontal and/or vertical).

- Random rotations.

- Random scaling and cropping.

- Adjusting brightness, contrast, and saturation.

- Adding noise or blur.

These transformations can be implemented with libraries such as TensorFlow and Keras, for example with the Keras preprocessing layers RandomFlip, RandomRotation, and RandomZoom.
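To show what such transformations do to the pixel data, here is a minimal NumPy sketch for a single grayscale image with values in [0, 1]. It applies a random horizontal flip and a random rotation by a multiple of 90 degrees. Small-angle rotations need interpolation, which the Keras layers provide. It then takes a random crop from a reflect-padded copy, adjusts brightness, and adds Gaussian noise. The probabilities and ranges are arbitrary illustrative values, not recommendations.

```python
import numpy as np

def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return a randomly transformed copy of a 2-D image with values in [0, 1]."""
    out = image
    if rng.random() < 0.5:                           # random horizontal flip
        out = out[:, ::-1]
    out = np.rot90(out, k=rng.integers(0, 4))        # random rotation by 0/90/180/270 degrees
    h, w = out.shape
    padded = np.pad(out, 2, mode="reflect")          # pad 2 px, then crop back to h x w
    top, left = rng.integers(0, 5, size=2)           # random crop offset (scaling/cropping)
    out = padded[top:top + h, left:left + w]
    out = out * rng.uniform(0.8, 1.2)                # random brightness
    out = out + rng.normal(0.0, 0.02, size=out.shape)  # Gaussian noise
    return np.clip(out, 0.0, 1.0)                    # keep values in the valid range

rng = np.random.default_rng(0)
image = rng.random((28, 28))
augmented = [augment(image, rng) for _ in range(5)]  # five different variants of one image
print(augmented[0].shape)  # (28, 28)
```

Because the transformations are random, each epoch sees slightly different versions of the same training images.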

Image Normalization and Resizing

Normalization scales pixel values to a fixed range, typically between 0 and 1. This usually speeds up training and improves performance. Resizing images to a standard size is needed because every image in a batch must have the same shape. It is also required for pretrained CNN architectures, which expect a specific input size.

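The Keras ImageDataGenerator class can handle both steps. Creating it as `ImageDataGenerator(rescale=1./255, validation_split=0.2)` normalizes pixel values by dividing by 255 and sets aside 20% of the images for validation. Passing `target_size=(180, 180)` to its `flow_from_directory` method resizes images to 180x180 pixels as they are loaded. In recent TensorFlow versions, ImageDataGenerator is not recommended for new code. Its documented replacement is `tf.keras.utils.image_dataset_from_directory` combined with preprocessing layers such as `Rescaling`.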
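To see what normalization and resizing do to the pixel data, here is a minimal NumPy sketch. Dividing by 255 maps 8-bit values into [0, 1]. Nearest-neighbor resizing copies the closest source pixel for each output pixel. Real libraries usually default to smoother interpolation such as bilinear. The 180x180 target matches the example size in the text, and the random input image is a placeholder.

```python
import numpy as np

def normalize(image: np.ndarray) -> np.ndarray:
    """Scale 8-bit pixel values from [0, 255] to [0, 1]."""
    return image.astype(np.float32) / 255.0

def resize_nearest(image: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbor resize: each output pixel copies the closest input pixel."""
    in_h, in_w = image.shape[:2]
    rows = np.arange(out_h) * in_h // out_h   # source row for each output row
    cols = np.arange(out_w) * in_w // out_w   # source column for each output column
    return image[rows[:, None], cols]

raw = np.random.default_rng(0).integers(0, 256, size=(28, 28), dtype=np.uint8)
prepared = resize_nearest(normalize(raw), 180, 180)
print(prepared.shape, prepared.min() >= 0.0, prepared.max() <= 1.0)  # (180, 180) True True
```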

All of these techniques improve the performance and reliability of the image classification model.

Training an Image Classification Model

Before training, set the hyperparameters that control the behavior of the AI model during training. These include:

- Epochs. The number of times the entire training dataset is passed through the AI model. More epochs give the model more opportunities to learn, but too many can lead to overfitting.

- Batch size. The number of training samples processed in each iteration before the AI model weights are updated. Smaller batches need less memory and update the weights more often, but each update is noisier. Larger batches use hardware more efficiently but need more memory.

- Learning rate. The step size at which the AI model weights are updated during optimization. A higher learning rate can speed up training, but if it is too high, the optimizer overshoots the optimal solution and training can become unstable.

- Loss function. A function that measures the discrepancy between the AI model's predictions and the actual labels. Common examples are cross-entropy loss, the standard choice for classification, and mean squared error.

- Optimizer. An algorithm that updates the weights of an AI model based on gradients computed during backpropagation. Optimizers include Adam, RMSprop, and Stochastic Gradient Descent (SGD).
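The sketch below shows where each hyperparameter enters a training loop. To keep it short and dependency-free, the model is a linear softmax classifier on synthetic 2-D data rather than a CNN. It is trained with cross-entropy loss and plain mini-batch SGD. The values chosen for epochs, batch size, and learning rate are illustrative, not tuned recommendations.

```python
import numpy as np

# Hyperparameters (illustrative values)
EPOCHS = 20
BATCH_SIZE = 16
LEARNING_RATE = 0.1

def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(probs: np.ndarray, labels: np.ndarray) -> float:
    """Mean negative log-probability of the correct class."""
    return float(-np.mean(np.log(probs[np.arange(len(labels)), labels] + 1e-12)))

# Synthetic data: 3 classes, 2 features, points scattered around 3 centers.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 3.0], [3.0, 0.0], [-3.0, -3.0]])
labels = rng.integers(0, 3, size=300)
features = centers[labels] + rng.normal(size=(300, 2))

weights = np.zeros((2, 3))
bias = np.zeros(3)
history = []
for epoch in range(EPOCHS):                            # one epoch = one full pass over the data
    order = rng.permutation(len(features))
    for start in range(0, len(order), BATCH_SIZE):     # one weight update per batch
        idx = order[start:start + BATCH_SIZE]
        x, y = features[idx], labels[idx]
        probs = softmax(x @ weights + bias)
        grad_logits = probs.copy()
        grad_logits[np.arange(len(y)), y] -= 1.0       # d(cross-entropy)/d(logits)
        grad_logits /= len(y)
        weights -= LEARNING_RATE * (x.T @ grad_logits) # SGD step scaled by the learning rate
        bias -= LEARNING_RATE * grad_logits.sum(axis=0)
    history.append(cross_entropy(softmax(features @ weights + bias), labels))

accuracy = float(np.mean(np.argmax(features @ weights + bias, axis=1) == labels))
print(f"loss after epoch 1: {history[0]:.3f}, after epoch {EPOCHS}: {history[-1]:.3f}")
```

In Keras, the same choices appear as the `epochs` and `batch_size` arguments to `model.fit`, along with the loss and optimizer passed to `model.compile`.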

Monitoring Training Progress

Metrics to watch:

- Training Loss. The value of the loss function on the training dataset. It should decrease as the AI model learns and improves its predictions.

- Validation Loss. The loss computed on a separate validation dataset, used to assess the AI model's ability to generalize. If the validation loss increases while the training loss keeps decreasing, the model is overfitting.

- Accuracy. The percentage of correctly classified images in the training and validation datasets. Higher accuracy means better performance for the AI model.
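Watching the validation loss can be automated. Keras provides this as `tf.keras.callbacks.EarlyStopping` (with `monitor`, `patience`, and `restore_best_weights` arguments). The plain-Python class below is a minimal sketch of the same idea. It stops once the validation loss has not improved for `patience` consecutive epochs. The loss values are hypothetical, chosen to show a curve that bottoms out and then rises as the model starts to overfit.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def update(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience

# Hypothetical validation losses: improving until epoch 3, then rising.
val_loss = [0.95, 0.70, 0.58, 0.55, 0.57, 0.60, 0.64, 0.69]

stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_loss):
    if stopper.update(epoch, loss):
        stopped_at = epoch
        break
print(f"stop at epoch {stopped_at}; best epoch was {stopper.best_epoch}")
```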

Overfitting avoidance methods

To reduce overfitting, the following techniques are used:

- L1 or L2 regularization. Adds a penalty term on the AI model weights to the loss function, which discourages large weight values.

- Dropout. Randomly deactivates a fraction of neurons at each training step. This forces the AI model to learn robust, generalized features instead of relying on a few neurons.

- Data augmentation. Applies random transformations (rotations, flips, scaling) to the training images, exposing the AI model to a wider range of variations.

- Early stopping. Halts training when the validation loss stops improving, before the AI model starts to overfit.
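Two of these techniques fit in a few lines of NumPy. The L2 penalty adds `strength × sum of squared weights` to the loss. L1 would use absolute values instead. Dropout here is the common "inverted" variant. It zeroes a fraction of activations during training and scales the survivors by 1 / (1 - rate), so the expected activation is unchanged and nothing special is needed at inference time. In Keras, the equivalents are `tf.keras.layers.Dropout` and a `kernel_regularizer` such as `tf.keras.regularizers.l2`. The rates, strengths, and the data-loss value below are illustrative assumptions.

```python
import numpy as np

def l2_penalty(weights: list, strength: float = 1e-4) -> float:
    """L2 term added to the loss: strength * sum of squared weights."""
    return strength * sum(float(np.sum(w ** 2)) for w in weights)

def dropout(activations: np.ndarray, rate: float, rng: np.random.Generator,
            training: bool = True) -> np.ndarray:
    """Inverted dropout: drop a fraction `rate` of units and rescale the rest."""
    if not training or rate == 0.0:
        return activations                      # inference: pass through unchanged
    keep = rng.random(activations.shape) >= rate
    return activations * keep / (1.0 - rate)

rng = np.random.default_rng(0)
hidden = np.ones((4, 1000))
dropped = dropout(hidden, rate=0.5, rng=rng)
print((dropped == 0).mean(), dropped.mean())    # roughly 0.5 and roughly 1.0

data_loss = 0.42                                # hypothetical cross-entropy value
total_loss = data_loss + l2_penalty([np.full((3, 3), 0.5)], strength=0.01)
```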

Evaluating Model Performance

Measuring accuracy on a test set

Accuracy is the ratio of correct predictions to the total number of predictions. To measure it, you apply a trained AI model to a test set and compare the predicted and actual labels.

Other metrics for a complete evaluation include:

- Precision. The proportion of true positive predictions among all positive predictions.

- Recall. The proportion of true positive predictions among all actual positives.

- F1-score. The harmonic mean of precision and recall, which gives a balanced measure of the AI model's performance.
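These definitions translate directly into code. The plain-Python sketch below computes accuracy over all classes, plus precision, recall, and F1 for one chosen class treated as "positive" (one-vs-rest). The labels are a small hypothetical example using Fashion MNIST class names. For a per-class summary across all classes, scikit-learn's `sklearn.metrics.classification_report` computes the same quantities.

```python
def classification_metrics(y_true, y_pred, positive_class):
    """Accuracy, plus precision, recall and F1 for one class (one-vs-rest)."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    tp = sum(t == positive_class and p == positive_class for t, p in pairs)
    fp = sum(t != positive_class and p == positive_class for t, p in pairs)
    fn = sum(t == positive_class and p != positive_class for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels and predictions.
y_true = ["Shirt", "Shirt", "Shirt", "Coat", "Coat", "Bag"]
y_pred = ["Shirt", "Coat", "Shirt", "Shirt", "Coat", "Bag"]
metrics = classification_metrics(y_true, y_pred, positive_class="Shirt")
print(metrics)
```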

A confusion matrix helps you better understand the performance of an AI model. For a task with N classes, it is an N-by-N table whose rows are typically the actual classes and whose columns are the predicted classes. From it, you can read off the true positives, true negatives, false positives, and false negatives for each class. This helps identify the classes the AI model is struggling with and highlights areas for improvement.
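Building a confusion matrix is a single counting loop. This NumPy sketch uses the rows-are-actual, columns-are-predicted convention, which `sklearn.metrics.confusion_matrix` also follows. It then derives the per-class true positives, false positives, false negatives, and true negatives. The integer labels are a hypothetical three-class example.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes: int) -> np.ndarray:
    """Rows are actual classes, columns are predicted classes."""
    matrix = np.zeros((num_classes, num_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        matrix[t, p] += 1
    return matrix

y_true = [0, 0, 0, 1, 1, 2, 2, 2]   # hypothetical actual labels
y_pred = [0, 1, 0, 1, 1, 2, 0, 2]   # hypothetical predictions
cm = confusion_matrix(y_true, y_pred, num_classes=3)

tp = np.diag(cm)                    # correct predictions per class
fp = cm.sum(axis=0) - tp            # predicted as the class, actually another class
fn = cm.sum(axis=1) - tp            # actually the class, predicted as another class
tn = cm.sum() - tp - fp - fn        # everything else
print(cm)
```

Large off-diagonal entries point to the class pairs the model confuses most often.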

Another useful metric is the area under the receiver operating characteristic (ROC) curve, or AUC. The ROC curve plots the true positive rate against the false positive rate at different classification thresholds. A higher AUC indicates that the AI model is better at distinguishing between classes. For multiclass problems, it is usually computed for each class against the rest.
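For one class treated as positive, the ROC curve comes from sweeping a threshold over the predicted scores. The area under it can be computed with the trapezoidal rule. This plain-Python sketch does both. The four labels and scores are a small hypothetical example. In practice, `sklearn.metrics.roc_curve` and `sklearn.metrics.roc_auc_score` do the same job.

```python
def roc_points(labels, scores):
    """(false positive rate, true positive rate) at every distinct threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))

labels = [0, 0, 1, 1]            # 1 = the class of interest (hypothetical)
scores = [0.1, 0.4, 0.35, 0.8]   # predicted probability of that class (hypothetical)
print(auc(roc_points(labels, scores)))  # 0.75
```

An AUC of 0.5 corresponds to random guessing, and 1.0 means every positive is scored above every negative.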

Visualizing predictions on sample images

Visualizing an AI model's predictions on sample test images provides insight into its strengths and weaknesses. To do this, select a few images from each class and run them through the trained AI model. Then display the images alongside their true labels and the AI model's predicted labels.

Analyzing correctly and incorrectly classified images reveals patterns and sources of confusion. Finding common features among misclassified images guides further improvement of the AI model or the training data.
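Before plotting, it helps to decide which images to look at. The plain-Python sketch below picks a few sample indices per actual class. It also ranks the most frequent (actual, predicted) pairs among the mistakes, which tells you where to look first. The labels are hypothetical. The selected indices could then be drawn with Matplotlib's `imshow`, titled with the true and predicted labels.

```python
import random
from collections import Counter, defaultdict

def most_confused_pairs(y_true, y_pred, top=3):
    """Most frequent (actual, predicted) pairs among misclassified samples."""
    mistakes = Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
    return mistakes.most_common(top)

def sample_per_class(y_true, k=2, seed=0):
    """Pick up to k sample indices from each actual class for visual inspection."""
    by_class = defaultdict(list)
    for index, label in enumerate(y_true):
        by_class[label].append(index)
    rng = random.Random(seed)
    return {label: rng.sample(idx, min(k, len(idx))) for label, idx in by_class.items()}

# Hypothetical labels and predictions.
y_true = ["Shirt", "Shirt", "T-shirt/top", "Shirt", "Coat", "Pullover", "Coat"]
y_pred = ["T-shirt/top", "Shirt", "Shirt", "T-shirt/top", "Pullover", "Pullover", "Coat"]
print(most_confused_pairs(y_true, y_pred))
print(sample_per_class(y_true))
```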

Visualizing predictions on sample images is an effective way to gain insight into an AI model's decision-making process and identify areas for improvement.

Using an image classification model's evaluations, you can determine its performance, strengths, and weaknesses and make informed decisions about further optimization.

Fine-tuning and optimization of the model

Fine-tuning and optimization help adapt a pre-trained AI model to a specific task and dataset. This reduces the time and resources required compared to training from scratch. It also allows the AI model to achieve good performance with limited annotated data, making it a practical solution for real-world scenarios.

Experimenting with different CNN architectures involves adjusting the number and size of layers to find the best configuration for the task. Transfer learning with pre-trained AI models improves performance and reduces training time. It reuses knowledge learned from large datasets, so the AI model can be fine-tuned with fewer resources. Freezing or unfreezing layers during fine-tuning controls how much of the pre-trained model adapts to the new data.

Hyperparameter tuning involves finding optimal settings such as learning rate, batch size, and regularization strength. Grid search tries every combination from a predefined set of values, while random search evaluates a fixed number of randomly sampled combinations. During fine-tuning, a lower learning rate is typically used so the AI model adapts to the new data while retaining previously learned features.
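The difference between the two search strategies is easiest to see side by side. In this plain-Python sketch, `validation_score` is a hypothetical stand-in for "train the model with these settings and return validation accuracy." It is a made-up function that peaks at a learning rate of 1e-3 and a batch size of 64. Grid search evaluates all nine combinations. Random search spends the same budget of nine trials on random samples, drawing the learning rate on a log scale. Libraries such as scikit-learn (`GridSearchCV`, `RandomizedSearchCV`) and KerasTuner automate this process.

```python
import itertools
import math
import random

def validation_score(learning_rate: float, batch_size: int) -> float:
    """Hypothetical stand-in for training + validation; peaks at lr=1e-3, batch=64."""
    return 1.0 - 0.1 * abs(math.log10(learning_rate) + 3) - 0.001 * abs(batch_size - 64)

# Grid search: every combination of the listed values.
grid = {"learning_rate": [1e-4, 1e-3, 1e-2], "batch_size": [32, 64, 128]}
grid_results = [
    (validation_score(lr, bs), lr, bs)
    for lr, bs in itertools.product(grid["learning_rate"], grid["batch_size"])
]
best_grid = max(grid_results)

# Random search: the same budget (9 trials) spent on random combinations.
rng = random.Random(0)
random_results = []
for _ in range(9):
    lr = 10 ** rng.uniform(-5, -1)          # sample the learning rate on a log scale
    bs = rng.choice([16, 32, 64, 128, 256])
    random_results.append((validation_score(lr, bs), lr, bs))
best_random = max(random_results)

print("grid best:", best_grid[1:], "random best:", best_random[1:])
```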

FAQ

What is image classification, and how does it work?

Image classification determines which class an object in an image belongs to. It uses neural networks to analyze pixels and image features and predict a label.

What are some real-world applications of image classification?

It helps detect and respond to road objects such as pedestrians, signs, and other vehicles in self-driving cars. In e-commerce, it sorts and suggests products based on their visual similarity.

What are the prerequisites for building an image classification model?

To build an image classification model, you need annotated image data, split into training and test sets. The training set trains the AI model, and the test set evaluates its performance.

How do you obtain and prepare a dataset for image classification?

You can collect and annotate your own images or use public datasets such as Fashion MNIST or ImageNet. Then you resize the images, normalize the pixel values, and apply data augmentation to increase the size and diversity of the training set.

What is a convolutional neural network (CNN), and how is it used for image classification?

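A convolutional neural network (CNN) is a deep learning model for image processing. It consists of convolutional, pooling, and fully connected layers. Convolutional layers extract visual features, pooling layers reduce their spatial size, and fully connected layers map the features to class probabilities.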

What methods are used to improve the performance of an image classification model?

Common methods include data augmentation, transfer learning, and hyperparameter tuning with grid or random search. Regularization methods such as dropout or L2 regularization prevent overfitting and improve generalization.

How do you evaluate the performance of an image classification model?

You can evaluate the performance of an image classification model using accuracy, precision, recall, and F1-score metrics.