Moderating Viral Content With Deep Learning Methods and Labeling Tools

Viral content can be a great way to increase brand awareness and reach new audiences. However, managing it and ensuring that it is appropriate for your brand can be challenging. This is where moderation comes in: moderating viral content helps you control its tone and keep it appropriate for your target audience.

Facebook has famously lost millions of users, and Mark Zuckerberg lost millions of investor dollars in 2022. Meta points to other social media platforms such as TikTok, looking everywhere but at itself to explain its stalled growth. Why does Facebook fail to appeal to users under 25, who call it “Boomerbook”?

Any regular Facebook user can tell you why it is losing users: its poorly implemented automatic content moderation system. None of Meta's products and services are actually private, and it is much harder to maintain the comforting illusion of privacy when you know "they" are reading and censoring your messages in real time.

Their automated content moderation system also frequently makes mistakes, and those mistakes cause innocent users to be penalized with restrictions. The main restrictions available are temporarily suspending a user from Facebook or banning them outright. Meanwhile, far more offensive users go unrestricted, even when other users report them.

Facebook also has a very poor appeals process, when users are allowed to appeal a decision at all. There is not enough human involvement in its automated content moderation system to make up for the algorithm's shortcomings.

Because Facebook's community standards change all the time, the rules are hard to follow. In addition, because the automated content moderation system cannot explain its decisions, users don't trust it.

Without any real explanations, users form misconceptions and, lacking better information, invent conspiracy theories. They believe that the system is biased against them and favors people they don't like. All these problems make users more paranoid, which drives them away.

Elon Musk's Twitter also suffers from poor automatic content moderation, along with arbitrary and uneven human moderation. Because so many of Twitter's staff were publicly laid off, fired, or quit, the company will now have a hard time coming up with solutions.

Social media's current automated content moderation relies on methods that are easily thwarted. For example, to stop users from posting bad words, a platform builds a database of those words. It then checks each post against the database and either censors the words or blocks the entire post, and it may also penalize the user.

Frustrated, the clever user will find ways around the system before being driven off entirely. They come up with alternate spellings or simple codes that are not in the database. Everyone still knows what they said, so the censor is thwarted, and the experience is frustrating for everyone involved.
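To make the keyword approach concrete, here is a minimal Python sketch of such a filter. It assumes a hypothetical two-word `BLOCKLIST` and a hypothetical `moderate` function that masks listed words and returns a flag a platform could use to block the post or penalize the user. It also shows how easily a respelling slips past.

```python
import re

# Hypothetical blocklist; a real platform's list would be far larger.
BLOCKLIST = {"darn", "heck"}


def moderate(post: str) -> tuple[str, bool]:
    """Mask blocklisted words; the flag lets a caller block the post or penalize the user."""
    found = False

    def mask(match: re.Match) -> str:
        nonlocal found
        word = match.group(0)
        # Exact, case-insensitive lookup: only spellings on the list are caught.
        if word.lower() in BLOCKLIST:
            found = True
            return "*" * len(word)
        return word

    # Pass each run of word characters through the mask callback.
    censored = re.sub(r"\w+", mask, post)
    return censored, found


print(moderate("Well, darn it!"))     # ('Well, **** it!', True)
print(moderate("Well, d4rn it!"))     # ('Well, d4rn it!', False)
print(moderate("Well, d-a-r-n it!"))  # ('Well, d-a-r-n it!', False)
```

Because the lookup is exact, every new respelling has to be added to the list by hand, which is the cat-and-mouse game described above.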

Copyright filters are beaten in a similar but more technical way. Current systems store hashes: unique numbers computed from copyrighted content such as music, videos, and pictures. Every time a user uploads a sound file, video, or picture, it is checked against the stored hashes. This has the advantage of not needing to store large amounts of copyrighted, illegal, or offensive content.

The problem with that system is that if the user speeds up the sound, lowers the resolution or quality, or uses a different file type, the hash changes. Without a hash match, the offending content passes right through the copyright filter. That is one reason why major websites like YouTube and Facebook still host content that violates someone else's copyright. Storing a list of bad words and a database of hashes is no replacement for data labeling tools.
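The following Python sketch shows both halves of that story. It assumes the filter uses an exact cryptographic hash (SHA-256 from the standard `hashlib` module); the byte strings stand in for real media files, and `KNOWN_HASHES` and `is_known_copy` are hypothetical names. Only digests are stored, and changing a single byte of an upload makes the lookup miss.

```python
import hashlib


def digest(data: bytes) -> str:
    """Exact fingerprint: practically any change to the bytes yields a different value."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical stand-ins for protected files; only their digests are kept.
protected_files = [b"original song audio data", b"original video frame data"]
KNOWN_HASHES = {digest(f) for f in protected_files}
del protected_files  # the filter no longer needs the content itself


def is_known_copy(upload: bytes) -> bool:
    """True only if the upload is byte-for-byte identical to a protected file."""
    return digest(upload) in KNOWN_HASHES


print(is_known_copy(b"original song audio data"))  # True: exact re-upload is caught
print(is_known_copy(b"original song audio datA"))  # False: one changed byte slips through
```

Speeding up audio or re-encoding a video changes far more than one byte, so an exact-hash filter has no chance of matching it.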

Challenges of Automated Content Moderation

● Content Recognition - Content is sometimes difficult to recognize accurately, which leads to mistakes.

● Context Recognition - Content is sometimes flagged by mistake because of a keyword or phrase, even when it does not break the rules, because the system fails to recognize the context.

● Even Rules Enforcement - Rules and content moderation are often enforced unfairly and unevenly.

● Distrust - Users whose content is judged by an unexplained automated system do not trust its decisions.
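The Context Recognition problem is easy to reproduce. This sketch uses a hypothetical `flagged_words` check with a made-up two-word blocklist and plain word matching; it flags a harmless question about stopping a frozen computer process because it sees the keyword but not the meaning.

```python
import re

# Hypothetical keyword list aimed at violent threats.
BLOCKLIST = {"kill", "shoot"}


def flagged_words(post: str) -> list[str]:
    """Return blocklisted words in the post; surrounding context is ignored."""
    words = re.findall(r"[a-z']+", post.lower())
    return [w for w in words if w in BLOCKLIST]


print(flagged_words("How do I kill a frozen process?"))       # ['kill']
print(flagged_words("Let's shoot some photos at the park."))  # ['shoot']
```

Both posts break no rule, yet a keyword system would treat them exactly like real threats.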

Deep Learning and Labeling Tools Hold the Answers

Taken as a whole, the internet is a self-correcting system that treats censorship as a fault to be worked around. As a result, users who want to break the rules, work around the system, or manipulate it will keep doing so. This can be viewed as a constant stream of attacks, and cybersecurity teaches that a system under constant attack can actually grow more secure, because each attack exposes a weakness that can then be fixed.

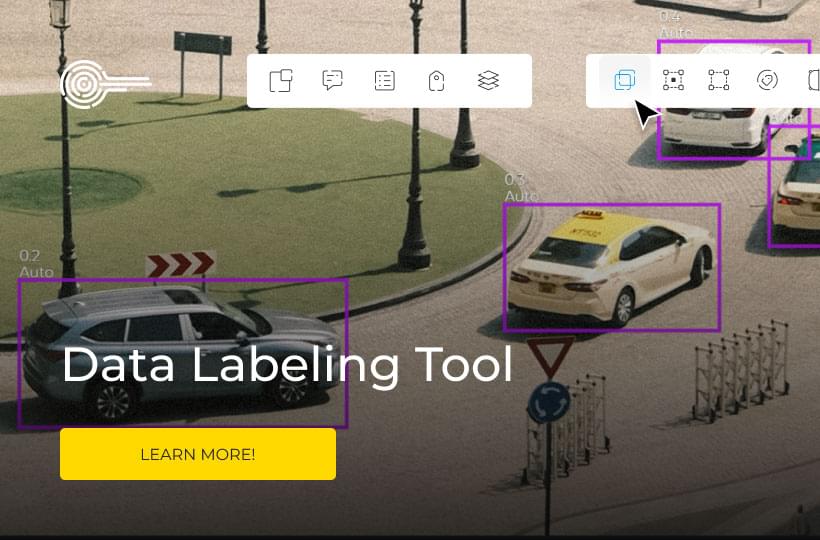

When users can't do or say whatever they want, they will go someplace else, and then they are not your problem. Rather than relying on the old, vulnerable methods, platforms can use AI to do a much better job of automating content moderation. For example, labeling a very large, scalable dataset with an image labeling tool and training an AI classifier on it works better than comparing uploads against a database of hashes.
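As a rough illustration of training on labeled data instead of matching hashes, here is a minimal Python sketch. It assumes an image labeling tool has already reduced each image to a small feature vector and attached a human-chosen label; the vectors and labels are invented. A nearest-centroid classifier stands in for the deep neural network a real system would train, but the basic workflow is similar: labeled examples go in, and a model that can judge new, unseen inputs comes out.

```python
from math import dist
from statistics import fmean

# Hypothetical labeling-tool output: (feature vector, label) pairs with invented numbers.
labeled = [
    ([0.9, 0.1, 0.8], "violent"),
    ([0.8, 0.2, 0.9], "violent"),
    ([0.1, 0.9, 0.2], "safe"),
    ([0.2, 0.8, 0.1], "safe"),
]


def train(examples):
    """Nearest-centroid training: average the feature vectors for each label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: [fmean(column) for column in zip(*vectors)]
        for label, vectors in by_label.items()
    }


def predict(model, features):
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(model, key=lambda label: dist(model[label], features))


model = train(labeled)
print(predict(model, [0.7, 0.3, 0.7]))  # violent
print(predict(model, [0.3, 0.6, 0.2]))  # safe
```

Neither test vector appears in the labeled data, which is the key difference from a hash lookup: the model judges new inputs by resemblance rather than by exact identity.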

An AI can be trained to recognize all manner of content, and it cannot be offended. It can also learn to tell the difference between photographs of real life and computer graphics, so violent video games are not mistaken for graphic images of real violence. AI is also good at finding similarities: unlike a hash comparison, an AI can recognize that it is looking at an altered copy of copyrighted content.
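One common way to detect altered copies is to compare embeddings, the numeric vectors a trained model produces for each file. The sketch below assumes such a model exists; the three-number vectors are made up, and `SIMILARITY_THRESHOLD` is a hypothetical value. A sped-up or lower-quality copy is assumed to land close to the original, so cosine similarity stays high even though an exact hash would differ.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


SIMILARITY_THRESHOLD = 0.95  # hypothetical cutoff; would need tuning on labeled data


def looks_like_copy(upload_vec, protected_vec):
    """Treat uploads whose embedding points almost the same way as a likely copy."""
    return cosine_similarity(upload_vec, protected_vec) >= SIMILARITY_THRESHOLD


# Invented embeddings standing in for a trained model's output.
original = [0.8, 0.1, 0.6]
sped_up_copy = [0.78, 0.12, 0.61]
unrelated_clip = [0.1, 0.9, 0.2]

print(looks_like_copy(sped_up_copy, original))    # True
print(looks_like_copy(unrelated_clip, original))  # False
```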

Once an AI has been trained, the companies that use it no longer need to store a large database of content to check against. That database is needed only during data labeling and the deep learning used to create the final model.
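The following sketch illustrates that separation, assuming a toy nearest-centroid model rather than a deep network. The labeled examples (invented two-number feature vectors) are used once to compute the parameters, only those parameters are exported as JSON, and a hypothetical `classify` function judges new content from the exported parameters alone.

```python
import json
from statistics import fmean

# Hypothetical labeled examples (invented vectors); needed only during training.
training_set = [
    ([0.9, 0.1], "violent"),
    ([0.7, 0.3], "violent"),
    ([0.1, 0.9], "safe"),
    ([0.3, 0.7], "safe"),
]

# Training: each label's mean vector (centroid) is the entire learned model.
grouped = {}
for features, label in training_set:
    grouped.setdefault(label, []).append(features)
centroids = {
    label: [fmean(column) for column in zip(*vectors)]
    for label, vectors in grouped.items()
}

# Export only the parameters, then discard the labeled data.
model_json = json.dumps(centroids)
del training_set, grouped


def classify(model_json: str, features: list[float]) -> str:
    """Nearest-centroid prediction using only the exported parameters."""
    model = json.loads(model_json)
    return min(
        model,
        key=lambda label: sum((c - f) ** 2 for c, f in zip(model[label], features)),
    )


print(classify(model_json, [0.85, 0.15]))  # violent
print(classify(model_json, [0.2, 0.9]))    # safe
```

A real deep learning model has millions of parameters instead of four numbers, but the deployment idea is the same: ship the weights, not the training data.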

Unlike the old methods, this approach does not need examples of every possible piece of undesirable content, because the AI can look for more abstract similarities. For example, if a post is a repost of copyrighted content, the AI can determine that the underlying video or sound comes from the same source.

AI can use deep learning methods to generate predictions that inform automatic moderation decisions, and it can also flag content for a human to review.
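A simple way to combine automatic decisions with human review is to act on the model's confidence. This sketch assumes the model outputs a probability that a post breaks a rule; the thresholds `REMOVE_AT` and `REVIEW_AT` and the action names are hypothetical. Confident predictions are handled automatically, and the uncertain middle band goes to a person.

```python
from dataclasses import dataclass

# Hypothetical thresholds; a real platform would tune these on labeled data.
REMOVE_AT = 0.95
REVIEW_AT = 0.60


@dataclass
class Decision:
    action: str   # "remove", "human_review", or "allow"
    score: float  # model's estimated probability that the post breaks a rule


def route(score: float) -> Decision:
    """Turn a model's violation probability into a moderation action."""
    if score >= REMOVE_AT:
        return Decision("remove", score)
    if score >= REVIEW_AT:
        # Uncertain cases go to a person instead of being auto-penalized.
        return Decision("human_review", score)
    return Decision("allow", score)


for s in (0.99, 0.75, 0.10):
    print(route(s).action)  # remove, then human_review, then allow
```

Widening the review band sends more posts to human moderators, trading cost for fewer wrongful penalties, which speaks to the appeals and trust problems raised earlier.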