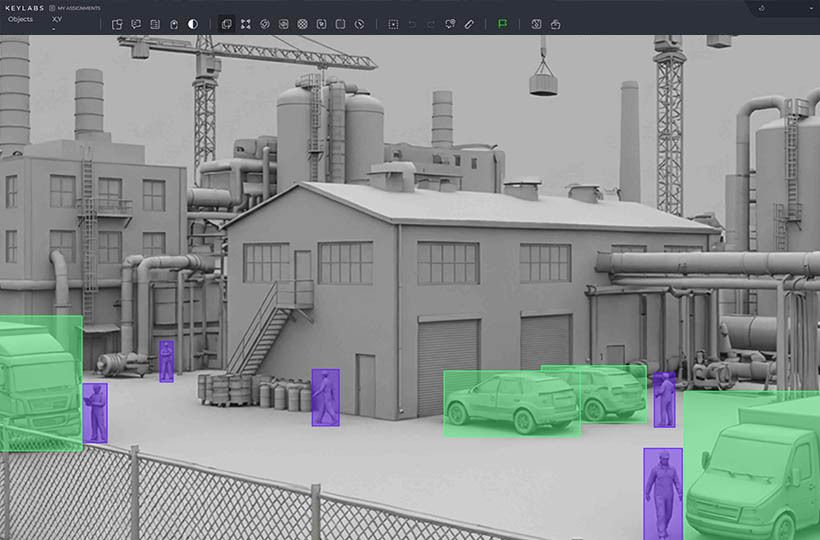

Synthetic data generation pipeline

The demand for large, high-quality labeled datasets in machine learning and artificial intelligence has exposed the limitations of real-world data. Collecting, annotating, and maintaining such datasets can be slow and expensive, and the work is often constrained by privacy regulations or by limited data availability.

Synthetic data offers a scalable way to produce labeled datasets, enabling researchers and developers to create diverse data with complete and accurate annotations. By automating generation and applying data augmentation, synthetic data pipelines accelerate model training, improve generalization, and make it possible to test rare or extreme scenarios that are difficult to reproduce in real-world settings.

Quick Take

- Programmatically generated datasets address key challenges in AI development.

- Synthetic data reduces the cost, time, and privacy risk of collecting real data.

- Automated pipelines make labeling faster and easier to scale.

- Hybrid approaches that combine synthetic and real-world examples often yield better results than either source alone.

Synthetic data and its importance

Synthetic data is artificially generated data that mimics the characteristics of real data but does not contain records of specific real people or events. It has become important for modern technology and scientific research because it helps solve problems associated with collecting, storing, and processing information.

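Because it does not need to contain real records, synthetic data can serve as a privacy-preserving substitute, allowing models to be trained with a much lower risk of exposing sensitive information, provided the generator does not reproduce its source data too closely.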

Synthetic data also makes it possible to create large, balanced datasets in domains where real data is scarce or incomplete, such as medicine, autonomous transportation, and financial services.

Synthetic data is also widely used to train and test artificial intelligence and machine learning models, because it exposes them to a broader range of scenarios than real data alone usually covers. It also speeds up product and algorithm development, reduces the time spent on data collection, and lowers annotation costs.

As a result, synthetic data has become a strategic tool for innovation across industries, helping companies and researchers build more accurate and secure solutions that meet today's data and analytics demands.

Traditional and advanced methods for generating synthetic data

Synthetic data can be generated in many ways, from traditional methods based on statistical models to advanced techniques such as neural networks and other generative models. Each method has its own advantages and limitations, and the right choice depends on the intended use and the type of data.
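
As a minimal sketch of the traditional, statistical end of this spectrum, the Python example below fits a normal distribution to each numeric column of a small real dataset and samples new rows from those fitted distributions. The function names (`fit_gaussian`, `sample_synthetic`) and the height and weight values are hypothetical, chosen only for illustration.

```python
import random
import statistics

def fit_gaussian(rows):
    """Estimate (mean, standard deviation) for each numeric column of the real rows."""
    columns = list(zip(*rows))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]

def sample_synthetic(params, n, seed=0):
    """Draw n synthetic rows, sampling each column independently from its fitted normal."""
    rng = random.Random(seed)
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in params) for _ in range(n)]

# Hypothetical "real" data: (height in cm, weight in kg)
real_rows = [(170.0, 65.0), (182.0, 80.0), (165.0, 58.0), (175.0, 72.0), (160.0, 55.0)]
params = fit_gaussian(real_rows)
synthetic_rows = sample_synthetic(params, n=1000)
```

Sampling each column independently is a deliberate simplification: it discards the correlation between height and weight, which a multivariate or copula-based model would preserve.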

Data quality, control, and annotation strategies

Quality assurance starts at the design stage. Teams should deliberately add realistic variation to generated samples so that training sets are not unrealistically clean or uniform.
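
One simple way to add such variation to numeric samples is sketched below, assuming each sample is a list of sensor-style readings. The function `add_realistic_variation` and its default noise level and dropout probability are hypothetical; real pipelines would tune these perturbations to match the noise actually observed in the target domain.

```python
import random

def add_realistic_variation(sample, noise_std=0.05, dropout_prob=0.02, rng=None):
    """Return a perturbed copy of a clean numeric sample (hypothetical helper).

    - Multiplicative Gaussian noise imitates sensor imprecision.
    - Occasional dropped readings (None) imitate missing values.
    """
    if rng is None:
        rng = random.Random()
    varied = []
    for value in sample:
        if rng.random() < dropout_prob:
            varied.append(None)  # simulate a missing reading
        else:
            varied.append(value * (1.0 + rng.gauss(0.0, noise_std)))
    return varied

rng = random.Random(42)
clean_signal = [1.0] * 20  # an unrealistically perfect synthetic signal
noisy_signal = add_realistic_variation(clean_signal, rng=rng)
```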

Domain experts validate the source samples to ensure that the generated scenes accurately reflect real-world conditions.

Final testing evaluates the model's performance on real-world data. This validation shows whether what the model learned from the synthetic training data actually carries over to practical use.
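
A common form of this check is often called "train on synthetic, test on real": the model is fitted only on synthetic samples and scored on held-out real samples. The sketch below uses a nearest-centroid classifier as a stand-in for an actual model; the classifier choice, the function names, and the tiny two-class datasets are all hypothetical.

```python
import math

def train_nearest_centroid(samples):
    """Fit a nearest-centroid classifier: one mean feature vector per label."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

def accuracy(centroids, samples):
    correct = sum(predict(centroids, f) == y for f, y in samples)
    return correct / len(samples)

# Hypothetical data: the model sees only synthetic samples during training
synthetic_train = [((0.1, 0.2), "a"), ((0.0, 0.1), "a"), ((1.0, 0.9), "b"), ((0.9, 1.1), "b")]
real_test = [((0.2, 0.0), "a"), ((1.1, 1.0), "b"), ((0.6, 0.7), "b")]
model = train_nearest_centroid(synthetic_train)
print(f"Train-on-synthetic, test-on-real accuracy: {accuracy(model, real_test):.2f}")
```

In practice, the same score computed for a model trained on real data gives a useful baseline: a large gap suggests the synthetic data is missing something the real data contains.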

Eliminating bias and ensuring label accuracy

Synthetic data can inherit bias from the source data used to build or configure the generator. Careful design of the generation parameters helps reduce this problem.

Regular reviews measure how well each scenario and condition is represented in the generated data, so that underrepresented cases can be found and corrected.
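
A representation review can start as a simple count. The sketch below assumes each generated sample is tagged with a scenario name and that the team has planned a target share for each scenario; the function `representation_report`, the scenario names, the target shares, and the "less than half of target" threshold are all hypothetical.

```python
from collections import Counter

def representation_report(scenarios, targets, tolerance=0.5):
    """Compare each scenario's share of the dataset with its planned target share.

    A scenario is flagged when its observed share falls below
    tolerance * target (with the default, under half of what was planned).
    """
    counts = Counter(scenarios)
    total = len(scenarios)
    report = {}
    for name, target in targets.items():
        share = counts[name] / total if total else 0.0
        report[name] = {
            "share": share,
            "target": target,
            "underrepresented": share < tolerance * target,
        }
    return report

# Hypothetical scenario tags from one generation run
scenarios = ["day"] * 70 + ["night"] * 25 + ["fog"] * 5
targets = {"day": 0.5, "night": 0.3, "fog": 0.2}
report = representation_report(scenarios, targets)
flagged = [name for name, row in report.items() if row["underrepresented"]]
print(flagged)  # ['fog']
```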

Label accuracy checks verify that each generated element receives the correct annotation. Because labels are produced automatically, the pipeline must apply them consistently at every stage.
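
For image data, a few automated checks catch many labeling errors. The sketch below assumes bounding-box annotations in `(x_min, y_min, x_max, y_max)` pixel format; the `Annotation` class, the `check_annotation` function, and the allowed label set are hypothetical and would follow whatever annotation schema a real pipeline uses.

```python
from dataclasses import dataclass

ALLOWED_LABELS = {"car", "pedestrian", "cyclist"}  # hypothetical label set

@dataclass
class Annotation:
    label: str
    box: tuple  # hypothetical format: (x_min, y_min, x_max, y_max) in pixels

def check_annotation(ann, width, height):
    """Return a list of problems found in one annotation (an empty list means valid)."""
    problems = []
    if ann.label not in ALLOWED_LABELS:
        problems.append(f"unknown label {ann.label!r}")
    x_min, y_min, x_max, y_max = ann.box
    if not (x_min < x_max and y_min < y_max):
        problems.append("box has zero or negative size")
    if x_min < 0 or y_min < 0 or x_max > width or y_max > height:
        problems.append("box extends outside the image")
    return problems

annotations = [
    Annotation("car", (10, 20, 110, 80)),
    Annotation("truck", (0, 0, 50, 50)),
    Annotation("pedestrian", (600, 400, 700, 500)),
]
for ann in annotations:
    issues = check_annotation(ann, width=640, height=480)
    if issues:
        print(ann.label, issues)
```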

Hybrid approaches

Hybrid approaches combine real and synthetic data to create more complete, diverse, and high-quality datasets. Such integration enables you to retain the benefits of both sources. Real data provides reliability and accuracy, while synthetic data adds scale, fills gaps, and allows modeling of rare cases.

Hybrid methods can make training more efficient, because synthetic data helps balance classes, adds new scenarios, and can improve the model's ability to generalize.
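
Class balancing is one concrete form of this mixing. The sketch below keeps every real example and tops up each minority class with synthetic examples of the same class until it matches the largest real class. The function `balance_with_synthetic` and the "normal"/"fault" data are hypothetical; other mixing strategies, such as a fixed real-to-synthetic ratio, are equally valid.

```python
import random
from collections import Counter

def balance_with_synthetic(real, synthetic_pool, seed=0):
    """Top up minority classes in `real` with synthetic examples (hypothetical helper).

    real, synthetic_pool: lists of (features, label) pairs.
    Every real example is kept. Each class is filled up to the size of the
    largest real class by sampling synthetic examples of that class without
    replacement; if the pool runs short, the class stays partly unbalanced.
    """
    rng = random.Random(seed)
    counts = Counter(label for _, label in real)
    target = max(counts.values())
    mixed = list(real)
    for label, count in counts.items():
        candidates = [s for s in synthetic_pool if s[1] == label]
        needed = min(target - count, len(candidates))
        mixed.extend(rng.sample(candidates, needed))
    rng.shuffle(mixed)
    return mixed

# Hypothetical data: faults are rare in the real set
real = [((i,), "normal") for i in range(8)] + [((100 + i,), "fault") for i in range(2)]
synthetic_pool = [((200 + i,), "fault") for i in range(10)]
mixed = balance_with_synthetic(real, synthetic_pool)
```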

Integrating real and synthetic data can also improve security and help meet privacy requirements, because it reduces the amount of sensitive data in training sets by replacing some of it with synthetic counterparts.
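
At the field level, replacing sensitive values can look like the sketch below, which substitutes synthetic names and emails while leaving other fields untouched. The schema (`name`, `email`, `amount`), the name lists, and the function `replace_sensitive` are hypothetical. The same real identity always maps to the same synthetic one, so per-person aggregates still work. Note that this only removes direct identifiers; the remaining fields may still allow re-identification and need their own review.

```python
import random

# Hypothetical pools of synthetic name parts
FAKE_FIRST = ["Alex", "Sam", "Jordan", "Taylor", "Morgan", "Casey"]
FAKE_LAST = ["Lee", "Garcia", "Smith", "Nguyen", "Khan", "Novak"]

def replace_sensitive(records, seed=0):
    """Swap the hypothetical 'name' and 'email' fields for synthetic values."""
    rng = random.Random(seed)
    identities = {}  # real (name, email) -> synthetic (name, email)
    result = []
    for rec in records:
        key = (rec["name"], rec["email"])
        if key not in identities:
            first, last = rng.choice(FAKE_FIRST), rng.choice(FAKE_LAST)
            n = len(identities) + 1  # the counter keeps synthetic emails unique
            identities[key] = (f"{first} {last}", f"{first}.{last}.{n}@example.com".lower())
        fake_name, fake_email = identities[key]
        result.append({**rec, "name": fake_name, "email": fake_email})
    return result

records = [
    {"name": "Jane Doe", "email": "jane@corp.test", "amount": 120.0},
    {"name": "John Roe", "email": "john@corp.test", "amount": 75.5},
    {"name": "Jane Doe", "email": "jane@corp.test", "amount": 30.0},
]
anonymized = replace_sensitive(records)
```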

Scaling synthetic data generation

Scaling synthetic data generation makes it possible to build large, diverse, high-quality datasets for training models and testing systems. At that scale, appropriate tools and practices help keep generation reliable and efficient while preserving the diversity of the output.
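
A common pattern for scaling is to split generation into shards, each with its own random generator seeded from a global seed and the shard index, so the dataset is identical no matter how many workers produce it. The sketch below is hypothetical throughout (`BASE_SEED`, `generate_shard`, `generate_dataset`, and the record fields). It uses a thread pool only to stay self-contained; CPU-heavy generators would more typically run on a process pool or a cluster scheduler.

```python
import random
from concurrent.futures import ThreadPoolExecutor

BASE_SEED = 1234  # hypothetical global seed for the whole dataset

def generate_shard(shard_id, shard_size):
    """Generate one shard with its own RNG, seeded from the base seed and shard id."""
    rng = random.Random(f"{BASE_SEED}:{shard_id}")  # string seeds are deterministic
    return [
        {"shard": shard_id, "value": rng.gauss(0.0, 1.0), "label": rng.choice(["a", "b"])}
        for _ in range(shard_size)
    ]

def generate_dataset(num_shards, shard_size, workers=4):
    """Generate all shards in parallel; pool.map returns results in shard order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        shards = pool.map(generate_shard, range(num_shards), [shard_size] * num_shards)
        return [row for shard in shards for row in shard]

dataset = generate_dataset(num_shards=8, shard_size=100)
```

Because each shard's output depends only on its own seed, a failed shard can be regenerated on its own, and the worker count can change without altering the data.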

FAQ

What is synthetic data and how is it used?

Synthetic data is artificially generated data that mimics real data. It is used to train models, test systems, and run analyses with a lower risk of leaking sensitive information.

Why is this type of data so important for training AI models?

It can supply the large, diverse, and reliable datasets that models need to make accurate predictions, including in areas where real data is scarce.

What are the standard methods for generating this data?

Synthetic data generation methods include statistical models, rule-based approaches, machine learning, and generative neural networks.

Is it better to use synthetic data alone or mix it with real data?

Combining the two usually works best. Synthetic data provides scale and coverage of rare cases, while real data provides reliability, so using them together balances scalability with accuracy.